Using electrodes implanted in the temporal lobes of seven awake epilepsy patients, University of Washington scientists have decoded brain signals (representing images) at nearly the speed of perception for the first time* — enabling the scientists to predict in real time which images of faces and houses the patients were viewing and when, and with better than 95 percent accuracy.

Multi-electrode placements on temporal-lobe surface (credit: K.J. Miller et al./PLoS Comput Biol)

The research, published Jan. 28 in the open-access journal PLOS Computational Biology, may lead to an effective way to help locked-in patients (who are paralyzed or have had a stroke) communicate, the scientists suggest.

Predicting what someone is seeing in real time

“We were trying to understand, first, how the human brain perceives objects in the temporal lobe, and second, how one could use a computer to extract and predict what someone is seeing in real time,” explained University of Washington computational neuroscientist Rajesh Rao. He is a UW professor of computer science and engineering and directs the National Science Foundation’s Center for Sensorimotor Neural Engineering, headquartered at UW.

The study involved patients receiving care at Harborview Medical Center in Seattle. Each had been experiencing epileptic seizures not relieved by medication, so each had undergone surgery in which their brains’ temporal lobes were implanted (temporarily, for about a week) with electrodes to try to locate the seizures’ focal points.

Temporal lobes process sensory input and are a common site of epileptic seizures. Situated behind mammals’ eyes and ears, the lobes are also involved in Alzheimer’s and dementias and appear somewhat more vulnerable than other brain structures to head traumas, said UW Medicine neurosurgeon Jeff Ojemann.

Recording digital signatures of images in real time

In the experiment, signals from electrocorticographic (ECoG) electrodes at multiple temporal-lobe locations were processed by powerful computational software that extracted two characteristic properties of the brain signals: “event-related potentials” (voltages from hundreds of thousands of neurons activated by an image) and “broadband spectral changes” (changes in signal power measured across a wide range of frequencies).
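
As a rough illustration of the two signal properties described above, the sketch below averages stimulus-locked segments to obtain an event-related potential and estimates broadband power with a plain discrete Fourier transform. It is a minimal sketch and not the researchers' software: the function names, the 50 to 300 Hz band limits, and the synthetic test tones are assumptions chosen for the example; only the 1,000-samples-per-second rate comes from the study.

```python
import cmath
import math

FS = 1000  # samples per second, the rate reported for the study


def erp(epochs):
    """Average stimulus-locked epochs sample by sample (event-related potential)."""
    n = len(epochs)
    return [sum(ep[t] for ep in epochs) / n for t in range(len(epochs[0]))]


def broadband_power(segment, fs=FS, lo_hz=50.0, hi_hz=300.0):
    """Mean spectral power of one segment over a wide band (band limits are assumed)."""
    n = len(segment)
    mean = sum(segment) / n
    x = [v - mean for v in segment]  # remove the DC offset first
    powers = []
    for k in range(1, n // 2):
        freq = k * fs / n
        if lo_hz <= freq <= hi_hz:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers)


# Two toy epochs averaged into one evoked response.
evoked = erp([[0.0, 1.0, 2.0], [2.0, 3.0, 4.0]])

# Synthetic 100 ms segments: one tone inside the assumed band, one below it.
n = 100
tone_100hz = [math.sin(2 * math.pi * 100 * t / FS) for t in range(n)]
tone_10hz = [math.sin(2 * math.pi * 10 * t / FS) for t in range(n)]

print(evoked)
print(broadband_power(tone_100hz) > broadband_power(tone_10hz))
```

The averaging step suppresses activity that is not time-locked to the image, while the band-averaged power ignores the waveform's shape and tracks overall signal energy. That difference is one way to see why the two measures might carry different information.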

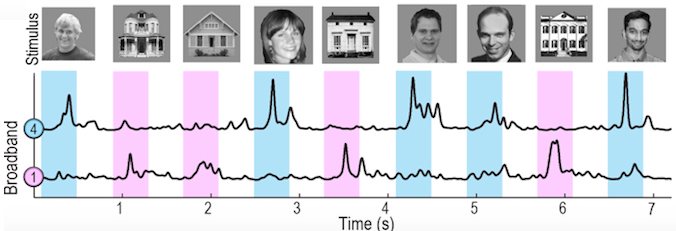

Averaged broadband power at two multi-electrode locations (1 and 4) following presentation of different images; note that responses to faces are stronger than to houses. (credit: K.J. Miller et al./PLoS Comput Biol)

Target image (credit: K.J. Miller et al./PLoS Comput Biol)

The subjects, watching a computer monitor, were shown a random sequence of pictures: brief (400-millisecond) flashes of images of human faces and houses, interspersed with blank gray screens. Their task was to watch for an image of an upside-down house and verbally report this target, which appeared once during each of 3 runs (3 of 300 stimuli). Patients identified the target with an error rate below 3 percent across all 21 experimental runs.

The computational software sampled and digitized the brain signals 1,000 times per second to extract their characteristics. The software also analyzed the data to determine which combination of electrode locations and signal types correlated best with what each subject actually saw.

By training an algorithm on the subjects’ responses to the (known) first two-thirds of the images, the researchers could examine the brain signals representing the final third of the images, whose labels were unknown to them, and predict with 96 percent accuracy whether and when the subjects were seeing a house, a face or a gray screen, with a timing error of only about 20 milliseconds.

This accuracy was attained only when event-related potentials and broadband changes were combined for prediction, which suggests they carry complementary information.
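
The train-then-predict procedure described above can be sketched as follows. This is a simplified stand-in, not the study's algorithm: it splits the data chronologically (first two-thirds for training, final third for testing), builds one average template per image type, and labels each held-out segment by the template it projects onto most strongly. The waveform shapes, the deterministic "noise," and all function names are hypothetical, and the gray-screen class is left out to keep the example short.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mean_template(epochs):
    n = len(epochs)
    return [sum(ep[t] for ep in epochs) / n for t in range(len(epochs[0]))]


def fit_templates(epochs, labels):
    """Build one unit-norm mean template per class from the training epochs."""
    templates = {}
    for label in sorted(set(labels)):
        tmpl = mean_template([ep for ep, lab in zip(epochs, labels) if lab == label])
        norm = dot(tmpl, tmpl) ** 0.5 or 1.0
        templates[label] = [v / norm for v in tmpl]
    return templates


def predict(templates, epoch):
    """Return the label whose template gives the largest projection."""
    return max(templates, key=lambda label: dot(templates[label], epoch))


def chronological_split(epochs, labels):
    """First two-thirds for training, final third held out."""
    cut = (2 * len(epochs)) // 3
    return (epochs[:cut], labels[:cut]), (epochs[cut:], labels[cut:])


# Hypothetical response shapes for the two image types.
FACE = [0.0, 1.0, 3.0, 1.0, 0.0]
HOUSE = [0.0, 2.0, -1.0, -2.0, 0.0]


def make_epoch(shape, i):
    # Small deterministic perturbation standing in for recording noise.
    return [v + 0.1 * ((i * 7 + t * 3) % 5 - 2) for t, v in enumerate(shape)]


labels = ["face" if i % 2 == 0 else "house" for i in range(30)]
epochs = [make_epoch(FACE if lab == "face" else HOUSE, i) for i, lab in enumerate(labels)]

(train_x, train_y), (test_x, test_y) = chronological_split(epochs, labels)
templates = fit_templates(train_x, train_y)
predicted = [predict(templates, ep) for ep in test_x]
accuracy = sum(p == t for p, t in zip(predicted, test_y)) / len(test_y)
print(len(train_x), len(test_x), accuracy)
```

Splitting by time rather than at random keeps the test honest: the classifier never sees any part of the recording it is later asked to label.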

Steppingstone to real-time brain mapping

“Traditionally scientists have looked at single neurons,” Rao said. “Our study gives a more global picture, at the level of very large networks of neurons, of how a person who is awake and paying attention perceives a complex visual object.”

The scientists’ technique, he said, is a steppingstone for brain mapping, in that it could be used to identify in real time which locations of the brain are sensitive to particular types of information.

“The computational tools that we developed can be applied to studies of motor function, studies of epilepsy, studies of memory. The math behind it, as applied to the biological, is fundamental to learning,” Ojemann added.

Lead author of the study is Kai Miller, a neurosurgery resident and physicist at Stanford University who obtained his M.D. and Ph.D. at the UW. Other collaborators were Dora Hermes, a Stanford postdoctoral fellow in neuroscience, and Gerwin Schalk, a neuroscientist at the Wadsworth Institute in New York.

This work was supported by the National Aeronautics and Space Administration’s Graduate Student Research Program, the National Institutes of Health, the National Science Foundation, and the U.S. Army.

* In previous studies, such as these three covered on KurzweilAI, brain images were reconstructed after they were viewed, not in real time: Study matches brain scans with topics of thoughts, Neuroscape Lab visualizes live brain functions using dramatic images, How to make movies of what the brain sees.

Abstract of Spontaneous Decoding of the Timing and Content of Human Object Perception from Cortical Surface Recordings Reveals Complementary Information in the Event-Related Potential and Broadband Spectral Change

The link between object perception and neural activity in visual cortical areas is a problem of fundamental importance in neuroscience. Here we show that electrical potentials from the ventral temporal cortical surface in humans contain sufficient information for spontaneous and near-instantaneous identification of a subject’s perceptual state. Electrocorticographic (ECoG) arrays were placed on the subtemporal cortical surface of seven epilepsy patients. Grayscale images of faces and houses were displayed rapidly in random sequence. We developed a template projection approach to decode the continuous ECoG data stream spontaneously, predicting the occurrence, timing and type of visual stimulus. In this setting, we evaluated the independent and joint use of two well-studied features of brain signals, broadband changes in the frequency power spectrum of the potential and deflections in the raw potential trace (event-related potential; ERP). Our ability to predict both the timing of stimulus onset and the type of image was best when we used a combination of both the broadband response and ERP, suggesting that they capture different and complementary aspects of the subject’s perceptual state. Specifically, we were able to predict the timing and type of 96% of all stimuli, with less than 5% false positive rate and a ~20ms error in timing.
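
To make the three figures quoted in the abstract concrete (fraction of stimuli predicted, false positive rate, and timing error), here is one hedged way such scores could be computed from lists of true and predicted events. The matching rule, the 160 ms tolerance, the definition of false positive rate as the share of predictions that match no stimulus, and the example events are all assumptions for illustration; the abstract does not spell out how the authors scored their results.

```python
def score_events(true_events, predicted_events, tolerance_ms=160):
    """Match each true event to the nearest unused prediction of the same type.

    Events are (time_ms, label) pairs. The tolerance is an assumed value.
    Returns (hit_rate, false_positive_rate, mean_timing_error_ms).
    """
    unmatched = list(predicted_events)
    errors = []
    for t_true, label in true_events:
        candidates = [
            p for p in unmatched
            if p[1] == label and abs(p[0] - t_true) <= tolerance_ms
        ]
        if candidates:
            best = min(candidates, key=lambda p: abs(p[0] - t_true))
            unmatched.remove(best)
            errors.append(abs(best[0] - t_true))
    hit_rate = len(errors) / len(true_events)
    # Assumed definition: predictions left without a matching stimulus.
    false_positive_rate = len(unmatched) / len(predicted_events) if predicted_events else 0.0
    mean_error = sum(errors) / len(errors) if errors else None
    return hit_rate, false_positive_rate, mean_error


# Hypothetical stimulus onsets and decoder output (times in milliseconds).
true_events = [(1000, "face"), (1800, "house"), (2600, "face"), (3400, "house")]
predicted_events = [
    (1015, "face"), (1790, "house"), (2630, "house"), (3420, "house"), (5000, "face"),
]

hit_rate, false_positive_rate, mean_error = score_events(true_events, predicted_events)
print(hit_rate, false_positive_rate, mean_error)
```

In this toy run the third stimulus is missed because the decoder reported the wrong image type, and the prediction at 5,000 ms has no stimulus to match, so both count against the decoder.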