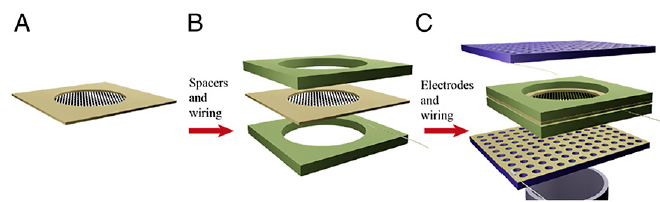

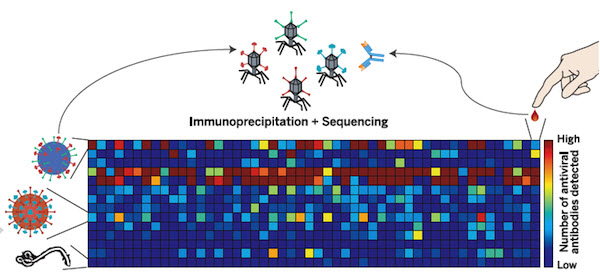

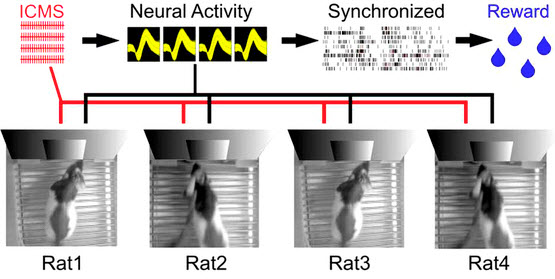

Experimental apparatus scheme for a Brainet computing device. A Brainet of four interconnected brains is shown. The arrows represent the flow of information through the Brainet. Inputs were delivered (red) as simultaneous intracortical microstimulation (ICMS) patterns (via implanted electrodes) to the somatosensory cortex of each rat. Neural activity (black) was then recorded and analyzed in real time. Rats were required to synchronize their neural activity with the other Brainet participants to receive water. (credit: Miguel Pais-Vieira et al./Scientific Reports)

Duke University neuroscientists have created a network called a “Brainet” that uses signals from electrode arrays implanted in the brains of multiple rodents and monkeys to merge the animals' collective brain activity. Networked monkeys jointly controlled a virtual avatar arm, and networked rats performed sophisticated computations, including image pattern recognition and even weather forecasting.

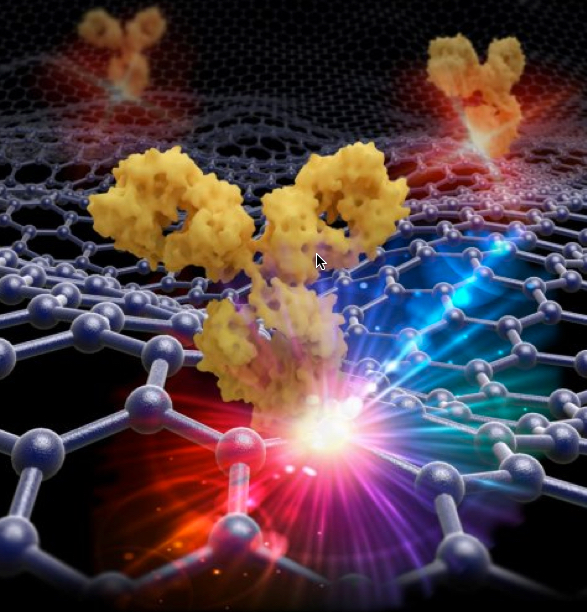

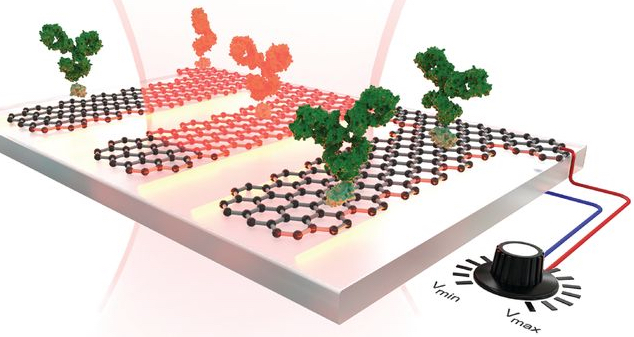

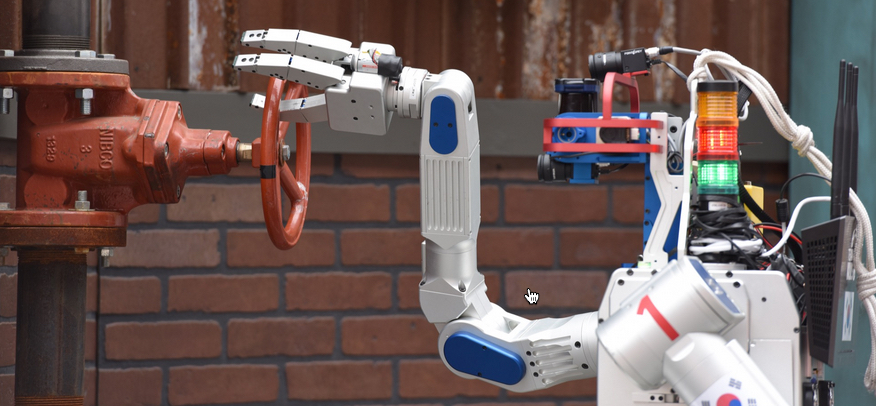

Brain-machine interfaces (BMIs) are computational systems that allow subjects to use their brain signals to directly control the movements of artificial devices, such as robotic arms, exoskeletons, or virtual avatars. Researchers at Duke's Center for Neuroengineering previously built BMIs that captured and transmitted the brain signals of individual rats, monkeys, and even human subjects to control such devices.

“Supra-brain” — the Matrix for monkeys?

As reported in two open-access papers published in the July 9, 2015 issue of Scientific Reports, the new research outfitted rhesus monkeys with electrocorticographic (ECoG) multiple-electrode arrays implanted in their motor and somatosensory cortices to capture and transmit their brain activity.

For one experiment, two monkeys were placed in separate rooms where they observed identical images of an avatar on a display monitor in front of them, and worked together to move the avatar on the screen to touch a moving target.

In another experiment, three monkeys were able to mentally control three degrees of freedom (dimensions) of a virtual arm movement in 3-D space. To achieve this performance, all three monkeys had to synchronize their collective brain activity to produce a “supra-brain” in charge of generating the 3-D movements of the virtual arm.

In the second Brainet study, three or four rats whose brains had been interconnected via pairwise brain-to-brain interfaces (BtBIs) performed a variety of sophisticated shared classification and other computational tasks in a distributed, parallel computing architecture.

Human Brainets next

These results support the Duke researchers' original proposal that Brainets may serve as test beds for the development of organic computers, created by interfacing multiple animals' brains with computers. Such an arrangement would employ a unique hybrid digital-analog computational engine as the basis of its operation, a clear departure from the digital-only mode of operation of modern computers.

“This is the first demonstration of a shared brain-machine interface,” said Miguel Nicolelis, M.D., Ph.D., co-director of the Center for Neuroengineering at the Duke University School of Medicine and principal investigator of the study. “We foresee that shared-BMIs will follow the same track and soon be translated to clinical practice.”

Nicolelis and colleagues of the Walk Again Project, based at the project’s laboratory in Brazil, are currently working to implement a non-invasive human Brainet to be employed in their neuro-rehabilitation training paradigm with severely paralyzed patients.

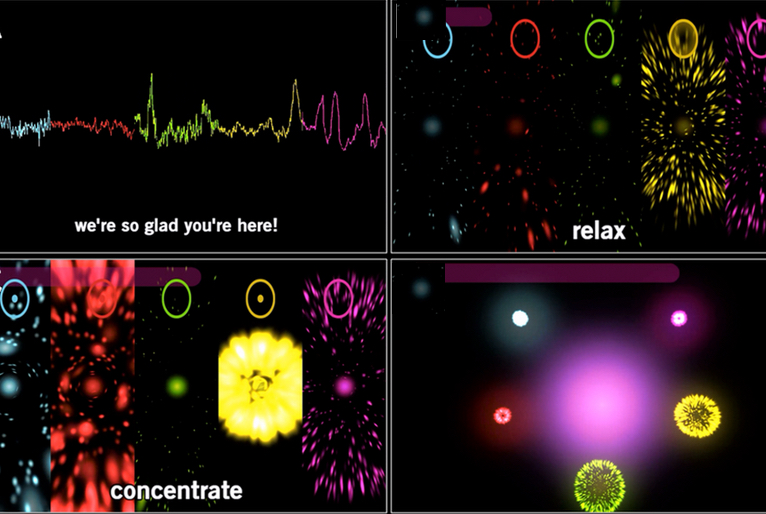

In this movie, three monkeys share control over the movement of a virtual arm in 3-D space. Each monkey contributes to two of the three axes (X, Y, and Z). Monkey C contributes to the y- and z-axes (red dot), monkey M contributes to the x- and y-axes (blue dot), and monkey K contributes to the x- and z-axes (green dot). The contributions of the two monkeys assigned to each axis are averaged to determine the arm position (represented by the black dot). (credit: Arjun Ramakrishnan et al./Scientific Reports)
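The per-axis averaging rule described in the caption can be sketched in a few lines of Python. This is a minimal illustration, not the authors' decoder: it assumes each monkey's neural activity has already been decoded into per-axis positions, and the names `arm_position`, `ASSIGNMENT`, and `monkey_1` to `monkey_3` are hypothetical. The subspace assignment follows the abstract's X-Y, Y-Z, X-Z split, so every axis has exactly two contributors.

```python
from statistics import mean

AXES = ("x", "y", "z")

# Hypothetical 2-D subspace assignment, one pair of axes per monkey, following
# the X-Y, Y-Z, X-Z split so that each axis is driven by exactly two monkeys.
ASSIGNMENT = {
    "monkey_1": ("x", "y"),
    "monkey_2": ("y", "z"),
    "monkey_3": ("x", "z"),
}


def arm_position(decoded: dict[str, dict[str, float]],
                 assignment: dict[str, tuple[str, str]] = ASSIGNMENT) -> dict[str, float]:
    """Return the shared 3-D arm position.

    `decoded` maps each monkey to its (assumed, already decoded) per-axis
    positions. For every axis, the values from the monkeys assigned to that
    axis are averaged; axes outside a monkey's assignment are ignored.
    """
    position = {}
    for axis in AXES:
        contributions = [decoded[monkey][axis]
                         for monkey, axes in assignment.items() if axis in axes]
        if not contributions:
            raise ValueError(f"no monkey controls the {axis} axis")
        position[axis] = mean(contributions)
    return position
```

For example, if `monkey_1` decodes x = 0.2 and `monkey_3` decodes x = 0.4, the shared arm's x-coordinate is their mean, 0.3. An axis with no assigned contributor raises an error instead of silently defaulting to zero.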

Abstract of Building an organic computing device with multiple interconnected brains

Recently, we proposed that Brainets, i.e. networks formed by multiple animal brains, cooperating and exchanging information in real time through direct brain-to-brain interfaces, could provide the core of a new type of computing device: an organic computer. Here, we describe the first experimental demonstration of such a Brainet, built by interconnecting four adult rat brains. Brainets worked by concurrently recording the extracellular electrical activity generated by populations of cortical neurons distributed across multiple rats chronically implanted with multi-electrode arrays. Cortical neuronal activity was recorded and analyzed in real time, and then delivered to the somatosensory cortices of other animals that participated in the Brainet using intracortical microstimulation (ICMS). Using this approach, different Brainet architectures solved a number of useful computational problems, such as discrete classification, image processing, storage and retrieval of tactile information, and even weather forecasting. Brainets consistently performed at the same or higher levels than single rats in these tasks. Based on these findings, we propose that Brainets could be used to investigate animal social behaviors as well as a test bed for exploring the properties and potential applications of organic computers.

Abstract of Computing arm movements with a monkey Brainet

Traditionally, brain-machine interfaces (BMIs) extract motor commands from a single brain to control the movements of artificial devices. Here, we introduce a Brainet that utilizes very-large-scale brain activity (VLSBA) from two (B2) or three (B3) nonhuman primates to engage in a common motor behaviour. A B2 generated 2D movements of an avatar arm where each monkey contributed equally to X and Y coordinates; or one monkey fully controlled the X-coordinate and the other controlled the Y-coordinate. A B3 produced arm movements in 3D space, while each monkey generated movements in 2D subspaces (X-Y, Y-Z, or X-Z). With long-term training we observed increased coordination of behavior, increased correlations in neuronal activity between different brains, and modifications to neuronal representation of the motor plan. Overall, performance of the Brainet improved owing to collective monkey behaviour. These results suggest that primate brains can be integrated into a Brainet, which self-adapts to achieve a common motor goal.
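The two B2 control schemes in this abstract can be contrasted with a short Python sketch. It is a hypothetical illustration that assumes each monkey's activity has already been decoded into a 2-D position and reads "contributed equally" as a plain average with equal weights; the function `b2_position` and its `mode` values are invented for the example rather than taken from the paper.

```python
from typing import NamedTuple


class Point2D(NamedTuple):
    x: float
    y: float


def b2_position(a: Point2D, b: Point2D, mode: str = "shared") -> Point2D:
    """Combine two monkeys' decoded 2-D positions into one avatar-arm position.

    mode="shared": both monkeys contribute equally to X and Y (equal-weight average).
    mode="split":  monkey A alone sets X and monkey B alone sets Y.
    """
    if mode == "shared":
        return Point2D((a.x + b.x) / 2, (a.y + b.y) / 2)
    if mode == "split":
        return Point2D(a.x, b.y)
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    a, b = Point2D(1.0, 2.0), Point2D(3.0, 4.0)
    print(b2_position(a, b))                # Point2D(x=2.0, y=3.0)
    print(b2_position(a, b, mode="split"))  # Point2D(x=1.0, y=4.0)
```

In the shared mode an error by one monkey is diluted by its partner on both axes, while in the split mode each monkey bears sole responsibility for one coordinate.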