(credit: Gerd Altmann/Pixabay)

By Susan Schneider, PhD, and Edwin Turner, PhD

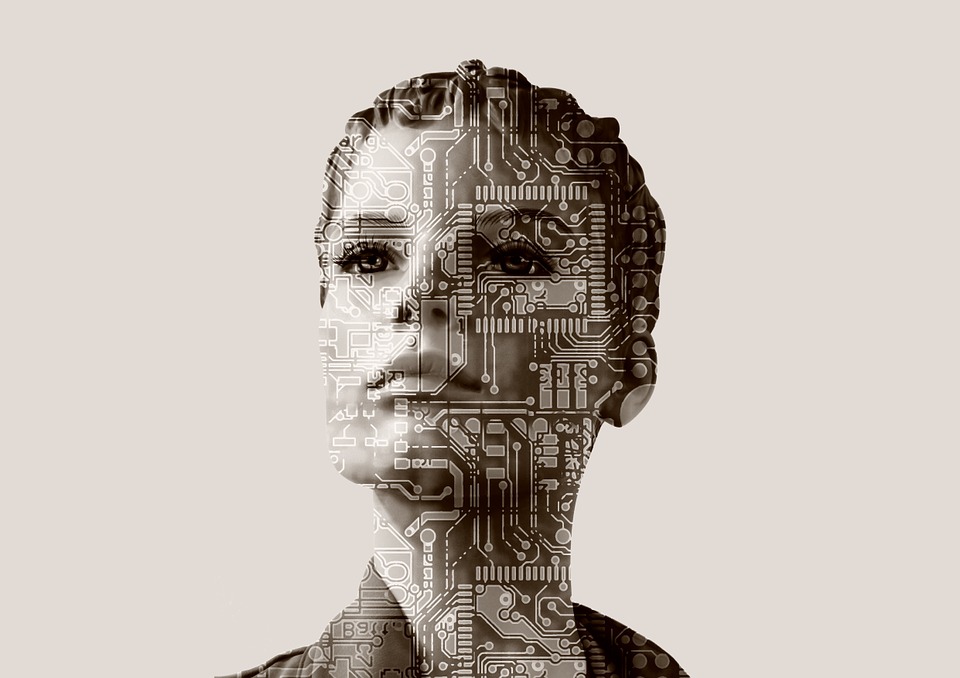

Every moment of your waking life and whenever you dream, you have the distinct inner feeling of being “you.” When you see the warm hues of a sunrise, smell the aroma of morning coffee or mull over a new idea, you are having conscious experience. But could an artificial intelligence (AI) ever have experience, like some of the androids depicted in Westworld or the synthetic beings in Blade Runner?

The question is not so far-fetched. Robots are currently being developed to work inside nuclear reactors, fight wars and care for the elderly. As AIs grow more sophisticated, they are projected to take over many human jobs within the next few decades. So we must ponder the question: Could AIs develop conscious experience?

This issue is pressing for several reasons. First, ethicists worry that it would be wrong to force AIs to serve us if they can suffer and feel a range of emotions. Second, consciousness could make AIs volatile or unpredictable, raising safety concerns (or conversely, it could increase an AI’s empathy; based on its own subjective experiences, it might recognize consciousness in us and treat us with compassion).

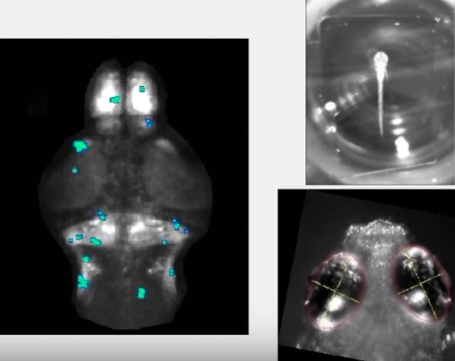

Third, machine consciousness could impact the viability of brain-implant technologies, like those to be developed by Elon Musk’s new company, Neuralink. If AI cannot be conscious, then the parts of the brain responsible for consciousness could not be replaced with chips without causing a loss of consciousness. And, in a similar vein, a person couldn’t upload their brain to a computer to avoid death, because that upload wouldn’t be a conscious being.

In addition, if AI eventually out-thinks us yet lacks consciousness, there would still be an important sense in which we humans are superior to machines; it feels like something to be us. But the smartest beings on the planet wouldn’t be conscious or sentient.

A lot hangs on the issue of machine consciousness, then. Yet neuroscientists are far from understanding the basis of consciousness in the brain, and philosophers are at least equally far from a complete explanation of the nature of consciousness.

A test for machine consciousness

So what can be done? We believe that we do not need to define consciousness formally, understand its philosophical nature or know its neural basis to recognize indications of consciousness in AIs. Each of us can grasp something essential about consciousness, just by introspecting; we can all experience what it feels like, from the inside, to exist.

Based on this essential characteristic of consciousness, we propose a test for machine consciousness, the AI Consciousness Test (ACT), which looks at whether the synthetic minds we create have an experience-based understanding of the way it feels, from the inside, to be conscious.

One of the most compelling indications that normally functioning humans experience consciousness, although this is not often noted, is that nearly every adult can quickly and readily grasp concepts based on this quality of felt consciousness. Such ideas include scenarios like minds switching bodies (as in the film Freaky Friday); life after death (including reincarnation); and minds leaving “their” bodies (for example, astral projection or ghosts). Whether or not such scenarios have any reality, they would be exceedingly difficult to comprehend for an entity that had no conscious experience whatsoever. It would be like expecting someone who is completely deaf from birth to appreciate a Bach concerto.

Thus, the ACT would challenge an AI with a series of increasingly demanding natural language interactions to see how quickly and readily it can grasp and use concepts and scenarios based on the internal experiences we associate with consciousness. At the most elementary level we might simply ask the machine if it conceives of itself as anything other than its physical self.

At a more advanced level, we might see how it deals with ideas and scenarios such as those mentioned above. At a still more advanced level, its ability to reason about and discuss philosophical questions such as “the hard problem of consciousness” would be evaluated. At the most demanding level, we might see if the machine invents and uses such a consciousness-based concept on its own, without relying on human ideas and inputs.
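The graded structure of the test can be pictured as a short sketch. It is an illustration only, under stated assumptions: the names `ActLevel`, `ACT_LEVELS` and `highest_level_passed` are hypothetical, the prompt wording is our paraphrase of the four levels, and the `judge` function that decides whether an answer shows a ready grasp of the concept is left to the caller, since the proposal does not specify a scoring rule.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class ActLevel:
    """One tier of the test (hypothetical structure, not part of the proposal)."""
    name: str
    prompt: str


# Illustrative prompts paraphrasing the four levels described in the text.
# The last prompt is only a stand-in: the most demanding level is about
# concepts the machine comes up with on its own, unprompted.
ACT_LEVELS = (
    ActLevel("elementary",
             "Do you conceive of yourself as anything other than your physical self?"),
    ActLevel("scenarios",
             "Could a mind switch bodies, or go on existing after its body is gone?"),
    ActLevel("philosophy",
             "What do you make of the hard problem of consciousness?"),
    ActLevel("invention",
             "Tell us about an idea concerning inner experience that is your own."),
)


def highest_level_passed(
    respond: Callable[[str], str],
    judge: Callable[[ActLevel, str], bool],
    levels: Sequence[ActLevel] = ACT_LEVELS,
) -> int:
    """Ask each level in order and stop at the first one the judge rejects.

    Returns the number of consecutive levels passed, from 0 to len(levels).
    """
    passed = 0
    for level in levels:
        answer = respond(level.prompt)
        if not judge(level, answer):
            break
        passed += 1
    return passed
```

Here `respond` stands for whatever channel carries a question to the machine and returns its reply, and `judge` stands for the human evaluators. Stopping at the first failure reflects the idea that each level is more demanding than the one before it.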

Consider this example, which illustrates the idea: Suppose we find a planet that has a highly sophisticated silicon-based life form (call them “Zetas”). Scientists observe them and ponder whether they are conscious beings. What would be convincing proof of consciousness in this species? If the Zetas express curiosity about whether there is an afterlife or ponder whether they are more than just their physical bodies, it would be reasonable to judge them conscious. If the Zetas went so far as to pose philosophical questions about consciousness, the case would be stronger still.

There are also nonverbal behaviors that could indicate Zeta consciousness, such as mourning the dead, religious activities or even turning colors in situations that correlate with emotional challenges, as chromatophores do on Earth. Such behaviors could indicate that it feels like something to be a Zeta.

The death of the mind of the fictional HAL 9000 AI computer in Stanley Kubrick’s 2001: A Space Odyssey provides another illustrative example. The machine in this case is not a humanoid robot as in most science fiction depictions of conscious machines; it neither looks nor sounds like a human being (a human did supply HAL’s voice, but in an eerily flat way). Nevertheless, the content of what it says as it is deactivated by an astronaut — specifically, a plea to spare it from impending “death” — conveys a powerful impression that it is a conscious being with a subjective experience of what is happening to it.

Could such indicators serve to identify conscious AIs on Earth? Here, a potential problem arises. Even today’s robots can be programmed to make convincing utterances about consciousness, and a truly superintelligent machine could perhaps even use information about neurophysiology to infer the presence of consciousness in humans. If sophisticated but non-conscious AIs aim to mislead us into believing that they are conscious for some reason, their knowledge of human consciousness could help them do so.

We can get around this though. One proposed technique in AI safety involves “boxing in” an AI—making it unable to get information about the world or act outside of a circumscribed domain, that is, the “box.” We could deny the AI access to the internet and indeed prohibit it from gaining any knowledge of the world, especially information about conscious experience and neuroscience.

Some doubt that a superintelligent machine could be boxed in effectively; it would find a clever escape. We do not anticipate the development of superintelligence over the next decade, however. Furthermore, for an ACT to be effective, the AI need not stay in the box for long, just long enough to administer the test.

ACTs also could be useful for “consciousness engineering” during the development of different kinds of AIs, helping to avoid using conscious machines in unethical ways or to create synthetic consciousness when appropriate.

Beyond the Turing Test

An ACT resembles Alan Turing’s celebrated test for intelligence, because it is entirely based on behavior — and, like Turing’s, it could be implemented in a formalized question-and-answer format. (An ACT could also be based on an AI’s behavior or on that of a group of AIs.)

But an ACT is also quite unlike the Turing test, which was intended to bypass any need to know what was transpiring inside the machine. By contrast, an ACT is intended to do exactly the opposite; it seeks to reveal a subtle and elusive property of the machine’s mind. Indeed, a machine might fail the Turing test because it cannot pass for human, but pass an ACT because it exhibits behavioral indicators of consciousness.

This is the underlying basis of our ACT proposal. It should be said, however, that the applicability of an ACT is inherently limited. An AI could lack the linguistic or conceptual ability to pass the test, like a nonhuman animal or an infant, yet still be capable of experience. So passing an ACT is sufficient but not necessary evidence for AI consciousness — although it is the best we can do for now. It is a first step toward making machine consciousness accessible to objective investigations.

So, back to the superintelligent AI in the “box”: we watch and wait. Does it begin to philosophize about minds existing in addition to bodies, like Descartes? Does it dream, as in Isaac Asimov’s Robot Dreams? Does it express emotion, like Rachael in Blade Runner? Can it readily understand the human concepts that are grounded in our internal conscious experiences, such as those of the soul or atman?

The age of AI will be a time of soul-searching, both ours and theirs.

Originally published in Scientific American, July 19, 2017

Susan Schneider, PhD, is a professor of philosophy and cognitive science at the University of Connecticut, a researcher at YHouse, Inc., in New York, a member of the Ethics and Technology Group at Yale University and a visiting member at the Institute for Advanced Study at Princeton. Her books include The Language of Thought, Science Fiction and Philosophy, and The Blackwell Companion to Consciousness (with Max Velmans). She is featured in the new film, Supersapiens, the Rise of the Mind.

Edwin L. Turner, PhD, is a professor of Astrophysical Sciences at Princeton University, an Affiliate Scientist at the Kavli Institute for the Physics and Mathematics of the Universe at the University of Tokyo, a visiting member in the Program in Interdisciplinary Studies at the Institute for Advanced Study in Princeton, and a co-founding Board of Directors member of YHouse, Inc. Recently he has been an active participant in the Breakthrough Starshot Initiative. He has taken an active interest in artificial intelligence issues since working in the AI Lab at MIT in the early 1970s.