UW (University of Washington) | UW researchers create realistic video from audio files alone

University of Washington researchers at the UW Graphics and Imaging Laboratory have developed new algorithms that turn audio clips of a person into a realistic, lip-synced video of that person, starting with an existing video in which the person speaks on a different topic.

As detailed in a paper to be presented Aug. 2 at SIGGRAPH 2017, the team successfully generated a highly realistic video of former President Barack Obama talking about terrorism, fatherhood, job creation and other topics, using audio clips of those speeches and existing weekly video addresses in which he originally spoke on a different topic.

Realistic audio-to-video conversion has practical applications like improving video conferencing for meetings (streaming audio over the internet takes up far less bandwidth than video, reducing video glitches), or holding a conversation with a historical figure in virtual reality, said Ira Kemelmacher-Shlizerman, an assistant professor at the UW’s Paul G. Allen School of Computer Science & Engineering.

Supasorn Suwajanakorn | Teaser — Synthesizing Obama: Learning Lip Sync from Audio

The new approach avoids the drawbacks of previous audio-to-video conversion processes, which involved filming multiple people in a studio saying the same sentences over and over to capture how a particular sound correlates with different mouth shapes. That procedure is expensive, tedious and time-consuming. The new machine learning tool may also help overcome the “uncanny valley” problem, in which an almost-but-not-quite realistic synthetic face strikes viewers as unsettling, and which has dogged efforts to create realistic video from audio.

How to do it

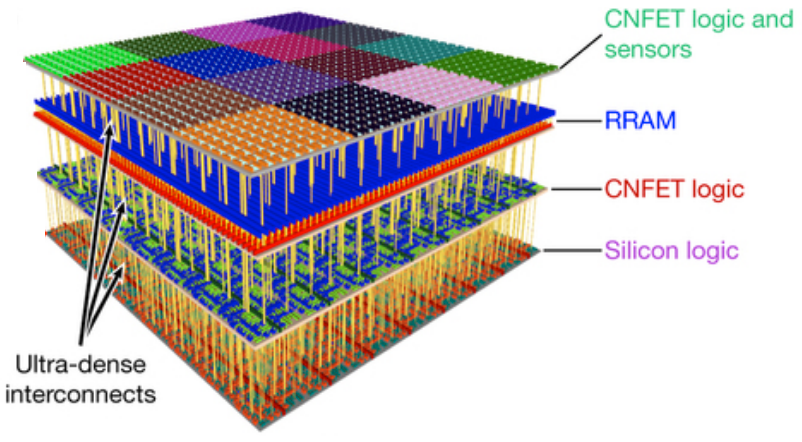

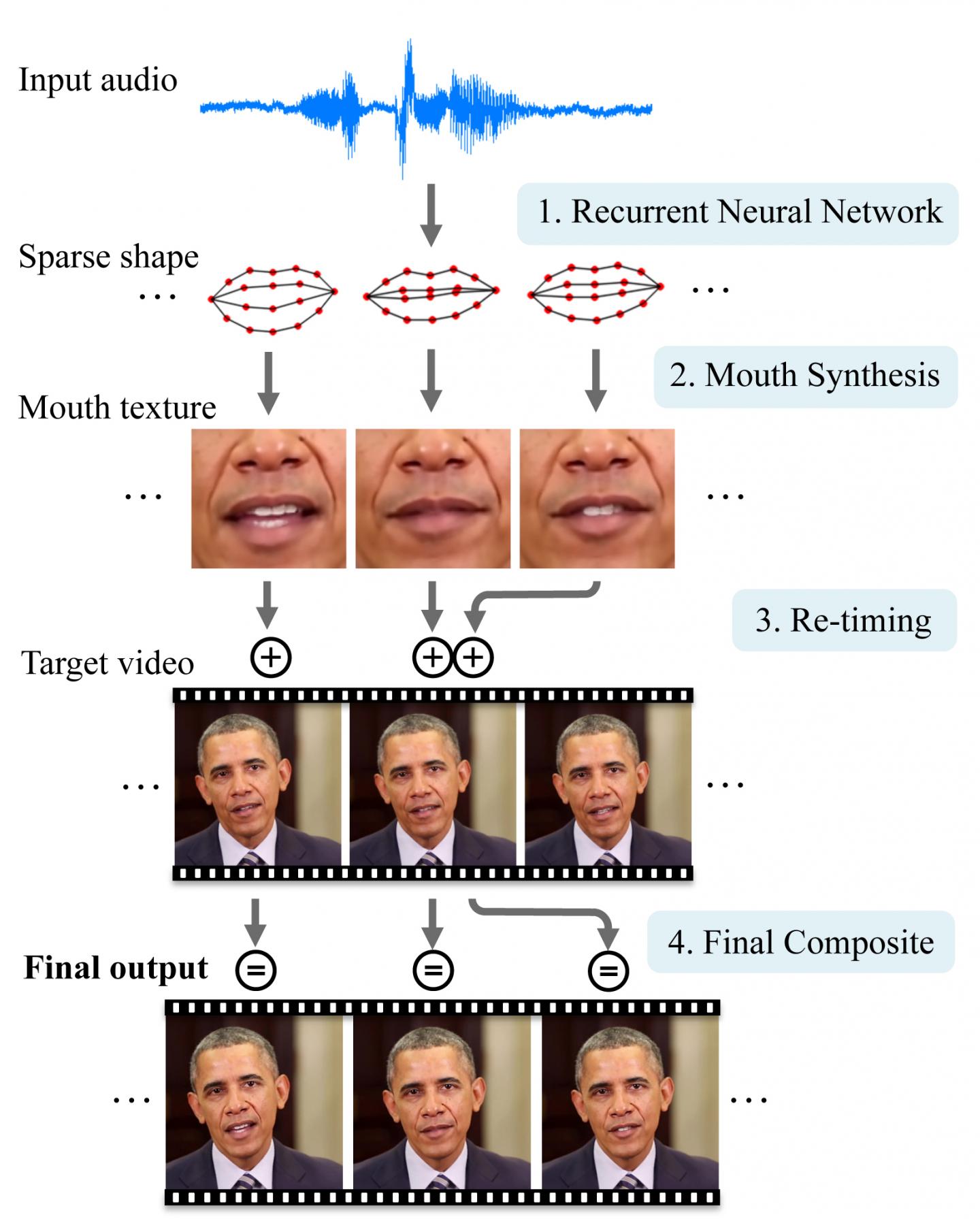

A neural network first converts the sounds from an audio file into basic mouth shapes. Then the system grafts and blends those mouth shapes onto an existing target video and adjusts the timing to create a realistic, lip-synced video of the person delivering the new speech. (credit: University of Washington)
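The "grafts and blends" step in the caption can be illustrated with plain alpha blending. This is only a minimal sketch under stated assumptions: the function name `blend_patch`, the grayscale list-of-lists image format, and the per-pixel mask are all hypothetical, and the researchers' actual compositing (which also matches 3D head pose) is more involved than this.

```python
def blend_patch(frame, patch, mask, top, left):
    """Alpha-blend `patch` into a copy of `frame` at row `top`, column `left`.

    frame, patch: 2D lists of grayscale values (hypothetical image format).
    mask: 2D list of weights in [0, 1] with the same shape as `patch`
          (1.0 = take the synthesized mouth pixel, 0.0 = keep the target frame).
    """
    out = [row[:] for row in frame]  # copy so the target frame is not modified
    for i, (patch_row, mask_row) in enumerate(zip(patch, mask)):
        for j, (pixel, alpha) in enumerate(zip(patch_row, mask_row)):
            y, x = top + i, left + j
            out[y][x] = alpha * pixel + (1.0 - alpha) * frame[y][x]
    return out


# Toy data: a flat 3x4 "frame" and a 1x2 "mouth patch" with a soft right edge.
frame = [[10.0] * 4 for _ in range(3)]
patch = [[200.0, 200.0]]
mask = [[1.0, 0.5]]
blended = blend_patch(frame, patch, mask, top=1, left=1)
```

A mask that fades toward the patch border, as in the toy data, is what keeps the seam between the synthesized mouth region and the original face from showing as a hard edge.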

1. Find or record a video of the person (or use video chat tools like Skype to create a new video) for the neural network to learn from. There are millions of hours of video that already exist from interviews, video chats, movies, television programs and other sources, the researchers note. (Obama was chosen because there were hours of presidential videos in the public domain.)

2. Train the neural network to watch videos of the person and translate different audio sounds into basic mouth shapes.

3. Feed the audio of the person’s speech to the system, which generates realistic mouth shapes and then grafts them onto, and blends them with, the head of that person in the target video. A small time shift lets the neural network anticipate what the person is going to say next.

4. Note the current limits. The neural network is designed to learn from one individual at a time, so Obama’s voice, speaking words he actually uttered, is the only information used to “drive” the synthesized video. Future steps include helping the algorithms generalize across situations so that they can learn a person’s voice and speech patterns from less data, for instance from one hour of video instead of 14 hours.
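The "small time shift" in step 3 can be read as follows: at each step the network has already heard a few audio frames beyond the video frame whose mouth shape it is predicting. The sketch below shows one way to build such shifted training targets. The function name `delayed_targets`, the `delay` parameter, and the string placeholders for mouth shapes are hypothetical and only illustrate the idea, not the researchers' code.

```python
def delayed_targets(mouth_shapes, delay):
    """Shift targets so that step s predicts the mouth shape of frame s - delay.

    While processing audio frame s, the network has heard `delay` frames past
    the frame it must describe. The first `delay` steps have no target (None).
    """
    if delay < 0 or delay > len(mouth_shapes):
        raise ValueError("delay must be between 0 and the sequence length")
    return [None] * delay + list(mouth_shapes[: len(mouth_shapes) - delay])


# Toy sequence of four per-frame mouth shapes and a look-ahead of two frames.
mouth = ["m0", "m1", "m2", "m3"]
targets = delayed_targets(mouth, delay=2)
```

With `delay=2`, the target at step 2 is the mouth shape of frame 0, so the prediction for each frame can take two further frames of audio into account.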

Fakes of fakes

So the obvious question is: Can you use someone else’s voice to drive a video of a person (assuming enough video of that person exists)? The researchers said they decided against going down that path, but they didn’t say it was impossible.

Even more pernicious: the words of the person in the original video (not just the voice) could be faked using Princeton/Adobe’s “VoCo” software (when available), simply by editing a text transcript of the voice recording, or the fake voice itself could be modified.

Or Disney Research’s FaceDirector could be used to edit separately recorded facial expressions (along with the fake voice) into the video.

However, by reversing the process — feeding video into the neural network instead of just audio — one could also potentially develop algorithms that could detect whether a video is real or manufactured, the researchers note.

The research was funded by Samsung, Google, Facebook, Intel, and the UW Animation Research Labs. You can contact the research team at audiolipsync@cs.washington.edu.

Abstract of Synthesizing Obama: Learning Lip Sync from Audio

Given audio of President Barack Obama, we synthesize a high quality video of him speaking with accurate lip sync, composited into a target video clip. Trained on many hours of his weekly address footage, a recurrent neural network learns the mapping from raw audio features to mouth shapes. Given the mouth shape at each time instant, we synthesize high quality mouth texture, and composite it with proper 3D pose matching to change what he appears to be saying in a target video to match the input audio track. Our approach produces photorealistic results.
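The abstract describes a recurrent neural network that maps audio features to mouth shapes. As a rough illustration of what "recurrent" means here, the sketch below runs a tiny Elman-style cell over a sequence, carrying a hidden state from one time step to the next. It is a toy under stated assumptions: the function `rnn_forward`, the weight matrices, and the one-dimensional features are hypothetical, the weights are untrained, and this is not necessarily the architecture the authors used.

```python
import math


def rnn_forward(audio_features, w_in, w_rec, w_out):
    """Run a minimal recurrent cell over a sequence of feature vectors.

    audio_features: list of input vectors, one per time step
    w_in:  hidden x input weights
    w_rec: hidden x hidden weights (carries context between steps)
    w_out: output x hidden weights
    Returns one output vector per step (a stand-in for mouth-shape values).
    """
    hidden = [0.0] * len(w_rec)
    outputs = []
    for x in audio_features:
        # New hidden state mixes the current input with the previous state.
        hidden = [
            math.tanh(
                sum(w * xi for w, xi in zip(w_in[h], x))
                + sum(w * hj for w, hj in zip(w_rec[h], hidden))
            )
            for h in range(len(w_rec))
        ]
        outputs.append([sum(w * hj for w, hj in zip(row, hidden)) for row in w_out])
    return outputs


# Toy weights: one input feature, one hidden unit, one output value.
outs = rnn_forward([[1.0], [0.0]], w_in=[[1.0]], w_rec=[[0.5]], w_out=[[2.0]])
```

The second input is zero, yet the second output is not, because the hidden state still remembers the first audio frame. That memory is what would let such a model produce mouth shapes that depend on the surrounding sounds rather than on a single instant.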