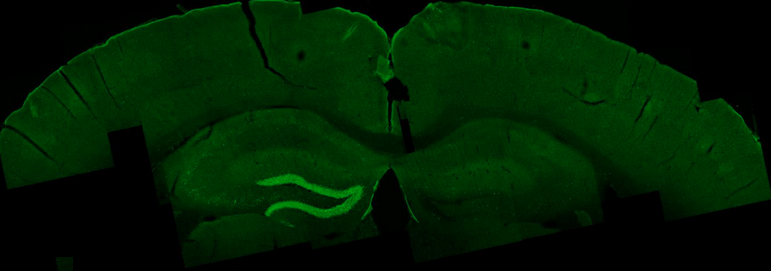

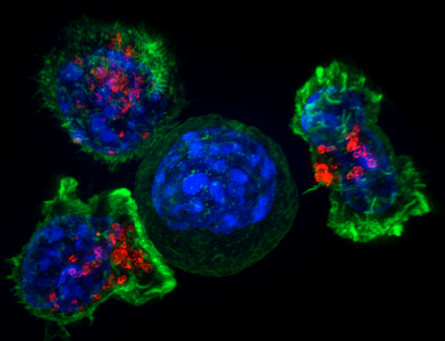

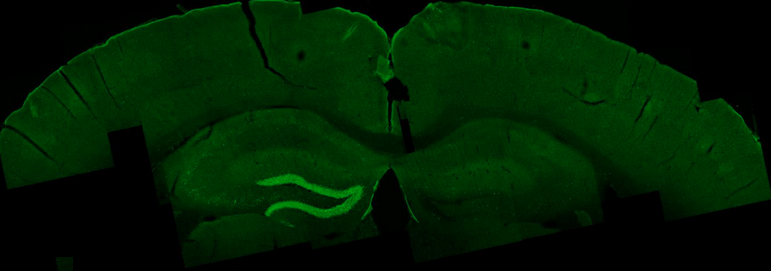

External electrical waves excite an area in the mouse hippocampus, shown in bright green. (credit: Nir Grossman, Ph.D., Suhasa B. Kodandaramaiah, Ph.D., and Andrii Rudenko, Ph.D.)

MIT researchers and associates have come up with a breakthrough method of remotely stimulating regions deep within the brain, which could replace the invasive surgery now required for implanting electrodes for Parkinson’s and other brain disorders.

The new method could make deep-brain stimulation for brain disorders less expensive, more accessible to patients, and less risky (avoiding brain hemorrhage and infection).

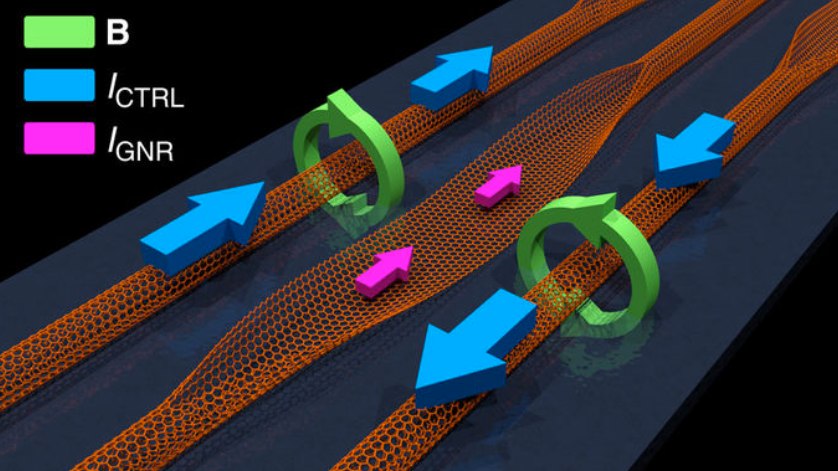

Working with mice, the researchers applied two high-frequency electrical currents at two slightly different frequencies (E1 and E2 in the diagram below), attaching electrodes (similar to those used with an EEG machine) to the surface of the skull.

A new noninvasive method for deep-brain stimulation (credit: Grossman et al./Cell)

At these high frequencies, the currents have no effect on brain tissues. But where the currents converge deep in the brain, they interfere with one another in such a way that they generate low-frequency current (corresponding to the red envelope in the diagram) inside neurons, thus stimulating neural electrical activity.
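The interference described here can be sketched with a standard trigonometric identity. This is an idealized illustration, not the paper's model: it assumes two sinusoidal currents of equal amplitude $A$ at frequencies $f_1$ and $f_2$, and ignores how tissue shapes the fields. The symbols $A$, $f_1$, $f_2$, and $\Delta f$ are notation introduced for this sketch.

$$
A\sin(2\pi f_1 t) + A\sin(2\pi f_2 t) = 2A\cos(\pi\,\Delta f\,t)\,\sin\!\left(2\pi\,\frac{f_1 + f_2}{2}\,t\right), \qquad \Delta f = f_2 - f_1
$$

The fast sine term oscillates at the average of the two high frequencies, while its amplitude is bounded by the slow envelope $\lvert 2A\cos(\pi\,\Delta f\,t)\rvert$, which rises and falls $\Delta f$ times per second. Under these assumptions, that slow envelope is what the red curve in the diagram represents.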

The researchers named this method “temporal interference stimulation” (that is, interference over time between two currents oscillating at slightly different frequencies, which generates the difference frequency).* For the experimental setup shown in the diagram above, the E1 current was 1 kHz (1,000 Hz), which mixed with a 1.04 kHz (1,040 Hz) E2 current. That generated a current with a 40 Hz “delta f” difference frequency, a frequency that can stimulate neural activity in the brain. (The researchers found no harmful effects in any part of the mouse brain.)
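The arithmetic above can be checked numerically. The following is a minimal sketch, assuming two ideal sine waves of equal amplitude at the 1 kHz and 1.04 kHz values quoted in the article; the function names and the 2 ms sampling window are hypothetical choices made for illustration and do not come from the study.

```python
import math


def beat_frequency(f1_hz, f2_hz):
    """Difference ("delta f") frequency of two tones, in Hz."""
    return abs(f2_hz - f1_hz)


def summed_signal(t, f1_hz, f2_hz, amplitude=1.0):
    """Sum of two equal-amplitude sine waves at time t (seconds)."""
    return amplitude * (
        math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)
    )


def peak_in_window(t_center, f1_hz, f2_hz, window_s=0.002, steps=2000):
    """Largest |signal| in a short window around t_center.

    The window (an assumed 2 ms) spans about two carrier cycles, so the
    peak is a rough estimate of the envelope height at t_center.
    """
    start = t_center - window_s / 2
    return max(
        abs(summed_signal(start + i * window_s / steps, f1_hz, f2_hz))
        for i in range(steps + 1)
    )


f1, f2 = 1000.0, 1040.0          # E1 and E2 frequencies quoted in the article
delta_f = beat_frequency(f1, f2)  # difference frequency
beat_period = 1.0 / delta_f       # time between successive envelope crests

crest = peak_in_window(0.0, f1, f2)               # envelope maximum
trough = peak_in_window(beat_period / 2, f1, f2)  # envelope minimum
next_crest = peak_in_window(beat_period, f1, f2)  # one beat period later

print(f"difference frequency: {delta_f} Hz")
print(f"envelope near crest:  {crest:.3f}")
print(f"envelope near trough: {trough:.3f}")
print(f"envelope one beat period later: {next_crest:.3f}")
```

In this idealized case the summed signal swells to about twice the single-wave amplitude, nearly cancels half a beat period later, and swells again after one full beat period (1/40 of a second), which is the slow rhythm the article calls the difference frequency.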

“Traditional deep-brain stimulation requires opening the skull and implanting an electrode, which can have complications,” explains Ed Boyden, an associate professor of biological engineering and brain and cognitive sciences at MIT, and the senior author of the study, which appears in the June 1, 2017 issue of the journal Cell. Also, “only a small number of people can do this kind of neurosurgery.”

Custom-designed, targeted deep-brain stimulation

If this new method is perfected and clinically tested, neurologists could control the size and location of the exact tissue that receives the electrical stimulation for each patient, by selecting the frequency of the currents and the number and location of the electrodes, according to the researchers.

Neurologists could also steer the location of deep-brain stimulation in real time, without moving the electrodes, by simply altering the currents. In this way, deep targets could be stimulated for conditions such as Parkinson’s, epilepsy, depression, and obsessive-compulsive disorder — without affecting surrounding brain structures.

The researchers are also exploring the possibility of using this method to experimentally treat other brain conditions, such as autism, and for basic science investigations.

Co-author Li-Huei Tsai, director of MIT’s Picower Institute for Learning and Memory, and researchers in her lab tested this technique in mice and found that they could stimulate small regions deep within the brain, including the hippocampus. They were also able to shift the site of stimulation, allowing them to activate different parts of the motor cortex and prompt the mice to move their limbs, ears, or whiskers.

“We showed that we can very precisely target a brain region to elicit not just neuronal activation but behavioral responses,” says Tsai.

Last year, Tsai showed that using light to visually induce brain waves of a particular frequency could substantially reduce, in the brains of mice, the beta amyloid plaques seen in Alzheimer’s disease. She now plans to explore whether this new type of electrical stimulation could offer a new way to generate the same type of beneficial brain waves.

This new method is also an alternative to other brain-stimulation methods.

Transcranial magnetic stimulation (TMS), which is FDA-approved for treating depression and is also used to study the basic science of cognition, emotion, sensation, and movement, can stimulate deep brain structures but can result in surface regions being strongly stimulated, according to the researchers.

Transcranial ultrasound, and the expression of heat-sensitive receptors combined with injection of thermomagnetic nanoparticles, have also been proposed, “but the unknown mechanism of action … and the need to genetically manipulate the brain, respectively, may limit their immediate use in humans,” the researchers note in the paper.

The MIT researchers collaborated with investigators at Beth Israel Deaconess Medical Center (BIDMC), the IT’IS Foundation, Harvard Medical School, and ETH Zurich.

The research was funded in part by the Wellcome Trust, a National Institutes of Health Director’s Pioneer Award, an NIH Director’s Transformative Research Award, the New York Stem Cell Foundation Robertson Investigator Award, the MIT Center for Brains, Minds, and Machines, Jeremy and Joyce Wertheimer, Google, a National Science Foundation Career Award, the MIT Synthetic Intelligence Project, and Harvard Catalyst: The Harvard Clinical and Translational Science Center.

* Similar to a radio-frequency or audio “beat frequency.”

Abstract of Noninvasive Deep Brain Stimulation via Temporally Interfering Electric Fields

We report a noninvasive strategy for electrically stimulating neurons at depth. By delivering to the brain multiple electric fields at frequencies too high to recruit neural firing, but which differ by a frequency within the dynamic range of neural firing, we can electrically stimulate neurons throughout a region where interference between the multiple fields results in a prominent electric field envelope modulated at the difference frequency. We validated this temporal interference (TI) concept via modeling and physics experiments, and verified that neurons in the living mouse brain could follow the electric field envelope. We demonstrate the utility of TI stimulation by stimulating neurons in the hippocampus of living mice without recruiting neurons of the overlying cortex. Finally, we show that by altering the currents delivered to a set of immobile electrodes, we can steerably evoke different motor patterns in living mice.