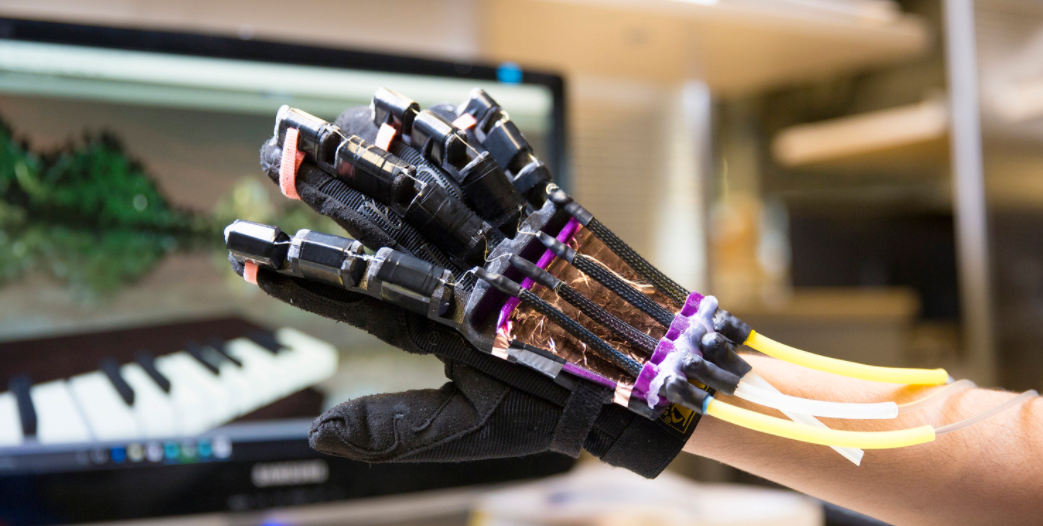

Prototype of haptic VR glove, using soft robotic “muscles” to provide realistic tactile feedback for VR experiences (credit: Jacobs School of Engineering/UC San Diego)

Engineers at UC San Diego have designed a light, flexible glove with soft robotic muscles that provide realistic tactile feedback for virtual reality (VR) experiences.

Currently, VR tactile-feedback user interfaces are bulky, uncomfortable to wear and clumsy, and they simply vibrate when a user touches a virtual surface or object.

“This is a first prototype, but it is surprisingly effective,” said Michael Tolley, a mechanical engineering professor at the Jacobs School of Engineering at UC San Diego and a senior author of a paper presented at the Electronic Imaging, Engineering Reality for Virtual Reality conference in Burlingame, California and published May 31, 2017 in Advanced Engineering Materials.

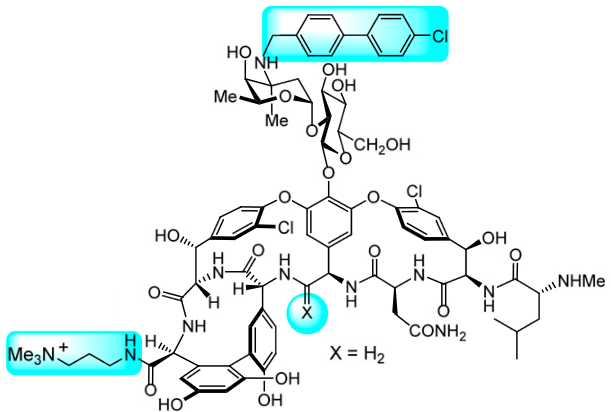

The key soft-robotic component of the new glove is a version of the “McKibben muscle” (a pneumatic, or air-based, actuator invented in the 1950s by the physician Joseph L. McKibben for use in prosthetic limbs), using soft latex chambers covered with braided fibers. The muscles respond like springs, applying tactile feedback when the user moves their fingers. A custom fluidic control board controls the muscles by inflating and deflating them.*

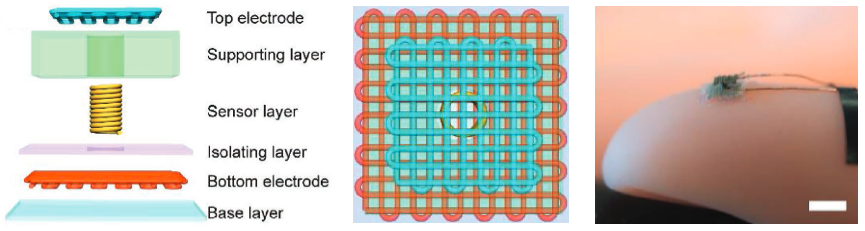

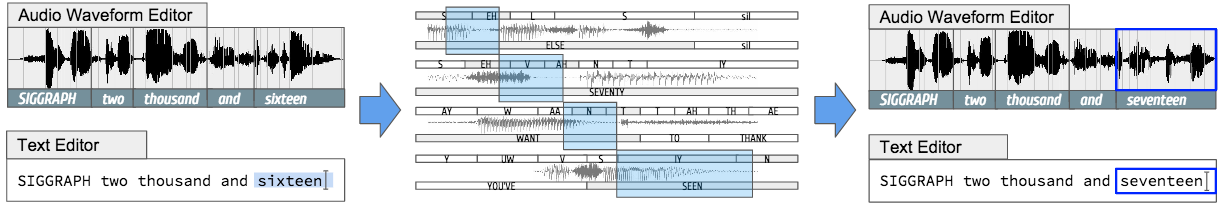

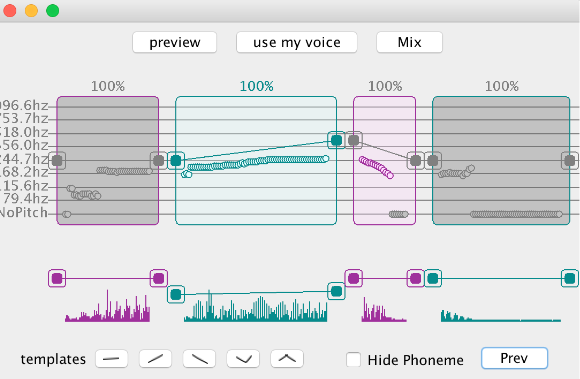

Prototype haptic VR glove system. A computer generates an image of a virtual world (in this case, a piano keyboard with a river and trees in the background) and sends it to the VR device (such as an Oculus Rift). A Leap Motion depth camera (on the table) detects the position and movement of the user’s hands and sends the data to the computer. The computer sends an image of the user’s hands superimposed over the keyboard (in the demo case) to the VR display and to a custom fluidic control board. The board then feeds back a signal to the soft robotic components in the glove to inflate or deflate the muscles on individual fingers, mimicking the user’s applied forces.
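The feedback step in this pipeline can be pictured with a minimal Python sketch. It is not the researchers' software: the function name, the fingertip-height input, the spring-like gain, and the pressure limit are all hypothetical, and the sketch simply assumes that a higher pressure command means more resistance at that finger. It shows one way tracked fingertip positions could be turned into a separate pressure target for each finger.

```python
from dataclasses import dataclass

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


@dataclass(frozen=True)
class GloveConfig:
    """Hypothetical tuning values; none of these come from the paper."""
    key_surface_mm: float = 0.0         # height of the virtual key tops
    stiffness_kpa_per_mm: float = 5.0   # spring-like gain: pressure per mm pressed
    max_pressure_kpa: float = 50.0      # upper limit on any single command


def pressure_commands(fingertip_heights_mm, config=GloveConfig()):
    """Map tracked fingertip heights to one target pressure per finger.

    A fingertip below the key surface counts as pressing a key. The target
    pressure grows linearly with how far the key is pressed (a spring-like
    response) and is clamped to the configured maximum.
    """
    commands = {}
    for finger in FINGERS:
        height = fingertip_heights_mm[finger]
        depth = max(0.0, config.key_surface_mm - height)  # 0 when not touching
        pressure = min(config.max_pressure_kpa,
                       config.stiffness_kpa_per_mm * depth)
        commands[finger] = pressure
    return commands


if __name__ == "__main__":
    # One made-up tracking frame: heights in mm relative to the key surface.
    frame = {"thumb": 12.0, "index": -2.0, "middle": 4.0,
             "ring": -20.0, "pinky": 0.0}
    for finger, kpa in pressure_commands(frame).items():
        print(f"{finger}: {kpa:.1f} kPa")
```

In this made-up frame only the index and ring fingers are below the key surface, so only those two receive a nonzero command, and the ring finger's command is capped at the limit. A real controller would also need valve timing and sensing, which the sketch leaves out.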

The engineers conducted an informal pilot study of 15 users, including two VR interface experts. The demo allowed them to play the piano in VR. They all agreed that the gloves made the experience more immersive, describing it as “mesmerizing” and “amazing.”

VR headset image of a piano, showing user’s finger actions (credit: Jacobs School of Engineering/UC San Diego)

The engineers say they’re working on making the glove cheaper, less bulky, and more portable. They would also like to bypass the Leap Motion device altogether to make the system more self-contained and compact. “Our final goal is to create a device that provides a richer experience in VR,” Tolley said. “But you could imagine it being used for surgery and video games, among other applications.”

* The researchers 3D-printed a mold to make the gloves’ soft exoskeleton. This will make the devices easier to manufacture and suitable for mass production, they said. Researchers used silicone rubber for the exoskeleton, with Velcro straps embedded at the joints.

JacobsSchoolNews | A glove powered by soft robotics to interact with virtual reality environments

Abstract of Soft Robotics: Review of Fluid-Driven Intrinsically Soft Devices; Manufacturing, Sensing, Control, and Applications in Human-Robot Interaction

The emerging field of soft robotics makes use of many classes of materials including metals, low glass transition temperature (Tg) plastics, and high Tg elastomers. Dependent on the specific design, all of these materials may result in extrinsically soft robots. Organic elastomers, however, have elastic moduli ranging from tens of megapascals down to kilopascals; robots composed of such materials are intrinsically soft: they are always compliant independent of their shape. This class of soft machines has been used to reduce control complexity and manufacturing cost of robots, while enabling sophisticated and novel functionalities often in direct contact with humans. This review focuses on a particular type of intrinsically soft, elastomeric robot: those powered via fluidic pressurization.