Near-infrared (NIR) light passes through brain tissue with much less scattering than visible light, allowing it to reach deep structures. There, upconversion nanoparticles (UCNPs; blue) previously injected into the tissue can absorb this light and emit shorter-wavelength blue-green light that can activate nearby neurons. (credit: RIKEN)

An international team of researchers has developed a way to shine light at new depths in the brain. The work may lead to the development of new, non-invasive clinical treatments for neurological disorders and new research tools.

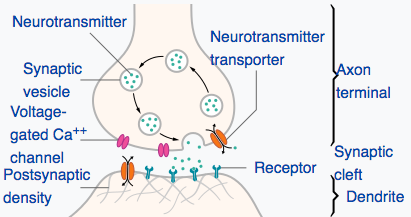

The new method extends the depth that can be reached by optogenetics, a method for stimulating neurons with light. In optogenetics, blue-green light turns on “light-gated ion channels” in neurons to stimulate neural activity. But blue-green light is heavily scattered by tissue, which limits how deep it can penetrate; reaching deep brain structures currently requires inserting invasive optical fibers.

The researchers took a new approach to brain stimulation, as they reported in Science on February 9.

- They used longer-wavelength (650 to 1350 nm) near-infrared (NIR) light, which can pass through the skull and penetrate deeper into the brains of mice.

- The NIR light illuminated “upconversion nanoparticles” (UCNPs), which absorbed the near-infrared laser light and emitted blue-green light in deep, previously inaccessible target areas of the brain.*

- The blue-green light then opened ion channels in the neurons (via chromophores, light-responsive molecules), turning on memory cells in the hippocampus and activating neurons in other areas. These included the medial septum, where nanoparticle-emitted light helped synchronize neurons in a brain wave called the theta rhythm.**

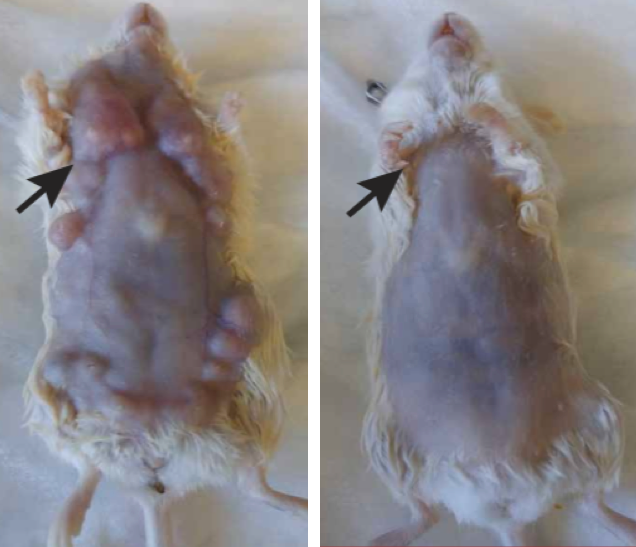

Non-invasive activation of neurons in the VTA, a reward center of the mouse brain. The blue-light-sensitive channel ChR2 (green) was expressed (following an injection) on both sides of the VTA, but upconversion nanoparticles (blue) were injected only on the right. So when near-IR light was applied to both sides, it induced expression of cFos (red), an activity-dependent gene used as a marker of neuronal activation, only on the side with the nanoparticles. (credit: RIKEN)

This study was a collaboration between scientists at the RIKEN Brain Science Institute, the National University of Singapore, the University of Tokyo, Johns Hopkins University, and Keio University.

Non-invasive light therapy

“Nanoparticles effectively extend the reach of our lasers, enabling ‘remote’ delivery of light and potentially leading to non-invasive therapies,” says Thomas McHugh, research group leader at the RIKEN Brain Science Institute in Japan. In addition to activating neurons, UCNPs can also be used for inhibition. In this study, UCNPs were able to quell experimental seizures in mice by emitting yellow light to silence hyperexcitable neurons.

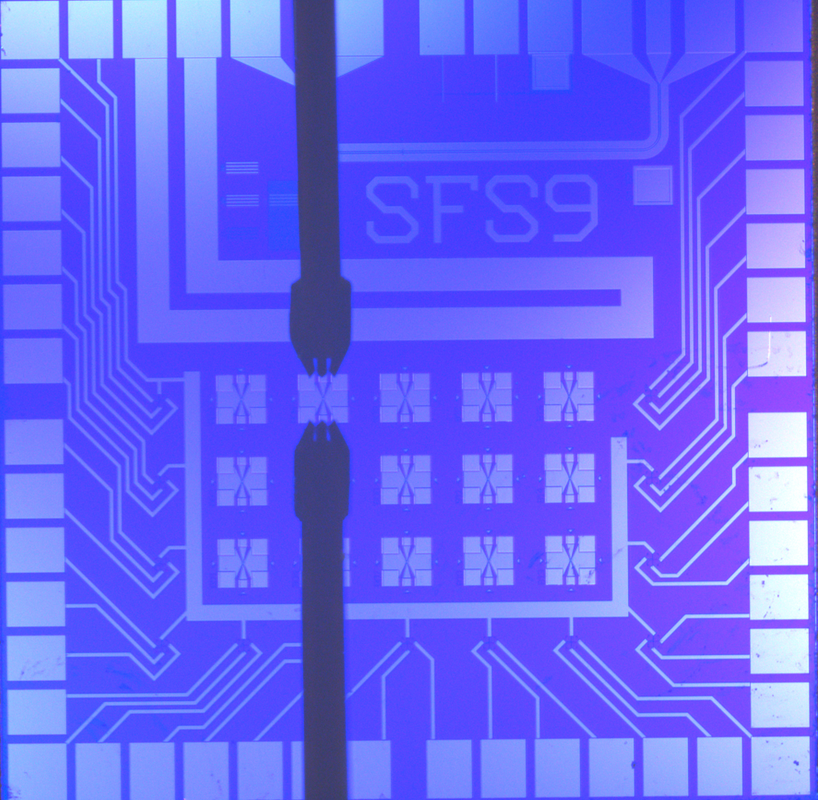

Schematic showing near-infrared radiation (NIR) being absorbed by upconversion nanoparticles (UCNPs) and re-emitted as shorter-wavelength blue light (peaking at 450 and 475 nm). This light activates a light-sensitive protein expressed in neurons following an earlier injection, in this case channelrhodopsin-2 (ChR2), a light-gated cation channel. In one experiment, opening ChR2 let cations (such as sodium and calcium) flow into neurons in the ventral tegmental area (VTA) of the mouse brain (a region located ~4.2 mm below the skull), stimulating them. (credit: Shuo Chen et al./Science)
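Upconversion can seem counterintuitive, because each emitted blue photon carries more energy than any single absorbed NIR photon. A quick energy-conservation check makes this concrete. The sketch below is a back-of-the-envelope illustration, not the authors' analysis: it assumes the 980-nm excitation wavelength reported in the paper (quoted in the first footnote), uses E = hc/λ, and ignores the small thermal (phonon) energy that can contribute in real crystals. The function names are hypothetical helpers written for this example.

```python
import math

# Exact SI constants (2019 redefinition)
PLANCK_H = 6.62607015e-34        # Planck constant, J*s
SPEED_OF_LIGHT = 2.99792458e8    # speed of light in vacuum, m/s
JOULES_PER_EV = 1.602176634e-19  # 1 eV expressed in joules


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon, E = h*c/lambda, in electronvolts."""
    return PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9) / JOULES_PER_EV


def min_photons_for_emission(excitation_nm: float, emission_nm: float) -> int:
    """Energy-conservation lower bound on how many excitation photons must be
    absorbed to emit one photon at emission_nm (phonon contributions ignored)."""
    return math.ceil(photon_energy_ev(emission_nm) / photon_energy_ev(excitation_nm))


EXCITATION_NM = 980             # NIR excitation wavelength reported in the paper
EMISSION_PEAKS_NM = (450, 475)  # blue emission peaks of the UCNPs

if __name__ == "__main__":
    print(f"{EXCITATION_NM} nm photon: {photon_energy_ev(EXCITATION_NM):.3f} eV")
    for peak in EMISSION_PEAKS_NM:
        n = min_photons_for_emission(EXCITATION_NM, peak)
        print(f"{peak} nm photon: {photon_energy_ev(peak):.3f} eV -> "
              f"at least {n} absorbed {EXCITATION_NM}-nm photons")
```

Two 980-nm photons supply only about 2.53 eV, short of either blue peak (about 2.76 eV at 450 nm and 2.61 eV at 475 nm), so at least three must be pooled for each emitted blue photon. The bound says nothing about which lanthanide ions or energy-transfer pathways the particles actually use; the article does not specify them.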

While current deep brain stimulation is effective in alleviating specific neurological symptoms, it lacks cell-type specificity and requires permanently implanted electrodes, the researchers note.

The nanoparticles described in this study are compatible with the various light-activated channels currently in use in the optogenetics field and can be employed for neural activation or inhibition in many deep brain structures. “The nanoparticles appear to be quite stable and biocompatible, making them viable for long-term use. Plus, the low dispersion means we can target neurons very specifically,” says McHugh.

However, “a number of challenges must be overcome before this technique can be used in patients,” say Neus Feliu et al. in “Toward an optically controlled brain,” Science, 09 Feb 2018. “Specifically, neurons have to be transfected with light-gated ion channels … a substantial challenge [and] … placed close to the target neurons. … Neuronal networks undergo continuous changes [so] the stimulation pattern and placement of [nanoparticles] may have to be adjusted over time. … Potent upconverting NPs are also needed … [which] may change properties over time, such as structural degradation and loss of functional properties. … Long-term toxicity studies also need to be carried out.”

* “The lanthanide-doped up-conversion nanoparticles (UCNPs) were capable of converting low-energy incident NIR photons into high-energy visible emission with an efficiency orders of magnitude greater than that of multiphoton processes. … The core-shell UCNPs exhibited a characteristic up-conversion emission spectrum peaking at 450 and 475 nm upon excitation at 980 nm. Upon transcranial delivery of 980-nm CW laser pulses at a peak power of 2.0 W (25-ms pulses at 20 Hz over 1 s), an upconverted emission with a power density of ~0.063 mW/mm² was detected. The conversion yield of NIR to blue light was ~2.5%. NIR pulses delivered across a wide range of laser energies to living tissue result in little photochemical or thermal damage.” — Shuo Chen et al./Science
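The stimulation protocol quoted above can be unpacked with simple arithmetic: 25-ms pulses repeated at 20 Hz mean the laser is on half the time. The sketch below computes duty cycle, average power, and energy for the stated 2.0 W peak power over a 1-s train. `PulseTrain` is a hypothetical helper written for this illustration, and the figures describe the laser output only; they do not account for losses in the skull and tissue, which the article does not quantify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PulseTrain:
    """Hypothetical helper describing a gated (on/off) laser pulse train."""
    peak_power_w: float      # optical power while the laser is on, W
    pulse_width_s: float     # duration of each pulse, s
    repetition_hz: float     # pulses per second
    train_duration_s: float  # total length of the train, s

    @property
    def duty_cycle(self) -> float:
        # Fraction of time the laser is on
        return self.pulse_width_s * self.repetition_hz

    @property
    def average_power_w(self) -> float:
        # Time-averaged power over the whole train
        return self.peak_power_w * self.duty_cycle

    @property
    def pulse_count(self) -> int:
        return round(self.repetition_hz * self.train_duration_s)

    @property
    def energy_per_pulse_j(self) -> float:
        return self.peak_power_w * self.pulse_width_s

    @property
    def total_energy_j(self) -> float:
        return self.energy_per_pulse_j * self.pulse_count


# Parameters quoted from Chen et al.: 2.0 W peak, 25-ms pulses at 20 Hz over 1 s
train = PulseTrain(peak_power_w=2.0, pulse_width_s=0.025,
                   repetition_hz=20, train_duration_s=1.0)

print(f"duty cycle:       {train.duty_cycle:.0%}")
print(f"average power:    {train.average_power_w:.2f} W")
print(f"pulses:           {train.pulse_count}")
print(f"energy per pulse: {train.energy_per_pulse_j * 1e3:.0f} mJ")
print(f"total energy:     {train.total_energy_j:.2f} J")
```

With these inputs the script reports a 50% duty cycle, 1.00 W average power, and 20 pulses of 50 mJ each, for 1.00 J in total. The ~2.5% conversion yield is deliberately not applied here, because the article does not say how much NIR power actually reaches the nanoparticles at depth.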

** “Memory recall in mice also persisted in tests two weeks later. This indicates that the UCNPs remained at the injection site, which was confirmed through microscopy of the brains.” — Shuo Chen et al./Science

Abstract of Near-infrared deep brain stimulation via upconversion nanoparticle–mediated optogenetics

Optogenetics has revolutionized the experimental interrogation of neural circuits and holds promise for the treatment of neurological disorders. It is limited, however, because visible light cannot penetrate deep inside brain tissue. Upconversion nanoparticles (UCNPs) absorb tissue-penetrating near-infrared (NIR) light and emit wavelength-specific visible light. Here, we demonstrate that molecularly tailored UCNPs can serve as optogenetic actuators of transcranial NIR light to stimulate deep brain neurons. Transcranial NIR UCNP-mediated optogenetics evoked dopamine release from genetically tagged neurons in the ventral tegmental area, induced brain oscillations through activation of inhibitory neurons in the medial septum, silenced seizure by inhibition of hippocampal excitatory cells, and triggered memory recall. UCNP technology will enable less-invasive optical neuronal activity manipulation with the potential for remote therapy.