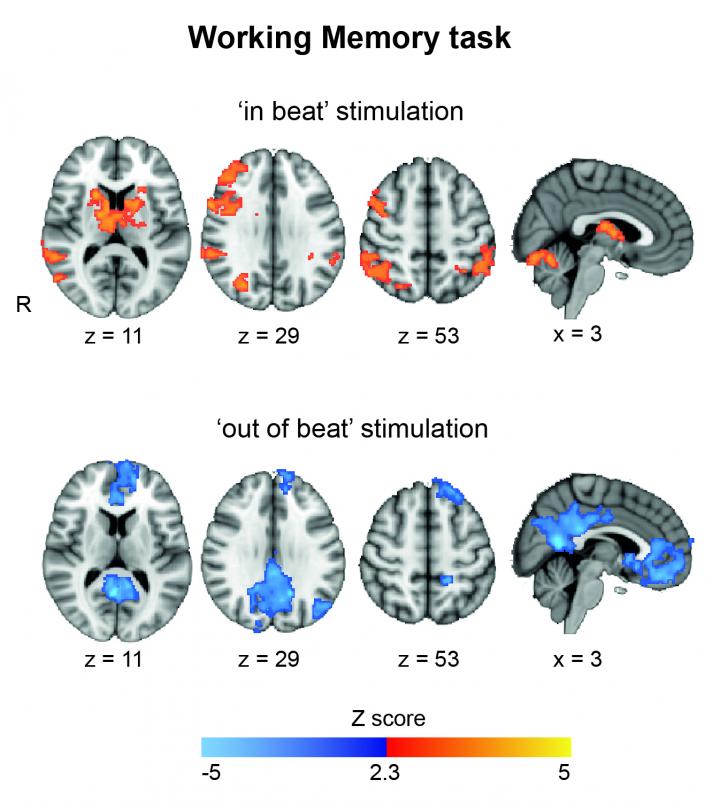

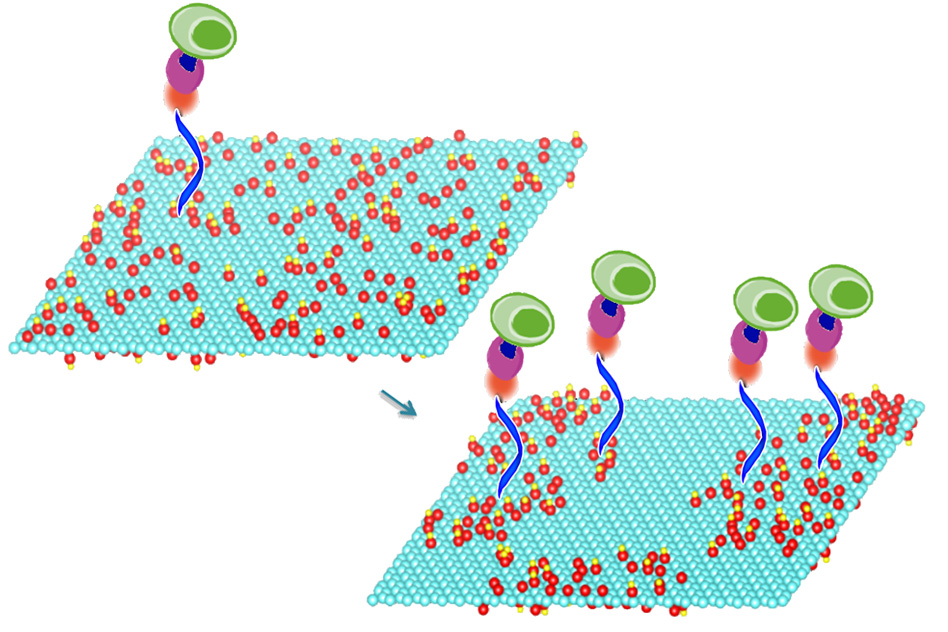

A new, very-low-cost diagnostic method. Mild heating of graphene oxide sheets makes it possible to bond particular compounds (blue, orange, purple) to the sheets' surface, a new study shows. These compounds in turn select and bond with specific molecules of interest, including DNA and proteins, or even whole cells. In this image, the treated graphene oxide on the right has its oxygen groups (red) clustered together, making it nearly twice as efficient at capturing cells (green) as the material on the left. (credit: the researchers)

A new method developed at MIT and National Chiao Tung University, based on specially treated sheets of graphene oxide, could make it possible to capture and analyze individual cells from a small sample of blood. It could potentially lead to very-low-cost diagnostic devices (less than $5 apiece) that could be mass-produced and used almost anywhere for point-of-care testing, especially in resource-constrained settings.

A single cell can contain a wealth of information about the health of an individual. The new system could ultimately lead to a range of simple devices capable of performing sensitive diagnostic tests, such as cancer screening or treatment follow-up, even in places far from typical medical facilities.

How to capture DNA, proteins, or even whole cells for analysis

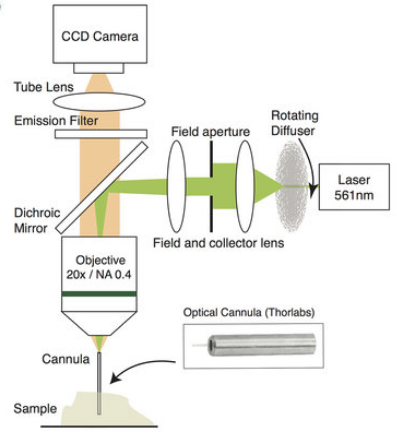

The material (graphene oxide, or GO) used in this research is an oxidized version of the two-dimensional form of pure carbon known as graphene. The key to the new process is heating the graphene oxide at relatively mild temperatures.

This process, known as low-temperature annealing, makes it possible to bond particular compounds to the material's surface; those compounds can then be used to capture molecules of diagnostic interest.

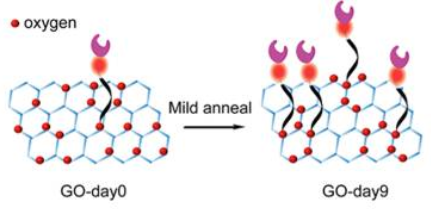

Schematic showing oxygen clustering, resulting in improved ability to recognize foreign molecules (credit: Neelkanth M. Bardhan et al./ACS Nano)

The heating process changes the material’s surface properties, causing oxygen atoms to cluster together, leaving spaces of bare graphene between them. This leaves room to attach other chemicals to the surface, which can be used to select and bond with specific molecules of interest, including DNA and proteins, or even whole cells. Once captured, those molecules or cells can then be subjected to a variety of tests.*

Nanobodies

The new research demonstrates how that basic process could potentially enable a suite of low-cost diagnostic systems.

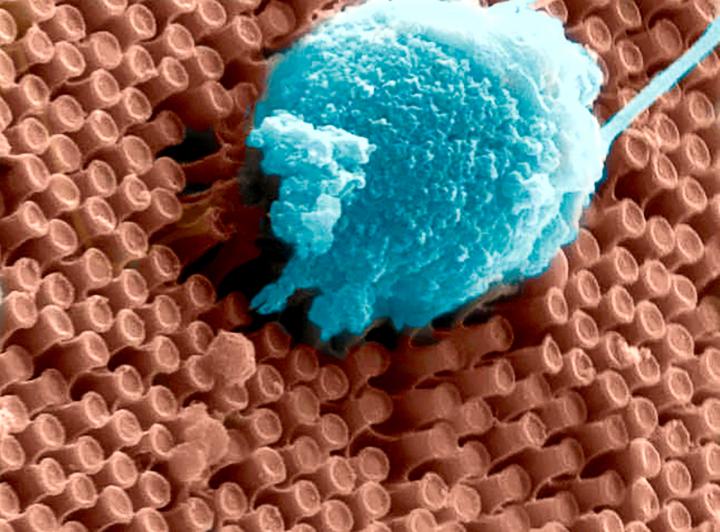

For this proof-of-concept test, the team used molecules that can quickly and efficiently capture specific immune cells that are markers for certain cancers. They were able to demonstrate that their treated graphene oxide surfaces were almost twice as effective at capturing such cells from whole blood, compared to devices fabricated using ordinary, untreated graphene oxide.

They did this by enzymatically coating the treated graphene oxide surface with small antibody fragments called "nanobodies," which can be cheaply and easily produced in large quantities in bioreactors and are highly selective for particular biomolecules.**

The new process allows for rapid capture and assessment of cells or biomolecules within about 10 minutes and without the need for refrigeration of samples or incubators for precise temperature control. And the whole system is compatible with existing large-scale manufacturing methods.

The researchers believe many different tests could be incorporated on a single device, all of which could be placed on a small glass slide like those used for microscopy. The basic processing method could also make possible a wide variety of other applications, including solar cells and light-emitting devices.

The findings are reported in the journal ACS Nano. Authors include Angela Belcher, the James Mason Crafts Professor in biological engineering and materials science and engineering at MIT and a member of the Koch Institute for Integrative Cancer Research; Jeffrey Grossman, the Morton and Claire Goulder and Family Professor in Environmental Systems at MIT; Hidde L. Ploegh, a professor of biology and member of the Whitehead Institute for Biomedical Research; Guan-Yu Chen, an assistant professor in biomedical engineering at National Chiao Tung University in Taiwan; and Zeyang Li, a doctoral student at the Whitehead Institute.

“Efficiency is especially important if you’re trying to detect a rare event,” Belcher says. “The goal of this was to show a high efficiency of capture.” The next step after this basic proof of concept, she says, is to try to make a working detector for a specific disease model.

The work was supported by the Army Research Office Institute for Collaborative Biotechnologies and MIT’s Tata Center and Solar Frontiers Center.

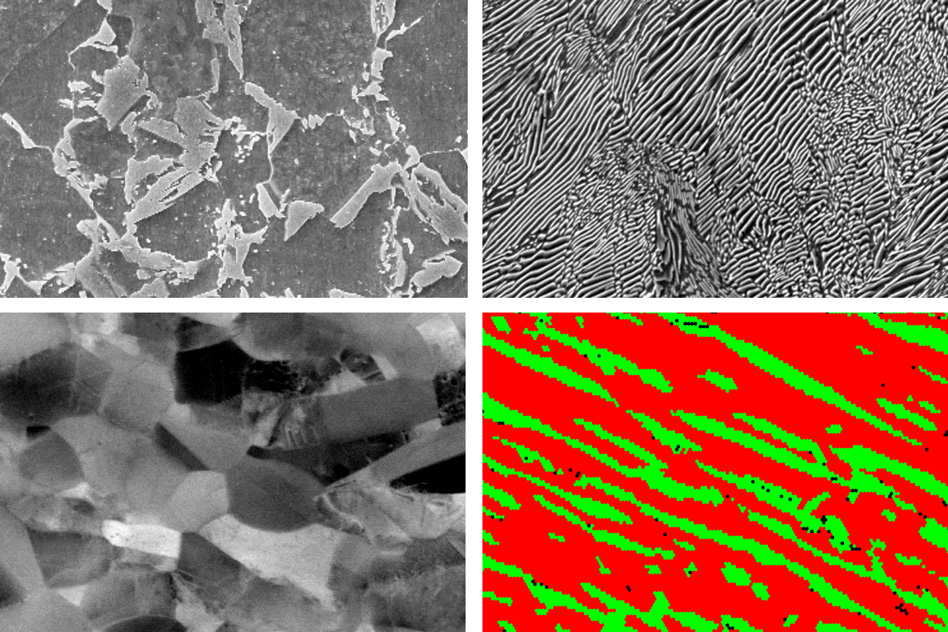

* Other researchers have been trying to develop diagnostic systems that use a graphene oxide substrate to capture specific cells or molecules, but these approaches used only the raw, untreated material. Despite a decade of research, other attempts to improve such devices' efficiency have relied on external modifications, such as surface patterning through lithographic fabrication or added microfluidic channels, which increase cost and complexity. Existing methods for treating graphene oxide for this purpose require high-temperature treatments or harsh chemicals; the new system, which the group has patented, requires no chemical pretreatment and an annealing temperature of just 50 to 80 degrees Celsius (122 to 176 °F).

** The researchers found that increasing the annealing time steadily increased the efficiency of cell capture: After nine days of annealing, the efficiency of capturing cells from whole blood went from 54 percent, for untreated graphene oxide, to 92 percent for the treated material. The team then performed molecular dynamics simulations to understand the fundamental changes in the reactivity of the graphene oxide base material. The simulation results, which the team also verified experimentally, suggested that upon annealing, the relative fraction of one type of oxygen (carbonyl) increases at the expense of the other types of oxygen functional groups (epoxy and hydroxyl) as a result of the oxygen clustering. This change makes the material more reactive, which explains the higher density of cell capture agents and increased efficiency of cell capture.
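To put the "almost double" claim in numbers, here is a minimal Python sketch that does the arithmetic on the two reported mean capture efficiencies (54 percent untreated, 92 percent after nine days of annealing). The helper names are hypothetical and not part of the study, and the sketch deliberately ignores the reported uncertainties (±3 and ±7 percent), so it compares means only.

```python
# Reported mean capture efficiencies from whole blood.
untreated = 0.54  # as-synthesized graphene oxide
annealed = 0.92   # after nine days of mild annealing

def fold_change(before: float, after: float) -> float:
    """Ratio of the new capture efficiency to the old one."""
    return after / before

def percentage_point_gain(before: float, after: float) -> float:
    """Absolute difference in efficiency, in percentage points."""
    return (after - before) * 100

def missed_fraction_ratio(before: float, after: float) -> float:
    """How many times smaller the fraction of missed target cells becomes."""
    return (1 - before) / (1 - after)

ratio = fold_change(untreated, annealed)
gain = percentage_point_gain(untreated, annealed)
missed = missed_fraction_ratio(untreated, annealed)

print(f"fold change: {ratio:.2f}x")           # prints "fold change: 1.70x"
print(f"absolute gain: {gain:.0f} points")    # prints "absolute gain: 38 points"
print(f"missed cells reduced {missed:.2f}x")  # prints "missed cells reduced 5.75x"
```

On these means the treated material captures about 1.7 times as many target cells. Looked at the other way, the share of target cells that slip through drops from 46 percent to 8 percent, which is the figure that matters most when, as Belcher notes, you are trying to detect a rare event.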

Abstract of Enhanced Cell Capture on Functionalized Graphene Oxide Nanosheets through Oxygen Clustering

With the global rise in incidence of cancer and infectious diseases, there is a need for the development of techniques to diagnose, treat, and monitor these conditions. The ability to efficiently capture and isolate cells and other biomolecules from peripheral whole blood for downstream analyses is a necessary requirement. Graphene oxide (GO) is an attractive template nanomaterial for such biosensing applications. Favorable properties include its two-dimensional architecture and wide range of functionalization chemistries, offering significant potential to tailor affinity toward aromatic functional groups expressed in biomolecules of interest. However, a limitation of current techniques is that as-synthesized GO nanosheets are used directly in sensing applications, and the benefits of their structural modification on the device performance have remained unexplored. Here, we report a microfluidic-free, sensitive, planar device on treated GO substrates to enable quick and efficient capture of Class-II MHC-positive cells from murine whole blood. We achieve this by using a mild thermal annealing treatment on the GO substrates, which drives a phase transformation through oxygen clustering. Using a combination of experimental observations and MD simulations, we demonstrate that this process leads to improved reactivity and density of functionalization of cell capture agents, resulting in an enhanced cell capture efficiency of 92 ± 7% at room temperature, almost double the efficiency afforded by devices made using as-synthesized GO (54 ± 3%). Our work highlights a scalable, cost-effective, general approach to improve the functionalization of GO, which creates diverse opportunities for various next-generation device applications.