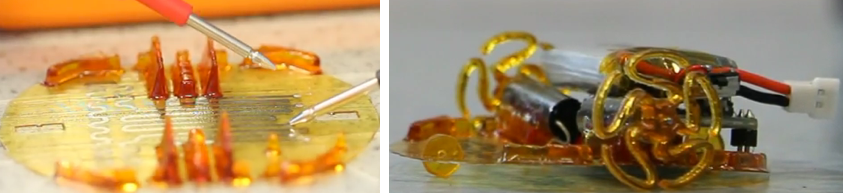

New physically unclonable nanomaterial (credit: Abdullah Alharbi et al./ACS Nano)

Recent advances in quantum computing may soon give hackers access to machines powerful enough to break even the strongest standard internet encryption. If that encryption were broken, all of our online data, from medical records to bank transactions, could be vulnerable to attack.

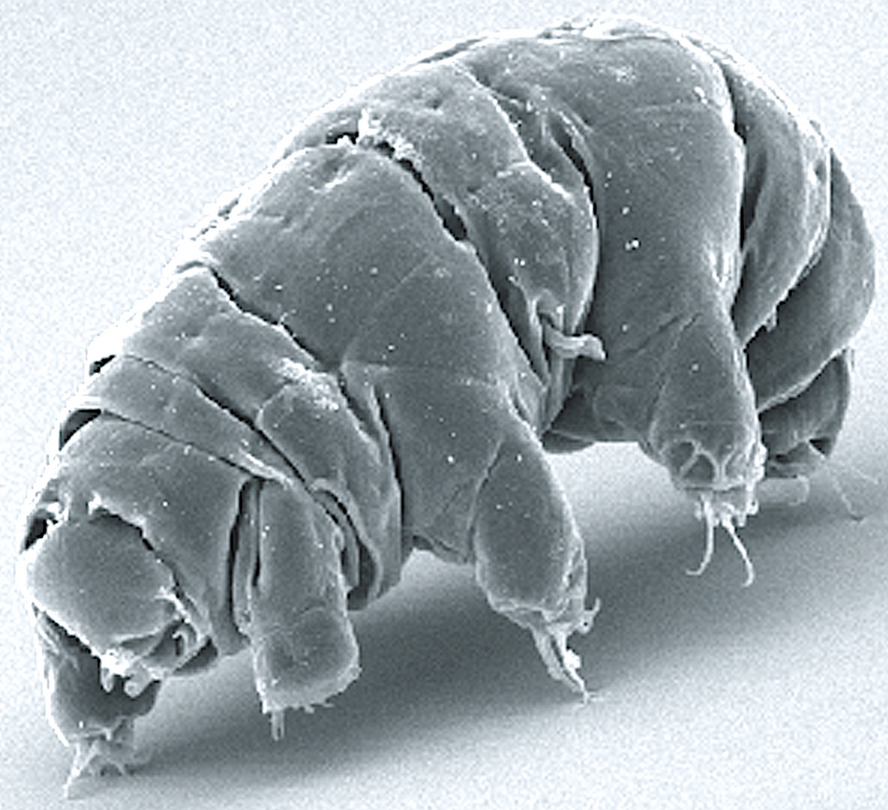

Now, researchers at the New York University Tandon School of Engineering have developed a low-cost nanomaterial that can be tuned to act as a secure authentication key for computer hardware and data. Because the layered molybdenum disulfide (MoS2) nanomaterial cannot be physically cloned (duplicated), it could replace keys stored as programming, which can be hacked.

In a paper published in the journal ACS Nano, the researchers explain that the new nanomaterial has the highest possible level of structural randomness (complete spatial randomness), which makes it physically unclonable. The randomness shows up as randomly placed regions that either emit light or do not, because the material's light emission depends on how many MoS2 layers are present at each spot. When the material is illuminated, this pattern can be converted into a one-of-a-kind binary cryptographic authentication key that could secure hardware components at minimal cost.
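The step from a light-emission pattern to a binary key can be sketched as simple thresholding: each pixel above a brightness cutoff becomes a 1, the rest become 0. The sketch below is illustrative only. The pixel intensities are random stand-in data rather than measurements from the paper, the median threshold and the names `photoemission_to_key`, `fractional_hamming_distance`, and `simulate_readout` are hypothetical, and the Hamming-distance comparison is a common way of evaluating such keys, not necessarily the authors' procedure.

```python
import random

def photoemission_to_key(intensities, threshold):
    """Read each pixel's light-emission intensity as one key bit.

    Illustrative assumption: pixels brighter than the threshold become 1,
    all others become 0.
    """
    return [1 if value > threshold else 0 for value in intensities]

def median(values):
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2

def fractional_hamming_distance(a, b):
    """Fraction of bit positions where two equal-length keys differ."""
    if len(a) != len(b):
        raise ValueError("keys must have the same length")
    return sum(x != y for x, y in zip(a, b)) / len(a)

def simulate_readout(n_pixels=2048, noise=0.01, seed=0):
    rng = random.Random(seed)
    # Hypothetical stand-in data: random per-pixel intensities for two
    # independent devices (not measurements from the paper).
    device_a = [rng.random() for _ in range(n_pixels)]
    device_b = [rng.random() for _ in range(n_pixels)]
    # A second, slightly noisy optical readout of device A.
    reread_a = [v + rng.gauss(0.0, noise) for v in device_a]

    threshold = median(device_a)  # splits device A's pixels evenly into 0s and 1s
    key_a = photoemission_to_key(device_a, threshold)
    key_a2 = photoemission_to_key(reread_a, threshold)
    key_b = photoemission_to_key(device_b, median(device_b))
    return {
        "ones_fraction": sum(key_a) / len(key_a),
        "same_device_distance": fractional_hamming_distance(key_a, key_a2),
        "different_device_distance": fractional_hamming_distance(key_a, key_b),
    }

if __name__ == "__main__":
    print(simulate_readout())
```

In this toy setup, two readouts of the same device should give nearly identical keys, while keys from two independently random devices should differ in roughly half of their bits. That contrast is what lets such a key act as a device fingerprint.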

The research team envisions a future in which similar nanomaterials can be inexpensively produced at scale and applied to a chip or other hardware component. “No metal contacts are required, and production could take place independently of the chip fabrication process,” according to Davood Shahrjerdi, Assistant Professor of Electrical and Computer Engineering. “It’s maximum security with minimal investment.”

The National Science Foundation and the U.S. Army Research Office supported the research.

A high-speed quantum encryption system to secure the future internet

Schematic of the experimental quantum key distribution setup (credit: Nurul T. Islam et al./Science Advances)

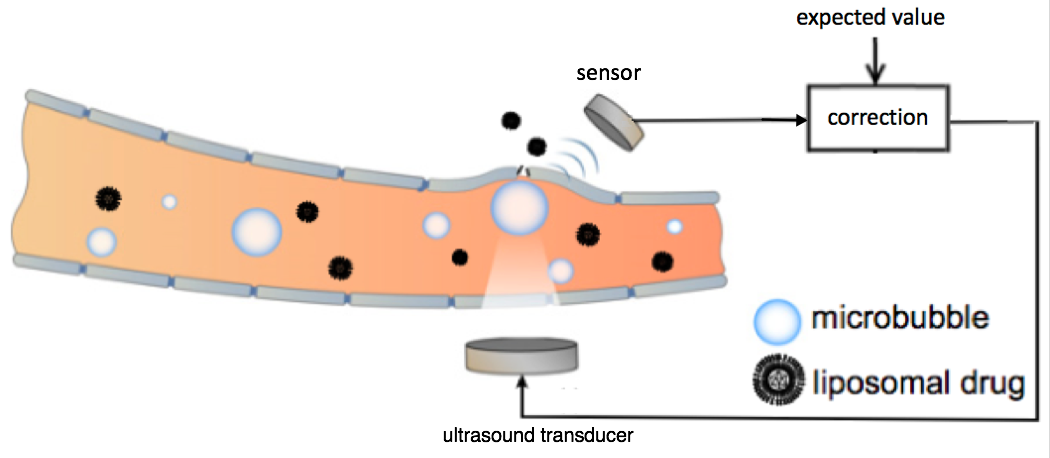

Another approach to the hacker threat is being developed by scientists at Duke University, The Ohio State University, and Oak Ridge National Laboratory. It would use the same quantum properties that power quantum computers to create theoretically hack-proof quantum data encryption.

Called quantum key distribution (QKD), the approach takes advantage of a fundamental property of quantum mechanics: measuring tiny bits of matter such as electrons or photons disturbs their state, so an eavesdropper's measurements introduce errors that alert both parties to a security breach. However, current QKD systems can transmit keys only at relatively low rates (up to hundreds of kilobits per second), which are too slow for most practical uses on the internet.
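The way measurement exposes an eavesdropper can be illustrated with a toy simulation of BB84, the textbook QKD protocol. This is a swapped-in example: the Duke/Ohio State/Oak Ridge system uses high-dimensional time-bin states, not BB84, and the sketch assumes an ideal channel with no loss or noise. The function name `bb84_qber` is hypothetical. An eavesdropper who measures each photon in a randomly chosen basis and resends it raises the error rate in the shared key from 0 to about 25%, which the two parties can detect by comparing a sample of their bits.

```python
import random

def bb84_qber(n_photons, eavesdrop, seed=0):
    """Quantum bit error rate of an idealized BB84 run (toy model).

    Bases are 0 (rectilinear) or 1 (diagonal). Measuring in the wrong
    basis yields a random bit; measuring in the right basis yields the
    encoded bit. Only rounds where Alice and Bob chose the same basis
    are kept ("sifting").
    """
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        photon_basis, photon_bit = alice_basis, bit

        if eavesdrop:
            # Intercept-resend attack: Eve measures, then re-sends her result.
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: random outcome
            photon_basis = eve_basis  # resent photon is prepared in Eve's basis

        bob_basis = rng.randint(0, 1)
        if bob_basis == photon_basis:
            bob_bit = photon_bit
        else:
            bob_bit = rng.randint(0, 1)

        if bob_basis == alice_basis:  # sifting step
            sifted += 1
            errors += bob_bit != bit
    return errors / sifted

if __name__ == "__main__":
    print("no eavesdropper:", bb84_qber(20000, eavesdrop=False))
    print("eavesdropper:   ", bb84_qber(20000, eavesdrop=True))
```

Real systems also see errors from channel noise and imperfect equipment, so they set an error threshold and estimate how much information could have leaked instead of expecting exactly zero errors.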

The new experimental QKD system can create and distribute encryption keys at megabit-per-second rates, 5 to 10 times faster than existing methods and on a par with current internet speeds when several systems run in parallel. In an open-access article in Science Advances, the researchers show that the technique is secure against common attacks, even when equipment flaws could otherwise open up leaks.

This research was supported by the Office of Naval Research, the Defense Advanced Research Projects Agency, and Oak Ridge National Laboratory.

Abstract of Physically Unclonable Cryptographic Primitives by Chemical Vapor Deposition of Layered MoS2

Physically unclonable cryptographic primitives are promising for securing the rapidly growing number of electronic devices. Here, we introduce physically unclonable primitives from layered molybdenum disulfide (MoS2) by leveraging the natural randomness of their island growth during chemical vapor deposition (CVD). We synthesize a MoS2 monolayer film covered with speckles of multilayer islands, where the growth process is engineered for an optimal speckle density. Using the Clark–Evans test, we confirm that the distribution of islands on the film exhibits complete spatial randomness, hence indicating the growth of multilayer speckles is a spatial Poisson process. Such a property is highly desirable for constructing unpredictable cryptographic primitives. The security primitive is an array of 2048 pixels fabricated from this film. The complex structure of the pixels makes the physical duplication of the array impossible (i.e., physically unclonable). A unique optical response is generated by applying an optical stimulus to the structure. The basis for this unique response is the dependence of the photoemission on the number of MoS2 layers, which by design is random throughout the film. Using a threshold value for the photoemission, we convert the optical response into binary cryptographic keys. We show that the proper selection of this threshold is crucial for maximizing combination randomness and that the optimal value of the threshold is linked directly to the growth process. This study reveals an opportunity for generating robust and versatile security primitives from layered transition metal dichalcogenides.
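The Clark–Evans test mentioned in the abstract compares the mean distance from each point (here, each multilayer island) to its nearest neighbour with the value expected under complete spatial randomness. The sketch below is a minimal version, assuming island centres are given as (x, y) coordinates in a window of known area. It uses the standard Clark–Evans expected distance and standard error but applies no edge correction, and the function name `clark_evans` and the demo point sets are hypothetical rather than taken from the paper.

```python
import math
import random

def clark_evans(points, area):
    """Clark–Evans nearest-neighbour test for a 2D point pattern.

    Returns (R, z): R is the observed mean nearest-neighbour distance divided
    by 0.5 / sqrt(density), its expected value under complete spatial
    randomness (CSR). R near 1 is consistent with CSR, R < 1 suggests
    clustering, R > 1 suggests a regular pattern. No edge correction is
    applied, which biases R slightly upward for small windows.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    density = n / area
    total = 0.0
    for i, (xi, yi) in enumerate(points):
        # Brute-force nearest neighbour, O(n^2): fine for a few hundred points.
        total += min(
            math.hypot(xi - xj, yi - yj)
            for j, (xj, yj) in enumerate(points)
            if j != i
        )
    observed = total / n
    expected = 0.5 / math.sqrt(density)
    std_error = 0.26136 / math.sqrt(n * density)
    return observed / expected, (observed - expected) / std_error

if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical island centres scattered uniformly in a unit square.
    islands = [(rng.random(), rng.random()) for _ in range(400)]
    # A perfectly regular 20 x 20 grid for comparison.
    grid = [((i + 0.5) / 20, (j + 0.5) / 20) for i in range(20) for j in range(20)]
    print("random:", clark_evans(islands, 1.0))
    print("grid:  ", clark_evans(grid, 1.0))
```

For uniformly scattered points R comes out close to 1, while the regular grid gives R = 2, the value for a square lattice. A pattern that cannot be distinguished from CSR offers no spatial regularity an attacker could exploit.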

Abstract of Provably secure and high-rate quantum key distribution with time-bin qudits

The security of conventional cryptography systems is threatened in the forthcoming era of quantum computers. Quantum key distribution (QKD) features fundamentally proven security and offers a promising option for quantum-proof cryptography solution. Although prototype QKD systems over optical fiber have been demonstrated over the years, the key generation rates remain several orders of magnitude lower than current classical communication systems. In an effort toward a commercially viable QKD system with improved key generation rates, we developed a discrete-variable QKD system based on time-bin quantum photonic states that can generate provably secure cryptographic keys at megabit-per-second rates over metropolitan distances. We use high-dimensional quantum states that transmit more than one secret bit per received photon, alleviating detector saturation effects in the superconducting nanowire single-photon detectors used in our system that feature very high detection efficiency (of more than 70%) and low timing jitter (of less than 40 ps). Our system is constructed using commercial off-the-shelf components, and the adopted protocol can be readily extended to free-space quantum channels. The security analysis adopted to distill the keys ensures that the demonstrated protocol is robust against coherent attacks, finite-size effects, and a broad class of experimental imperfections identified in our system.
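The abstract's point that high-dimensional states carry more than one secret bit per received photon comes down to simple arithmetic: a photon prepared in a d-dimensional state (for example, one of d time bins) can carry at most log2(d) bits. When the single-photon detectors saturate at some maximum count rate, raising d raises the raw bit rate without requiring more detected photons. The sketch below assumes a hypothetical detector limit of one million counts per second, not a figure from the paper, and the function name `max_raw_bits_per_second` is illustrative.

```python
import math

def max_raw_bits_per_second(detected_photons_per_second, dimension):
    """Upper bound on raw bits per second for d-dimensional encoding.

    Each detected photon in a `dimension`-dimensional state carries at most
    log2(dimension) bits. Sifting, error correction and privacy
    amplification all push the final secret-key rate below this bound.
    """
    if dimension < 2:
        raise ValueError("dimension must be at least 2")
    return detected_photons_per_second * math.log2(dimension)

if __name__ == "__main__":
    # Hypothetical detector limit, not a figure from the paper.
    count_rate = 1_000_000
    for d in (2, 4, 8):
        print(f"d = {d}: at most {max_raw_bits_per_second(count_rate, d):,.0f} raw bits/s")
```

With these assumed numbers, moving from d = 2 to d = 4 doubles the ceiling on raw bits per detected photon, which is the sense in which high-dimensional encoding eases the detector bottleneck.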