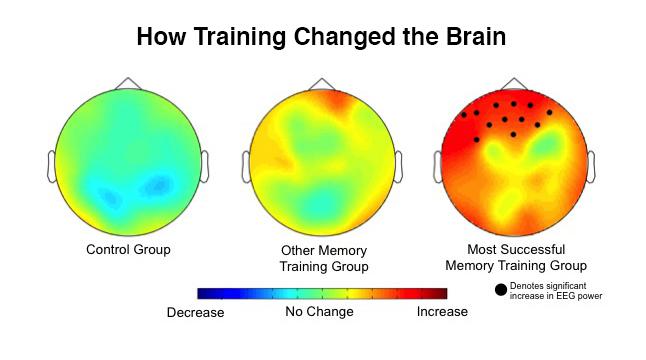

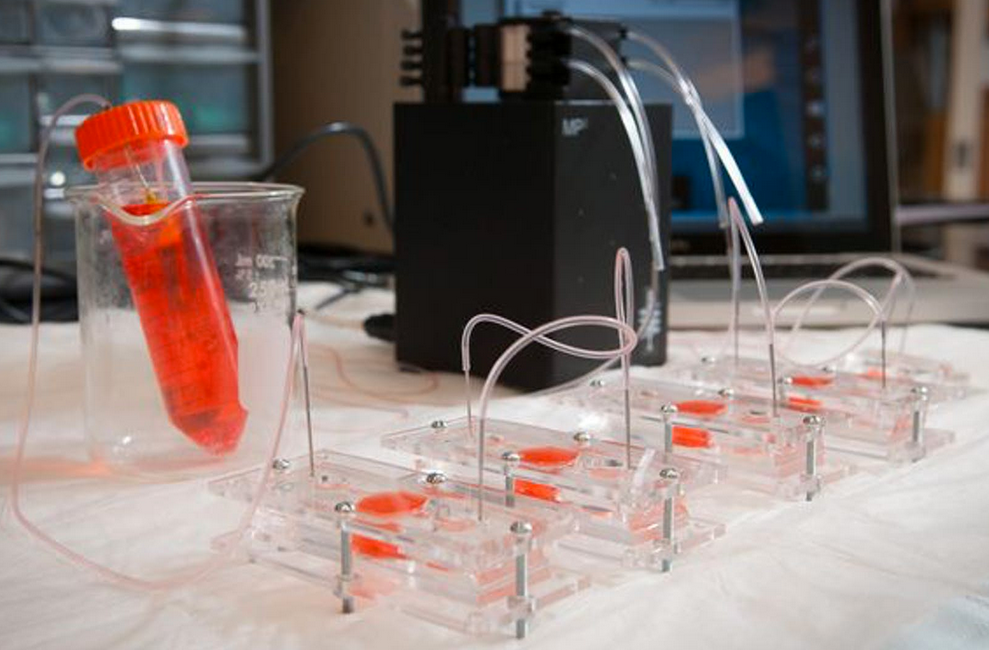

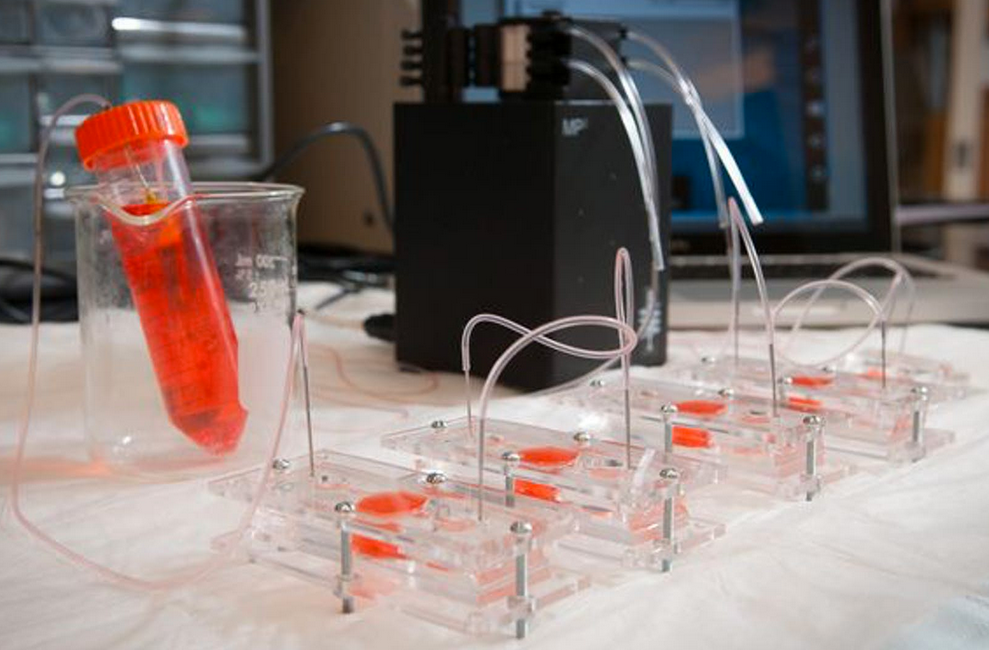

Scientists created miniature models (“organoids”) of heart, liver, and lung in dishes and combined them into an integrated “body-on-a-chip” system fed with nutrient-rich fluid, mimicking blood. (credit: Wake Forest Baptist Medical Center)

A team of scientists at Wake Forest Institute for Regenerative Medicine and nine other institutions has engineered miniature 3D human hearts, lungs, and livers to achieve more realistic testing of how the human body responds to new drugs.

The “body-on-a-chip” project, funded by the Defense Threat Reduction Agency, aims to help reduce the estimated $2 billion cost and 90 percent failure rate that pharmaceutical companies face when developing new medications. The research is described in an open-access paper in Scientific Reports, published by Nature.

Using the same expertise they’ve employed to build new organs for patients, the researchers connected micro-sized 3D liver, heart, and lung organs-on-a-chip (or “organoids”) on a single platform to monitor their function. They selected heart and liver for the system because toxicity to these organs is a major reason for drug candidate failures and drug recalls. They selected lungs because they are the point of entry for toxic particles and for aerosol drugs such as those delivered by asthma inhalers.

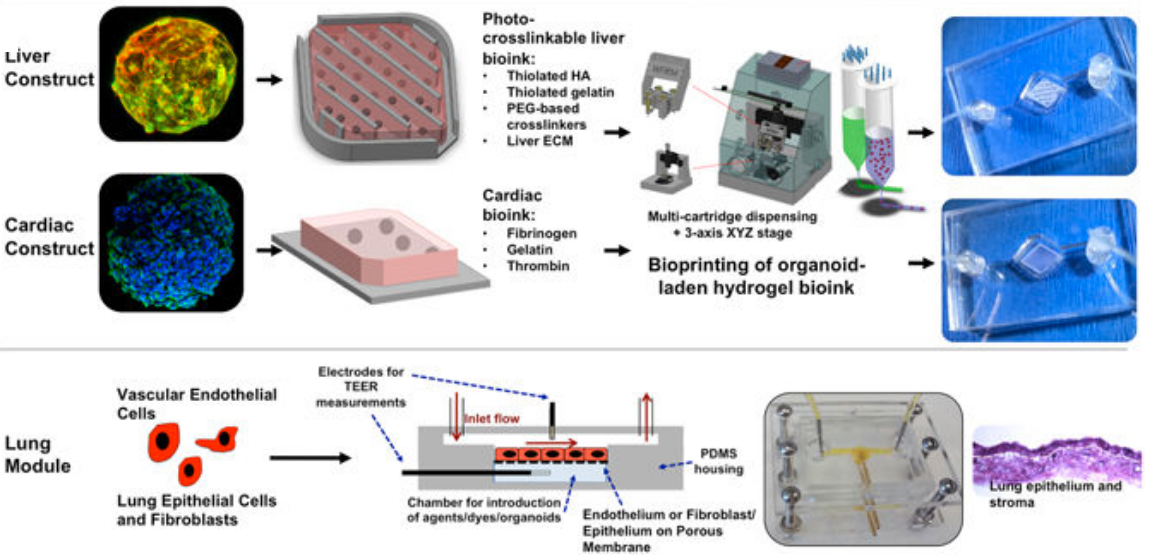

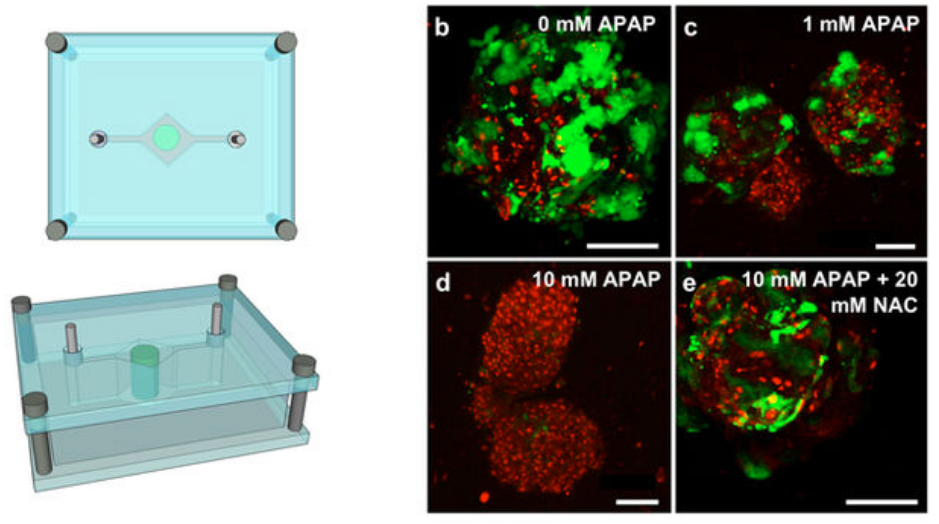

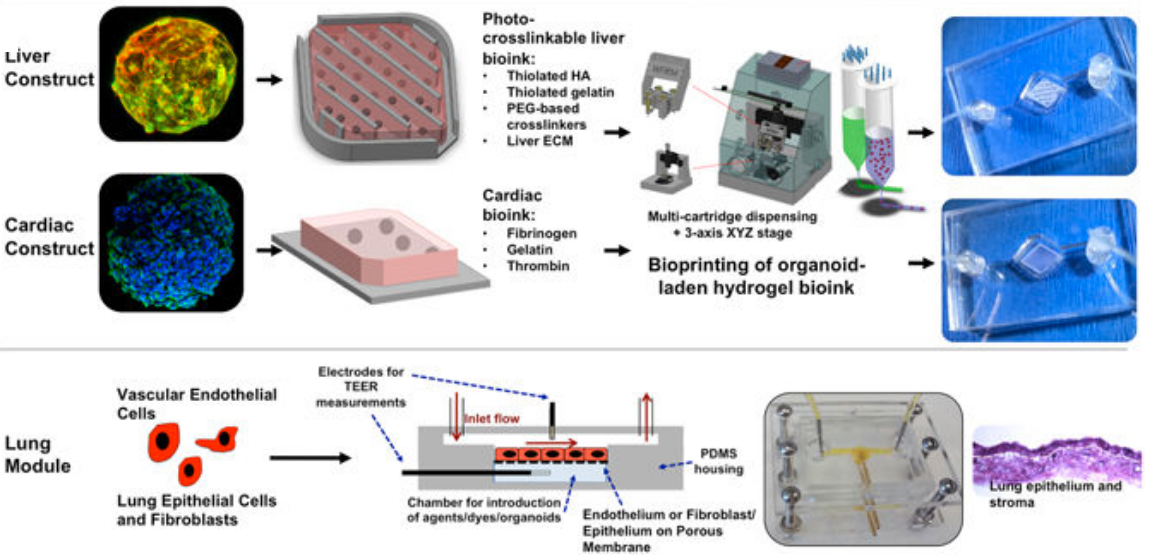

The integrated three-tissue organ-on-a-chip platform combines liver, heart, and lung organoids. (Top) Liver and cardiac modules are created by bioprinting spherical organoids using customized bioinks, resulting in 3D hydrogel constructs (upper left) that are placed into the microreactor devices. (Bottom) Lung modules are formed by creating layers of cells over porous membranes within microfluidic devices. TEER (trans-endothelial [or epithelial] electrical resistance) sensors allow monitoring of tissue barrier integrity over time. The three organoids are placed in a sealed, monitored system with a real-time camera. A nutrient-filled liquid that circulates through the system keeps the organoids alive and is used to introduce potential drug therapies into the system. (credit: Aleksander Skardal et al./Scientific Reports)

Drug compounds are currently screened in the lab using human cells and then tested in animals. But these methods don’t adequately replicate how drugs affect human organs. “If you screen a drug in livers only, for example, you’re never going to see a potential side effect to other organs,” said Aleks Skardal, Ph.D., assistant professor at Wake Forest Institute for Regenerative Medicine and lead author of the paper.

In many cases during testing of new drug candidates — and sometimes even after the drugs have been approved for use — drugs also have unexpected toxic effects in tissues not directly targeted by the drugs themselves, he explained. “By using a multi-tissue organ-on-a-chip system, you can hopefully identify toxic side effects early in the drug development process, which could save lives as well as millions of dollars.”

“There is an urgent need for improved systems to accurately predict the effects of drugs, chemicals and biological agents on the human body,” said Anthony Atala, M.D., director of the institute and senior researcher on the multi-institution study. “The data show a significant toxic response to the drug as well as mitigation by the treatment, accurately reflecting the responses seen in human patients.”

Advanced drug screening, personalized medicine

The scientists ran multiple test scenarios to confirm that the body-on-a-chip system mimics a multi-organ response.

For example, they introduced a drug used to treat cancer into the system. Known to cause scarring of the lungs, the drug also unexpectedly affected the system’s heart. (A control experiment using only the heart failed to show a response.) The scientists theorize that the drug caused inflammatory proteins from the lung to be circulated throughout the system. As a result, the heart’s beat rate increased and the heart later stopped beating altogether, indicating a toxic side effect.

“This was completely unexpected, but it’s the type of side effect that can be discovered with this system in the drug development pipeline,” Skardal noted.

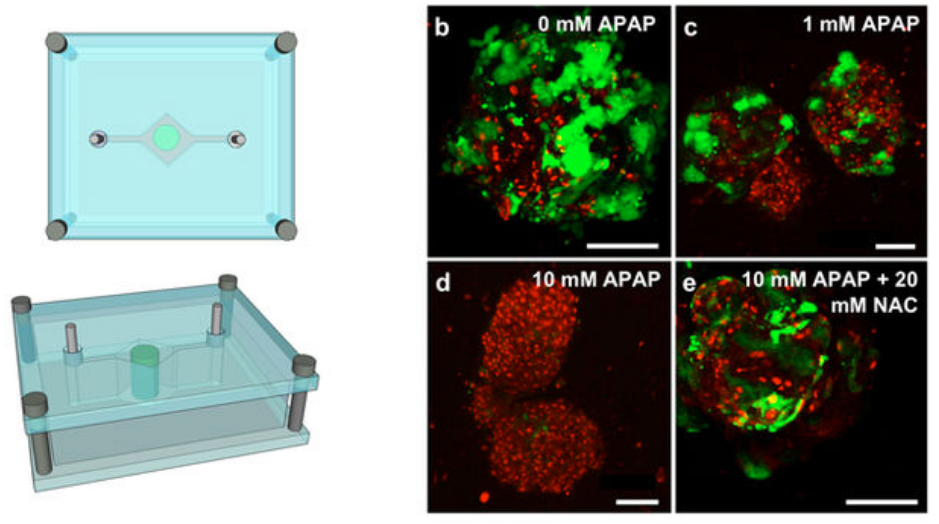

Test of “liver on a chip” response to two drugs to demonstrate clinical relevance. Liver construct toxicity response was assessed following exposure to acetaminophen (APAP) and the clinically used APAP countermeasure N-acetyl-L-cysteine (NAC). Liver constructs in the fluidic system (left) were treated with no drug (b), 1 mM APAP (c), and 10 mM APAP (d), showing progressive loss of function and cell death, compared to 10 mM APAP + 20 mM NAC (e), which mitigated those negative effects. The data show both a significant cytotoxic (cell-damage) response to APAP and its mitigation by NAC treatment, accurately reflecting the clinical responses seen in human patients. (credit: Aleksander Skardal et al./Scientific Reports)

The scientists are now working to increase the speed of the system for large-scale screening and to add more organs.

“Eventually, we expect to demonstrate the utility of a body-on-a-chip system containing many of the key functional organs in the human body,” said Atala. “This system has the potential for advanced drug screening and also to be used in personalized medicine — to help predict an individual patient’s response to treatment.”

Several patent applications covering the technology described in the paper have been filed.

The international collaboration included researchers at Wake Forest Institute for Regenerative Medicine at the Wake Forest School of Medicine, Harvard-MIT Division of Health Sciences and Technology, Wyss Institute for Biologically Inspired Engineering at Harvard University, Biomaterials Innovation Research Center at Harvard Medical School, Bloomberg School of Public Health at Johns Hopkins University, Virginia Tech-Wake Forest School of Biomedical Engineering and Sciences, Brigham and Women’s Hospital, University of Konstanz, Konkuk University (Seoul), and King Abdulaziz University.

Abstract of Multi-tissue interactions in an integrated three-tissue organ-on-a-chip platform

Many drugs have progressed through preclinical and clinical trials and have been available – for years in some cases – before being recalled by the FDA for unanticipated toxicity in humans. One reason for such poor translation from drug candidate to successful use is a lack of model systems that accurately recapitulate normal tissue function of human organs and their response to drug compounds. Moreover, tissues in the body do not exist in isolation, but reside in a highly integrated and dynamically interactive environment, in which actions in one tissue can affect other downstream tissues. Few engineered model systems, including the growing variety of organoid and organ-on-a-chip platforms, have so far reflected the interactive nature of the human body. To address this challenge, we have developed an assortment of bioengineered tissue organoids and tissue constructs that are integrated in a closed circulatory perfusion system, facilitating inter-organ responses. We describe a three-tissue organ-on-a-chip system, comprised of liver, heart, and lung, and highlight examples of inter-organ responses to drug administration. We observe drug responses that depend on inter-tissue interaction, illustrating the value of multiple tissue integration for in vitro study of both the efficacy of and side effects associated with candidate drugs.