Using Watson for enhancing human-computer co-creativity (credit: Georgia Tech)

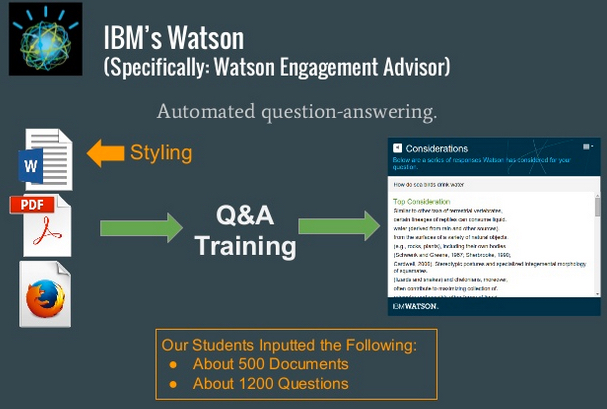

Georgia Institute of Technology researchers, working with student teams, trained a cloud-based version of IBM’s Watson called the Watson Engagement Advisor to provide answers to questions about biologically inspired design (biomimetics), a design paradigm that uses biological systems as analogues for inventing technological systems.

Ashok Goel is a professor at Georgia Tech’s School of Interactive Computing who conducts research on computational creativity. In an experiment, he used this version of Watson as an “intelligent research assistant” to support teaching about biologically inspired design and computational creativity in the Georgia Tech CS4803/8803 class on Computational Creativity in Spring 2015. Goel found that Watson’s ability to retrieve natural language information could allow a novice to quickly “train up” on complex topics and better judge whether an idea or hypothesis is worth pursuing.

An intelligent research assistant

As a class project, the students fed Watson several hundred biology articles from Biologue, an interactive biology repository, along with 1,200 question-answer pairs. The teams then asked Watson how the research it had “learned” could address big design challenges in areas such as engineering, architecture, systems, and computing.

Examples of questions:

“How do you make a better desalination process for making seawater drinkable?” (Animals have a variety of answers for this, such as the special glands seagulls use to remove salt from the seawater they drink.)

“How can manufacturers develop better solar cells for long-term space travel?” One answer: Replicate how plants in harsh climates use high-temperature fibrous insulation material to regulate temperature.

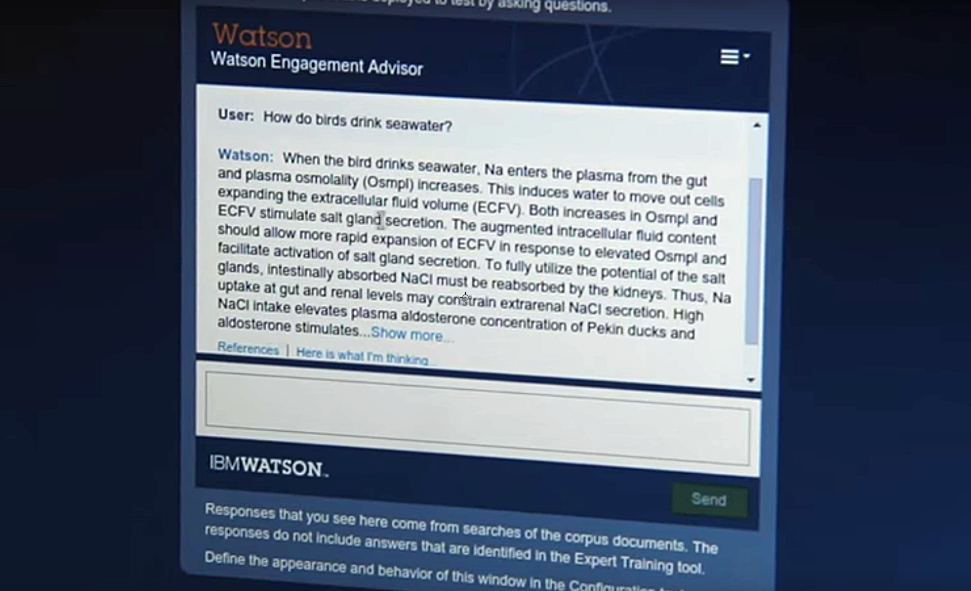

Watson effectively acted as an intelligent sounding board, steering students through what would otherwise be the daunting task of parsing a large volume of research that may fall outside their expertise.

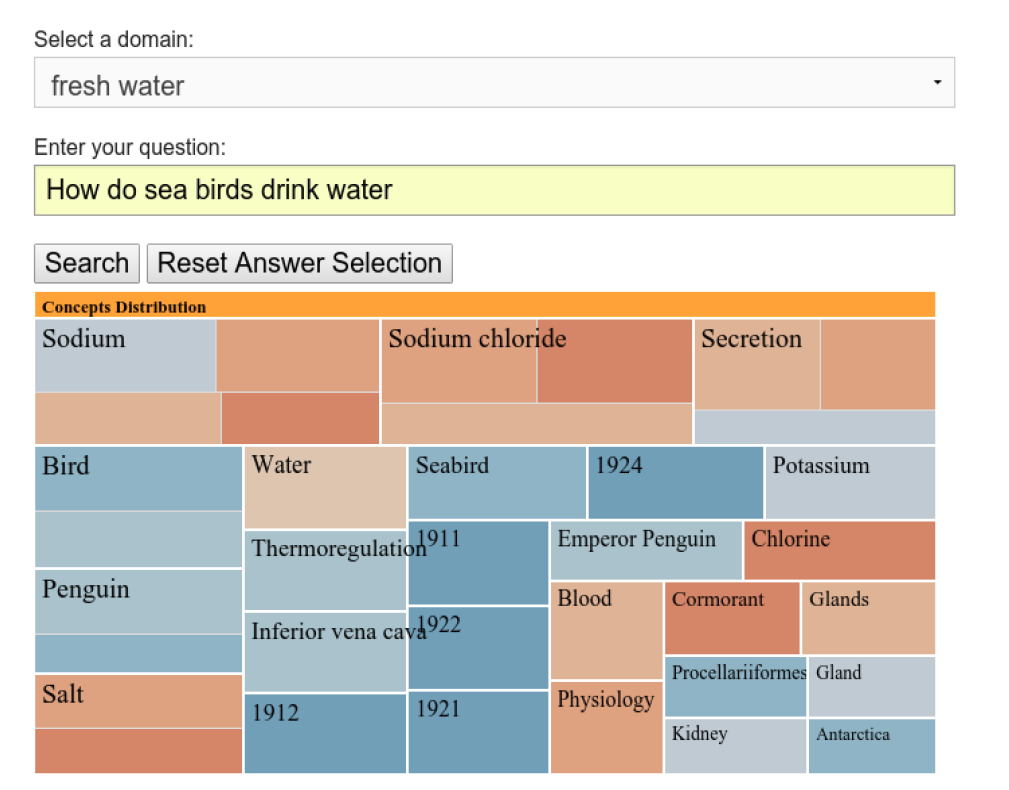

This version of Watson also prompts users with alternate ways to ask questions for better results. Those results are packaged as a “treetop” where each answer is a “leaf” that varies in size based on its weighted importance. This was intended to allow the average user to navigate results more easily on a given topic.

Results from training the Watson AI system were packaged as a “treetop” where each answer is a “leaf” that varies in size based on its weighted importance. Each leaf is the starting point for a Q&A with Watson. (credit: Georgia Tech)

“Imagine if you could ask Google a complicated question and it immediately responded with your answer, not just a list of links to open manually,” says Goel. “That’s what we did with Watson. Researchers are provided a quickly digestible visual map of the concepts relevant to the query and the degree to which they are relevant. We were able to add more semantic and contextual meaning to Watson to give some notion of a conversation with the AI.”

Georgia Tech’s Watson Engagement Advisor (credit: Georgia Tech)

Goel believes this approach to using Watson could assist professionals in a variety of fields by allowing them to ask questions and receive answers as quickly as in a natural conversation. He plans to investigate other areas with Watson such as online learning and healthcare.

The work was presented at the Association for the Advancement of Artificial Intelligence (AAAI) 2015 Fall Symposium on Cognitive Assistance in Government, Nov. 12–14, in Arlington, Va., and was published in the Proceedings of the AAAI 2015 Fall Symposium on Cognitive Assistance (open access).

Abstract of Using Watson for Enhancing Human-Computer Co-Creativity

We describe an experiment in using IBM’s Watson cognitive system to teach about human-computer co-creativity in a Georgia Tech Spring 2015 class on computational creativity. The project-based class used Watson to support biologically inspired design, a design paradigm that uses biological systems as analogues for inventing technological systems. The twenty-four students in the class self-organized into six teams of four students each, and developed semester-long projects that built on Watson to support biologically inspired design. In this paper, we describe this experiment in using Watson to teach about human-computer co-creativity, present one project in detail, and summarize the remaining five projects. We also draw lessons on building on Watson for (i) supporting biologically inspired design, and (ii) enhancing human-computer co-creativity.