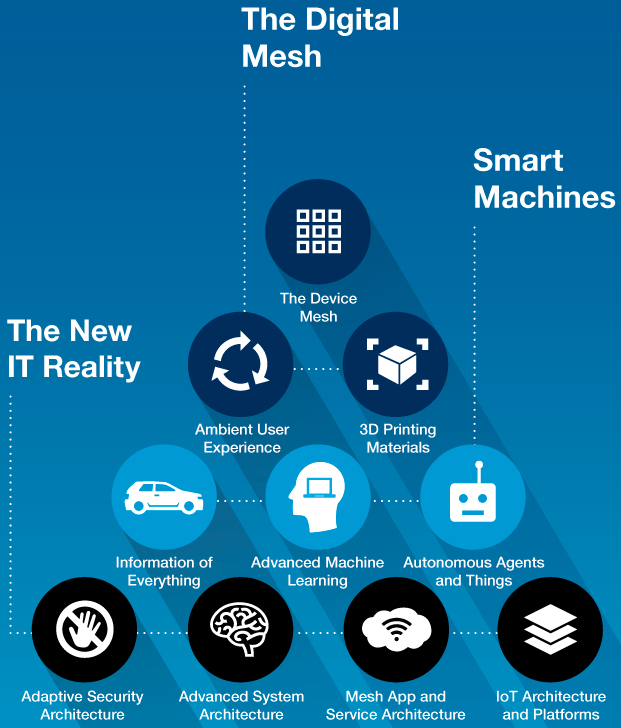

Top 10 strategic trends 2016 (credit: Gartner, Inc.)

At the Gartner Symposium/ITxpo today (Oct. 8), Gartner, Inc. highlighted the top 10 technology trends that will be strategic for most organizations in 2016 and will shape digital business opportunities through 2020.

The Device Mesh

The device mesh refers to the expanding set of endpoints people use to access applications and information or to interact with people, social communities, governments and businesses. It includes mobile devices, wearables, consumer and home electronic devices, automotive devices, and environmental devices such as sensors in the Internet of Things (IoT). The mesh allows for greater cooperative interaction between these devices.

Ambient User Experience

The device mesh creates the foundation for a new continuous and ambient user experience. Immersive environments delivering augmented and virtual reality hold significant potential but are only one aspect of the experience. The ambient user experience preserves continuity across boundaries of the device mesh, time and space. The experience flows seamlessly across a shifting set of devices (such as sensors, cars, and even factories) and interaction channels, blending physical, virtual and electronic environments as the user moves from one place to another.

3D Printing Materials

Advances in 3D printing will drive user demand and a compound annual growth rate of 64.1 percent for enterprise 3D-printer shipments through 2019. To exploit 3D printing, organizations will need to rethink assembly line and supply chain processes.
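To get a feel for what a 64.1 percent compound annual growth rate implies, the short Python sketch below compounds a starting shipment figure year over year. Only the growth rate comes from the forecast above. The baseline of 100,000 units and the four-year horizon are hypothetical placeholders chosen for illustration, not Gartner figures.

```python
def project_shipments(base_units, cagr, years):
    """Return projected units for year 0 through `years` under constant compound growth."""
    return [base_units * (1 + cagr) ** n for n in range(years + 1)]


CAGR = 0.641          # growth rate quoted in the forecast
BASE_UNITS = 100_000  # hypothetical baseline, NOT a Gartner figure
YEARS = 4             # assumed horizon, for illustration only

projection = project_shipments(BASE_UNITS, CAGR, YEARS)
growth_multiple = projection[-1] / projection[0]

for year, units in enumerate(projection):
    print(f"year {year}: {units:,.0f} units")
print(f"growth multiple after {YEARS} years: {growth_multiple:.2f}x")
```

Under these assumptions, shipments grow roughly sevenfold over four years, which suggests why such a rate would put pressure on assembly line and supply chain planning.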

Information of Everything

Everything in the digital mesh produces, uses and transmits information, including sensory and contextual information. “Information of everything” addresses this influx with strategies and technologies to link data from all these different data sources. Advances in semantic tools such as graph databases as well as other emerging data classification and information analysis techniques will bring meaning to the often chaotic deluge of information.

Advanced Machine Learning

In advanced machine learning, deep neural nets (DNNs) move beyond classic computing and information management to create systems that can autonomously learn to perceive the world, making it possible to address key challenges related to the information of everything trend.

DNNs (an advanced form of machine learning particularly applicable to large, complex datasets) are what make smart machines appear “intelligent.” DNNs enable hardware- or software-based machines to learn for themselves all the features in their environment, from the finest details to broad, sweeping abstract classes of content. This area is evolving quickly, and organizations must assess how they can apply these technologies to gain competitive advantage.

Autonomous Agents and Things

Machine learning gives rise to a spectrum of smart machine implementations — including robots, autonomous vehicles, virtual personal assistants (VPAs) and smart advisors — that act in an autonomous (or at least semiautonomous) manner.

VPAs such as Google Now, Microsoft’s Cortana, and Apple’s Siri are becoming smarter and are precursors to autonomous agents. The emerging notion of assistance feeds into the ambient user experience in which an autonomous agent becomes the main user interface. Instead of interacting with menus, forms and buttons on a smartphone, the user speaks to an app, which is really an intelligent agent.

Adaptive Security Architecture

The complexities of digital business and the algorithmic economy, combined with an emerging “hacker industry,” significantly increase the threat surface for an organization. Relying on perimeter defense and rule-based security is inadequate, especially as organizations exploit more cloud-based services and open APIs for customers and partners to integrate with their systems. IT leaders must focus on detecting and responding to threats, as well as on more traditional blocking and other measures to prevent attacks. Application self-protection, as well as user and entity behavior analytics, will help fulfill the adaptive security architecture.

Advanced System Architecture

The digital mesh and smart machines place intense demands on computing architecture if they are to be viable for organizations. Providing the required boost are high-powered and ultraefficient neuromorphic (brain-like) architectures fueled by GPUs (graphics processing units) and field-programmable gate arrays (FPGAs). These architectures offer significant gains, such as the ability to run at speeds of greater than a teraflop with high energy efficiency.

Mesh App and Service Architecture

Monolithic, linear application designs (e.g., the three-tier architecture) are giving way to a more loosely coupled integrative approach: the apps and services architecture. Built on software-defined application services, this approach enables Web-scale performance, flexibility and agility. Microservice architecture is an emerging pattern for building distributed applications that support agile delivery and scalable deployment, both on-premises and in the cloud. Containers are emerging as a critical technology for enabling agile development and microservice architectures. Bringing mobile and IoT elements into the app and service architecture creates a comprehensive model to address back-end cloud scalability and front-end device mesh experiences. Application teams must create modern architectures to deliver agile, flexible and dynamic cloud-based applications that span the digital mesh.

Internet of Things Platforms

IoT platforms complement the mesh app and service architecture. The management, security, integration and other technologies and standards of the IoT platform are the base set of capabilities for building, managing, and securing elements in the IoT. The IoT is an integral part of the digital mesh and ambient user experience, and the emerging and dynamic world of IoT platforms is what makes them possible.

* Gartner defines a strategic technology trend as one with the potential for significant impact on the organization. Factors that denote significant impact include a high potential for disruption to the business, end users or IT, the need for a major investment, or the risk of being late to adopt. These technologies impact the organization’s long-term plans, programs and initiatives.