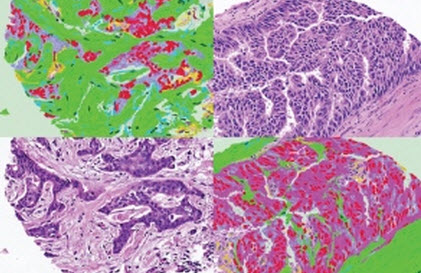

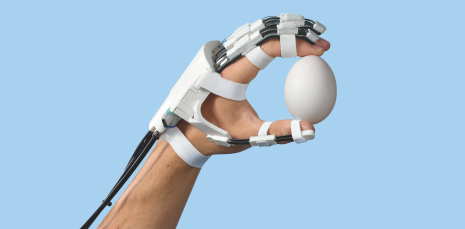

Conventional neural-network image-recognition algorithm trained to recognize human hair (left), compared to the more precise heuristically trained algorithm (right) (credit: Wenzhangzhi Guo and Parham Aarabi/IEEE Trans NN & LS)

A new machine learning algorithm designed by University of Toronto researchers Parham Aarabi and Wenzhi Guo learns directly from human instructions rather than from an existing set of examples, as traditional neural networks do. In tests, it outperformed existing neural networks by 160 per cent.

Their “heuristically trained neural networks” (HNN) algorithm also outperformed its own training by nine per cent: it learned to recognize hair in pictures more reliably than the training alone enabled.

Aarabi and Guo trained their HNN algorithm to identify people’s hair in photographs, a challenging task for computers. “Our algorithm learned to correctly classify difficult, borderline cases — distinguishing the texture of hair versus the texture of the background,” says Aarabi. “What we saw was like a teacher instructing a child, and the child learning beyond what the teacher taught her initially.”

Heuristic training

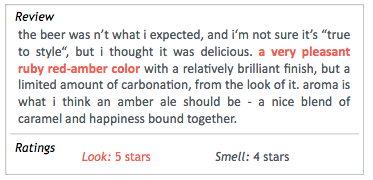

Humans conventionally “teach” neural networks by providing a set of labeled data and asking the neural network to make decisions based on the samples it’s seen. For example, you could train a neural network to identify sky in a photograph by showing it hundreds of pictures with the sky labeled.

With HNN, humans provide direct instructions instead of a fixed set of labeled examples, and those instructions are used to pre-classify the training samples. Trainers program the algorithm with guidelines such as “Sky is likely to be varying shades of blue,” and “Pixels near the top of the image are more likely to be sky than pixels at the bottom.”
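To make the idea of a guideline concrete, here is a minimal Python sketch of how the two sky rules quoted above might be turned into a score that pre-classifies pixels. It is not the researchers' code: the function names `sky_score` and `pre_classify`, the hue target, the 0.6/0.4 weights, and the 0.3/0.7 thresholds are all assumptions chosen for illustration.

```python
import colorsys


def sky_score(r, g, b, row, height):
    """Return a heuristic confidence in [0, 1] that a pixel is sky.

    r, g, b are 0-255 channel values; row 0 is the top of the image.
    All weights and thresholds here are illustrative assumptions.
    """
    h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Rule 1: "Sky is likely to be varying shades of blue."
    # Hue 0.6 is blue; the score falls off linearly and is damped
    # for washed-out (low-saturation) pixels.
    blueness = max(0.0, 1.0 - abs(h - 0.6) / 0.2) * min(1.0, s * 2.0)
    # Rule 2: pixels near the top are more likely to be sky.
    topness = 1.0 - row / max(1, height - 1)
    return 0.6 * blueness + 0.4 * topness


def pre_classify(score, low=0.3, high=0.7):
    """Map a score to a confident label, or to 'uncertain'."""
    if score >= high:
        return "sky"
    if score <= low:
        return "not_sky"
    return "uncertain"


# Usage: a blue pixel in the top row of a 100-row image.
label = pre_classify(sky_score(80, 140, 255, row=0, height=100))
```

Pixels that land in the uncertain band are the ones such rules cannot settle on their own; in the approach described here, those are the cases left for the trained network to decide.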

Aarabi and Guo's work is published in the journal IEEE Transactions on Neural Networks and Learning Systems.

This heuristic-training approach addresses one of the biggest challenges for neural networks: making correct classifications of previously unknown or unlabeled data, the researchers say. This is crucial for applying machine learning to new situations, such as correctly identifying cancerous tissues for medical diagnostics, or classifying all the objects surrounding and approaching a self-driving car.

“Applying heuristic training to hair segmentation is just a start,” says Guo. “We’re keen to apply our method to other fields and a range of applications, from medicine to transportation.”

Abstract of Hair Segmentation Using Heuristically-Trained Neural Networks

We present a method for binary classification using neural networks (NNs) that performs training and classification on the same data using the help of a pretraining heuristic classifier. The heuristic classifier is initially used to segment data into three clusters of high-confidence positives, high-confidence negatives, and low-confidence sets. The high-confidence sets are used to train an NN, which is then used to classify the low-confidence set. Applying this method to the binary classification of hair versus nonhair patches, we obtain a 2.2% performance increase using the heuristically trained NN over the current state-of-the-art hair segmentation method.
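The three-step pipeline in the abstract (heuristic split, training on the high-confidence sets, classification of the low-confidence set) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the paper's implementation: each sample is a hypothetical `(cue, texture)` pair, `heuristic_score` is an invented stand-in for the heuristic classifier, and a single sigmoid unit stands in for the neural network, whose architecture the abstract does not describe.

```python
import math


def heuristic_score(sample):
    """Hypothetical heuristic: confidence in [0, 1] taken from the cue value."""
    cue, _texture = sample
    return min(1.0, max(0.0, cue))


def split_by_confidence(samples, low=0.25, high=0.75):
    """Segment samples into confident positives, confident negatives, and the rest."""
    positives, negatives, uncertain = [], [], []
    for sample in samples:
        score = heuristic_score(sample)
        if score >= high:
            positives.append(sample)
        elif score <= low:
            negatives.append(sample)
        else:
            uncertain.append(sample)
    return positives, negatives, uncertain


def train_unit(positives, negatives, epochs=300, lr=0.5):
    """Fit one sigmoid unit on the texture feature by stochastic gradient descent."""
    data = [(t, 1.0) for _, t in positives] + [(t, 0.0) for _, t in negatives]
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for t, y in data:
            p = 1.0 / (1.0 + math.exp(-(w * t + b)))
            w -= lr * (p - y) * t
            b -= lr * (p - y)
    return w, b


def classify(model, sample):
    """Label a sample positive when the unit's output is at least 0.5."""
    w, b = model
    _cue, texture = sample
    return w * texture + b >= 0.0  # equivalent to sigmoid(...) >= 0.5


# Made-up data: training and classification happen on the same set.
samples = [
    (0.95, 0.90), (0.80, 0.80),  # heuristic is confident: positive
    (0.05, 0.10), (0.20, 0.20),  # heuristic is confident: negative
    (0.50, 0.85), (0.45, 0.15),  # heuristic is unsure
]
positives, negatives, uncertain = split_by_confidence(samples)
model = train_unit(positives, negatives)
labels = [classify(model, s) for s in uncertain]
```

The design point the sketch tries to capture is that the unit never sees a human-labeled example: its training labels come entirely from the heuristic's confident calls, and it is then applied to the samples the heuristic could not decide.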