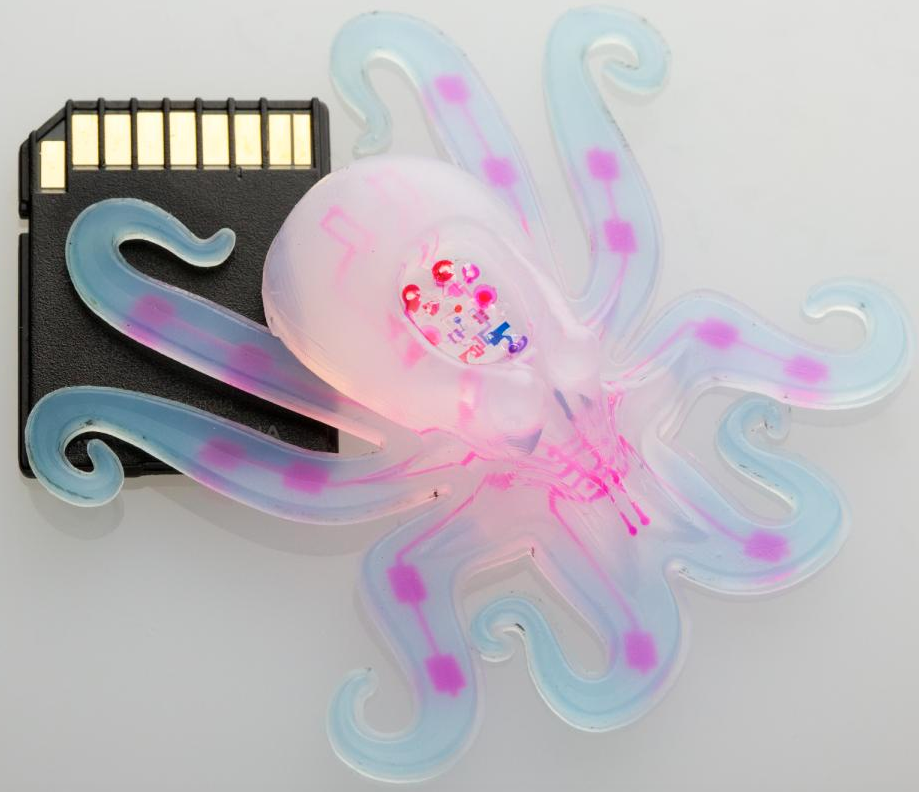

Harvard’s “octobot” is powered by a chemical reaction and controlled with a soft logic board. A reaction inside the bot transforms a small amount of liquid fuel (hydrogen peroxide) into a large amount of oxygen gas, which flows into the octobot’s arms and inflates them like a balloon. The team used a microfluidic logic circuit, a soft analogue of a simple electronic oscillator, to control when hydrogen peroxide decomposes to gas in the octobot. Octopi have long been a source of inspiration in soft robotics. These curious creatures can perform incredible feats of strength and dexterity with no internal skeleton. (SD card shown for scale only.) (credit: Lori Sanders)

The first autonomous, untethered, entirely soft 3-D-printed robot (powered only by a chemical reaction) has been demonstrated by a team of Harvard University researchers and described in the journal Nature.

Nicknamed “octobot,” the bot is fabricated using a combination of soft lithography, molding, and 3-D printing.

“One longstanding vision for the field of soft robotics has been to create robots that are entirely soft, but the struggle has always been in replacing rigid components like batteries and electronic controls with analogous soft systems and then putting it all together,” said Harvard professor Robert Wood. “This research demonstrates that we can easily manufacture the key components of a simple, entirely soft robot, which lays the foundation for more complex designs.”

Powered by hydrogen peroxide

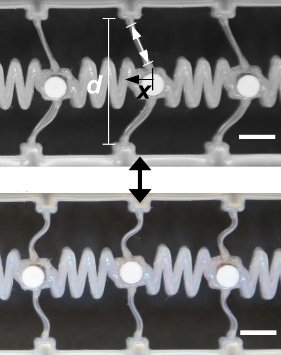

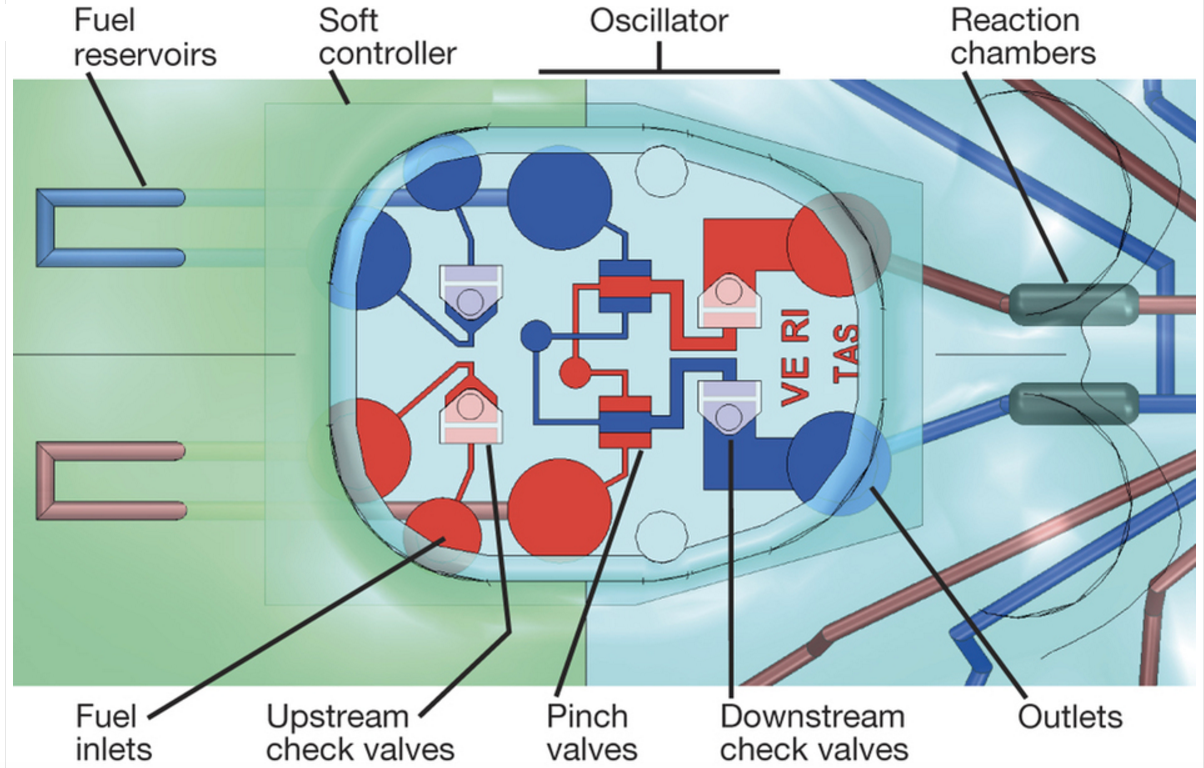

Octobot structure. A system of check valves and switch valves within the soft controller regulates fluid flow into and through the system. The reaction chambers convert the hydrogen peroxide to oxygen, which then inflates the bot arms. The 500-micrometer-high “VERITAS” letters are patterned into the soft controller as an indication of scale. (credit: Michael Wehner et al./Nature)

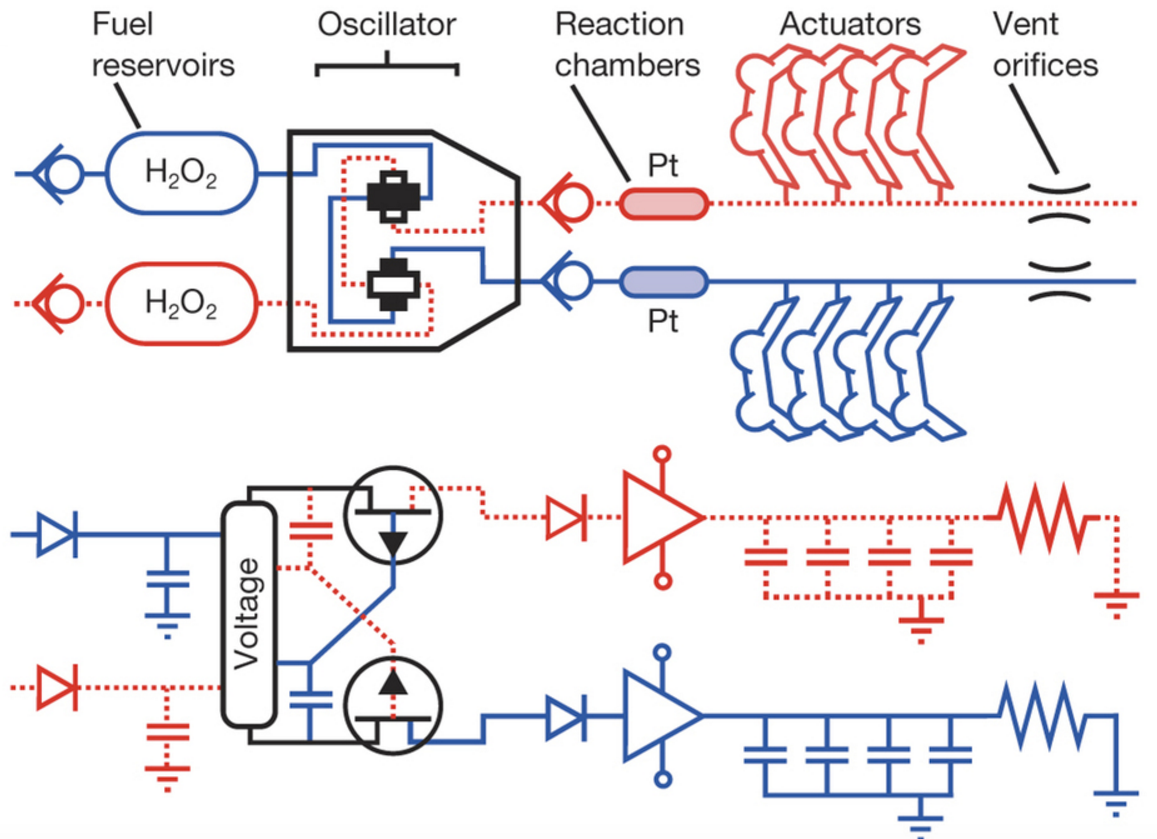

Harvard’s octobot is pneumatic: it is powered by gas under pressure. A reaction inside the bot transforms a small amount of liquid fuel (hydrogen peroxide) into a large amount of oxygen gas, which flows into the octobot’s arms and inflates them like balloons. To control the reaction, the team used a microfluidic logic circuit based on pioneering work by co-author and chemist George Whitesides.
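To give a sense of how "a small amount of liquid fuel" becomes "a large amount of gas," here is a rough back-of-the-envelope sketch in Python. It applies the standard decomposition reaction 2 H2O2 → 2 H2O + O2 and the ideal gas law. The fuel concentration (50 percent by weight), the fuel density, and the room-temperature, atmospheric-pressure conditions are illustrative assumptions, not figures taken from this article, and the function name is hypothetical.

```python
# Rough estimate of the oxygen released when hydrogen peroxide decomposes:
#   2 H2O2 -> 2 H2O + O2
# All default parameter values are illustrative assumptions.

H2O2_MOLAR_MASS = 34.01  # grams per mole
GAS_CONSTANT = 8.314     # joules per (mole * kelvin)


def oxygen_volume_ml(
    fuel_volume_ml,
    weight_fraction=0.5,         # assumed: 50 wt% aqueous hydrogen peroxide
    fuel_density_g_per_ml=1.2,   # assumed: approximate density of that solution
    temperature_k=298.15,        # assumed: room temperature
    pressure_pa=101_325.0,       # assumed: one atmosphere
):
    """Return the ideal-gas volume of O2 (in mL) from fully decomposing the fuel."""
    fuel_mass_g = fuel_volume_ml * fuel_density_g_per_ml
    h2o2_moles = fuel_mass_g * weight_fraction / H2O2_MOLAR_MASS
    o2_moles = h2o2_moles / 2.0  # two H2O2 molecules yield one O2 molecule
    volume_m3 = o2_moles * GAS_CONSTANT * temperature_k / pressure_pa
    return volume_m3 * 1e6       # cubic meters to milliliters


if __name__ == "__main__":
    print(f"{oxygen_volume_ml(1.0):.0f} mL of O2 per mL of fuel")
```

Under these assumptions, one milliliter of fuel yields on the order of 200 milliliters of oxygen at atmospheric pressure. Inside the robot the gas is held at higher pressure and occupies less volume, so the estimate is an upper bound on the expansion rather than a measurement.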

Octobot mechanical schematic (top) and electronic analogue (bottom). Check valves, fuel tanks, oscillator, reaction chambers, actuators and vent orifices are analogous to diodes, supply capacitors, electrical oscillator, amplifiers, capacitors and pull-down resistors, respectively. (credit: Michael Wehner et al./Nature)

The circuit, a soft analogue of a simple electronic oscillator, controls when hydrogen peroxide decomposes to gas in the octobot, triggering actuators.
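The oscillator idea can be illustrated with a toy model in Python. This is only a sketch of the general behavior of a relaxation-style oscillator, not the microfluidic physics of the actual controller. It assumes the circuit routes fuel to one of two channels at a time and flips to the other once upstream pressure reaches a threshold; the two-channel layout, the function name, and every parameter value are hypothetical.

```python
# Toy model of an oscillator that alternates fuel flow between two channels.
# This is an illustrative abstraction; the names and numbers are assumptions.


def simulate_oscillator(steps, fill_rate=1.0, switch_threshold=5.0):
    """Return the channel ('A' or 'B') that receives fuel at each time step."""
    active = "A"
    pressure = 0.0
    schedule = []
    for _ in range(steps):
        schedule.append(active)   # fuel flows to the active channel this step
        pressure += fill_rate     # pressure builds while the channel is open
        if pressure >= switch_threshold:
            # Threshold reached: the switch valve flips to the other channel
            # and the pressure that drove the flip is released.
            active = "B" if active == "A" else "A"
            pressure = 0.0
    return schedule


if __name__ == "__main__":
    print("".join(simulate_oscillator(12, fill_rate=1.0, switch_threshold=3.0)))
```

In this model the switching period depends only on the fill rate and the threshold, which mirrors how an electronic oscillator's period is set by its component values rather than by an external clock.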

The proof-of-concept octobot could pave the way for a new generation of entirely soft, autonomous robots, which the researchers suggest could help revolutionize how humans interact with machines. They hope their approach to creating autonomous soft robots inspires roboticists, materials scientists, and researchers focused on advanced manufacturing.

Next, the Harvard team hopes to design an octobot that can crawl, swim, and interact with its environment.

Robert Wood, the Charles River Professor of Engineering and Applied Sciences, and Jennifer A. Lewis, the Hansjörg Wyss Professor of Biologically Inspired Engineering, both at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), led the research. Lewis and Wood are also core faculty members of the Wyss Institute for Biologically Inspired Engineering at Harvard University. George Whitesides is the Woodford L. and Ann A. Flowers University Professor and a core faculty member of the Wyss Institute.

The research was supported by the National Science Foundation through the Materials Research Science and Engineering Center at Harvard and by the Wyss Institute.

Harvard University | Introducing the Octobot

Harvard University | Powering the Octobot: A chemical reaction

Abstract of An integrated design and fabrication strategy for entirely soft, autonomous robots

Soft robots possess many attributes that are difficult, if not impossible, to achieve with conventional robots composed of rigid materials. Yet, despite recent advances, soft robots must still be tethered to hard robotic control systems and power sources. New strategies for creating completely soft robots, including soft analogues of these crucial components, are needed to realize their full potential. Here we report the untethered operation of a robot composed solely of soft materials. The robot is controlled with microfluidic logic that autonomously regulates fluid flow and, hence, catalytic decomposition of an on-board monopropellant fuel supply. Gas generated from the fuel decomposition inflates fluidic networks downstream of the reaction sites, resulting in actuation. The body and microfluidic logic of the robot are fabricated using moulding and soft lithography, respectively, and the pneumatic actuator networks, on-board fuel reservoirs and catalytic reaction chambers needed for movement are patterned within the body via a multi-material, embedded 3D printing technique. The fluidic and elastomeric architectures required for function span several orders of magnitude from the microscale to the macroscale. Our integrated design and rapid fabrication approach enables the programmable assembly of multiple materials within this architecture, laying the foundation for completely soft, autonomous robots.