Would you trust this robot? (credit: Rethink Robotics)

Trust in robots is a critical component of safety that requires study, says MIT Professor Emeritus Thomas B. Sheridan in an open-access review published in the journal Human Factors.

Sheridan has studied humans and automation for decades. In this review, he looks at self-driving cars and highly automated transit systems; routine tasks such as package delivery in Amazon warehouses; devices that handle tasks in hazardous or inaccessible environments, such as the Fukushima nuclear plant; and robots that engage in social interaction, such as Barbie dolls. In each case, he notes significant human factors challenges, particularly concerning safety.

For example, he argues that no human driver will stay alert enough to take over control of a self-driving car quickly should the automation fail. Nor does self-driving car technology account for the value of social interaction between drivers, such as eye contact and hand signals. And would airline passengers be happy if computerized monitoring replaced the second pilot?

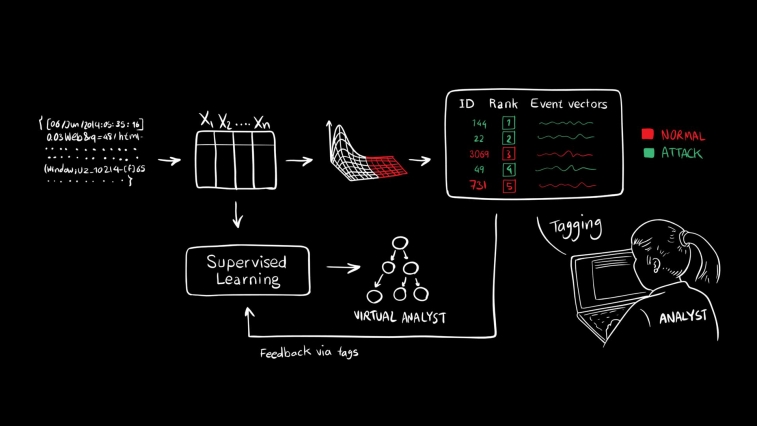

A robot designed to move an elderly person in and out of bed could reduce back injuries among human caregivers, but many questions remain about what physical form such a robot should take. Hospital patients, meanwhile, may feel alienated by robots delivering their food trays. The ability of robots to learn from human feedback is an area that demands human factors research, as does understanding how people of different ages and abilities best learn from robots.

Sheridan also challenges the human factors community to address the inevitable trade-offs: the possibility of robots providing jobs rather than taking them away, robots as assistants that enhance human self-worth instead of diminishing it, and robots that improve security rather than jeopardize it.

Abstract of Human–Robot Interaction: Status and Challenges

Objective: The current status of human–robot interaction (HRI) is reviewed, and key current research challenges for the human factors community are described.

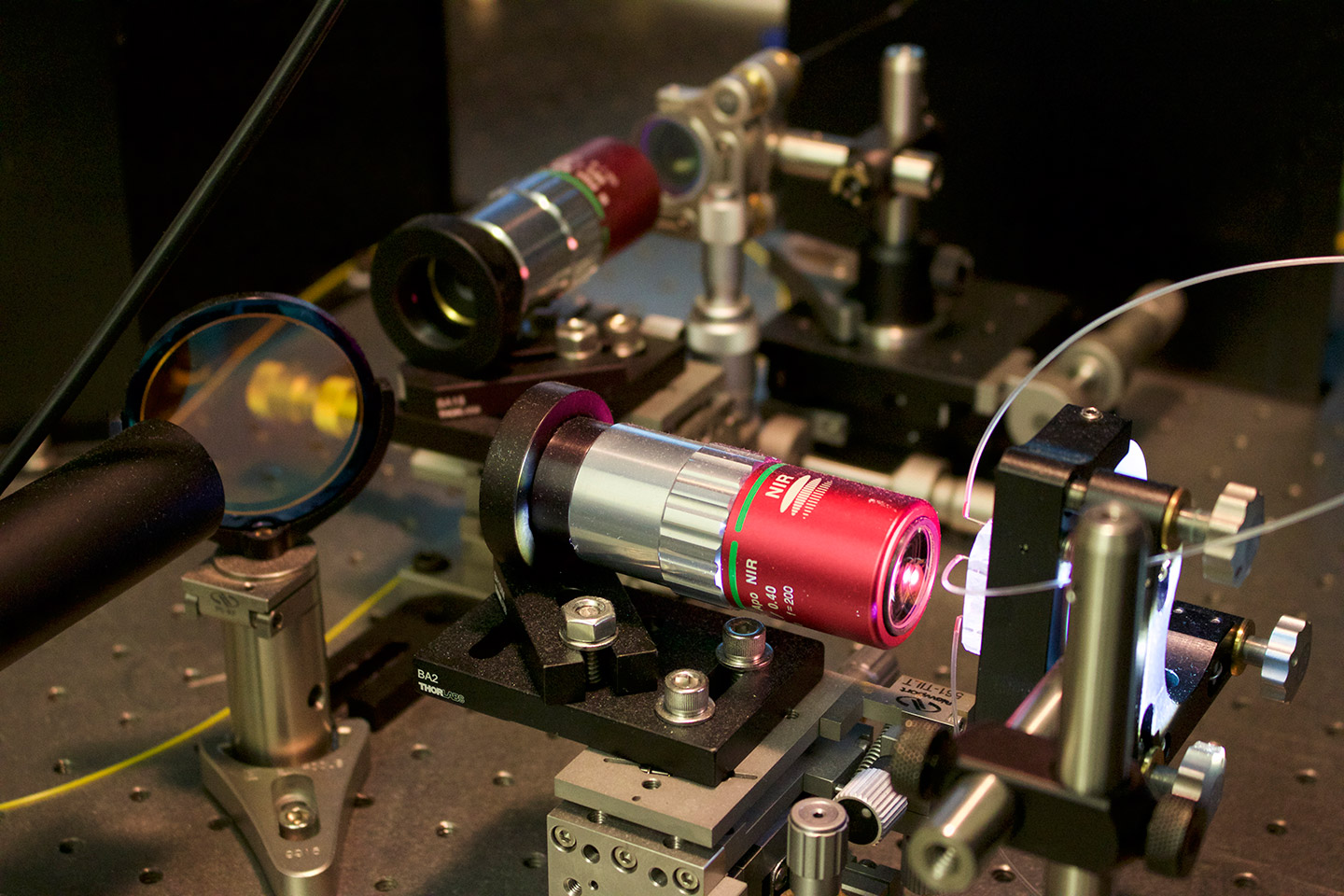

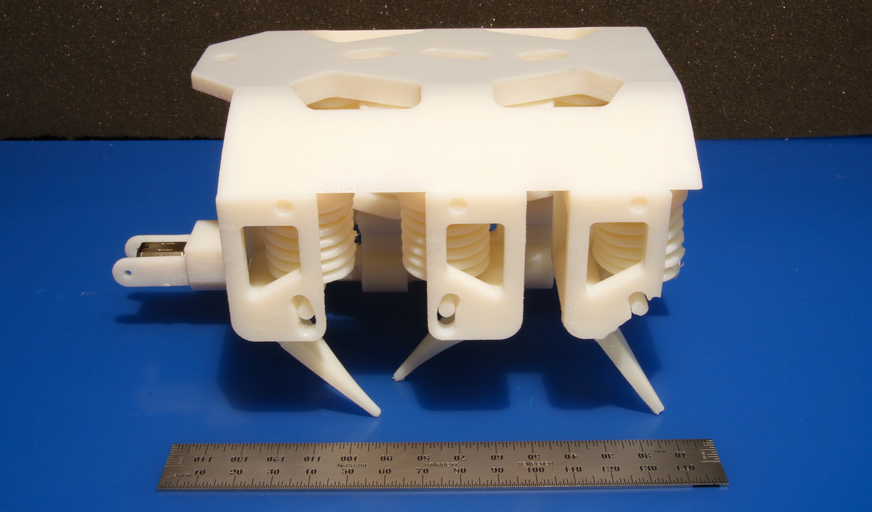

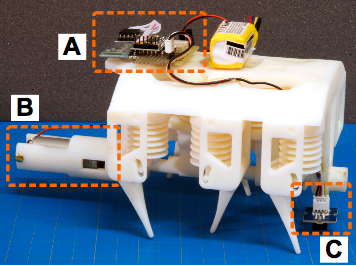

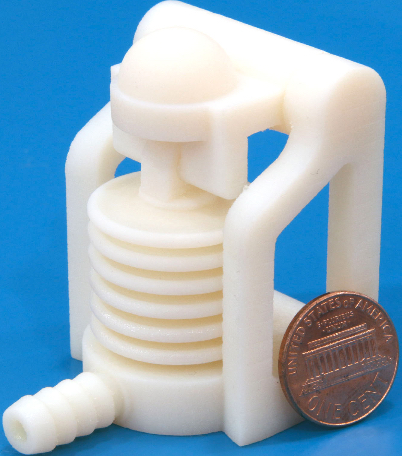

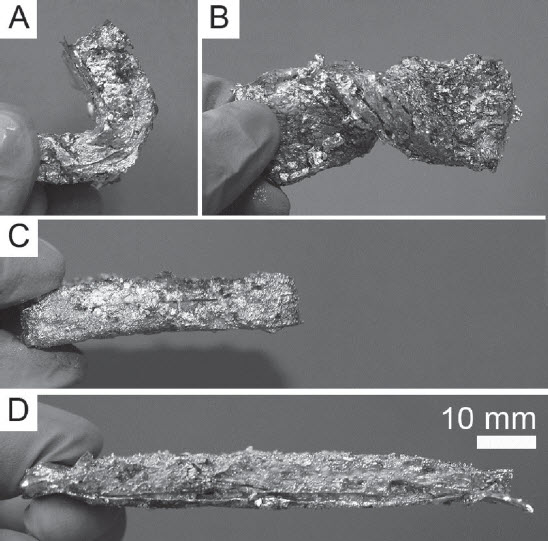

Background: Robots have evolved from continuous human-controlled master–slave servomechanisms for handling nuclear waste to a broad range of robots incorporating artificial intelligence for many applications and under human supervisory control.

Methods: This mini-review describes HRI developments in four application areas and the challenges they pose for human factors research.

Results: In addition to a plethora of research papers, evidence of success is manifest in live demonstrations of robot capability under various forms of human control.

Conclusions: HRI is a rapidly evolving field. Specialized robots under human teleoperation have proven successful in hazardous environments and medical application, as have specialized telerobots under human supervisory control for space and repetitive industrial tasks. Research in areas of self-driving cars, intimate collaboration with humans in manipulation tasks, human control of humanoid robots for hazardous environments, and social interaction with robots is at initial stages. The efficacy of humanoid general-purpose robots has yet to be proven.

Applications: HRI is now applied in almost all robot tasks, including manufacturing, space, aviation, undersea, surgery, rehabilitation, agriculture, education, package fetch and delivery, policing, and military operations.