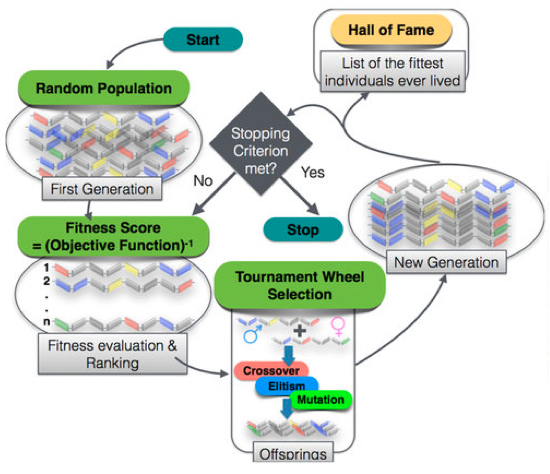

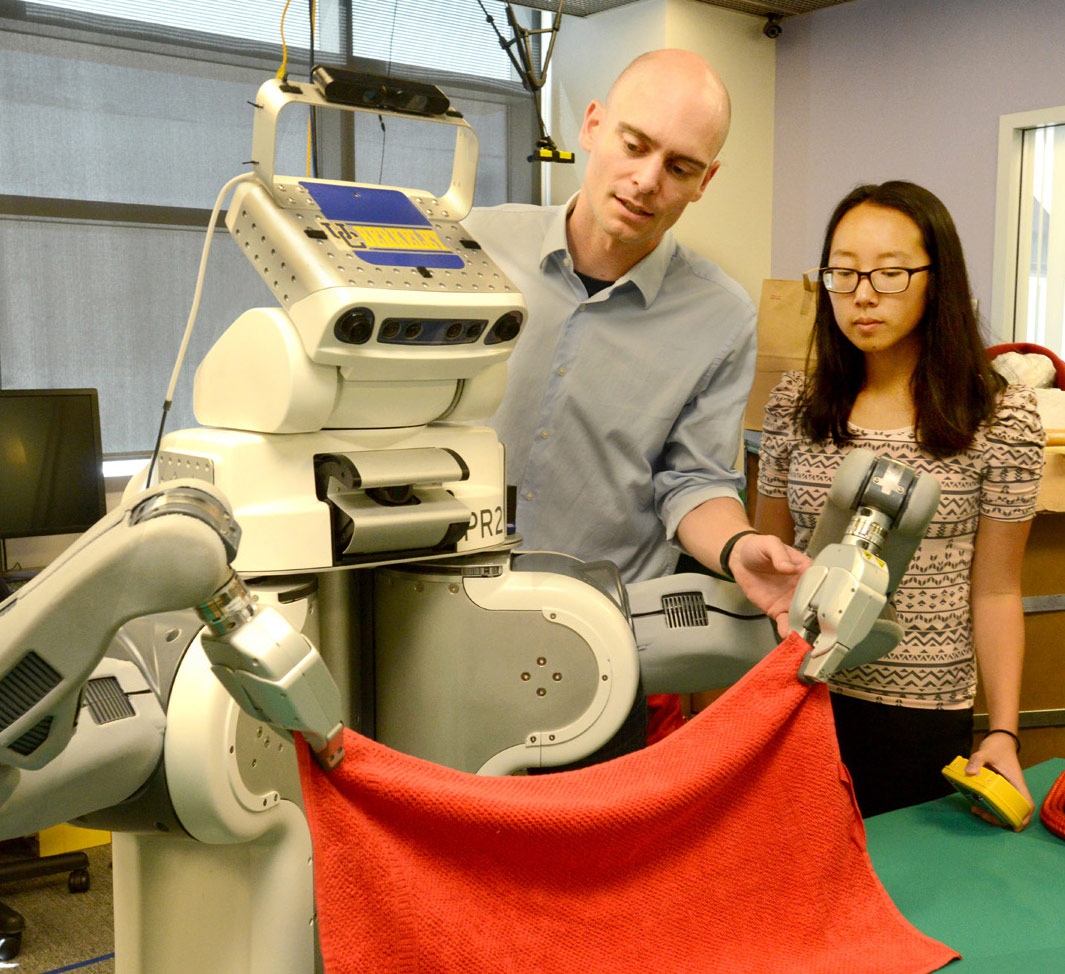

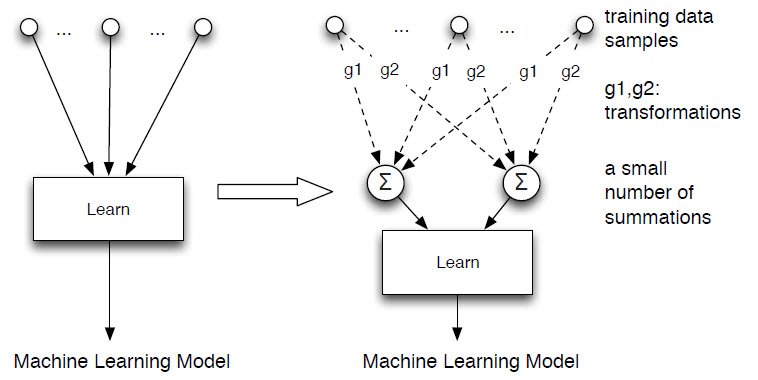

The novel approach to making systems forget data is called “machine unlearning” by the two researchers who are pioneering the concept. Instead of making a model directly depend on each training data sample (left), they convert the learning algorithm into a summation form (right) – a process that is much easier and faster than retraining the system from scratch. (credit: Yinzhi Cao and Junfeng Yang)

Machine learning systems are becoming ubiquitous, but what about false or damaging information about you (and others) that these systems have learned? Can that information ever be corrected? This raises some heavy security and privacy questions. Ever Google yourself?

Some background: machine-learning software programs calculate predictive relationships from massive amounts of data. The systems identify these predictive relationships using advanced algorithms — a set of rules for solving math problems — and “training data.” This data is then used to construct the models and features that enable a system to predict things, like the probability of rain next week or when the Zika virus will arrive in your town.

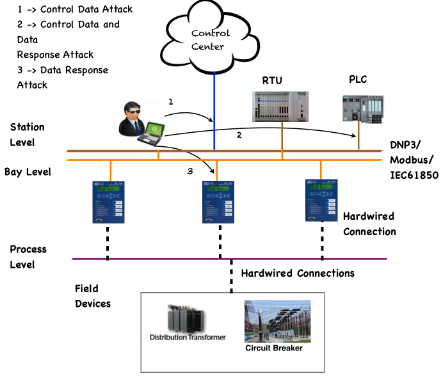

This intricate process means that a piece of raw data often goes through a series of computations in a system. The computations and information derived by the system from that data together form a complex propagation network called the data’s “lineage” (a term coined by Yinzhi Cao, a Lehigh University assistant professor of computer science and engineering, and his colleague, Junfeng Yang of Columbia University).

“Effective forgetting systems must be able to let users specify the data to forget with different levels of granularity,” said Cao. “These systems must remove the data and undo its effects so that all future operations run as if the data never existed.”

Widely used learning systems such as Google Search are, for the most part, only able to forget a user’s raw data upon request, not the data’s lineage (what the user’s data connects to). However, in October 2014, Google removed more than 170,000 links to comply with the European court ruling on the “right to be forgotten,” which affirmed users’ right to control what appears when their names are searched. In July 2015, Google said it had received more than a quarter-million such requests.

How “machine unlearning” works

Now the two researchers say they have developed a way to forget faster and more effectively. Their concept, called “machine unlearning,” led to a four-year, $1.2 million National Science Foundation grant to develop the approach.

Building on work that was presented at the 2015 IEEE Symposium on Security and Privacy and then published, Cao and Yang’s “machine unlearning” method is based on the assumption that most learning systems can be converted into a form that can be updated incrementally without costly retraining from scratch.

Their approach inserts a small layer of summations between the learning algorithm and the training data, removing the direct dependency between the two. The learning algorithm then depends only on the summations, not on individual data samples.

Using this method, unlearning a piece of data and its lineage no longer requires rebuilding the models and features that predict relationships between pieces of data. Simply recomputing a small number of summations would remove the data and its lineage completely — and much faster than through retraining the system from scratch, the researchers claim.
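To make the idea concrete, here is a minimal sketch in Python. It is not Cao and Yang’s implementation; it is a toy naive Bayes classifier, chosen here only because its entire state is a handful of counts (summations). The class name `CountingNaiveBayes`, its methods, and the sample data are all hypothetical. Forgetting a sample subtracts that sample’s contribution from the sums, and the final check confirms the result matches a model retrained from scratch without the sample.

```python
from collections import defaultdict
import math


class CountingNaiveBayes:
    """Toy classifier (hypothetical) whose only state is a few running sums."""

    def __init__(self):
        # Sum over samples of 1[label == c]
        self.class_counts = defaultdict(int)
        # Sum over samples of 1[label == c and feature f is present]
        self.feature_counts = defaultdict(int)

    def learn(self, features, label):
        self._update(features, label, +1)

    def unlearn(self, features, label):
        # Forgetting = subtracting the sample's contribution from each sum.
        self._update(features, label, -1)

    def _update(self, features, label, sign):
        self.class_counts[label] += sign
        for f in set(features):
            self.feature_counts[(label, f)] += sign

    def sums(self):
        """Return the non-zero summations, i.e. the model's entire state."""
        return (
            {k: v for k, v in self.class_counts.items() if v},
            {k: v for k, v in self.feature_counts.items() if v},
        )

    def predict(self, features):
        # Prediction reads only the sums, never the raw training samples.
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            if n <= 0:
                continue
            score = math.log(n / total)
            for f in set(features):
                count = self.feature_counts.get((label, f), 0)
                score += math.log((count + 1) / (n + 2))  # Laplace smoothing
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up training data; the third sample is the one to be forgotten.
training = [
    (["cheap", "pills"], "spam"),
    (["meeting", "agenda"], "ham"),
    (["cheap", "agenda"], "spam"),
    (["lunch", "meeting"], "ham"),
]

model = CountingNaiveBayes()
for features, label in training:
    model.learn(features, label)

# Unlearn by updating a few sums instead of retraining.
model.unlearn(*training[2])

# Reference: retrain from scratch without the forgotten sample.
retrained = CountingNaiveBayes()
for i, (features, label) in enumerate(training):
    if i != 2:
        retrained.learn(features, label)

assert model.sums() == retrained.sums()
```

In this sketch the cost of `unlearn` depends only on the number of features in the forgotten sample, while retraining has to revisit every remaining sample. Real systems are more involved, since the summations must also cover stages such as feature selection.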

Verification?

Cao and Yang tested their unlearning approach on four diverse, real-world systems: LensKit, an open-source recommendation system; Zozzle, a closed-source JavaScript malware detector; an open-source spam filter for online social networks (OSN); and PJScan, an open-source PDF malware detector.

Cao and Yang are now adapting the technique to other systems and creating verifiable machine unlearning to statistically test whether unlearning has indeed repaired a system or completely wiped out unwanted data.

“We foresee easy adoption of forgetting systems because they benefit both users and service providers,” they said. “With the flexibility to request that systems forget data, users have more control over their data, so they are more willing to share data with the systems.”

The researchers envision “forgetting systems playing a crucial role in emerging data markets where users trade data for money, services, or other data, because the mechanism of forgetting enables a user to cleanly cancel a data transaction or rent out the use rights of her data without giving up the ownership.”

Editor’s comments: I’d like to see case studies and critical reviews of this software by independent security and privacy experts. Yes, I’m paranoid but… etc. Your suggestions? To be continued…

Abstract of Towards Making Systems Forget with Machine Unlearning

Today’s systems produce a rapidly exploding amount of data, and the data further derives more data, forming a complex data propagation network that we call the data’s lineage. There are many reasons that users want systems to forget certain data including its lineage. From a privacy perspective, users who become concerned with new privacy risks of a system often want the system to forget their data and lineage. From a security perspective, if an attacker pollutes an anomaly detector by injecting manually crafted data into the training data set, the detector must forget the injected data to regain security. From a usability perspective, a user can remove noise and incorrect entries so that a recommendation engine gives useful recommendations. Therefore, we envision forgetting systems, capable of forgetting certain data and their lineages, completely and quickly. This paper focuses on making learning systems forget, the process of which we call machine unlearning, or simply unlearning. We present a general, efficient unlearning approach by transforming learning algorithms used by a system into a summation form. To forget a training data sample, our approach simply updates a small number of summations — asymptotically faster than retraining from scratch. Our approach is general, because the summation form is from the statistical query learning in which many machine learning algorithms can be implemented. Our approach also applies to all stages of machine learning, including feature selection and modeling. Our evaluation, on four diverse learning systems and real-world workloads, shows that our approach is general, effective, fast, and easy to use.