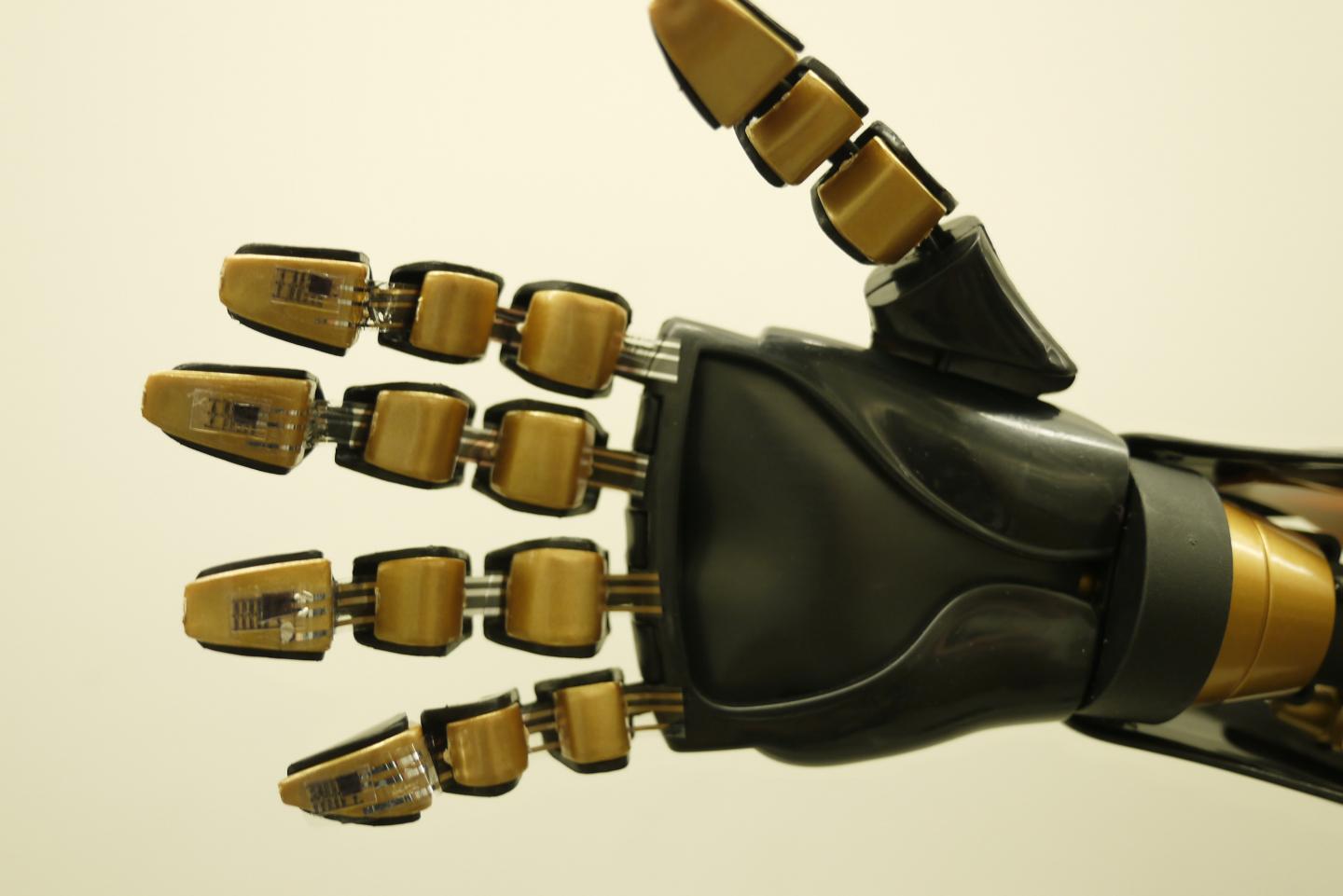

The Harvard RoboBee concept (credit: Harvard Microrobotics Lab)

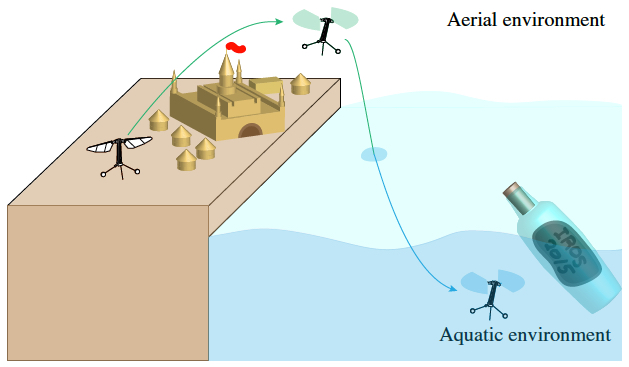

In 1939, Russian engineer Boris Ushakov proposed a “flying submarine” — a cool James Bond-style vehicle that could seamlessly transition from air to water and back again. Ever since, engineers have been trying to design one, with little success. The biggest challenge: aerial vehicles require large airfoils like wings or sails to generate lift, while underwater vehicles need to minimize surface area to reduce drag.

Engineers at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) decided to try it with a new version of their RoboBee microbot (see “A robotic insect makes first controlled test flight”), taking a cue from puffins. These birds with flamboyant beaks use similar flapping motions in air and in water.

Video: Harvard University | RoboBee: From Aerial to Aquatic

But to make that work, the team had to tackle four thorny problems:

Surface tension. The RoboBee is so small and lightweight that it cannot break the surface tension of the water. To overcome this hurdle, the RoboBee hovers over the water at an angle, momentarily switches off its wings, and then crashes unceremoniously into the water to make itself sink.

Water’s density. Water is about 1,000 times denser than air, so flapping at flight speed would snap the wings off the RoboBee. Solution: the team lowered the flapping rate from 120 flaps per second to nine but kept the flapping mechanism and hinge design the same. A swimming RoboBee changes direction by adjusting the stroke angle of its wings, just as it does in air.

Shorting out. Like the flying version, the swimming RoboBee is tethered to a power source, so water could short-circuit its electronics. Solution: use deionized water and coat the electrical connections with glue.

Moving from water to air. Problem: the RoboBee can’t generate enough lift to leave the water without snapping one of its wings. The researchers say they’re working on that next.

“We believe the RoboBee has the potential to become the world’s first successful dual aerial, aquatic insect-scale vehicle,” the researchers claim in a paper presented at the International Conference on Intelligent Robots and Systems in Germany. The research was funded by the National Science Foundation and the Wyss Institute for Biologically Inspired Engineering.

Hmm, maybe we’ll see a vehicle based on the RoboBee in a future Bond film?