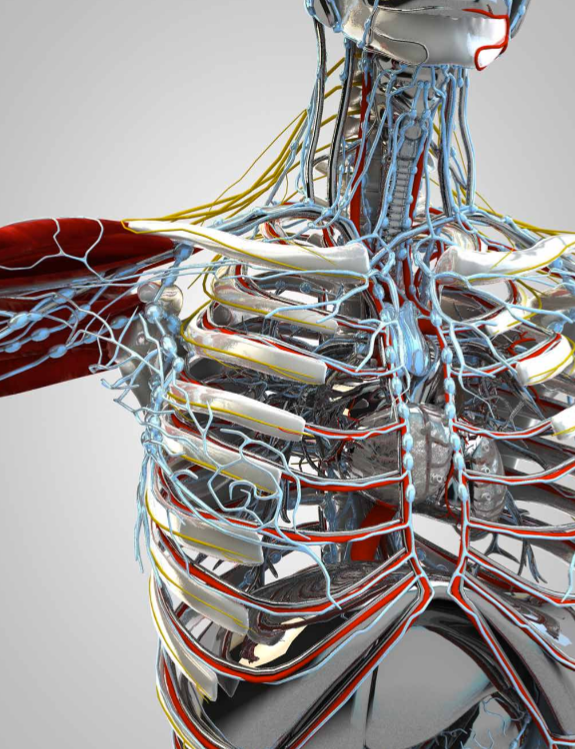

Illustration of single-atom-layer “atomristors” — the thinnest-ever memory-storage device (credit: Cockrell School of Engineering, The University of Texas at Austin)

A team of electrical engineers at The University of Texas at Austin and scientists at Peking University has developed a one-atom-thick 2D “atomristor” memory storage device that may lead to faster, smaller, smarter computer chips.

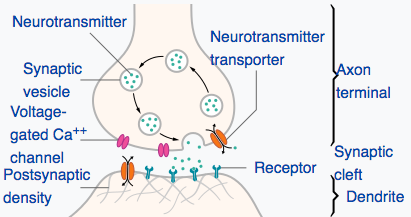

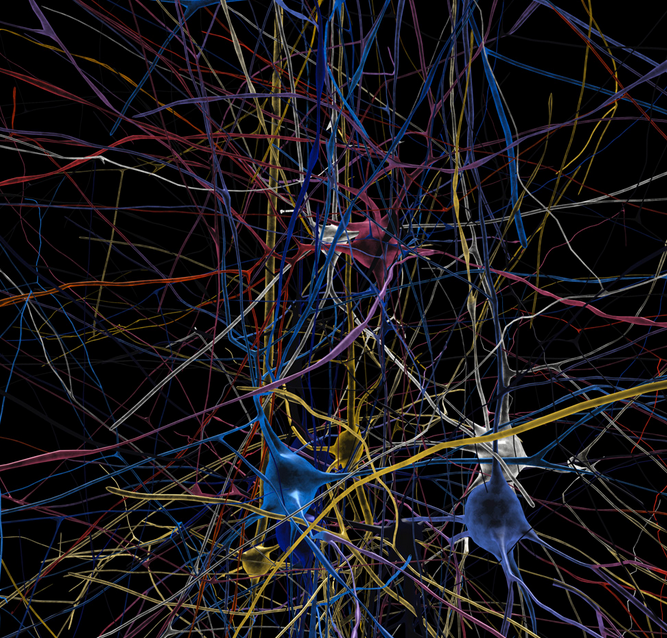

The atomristor (atomic memristor) improves upon memristor (memory resistor) memory storage technology by using atomically thin nanomaterials (atomic sheets). (Memristors, which combine memory and logic functions much like the synapses of biological brains, "remember" their previous state after being turned off.)
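To make the idea of a device that "remembers" its state concrete, here is a toy sketch of nonvolatile resistance switching. It is not the model used in the Nano Letters paper. It assumes a simple bipolar scheme in which a voltage above a hypothetical SET threshold puts the cell into a low-resistance state and a voltage below a hypothetical RESET threshold returns it to a high-resistance state. The class name `ToyAtomristor` and every numeric value are illustrative assumptions, not measured device parameters.

```python
from dataclasses import dataclass


@dataclass
class ToyAtomristor:
    """Hypothetical two-state model of bipolar nonvolatile resistance switching.

    All values are made-up illustrative assumptions, not data from the paper.
    """
    r_on: float = 1e3       # low-resistance state (LRS), ohms (assumed)
    r_off: float = 1e6      # high-resistance state (HRS), ohms (assumed)
    v_set: float = 1.0      # at or above this voltage the cell switches to LRS (assumed)
    v_reset: float = -1.0   # at or below this voltage the cell switches to HRS (assumed)
    on: bool = False        # stored bit; nothing resets it when the voltage drops to zero

    def apply(self, voltage: float) -> float:
        """Apply a voltage, switch state if a threshold is crossed, return the current (A)."""
        if voltage >= self.v_set:
            self.on = True
        elif voltage <= self.v_reset:
            self.on = False
        resistance = self.r_on if self.on else self.r_off
        return voltage / resistance


if __name__ == "__main__":
    cell = ToyAtomristor()
    cell.apply(1.5)          # SET pulse: write a "1"
    cell.apply(0.0)          # power removed: the state is retained
    print(cell.on, cell.apply(0.1))  # small read voltage leaves the state unchanged
    cell.apply(-1.5)         # RESET pulse: write a "0"
    print(cell.on, cell.apply(0.1))
```

The key point the sketch captures is that the stored state lives in the device's resistance rather than in a continuously powered circuit, so removing the voltage does not erase it. Real atomristors also show other switching modes and far richer physics than this two-threshold abstraction.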

Schematic of an atomristor memory sandwich based on molybdenum disulfide (MoS2) in the form of a single-layer atomic sheet grown on gold foil. (Blue: Mo; yellow: S) (credit: Ruijing Ge et al./Nano Letters)

Memory storage and transistors have, to date, been separate components on a microchip. Atomristors combine both functions in a single, more efficient device. They use metallic atomic sheets (such as graphene or gold) as electrodes and semiconducting atomic sheets (such as molybdenum disulfide) as the active layer. The entire memory cell is a two-layer sandwich only ~1.5 nanometers thick.

“The sheer density of memory storage that can be made possible by layering these synthetic atomic sheets onto each other, coupled with integrated transistor design, means we can potentially make computers that learn and remember the same way our brains do,” said Deji Akinwande, associate professor in the Cockrell School of Engineering’s Department of Electrical and Computer Engineering.

“This discovery has real commercialization value, as it won’t disrupt existing technologies,” Akinwande said. “Rather, it has been designed to complement and integrate with the silicon chips already in use in modern tech devices.”

The research is described in an open-access paper in the January issue of the American Chemical Society journal Nano Letters.

Longer battery life in cell phones

For nonvolatile operation (preserving data after power is turned off), the new design also “offers a substantial advantage over conventional flash memory, which occupies far larger space. In addition, the thinness allows for faster and more efficient electric current flow,” the researchers note in the paper.

The research team also discovered another application for the atomristor technology: atomristors are the smallest radio-frequency (RF) memory switches demonstrated to date, with zero static (DC) power consumption, which could ultimately lead to longer battery life for cell phones and other battery-powered devices.*

Funding for the UT Austin team’s work was provided by the National Science Foundation and the Presidential Early Career Award for Scientists and Engineers, awarded to Akinwande in 2015.

* “Contemporary switches are realized with transistor or microelectromechanical devices, both of which are volatile, with the latter also requiring large switching voltages [which are not ideal] for mobile technologies,” the researchers note in the paper. Atomristors instead allow for nonvolatile low-power radio-frequency (RF) switches with “low voltage operation, small form-factor, fast switching speed, and low-temperature integration compatible with silicon or flexible substrates.”

Abstract of Atomristor: Nonvolatile Resistance Switching in Atomic Sheets of Transition Metal Dichalcogenides

Recently, two-dimensional (2D) atomic sheets have inspired new ideas in nanoscience including topologically protected charge transport,[1,2] spatially separated excitons,[3] and strongly anisotropic heat transport.[4] Here, we report the intriguing observation of stable nonvolatile resistance switching (NVRS) in single-layer atomic sheets sandwiched between metal electrodes. NVRS is observed in the prototypical semiconducting (MX2, M = Mo, W; and X = S, Se) transitional metal dichalcogenides (TMDs),[5] which alludes to the universality of this phenomenon in TMD monolayers and offers forming-free switching. This observation of NVRS phenomenon, widely attributed to ionic diffusion, filament, and interfacial redox in bulk oxides and electrolytes,[6-9] inspires new studies on defects, ion transport, and energetics at the sharp interfaces between atomically thin sheets and conducting electrodes. Our findings overturn the contemporary thinking that nonvolatile switching is not scalable to subnanometre owing to leakage currents.[10] Emerging device concepts in nonvolatile flexible memory fabrics, and brain-inspired (neuromorphic) computing could benefit substantially from the wide 2D materials design space. A new major application, zero-static power radio frequency (RF) switching, is demonstrated with a monolayer switch operating to 50 GHz.