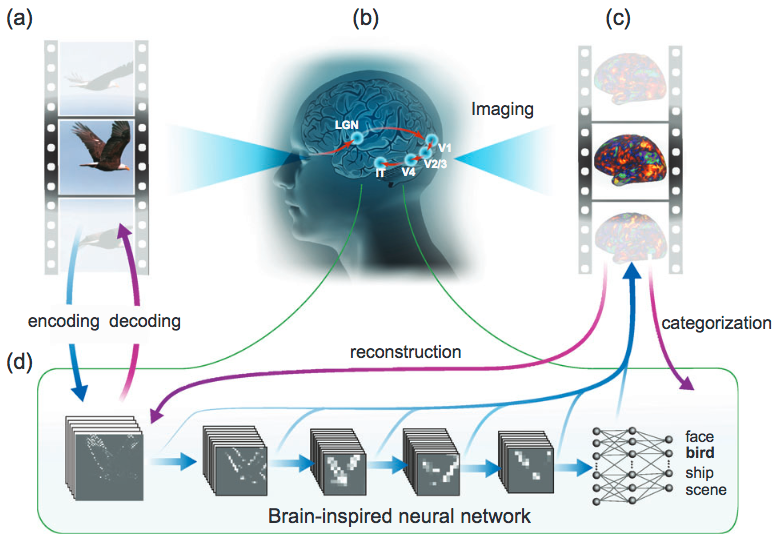

Oops! A new debugging tool called DeepXplore generates real-world test images meant to expose logic errors in deep neural networks. The darkened photo at right tricked one set of neurons into telling the car to turn into the guardrail. After catching the mistake, the tool retrains the network to fix the bug. (credit: Columbia Engineering)

Researchers at Columbia and Lehigh universities have developed a method for error-checking the reasoning of the thousands or millions of neurons in deep-learning neural networks, such as those used in self-driving cars.

Their tool, DeepXplore, feeds confusing, real-world inputs into the network to expose rare instances of flawed reasoning, such as the incident last year when a Tesla operating on Autopilot collided with a truck that the system failed to distinguish from the bright sky behind it, killing the driver. Deep learning systems don’t explain how they make their decisions, which makes them hard to trust.

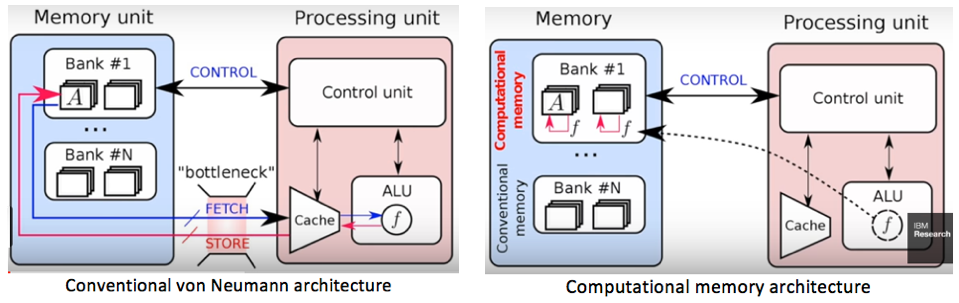

Modeled after the human brain, deep learning uses layers of artificial neurons that process and consolidate information. This results in a set of rules to solve complex problems, from recognizing friends’ faces online to translating email written in Chinese. The technology has achieved impressive feats of intelligence, but as more tasks become automated this way, concerns about safety, security, and ethics are growing.

Finding bugs by generating test images

Debugging the neural networks in self-driving cars is an especially slow and tedious process, with no way to measure how thoroughly logic within the network has been checked for errors. Current approaches are limited. One is to feed manually generated test images into the network at random until one triggers a wrong decision (telling the car to veer into the guardrail, for example). Another is “adversarial testing,” which automatically generates test images and alters them incrementally until one tricks the system.

The new DeepXplore solution — presented Oct. 29, 2017 in an open-access paper at ACM’s Symposium on Operating Systems Principles in Shanghai — can find a wider variety of bugs than random or adversarial testing by using the network itself to generate test images likely to cause neuron clusters to make conflicting decisions, according to the researchers.

To simulate real-world conditions, photos are lightened and darkened, and made to mimic the effect of dust on a camera lens, or a person or object blocking the camera’s view. A photo of the road may be darkened just enough, for example, to cause one set of neurons to tell the car to turn left, and two other sets of neurons to tell it to go right.
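The idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the toy "models" steer by mean brightness alone, and the names `darken`, `occlude`, `make_toy_model`, and `find_disagreement` are hypothetical, not part of DeepXplore. It darkens a tiny grayscale image step by step until three models stop agreeing, then uses a majority vote to flag the odd one out.

```python
from collections import Counter

def darken(image, amount):
    """Subtract `amount` from every pixel (0-255 grayscale), clamping at 0."""
    return [[max(0, p - amount) for p in row] for row in image]

def occlude(image, top, left, height, width):
    """Black out a rectangle, mimicking an object blocking the camera."""
    out = [row[:] for row in image]  # copy so the original stays intact
    for r in range(top, min(top + height, len(out))):
        for c in range(left, min(left + width, len(out[r]))):
            out[r][c] = 0
    return out

def make_toy_model(threshold):
    """Hypothetical stand-in for a steering network: decides by mean brightness."""
    def model(image):
        pixels = [p for row in image for p in row]
        mean = sum(pixels) / len(pixels)
        return "right" if mean >= threshold else "left"
    return model

def find_disagreement(image, models, step=5, max_amount=255):
    """Darken the image in small steps until the models give conflicting answers."""
    for amount in range(0, max_amount + 1, step):
        candidate = darken(image, amount)
        votes = [m(candidate) for m in models]
        if len(set(votes)) > 1:
            # The majority answer serves as the reference; dissenters are suspects.
            majority, _ = Counter(votes).most_common(1)[0]
            outliers = [i for i, v in enumerate(votes) if v != majority]
            return amount, votes, outliers
    return None  # no disagreement found within the allowed darkening

road = [[120] * 4 for _ in range(4)]                 # uniform 4x4 "photo"
models = [make_toy_model(t) for t in (100, 60, 50)]  # three similar models
result = find_disagreement(road, models)
print(result)  # (25, ['left', 'right', 'right'], [0])
```

In this toy run, darkening by 25 brightness levels is enough for the first model to say "left" while the other two still say "right", so the first model is flagged as the likely misclassifier. The `occlude` helper shows how a blocked camera view could be simulated in the same style.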

After inferring that the first set misclassified the photo, DeepXplore automatically retrains the network to recognize the darker image and fix the bug. Using optimization techniques, researchers have designed DeepXplore to trigger as many conflicting decisions with its test images as it can while maximizing the number of neurons activated.
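A rough sketch of such a search, written in plain Python, might look like the following. It is not the researchers' implementation: the two "models" and the single "neuron" are hypothetical one-unit sigmoid functions, and the combined objective (keep model A's confidence, lower model B's, and raise the neuron's activation, weighted by the assumed parameters `lam1` and `lam2`) is one simple way to express the two goals as a single quantity to climb by gradient ascent.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

class SigmoidUnit:
    """Hypothetical one-unit 'network': sigmoid(weights . x + bias)."""
    def __init__(self, weights, bias):
        self.weights, self.bias = weights, bias

    def __call__(self, x):
        return sigmoid(dot(self.weights, x) + self.bias)

    def grad(self, x):
        # d/dx sigmoid(w.x + b) = s * (1 - s) * w
        s = self(x)
        return [s * (1.0 - s) * w for w in self.weights]

# Two toy models that output the probability of the same decision,
# plus one toy neuron whose activation we also want to raise.
model_a = SigmoidUnit([1.0, 0.5], 0.0)
model_b = SigmoidUnit([0.8, -0.3], 0.2)
neuron_n = SigmoidUnit([-0.5, 1.0], -1.0)

def joint_gradient(x, lam1, lam2):
    """Gradient of: model_a(x) - lam1 * model_b(x) + lam2 * neuron_n(x)."""
    ga, gb, gn = model_a.grad(x), model_b.grad(x), neuron_n.grad(x)
    return [a - lam1 * b + lam2 * n for a, b, n in zip(ga, gb, gn)]

def search(x, lam1=1.0, lam2=0.1, lr=0.5, max_steps=200):
    """Gradient ascent on the input until the two models disagree."""
    x = list(x)
    for step in range(max_steps):
        if (model_a(x) > 0.5) != (model_b(x) > 0.5):
            return x, step  # conflicting decisions: a candidate test input
        g = joint_gradient(x, lam1, lam2)
        x = [xi + lr * gi for xi, gi in zip(x, g)]
    return None, max_steps

seed = [1.0, 1.0]            # both toy models agree on this starting input
found, steps = search(seed)
print(found, steps)
```

Note that the search changes the input, not the model weights. A real system would also restrict each update so the result still looks like a plausible photo (for example, allowing only uniform brightness changes); this sketch leaves the input unconstrained for brevity.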

“You can think of our testing process as reverse-engineering the learning process to understand its logic,” said co-developer Suman Jana, a computer scientist at Columbia Engineering and a member of the Data Science Institute. “This gives you some visibility into what the system is doing and where it’s going wrong.”

Testing their software on 15 state-of-the-art neural networks, including Nvidia’s DAVE-2 network for self-driving cars, the researchers uncovered thousands of bugs missed by previous techniques. They report activating up to 100 percent of network neurons (30 percent more on average than either random or adversarial testing) and bringing overall accuracy up to 99 percent in some networks, an improvement of up to 3 percent.*
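The share of neurons activated by a test set can be measured with a few lines of Python. The sketch below is illustrative only: it assumes each test input's activations have already been recorded as a flat list with one value per neuron, and it treats a neuron as "activated" when its value exceeds a chosen `threshold`. The function name and the sample numbers are made up for the example.

```python
def neuron_coverage(activations_per_input, threshold=0.0):
    """Fraction of neurons that exceed `threshold` for at least one test input.

    activations_per_input: list of lists; each inner list holds one input's
    activation value for every neuron in the network (flattened across layers).
    """
    if not activations_per_input or not activations_per_input[0]:
        return 0.0
    num_neurons = len(activations_per_input[0])
    covered = set()
    for activations in activations_per_input:
        for idx, value in enumerate(activations):
            if value > threshold:
                covered.add(idx)  # this neuron fired for some input
    return len(covered) / num_neurons

# Made-up activations for a 4-neuron network.
random_tests = [[0.9, 0.0, 0.0, 0.2],
                [0.7, 0.0, 0.0, 0.1]]
generated_tests = [[0.0, 0.8, 0.0, 0.0]]  # an input that reaches neuron 1

print(neuron_coverage(random_tests))                    # 0.5
print(neuron_coverage(random_tests + generated_tests))  # 0.75
```

Here the two random tests only ever fire neurons 0 and 3, so coverage is 50 percent. Adding one input that reaches a previously silent neuron raises it to 75 percent, which is the kind of gain a coverage-guided generator aims for.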

The ultimate goal: certifying a neural network is bug-free

Still, a high level of assurance is needed before regulators and the public are ready to embrace robot cars and other safety-critical technology like autonomous air-traffic control systems. One limitation of DeepXplore is that it can’t certify that a neural network is bug-free. That requires isolating and testing the exact rules the network has learned.

A new tool developed at Stanford University, called Reluplex, uses the power of mathematical proofs to do this for small networks. Costly in computing time, but offering strong guarantees, this small-scale verification technique complements DeepXplore’s full-scale testing approach, said Reluplex co-developer Clark Barrett, a computer scientist at Stanford.

“Testing techniques use efficient and clever heuristics to find problems in a system, and it seems that the techniques in this paper are particularly good,” he said. “However, a testing technique can never guarantee that all the bugs have been found, or similarly, if it can’t find any bugs, that there are, in fact, no bugs.”

DeepXplore has applications beyond self-driving cars. In anti-virus software, for example, it can find malware disguised as benign code, and it can uncover discriminatory assumptions baked into predictive policing and criminal sentencing software.

The team has released their software as open source for other researchers to use, and launched a website that lets people upload their own data to see how the testing process works.

* The team evaluated DeepXplore on real-world datasets, including Udacity self-driving car challenge data, image data from ImageNet and MNIST, Android malware data from Drebin, and PDF malware data from Contagio/VirusTotal, and on production-quality deep neural networks trained on these datasets, such as those ranked at the top of the Udacity self-driving car challenge. Their results show that DeepXplore found thousands of incorrect corner case behaviors (e.g., self-driving cars crashing into guard rails) in 15 state-of-the-art deep learning models with a total of 132,057 neurons trained on five popular datasets containing around 162 GB of data.

Abstract of DeepXplore: Automated Whitebox Testing of Deep Learning Systems

Deep learning (DL) systems are increasingly deployed in safety- and security-critical domains including self-driving cars and malware detection, where the correctness and predictability of a system’s behavior for corner case inputs are of great importance. Existing DL testing depends heavily on manually labeled data and therefore often fails to expose erroneous behaviors for rare inputs.

We design, implement, and evaluate DeepXplore, the first whitebox framework for systematically testing real-world DL systems. First, we introduce neuron coverage for systematically measuring the parts of a DL system exercised by test inputs. Next, we leverage multiple DL systems with similar functionality as cross-referencing oracles to avoid manual checking. Finally, we demonstrate how finding inputs for DL systems that both trigger many differential behaviors and achieve high neuron coverage can be represented as a joint optimization problem and solved efficiently using gradient-based search techniques.

DeepXplore efficiently finds thousands of incorrect corner case behaviors (e.g., self-driving cars crashing into guard rails and malware masquerading as benign software) in state-of-the-art DL models with thousands of neurons trained on five popular datasets including ImageNet and Udacity self-driving challenge data. For all tested DL models, on average, DeepXplore generated one test input demonstrating incorrect behavior within one second while running only on a commodity laptop. We further show that the test inputs generated by DeepXplore can also be used to retrain the corresponding DL model to improve the model’s accuracy by up to 3%.