(credit: iStock)

When you use smartphone AI apps like Siri, you’re dependent on the cloud for a lot of the processing — limited by your connection speed. But what if your smartphone could do more of the processing directly on your device — allowing for smarter, faster apps?

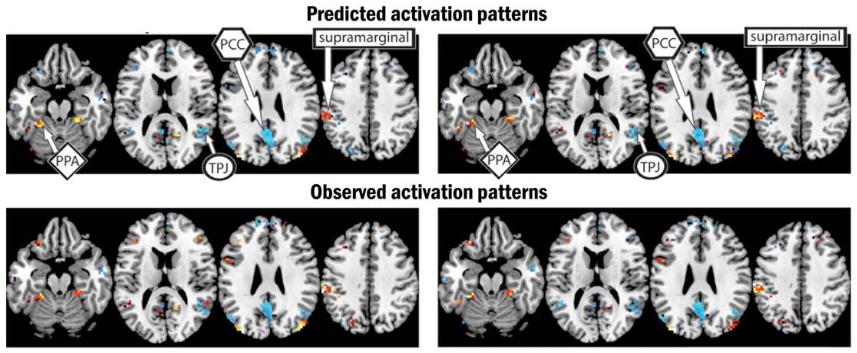

MIT scientists have taken a step in that direction with a new way to enable artificial-intelligence systems called convolutional neural networks (CNNs) to run locally on mobile devices. (CNNs are used in areas such as autonomous driving, speech recognition, computer vision, and automatic translation.) Neural networks take up a lot of memory and consume a lot of power, so they usually run on servers in the cloud, which receive data from desktop or mobile devices and then send back their analyses.

The new MIT analytic method can determine how much power a neural network will actually consume when run on a particular type of hardware. The researchers used the method to evaluate new techniques for paring down neural networks so that they’ll run more efficiently on handheld devices.

The new CNN designs are also tailored to run on an energy-efficient computer chip, optimized for neural networks, that the researchers developed in 2016.

Reducing energy consumption

The new MIT software method uses “energy-aware pruning,” which reduces a neural network’s power consumption by cutting out the layers of the network that consume the most energy while contributing very little to the network’s final output.
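To make the idea concrete, here is a toy sketch of pruning driven by energy cost. It is not the MIT researchers' algorithm: the `Layer` class, the `energy` and `contribution` numbers, and the greedy rule are all assumptions made up for illustration. The sketch ranks parts of a network by how much energy they use per unit of contribution to the output, then removes the worst offenders until a small "contribution budget" is used up.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    energy: float        # hypothetical estimated energy cost (arbitrary units)
    contribution: float  # hypothetical share of the final output (0 to 1)


def energy_aware_prune(layers, max_contribution_loss):
    """Greedily drop layers with the highest energy per unit of contribution,
    as long as the total contribution removed stays within the budget."""
    ranked = sorted(
        layers,
        key=lambda l: l.energy / max(l.contribution, 1e-12),
        reverse=True,
    )
    kept = list(layers)
    lost = 0.0
    for layer in ranked:
        if lost + layer.contribution <= max_contribution_loss:
            kept.remove(layer)
            lost += layer.contribution
    return kept


# Made-up numbers, purely for illustration.
layers = [
    Layer("a", energy=5.0, contribution=0.50),
    Layer("b", energy=4.0, contribution=0.02),
    Layer("c", energy=1.0, contribution=0.45),
    Layer("d", energy=3.0, contribution=0.03),
]
kept = energy_aware_prune(layers, max_contribution_loss=0.06)
energy_before = sum(l.energy for l in layers)
energy_after = sum(l.energy for l in kept)
print([l.name for l in kept], energy_before, energy_after)
```

With these invented values, the two layers that cost a lot of energy but contribute little ("b" and "d") are removed, and the estimated energy drops from 13 to 6 units. A real system would also need to retrain the network and measure accuracy after each pruning step, which this sketch leaves out.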

Associate professor of electrical engineering and computer science Vivienne Sze and colleagues describe the work in an open-access paper they’re presenting this week (the week of July 24, 2017) at the Computer Vision and Pattern Recognition Conference. They report that the methods offered up to a 73 percent reduction in power consumption over the standard implementation of neural networks, 43 percent better than the best previous method.

Meanwhile, another MIT group at the Computer Science and Artificial Intelligence Laboratory has designed a hardware approach to reduce energy consumption and increase computer-chip processing speed for specific apps, using “cache hierarchies.” (“Caches” are small, local memory banks that store data that’s frequently used by computer chips to cut down on time- and energy-consuming communication with off-chip memory.)**

The researchers tested their system on a simulation of a chip with 36 cores, or processing units. They found that compared to its best-performing predecessors, the system increased processing speed by 20 to 30 percent while reducing energy consumption by 30 to 85 percent. They presented the new system, dubbed Jenga, in an open-access paper at the International Symposium on Computer Architecture earlier in July 2017.

Better batteries — or maybe, no battery?

Another route to better mobile AI is improving the rechargeable batteries in cellphones (and other mobile devices), which have limited charge capacity and short life cycles, and perform poorly in cold weather.

Recently, DARPA-funded researchers from the University of Houston (with colleagues at the University of California-San Diego and Northwestern University) have discovered that quinones, a class of inexpensive, earth-abundant, easily recyclable, and nonflammable materials, can address current battery limitations.

“One of these batteries, as a car battery, could last 10 years,” said Yan Yao, associate professor of electrical and computer engineering. In addition to slowing the deterioration of batteries for vehicles and stationary electricity storage, the material would also make battery disposal easier because it does not contain heavy metals. The research is described in Nature Materials.

The first battery-free cellphone that can send and receive calls using only a few microwatts of power. (credit: Mark Stone/University of Washington)

But what if we eliminated batteries altogether? University of Washington researchers have invented a cellphone that requires no batteries. Instead, it harvests 3.5 microwatts of power from ambient radio signals, light, or even the vibrations of a speaker.

The new technology is detailed in a paper published July 1, 2017 in the Proceedings of the Association for Computing Machinery on Interactive, Mobile, Wearable and Ubiquitous Technologies.

The UW researchers demonstrated how to harvest this energy from ambient radio signals transmitted by a Wi-Fi base station up to 31 feet away. “You could imagine in the future that all cell towers or Wi-Fi routers could come with our base station technology embedded in it,” said co-author Vamsi Talla, a former UW electrical engineering doctoral student and Allen School research associate. “And if every house has a Wi-Fi router in it, you could get battery-free cellphone coverage everywhere.”

A cellphone CPU (central processing unit) typically requires several watts or more (depending on the app), so we’re not quite there yet. But that power requirement could one day be sufficiently reduced by future special-purpose chips and MIT’s optimized algorithms.
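A quick back-of-the-envelope calculation shows how large that gap is. The 3.5 microwatts comes from the UW figure above; the 3-watt CPU draw is only an assumed stand-in for "several watts," chosen for illustration.

```python
import math

HARVESTED_WATTS = 3.5e-6   # 3.5 microwatts harvested by the battery-free phone
ASSUMED_CPU_WATTS = 3.0    # assumption: a stand-in value for "several watts"

# How many times more power the CPU needs than the phone can harvest.
gap = ASSUMED_CPU_WATTS / HARVESTED_WATTS
orders_of_magnitude = math.log10(gap)

print(f"The CPU needs about {gap:,.0f} times the harvested power")
print(f"(roughly {orders_of_magnitude:.1f} orders of magnitude)")
```

Under that assumption, the CPU would need several hundred thousand times more power than the phone harvests, or close to six orders of magnitude.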

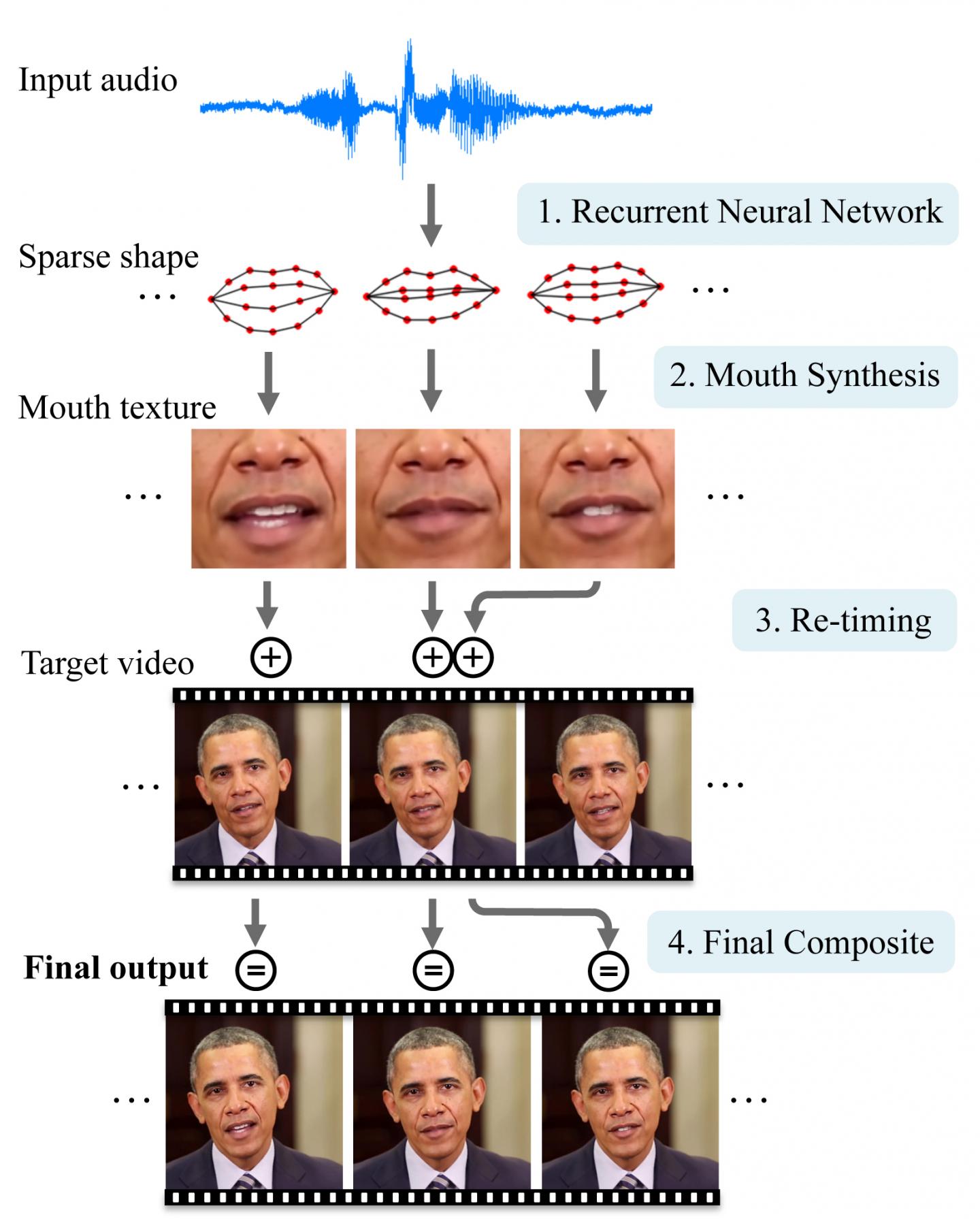

It might even let you do amazing things. :)

* Loosely based on the anatomy of the brain, neural networks consist of thousands or even millions of simple but densely interconnected information-processing nodes, usually organized into layers. The connections between nodes have “weights” associated with them, which determine how much a given node’s output will contribute to the next node’s computation. During training, in which the network is presented with examples of the computation it’s learning to perform, those weights are continually readjusted, until the output of the network’s last layer consistently corresponds with the result of the computation. With the proposed pruning method, the energy consumption of AlexNet and GoogLeNet is reduced by 3.7x and 1.6x, respectively, with less than 1% top-5 accuracy loss.
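The "x-fold" figures in this note can be converted into percentage reductions with a small calculation. The helper name `reduction_percent` is made up for this example; the 3.7x and 1.6x factors are the ones quoted above.

```python
def reduction_percent(fold_reduction):
    """Convert an 'N-fold' reduction (e.g. 3.7x) into a percent reduction."""
    return (1.0 - 1.0 / fold_reduction) * 100.0


alexnet = reduction_percent(3.7)    # factor quoted for AlexNet
googlenet = reduction_percent(1.6)  # factor quoted for GoogLeNet

print(f"AlexNet:   about {alexnet:.0f} percent less energy")
print(f"GoogLeNet: about {googlenet:.1f} percent less energy")
```

A 3.7x reduction works out to roughly 73 percent, which appears consistent with the "up to 73 percent" figure reported in the article, while 1.6x corresponds to 37.5 percent.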

** The software reallocates cache access on the fly to reduce latency (delay), based on the physical locations of the separate memory banks that make up the shared memory cache. If multiple cores are retrieving data from the same DRAM [memory] cache, this can cause bottlenecks that introduce new latencies. So after Jenga has come up with a set of cache assignments, cores don’t simply dump all their data into the nearest available memory bank; instead, Jenga parcels out the data a little at a time, then estimates the effect on bandwidth consumption and latency.

*** The stumbling block, Yao said, has been the anode, the portion of the battery through which energy flows. Existing anode materials are intrinsically structurally and chemically unstable, meaning the battery is only efficient for a relatively short time. The differing formulations offer evidence that the material is an effective anode for both acid batteries and alkaline batteries, such as those used in a car, as well as emerging aqueous metal-ion batteries.