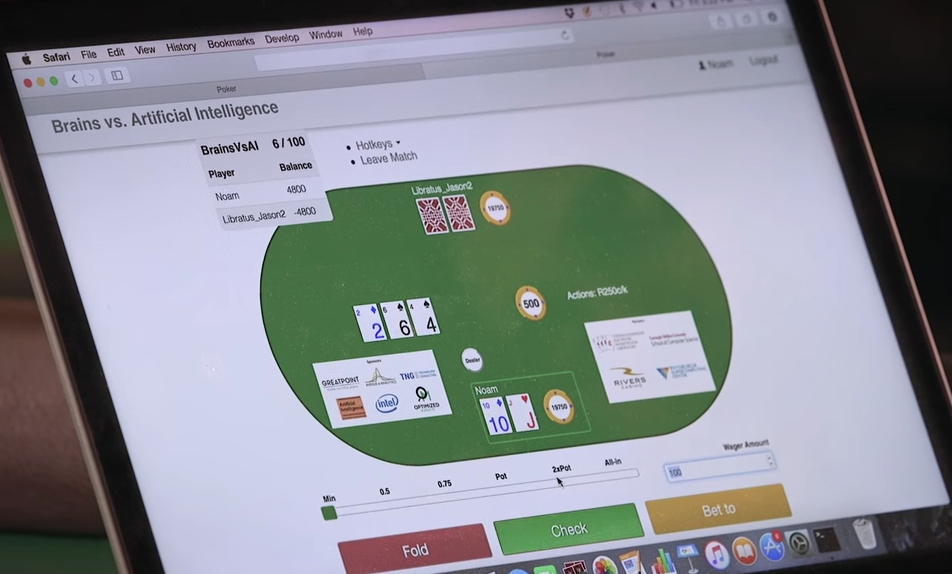

“Brains vs Artificial Intelligence” competition at the Rivers Casino in Pittsburgh (credit: Carnegie Mellon University)

Libratus, an AI developed by Carnegie Mellon University, has defeated four of the world’s best professional poker players in a marathon 120,000 hands of Heads-up, No-Limit Texas Hold’em poker played over 20 days, CMU announced today (Jan. 31). The win joins Deep Blue (for chess), Watson, and AlphaGo as major milestones in AI.

Libratus led the pros by a collective $1,766,250 in chips.* The tournament was held at the Rivers Casino in Pittsburgh from 11–30 January in a competition called “Brains Vs. Artificial Intelligence: Upping the Ante.”

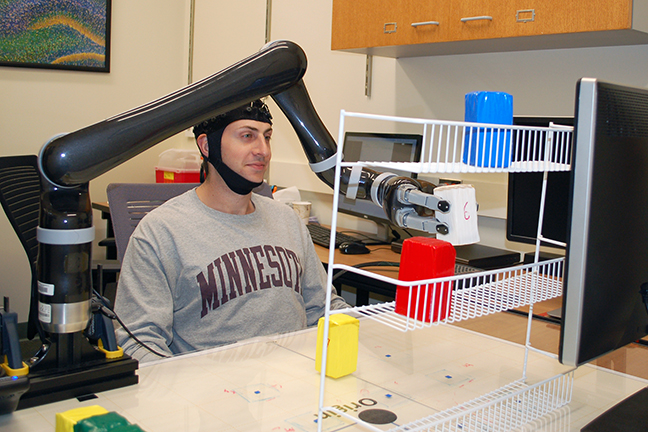

The developers of Libratus — Tuomas Sandholm, professor of computer science, and Noam Brown, a Ph.D. student in computer science — said the sizable victory is statistically significant and not simply a matter of luck. “The best AI’s ability to do strategic reasoning with imperfect information has now surpassed that of the best humans,” Sandholm said. “This is the last frontier, at least in the foreseeable horizon, in game-solving in AI.”

This new AI milestone has implications for any realm in which information is incomplete and opponents sow misinformation, said Frank Pfenning, head of the Computer Science Department in CMU’s School of Computer Science. Business negotiation, military strategy, cybersecurity, and medical treatment planning could all benefit from automated decision-making using a Libratus-like AI.

“The computer can’t win at poker if it can’t bluff,” Pfenning explained. “Developing an AI that can do that successfully is a tremendous step forward scientifically and has numerous applications. Imagine that your smartphone will someday be able to negotiate the best price on a new car for you. That’s just the beginning.”

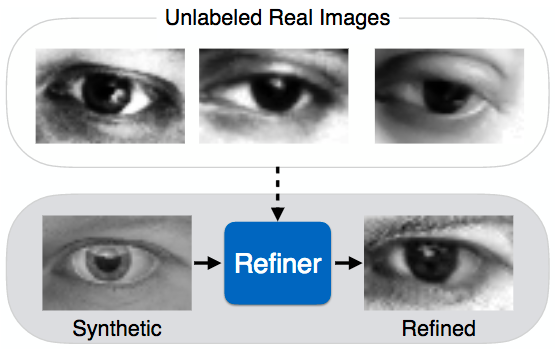

How the pros taught Libratus about its weaknesses

Brains vs AI scorecard (credit: Carnegie Mellon University)

So how was Libratus able to improve day to day during the competition? It turns out it was the pros themselves who taught Libratus about its weaknesses. “After play ended each day, a meta-algorithm analyzed what holes the pros had identified and exploited in Libratus’ strategy,” Sandholm explained. “It then prioritized the holes and algorithmically patched the top three using the supercomputer each night.

“This is very different than how learning has been used in the past in poker. Typically researchers develop algorithms that try to exploit the opponent’s weaknesses. In contrast, here the daily improvement is about algorithmically fixing holes in our own strategy.”

Sandholm also said that Libratus’ end-game strategy was a major advance. “The end-game solver has a perfect analysis of the cards,” he said. It was able to update its strategy for each hand in a way that ensured any late changes would only improve the strategy. Over the course of the competition, the pros responded by making more aggressive moves early in the hand, no doubt to avoid playing in the deep waters of the endgame where the AI had an advantage, he added.

Converging high-performance computing and AI

Professor Tuomas Sandholm, Carnegie Mellon School of Computer Science, with the Pittsburgh Supercomputing Center’s Bridges supercomputer (credit: Carnegie Mellon University)

Libratus’ victory was made possible by the Pittsburgh Supercomputing Center’s Bridges computer. Libratus recruited the raw power of approximately 600 of Bridges’ 846 compute nodes. Bridges’ total speed is 1.35 petaflops, about 7,250 times as fast as a high-end laptop, and its memory is 274 terabytes, about 17,500 times as much as you’d get in that laptop. This computing power gave Libratus the ability to play four of the best Texas Hold’em players in the world at once and beat them.
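To put those ratios in perspective, the quoted figures can be turned around to see what kind of laptop the comparison implies. The short Python sketch below is only back-of-the-envelope arithmetic on the numbers in the paragraph above. The function names are illustrative, and the conversion assumes decimal units (1 petaflop = 1,000 teraflops, 1 terabyte = 1,000 gigabytes), which the article does not specify.

```python
# Figures quoted in the article for the Bridges supercomputer.
BRIDGES_PETAFLOPS = 1.35   # total speed
BRIDGES_MEMORY_TB = 274    # total memory
SPEED_RATIO = 7_250        # "about 7,250 times as fast as a high-end laptop"
MEMORY_RATIO = 17_500      # "about 17,500 times as much" memory
NODES_USED = 600           # approximate number of nodes Libratus used
NODES_TOTAL = 846          # compute nodes in Bridges


def implied_laptop_teraflops() -> float:
    """Laptop speed implied by the quoted ratio (assumes 1 PF = 1,000 TF)."""
    return BRIDGES_PETAFLOPS * 1_000 / SPEED_RATIO


def implied_laptop_memory_gb() -> float:
    """Laptop memory implied by the quoted ratio (assumes 1 TB = 1,000 GB)."""
    return BRIDGES_MEMORY_TB * 1_000 / MEMORY_RATIO


def node_share() -> float:
    """Fraction of Bridges' compute nodes that Libratus used."""
    return NODES_USED / NODES_TOTAL


if __name__ == "__main__":
    print(f"Implied laptop speed:  {implied_laptop_teraflops():.3f} teraflops")
    print(f"Implied laptop memory: {implied_laptop_memory_gb():.1f} GB")
    print(f"Share of nodes used:   {node_share():.0%}")
```

Under these assumptions, the comparison laptop works out to roughly 0.19 teraflops and about 16 GB of memory, and Libratus used roughly 71 percent of Bridges’ compute nodes.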

“We designed Bridges to converge high-performance computing and artificial intelligence,” said Nick Nystrom, PSC’s senior director of research and principal investigator for the National Science Foundation-funded Bridges system. “Libratus’ win is an important milestone toward developing AIs to address complex, real-world problems. At the same time, Bridges is powering new discoveries in the physical sciences, biology, social science, business and even the humanities.”

Sandholm said he will continue his research push on the core technologies involved in solving imperfect-information games and in applying these technologies to real-world problems. That includes his work with Optimized Markets, a company he founded to automate negotiations.

“CMU played a pivotal role in developing both computer chess, which eventually beat the human world champion, and Watson, the AI that beat top human Jeopardy! competitors,” Pfenning said. “It has been very exciting to watch the progress of poker-playing programs that have finally surpassed the best human players. Each one of these accomplishments represents a major milestone in our understanding of intelligence.”

Heads-Up No-Limit Texas Hold’em is a complex game, with 10^160 (the number 1 followed by 160 zeroes) information sets, each set being characterized by the path of play in the hand as perceived by the player whose turn it is. The AI must make decisions without knowing all of the cards in play, while trying to sniff out bluffing by its opponent. As “no-limit” suggests, players may bet or raise any amount up to all of their chips.

Sandholm will be sharing Libratus’ secrets now that the competition is over, beginning with invited talks at the Association for the Advancement of Artificial Intelligence meeting Feb. 4–9 in San Francisco and in submissions to peer-reviewed scientific conferences and journals.

* The pros — Dong Kim, Jimmy Chou, Daniel McAulay and Jason Les — will split a $200,000 prize purse based on their respective performances during the event. McAulay, of Scotland, said Libratus was a tougher opponent than he expected, but it was exciting to play against it. “Whenever you play a top player at poker, you learn from it,” he said.

Carnegie Mellon University | Brains Vs. AI Rematch: Why Poker?