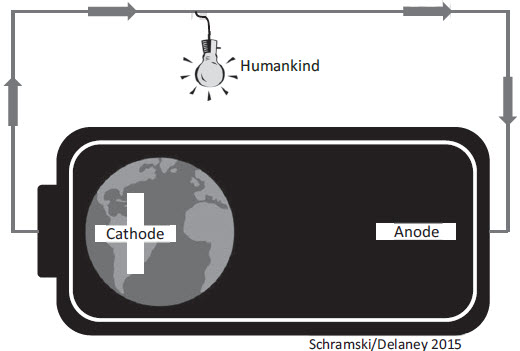

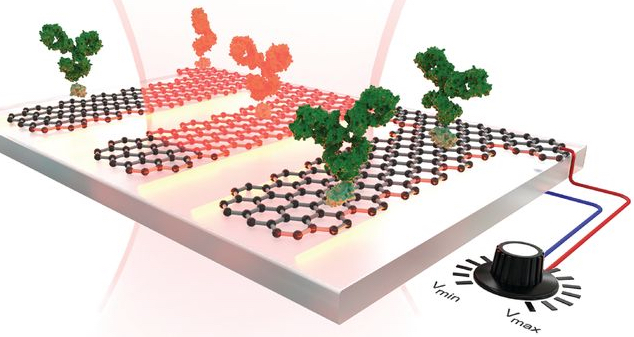

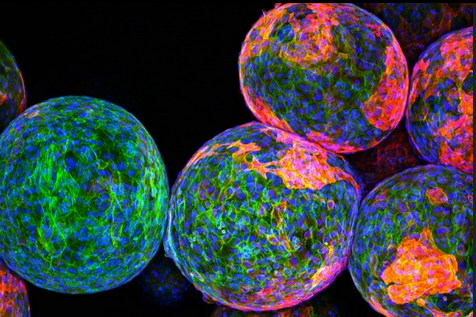

Parallelized multispectral imaging. Each rainbow-colored bar is the fluorescent spectrum from a discrete point in a cell culture. The gigapixel multispectral microscope records nearly a million such spectra every second. (credit: Antony Orth et al./Optica)

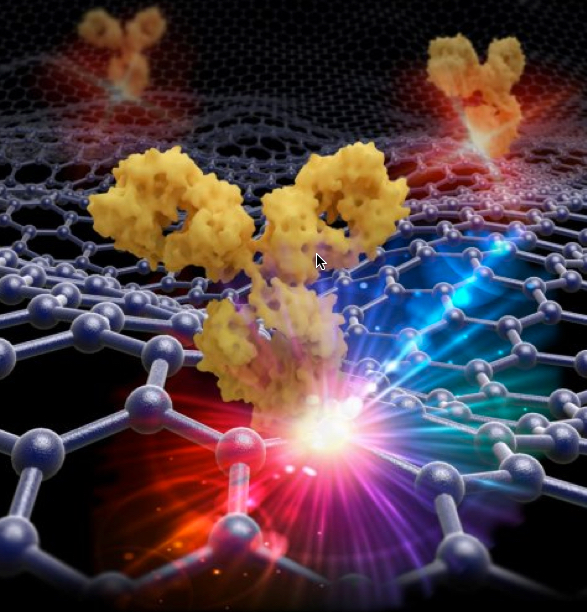

A team of researchers from the United States and Australia has developed a new multispectral microscope capable of capturing nearly 17 billion pixels in a single image, the largest such microscopic image ever created.

This level of multicolor detail is essential for studying the impact of experimental drugs on biological samples and is an important advancement over traditional microscope designs, the researchers say. The goal is to simultaneously process large amounts of data to deal with a major bottleneck in pharmaceutical research: rapid, data-rich biomedical imaging.

The microscope merges data collected simultaneously by thousands of microlenses into a continuous series of datasets that reveal how much of each color (frequency) is present at each point in a single biological sample.

“We recognized that the microscopy part of the drug development pipeline was much slower than it could be and designed a system specifically for this task,” said Antony Orth, a researcher formerly at the Rowland Institute, Harvard University in Cambridge and now with the ARC Centre for Nanoscale BioPhotonics, RMIT University in Melbourne, Australia.

Orth and his colleagues published their results in Optica, a journal of The Optical Society.

Multispectral imaging

Multispectral imaging is used for a variety of scientific and medical research applications. This process adds data about specific colors, or frequencies, to images. Medical researchers study these frequencies to learn about the composition of a biological sample and the chemical processes taking place within it. This is essential for pharmaceutical research, particularly cancer research, where scientists need to observe how cells and tissues respond to specific chemicals and experimental drugs.

Such research, however, is very data-intensive and slow, since current multispectral microscopes can only survey a single point at a time with few color channels, typically only 4 or 5. This process must then be repeated over and over to scan the entire sample.

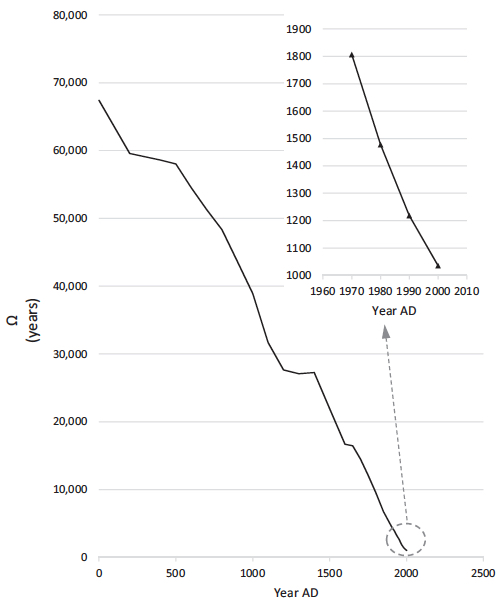

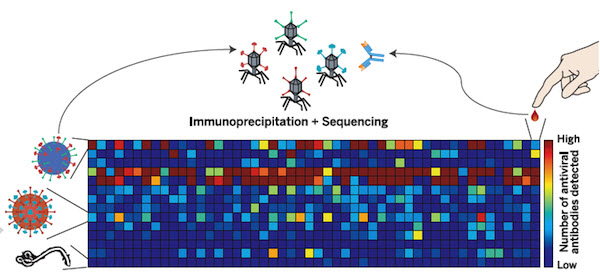

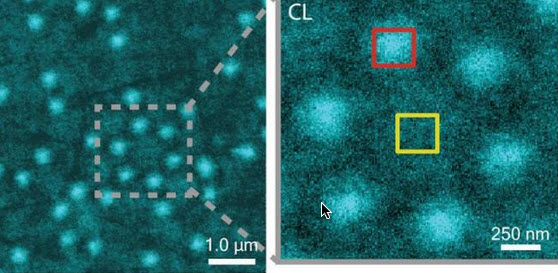

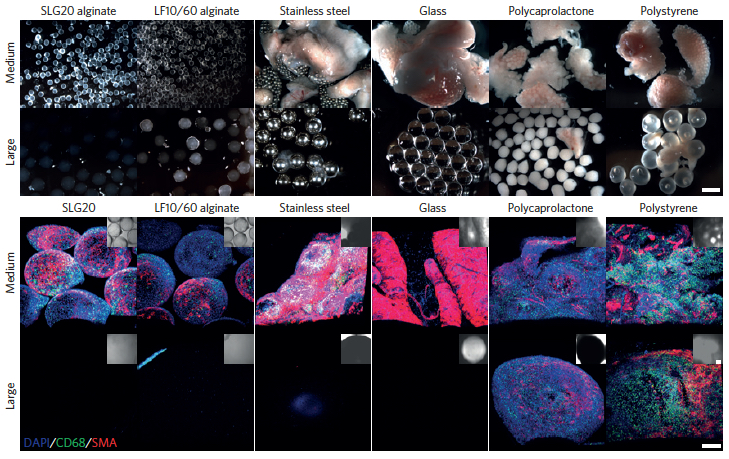

Slices of a spectral data cube. HeLa cells are imaged at 11 wavelengths from blue to red. The bottom right panel is a composite of all wavelength channels. (credit: Antony Orth et al./Optica)

Microlenses and parallel processing for big data

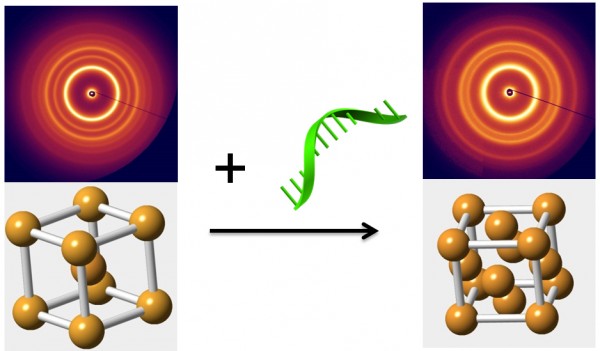

To overcome these limitations, Orth and his team took inspiration from modern computing, in which massive amounts of data and calculations are handled simultaneously by multicore processors. In the case of imaging, however, the work of a single microscope lens is distributed among an entire array of microlenses, each responsible for collecting multispectral data for a very narrow portion of each sample.

To capture this data, a laser is focused onto a small spot on the sample by each microlens. The laser light causes the sample to fluoresce, emitting specific wavelengths of light that differ depending on the molecules that are present. This fluorescence is then imaged back onto the camera. This is done for thousands of microlenses at once.

This multipoint scanning greatly reduces the amount of time necessary to image a sample by simultaneously harnessing thousands of lenses.

“By recording the color spectrum of the fluorescence, we can determine how much of each fluorescing molecule is in the sample,” said Orth. “What makes our microscope particularly powerful is that it records many different colors at once, allowing researchers to highlight a large number of structures in a single experiment.”
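The idea in Orth's quote, recovering how much of each fluorescing molecule is present from a recorded spectrum, can be illustrated with ordinary linear least squares. The article does not say which unmixing algorithm the team used, so the sketch below is only a generic illustration: the function name `unmix_two` and the two four-channel reference spectra are hypothetical, and real dye spectra would have to be measured.

```python
def unmix_two(measured, ref_a, ref_b):
    """Least-squares amounts (a, b) such that measured ~= a*ref_a + b*ref_b.

    All three arguments are equal-length lists of intensities, one value
    per color channel. This is a generic textbook method, not necessarily
    the one used in the paper.
    """
    # Build the 2x2 normal equations for the linear model.
    s_aa = sum(x * x for x in ref_a)
    s_bb = sum(y * y for y in ref_b)
    s_ab = sum(x * y for x, y in zip(ref_a, ref_b))
    s_am = sum(x * m for x, m in zip(ref_a, measured))
    s_bm = sum(y * m for y, m in zip(ref_b, measured))

    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("reference spectra are not linearly independent")

    # Solve the 2x2 system with Cramer's rule.
    a = (s_am * s_bb - s_ab * s_bm) / det
    b = (s_aa * s_bm - s_ab * s_am) / det
    return a, b


# Hypothetical reference spectra for two dyes over four color channels.
DYE_A = [1.0, 0.5, 0.0, 0.0]
DYE_B = [0.0, 0.2, 1.0, 0.4]

# A synthetic pixel containing 2 units of dye A and 3 units of dye B.
pixel = [2.0 * x + 3.0 * y for x, y in zip(DYE_A, DYE_B)]
amount_a, amount_b = unmix_two(pixel, DYE_A, DYE_B)
```

With more color channels than dyes, the fit is overdetermined, which is one reason recording many colors at once helps separate overlapping dyes.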

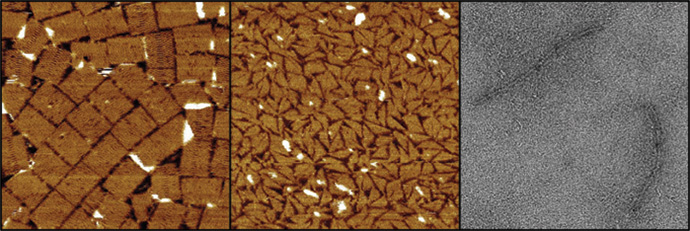

To demonstrate their design, the researchers applied various dyes that adhere to specific molecules within a cell sample. These dyes respond to laser light by fluorescing at specific frequencies so they can be detected and localized with high precision. Each microlens then looked at a very small part of the sample, an area about 0.6 by 0.1 millimeters in size. The raw data produced by this was a series of small images, each roughly 1,200 by 200 pixels.
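The figures quoted for each microlens imply a sampling pitch, that is, how much of the sample each pixel covers. The short calculation below uses only the approximate numbers from the article (0.6 by 0.1 millimeters, 1,200 by 200 pixels); the function name `pitch_um` is hypothetical, and the result is a rough estimate rather than a specification of the instrument.

```python
# Approximate per-microlens field of view from the article, in micrometers.
FIELD_UM = (600, 100)   # 0.6 mm by 0.1 mm
# Approximate per-microlens image size from the article, in pixels.
TILE_PX = (1200, 200)


def pitch_um(field_um, pixels):
    """Sample distance covered by one pixel, in micrometers."""
    return field_um / pixels


pitch_long = pitch_um(FIELD_UM[0], TILE_PX[0])    # along the 0.6 mm side
pitch_short = pitch_um(FIELD_UM[1], TILE_PX[1])   # along the 0.1 mm side
```

Both directions work out to about half a micrometer per pixel, so the quoted tile dimensions are consistent with square pixels.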

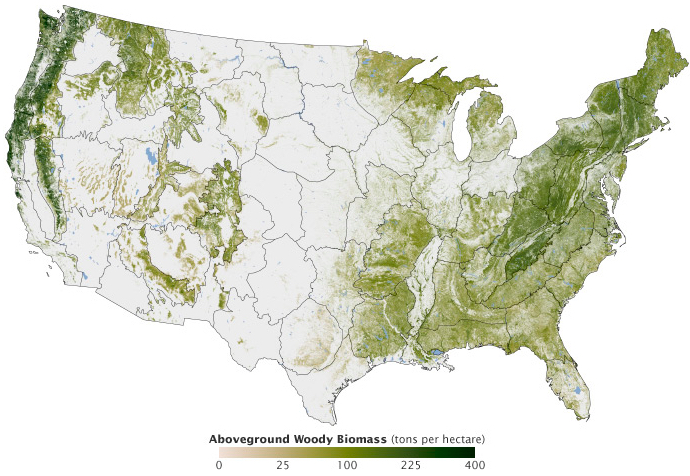

These individual multicolor images were then stitched together into a large mosaic image. By simultaneously imaging 13 separate color bands, the dataset produced was nearly 17 billion pixels in size.

In scientific imaging, such multilayered files are referred to as “datacubes,” because they contain three dimensions — two spatial (the X and Y coordinates) and one dimension of color. “The dataset basically tells you how much of each color you have at any given X-Y position in the sample,” explained Orth.
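A datacube of this kind can be pictured as a nested array indexed by Y, X, and color band. The sketch below builds a tiny cube to show the lookup Orth describes, then reproduces the headline size from the figures in the paper's abstract (1.26 gigapixels in the spatial domain, 13 spectral samples per pixel). The helper names are hypothetical and the layout is one common convention, not necessarily the authors' file format.

```python
def make_cube(height, width, bands, fill=0.0):
    """Return a height x width x bands datacube as nested lists."""
    return [[[fill] * bands for _ in range(width)] for _ in range(height)]


def spectrum_at(cube, x, y):
    """Return the list of per-band intensities at spatial position (x, y)."""
    return cube[y][x]


def total_samples(spatial_pixels, bands):
    """Number of spatial-spectral samples in a datacube."""
    return spatial_pixels * bands


# A toy 2 x 3 image with 13 color bands.
cube = make_cube(height=2, width=3, bands=13)
cube[1][2][5] = 0.8                      # band 5 is bright at x=2, y=1
spectrum = spectrum_at(cube, x=2, y=1)   # 13 values, one per band

# Figures from the paper's abstract: 1.26 gigapixels, 13 spectral samples.
full_size = total_samples(1_260_000_000, 13)
```

The product is 16.38 billion samples, which matches the 16.4 billion quoted in the abstract and the "nearly 17 billion" figure used in this article.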

This design is a significant improvement over regular, single-lens microscopes, which take a series of medium-sized pictures in a serial fashion. Since they cannot see the entire sample at once, it’s necessary to take one picture and then move the sample to capture the next. This means the sample has to remain still while the microscope is refocused or color filters are changed. Orth and his colleagues’ design eliminates much of this mechanical dead time, so the microscope is imaging almost continuously.

Multispectral fluorescence image of an entire cancer cell culture. A gradient wavelength filter is applied in post-processing to visualize the full spectral nature of the dataset: 13 discrete wavelengths from red to blue. (credit: Antony Orth et al./Optica)

Speeding up drug discovery with big data

This novel approach initially presented a challenge in the data pipeline. The raw data is in the form of one-megapixel images recorded at 200 frames per second, a data rate much higher than that of current microscopes, which required the team to capture and process a tremendous amount of data each second.
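To get a feel for that data rate, the frame size and frame rate can simply be multiplied. In the sketch below, the one-megapixel frame and 200 frames per second come from the article; the camera bit depth is not stated there, so the 8-bit and 16-bit values are assumptions used only for illustration, and the function names are hypothetical.

```python
FRAME_PIXELS = 1_000_000   # one-megapixel frames (from the article)
FRAMES_PER_SECOND = 200    # from the article


def raw_pixel_rate(frame_pixels, fps):
    """Raw camera pixels recorded per second."""
    return frame_pixels * fps


def bytes_per_second(pixel_rate, bits_per_pixel):
    """Storage bandwidth for a given bit depth (bit depth is an assumption)."""
    return pixel_rate * bits_per_pixel // 8


pixel_rate = raw_pixel_rate(FRAME_PIXELS, FRAMES_PER_SECOND)

# Assumed bit depths; the article does not specify the camera's pixel format.
rate_8bit = bytes_per_second(pixel_rate, bits_per_pixel=8)
rate_16bit = bytes_per_second(pixel_rate, bits_per_pixel=16)
```

Under these assumptions the camera produces 200 million raw pixels per second, or roughly 200 to 400 megabytes per second that must be written to disk continuously.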

Over time, fast cameras and fast hard drives have become more widely available and considerably cheaper, allowing for a much more affordable and efficient design. The current limiting factor is loading the recorded data from hard drives into active computer memory to produce an image. The researchers estimate that an active memory of about 100 gigabytes, enough to hold the raw dataset, would improve the entire process even further.

The goal of this technology is to speed up drug discovery. For example, to study the impact of a new cancer drug it’s essential to determine if a specific drug kills cancer cells more often than healthy cells. This requires testing the same drug on thousands to millions of cells with varying doses and under different conditions, which is normally a very time-consuming and labor-intensive task.

The new microscope presented in this paper speeds up this process while also looking at many different colors at once. “This is important because the more colors you can see, the more insightful and efficient your experiments become,” noted Orth. “Because of this, the speed-up afforded by our microscope goes beyond just the improvement in raw data rate.”

Continuing this research, the team would like to expand to live cell imaging in which billion-pixel, time-lapse movies of cells moving and responding to various stimuli could be made, opening the door to experiments that currently aren’t possible with small-scale time-lapse movies.

Abstract of Gigapixel multispectral microscopy

Understanding the complexity of cellular biology often requires capturing and processing an enormous amount of data. In high-content drug screens, each cell is labeled with several different fluorescent markers and frequently thousands to millions of cells need to be analyzed in order to characterize biology’s intrinsic variability. In this work, we demonstrate a new microlens-based multispectral microscope designed to meet this throughput-intensive demand. We report multispectral image cubes of up to 1.26 gigapixels in the spatial domain, with up to 13 spectral samples per pixel, for a total image size of 16.4 billion spatial-spectral samples. To our knowledge, this is the largest multispectral microscopy dataset reported in the literature. Our system has highly reconfigurable spectral sampling and bandwidth settings and we have demonstrated spectral unmixing of up to 6 fluorescent channels.