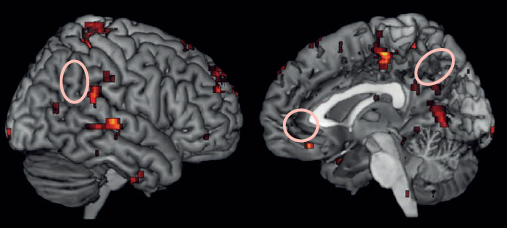

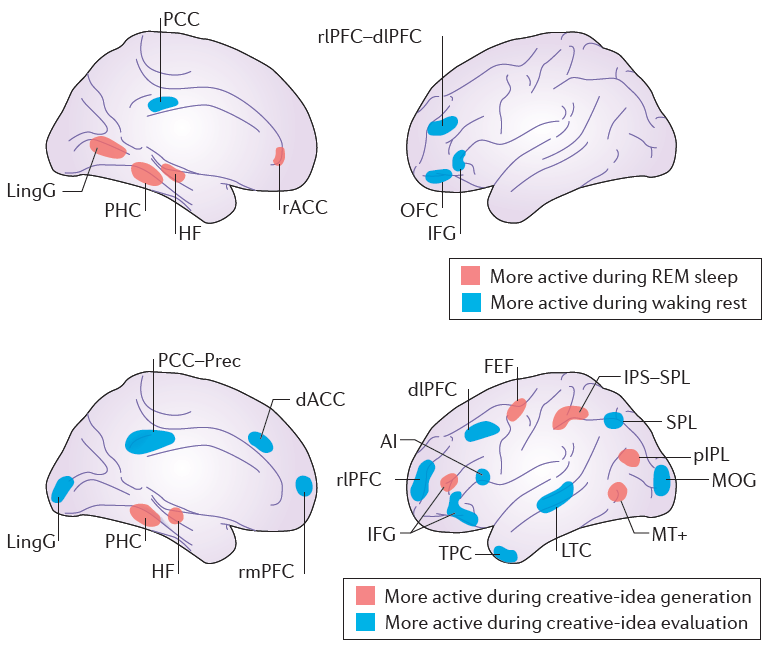

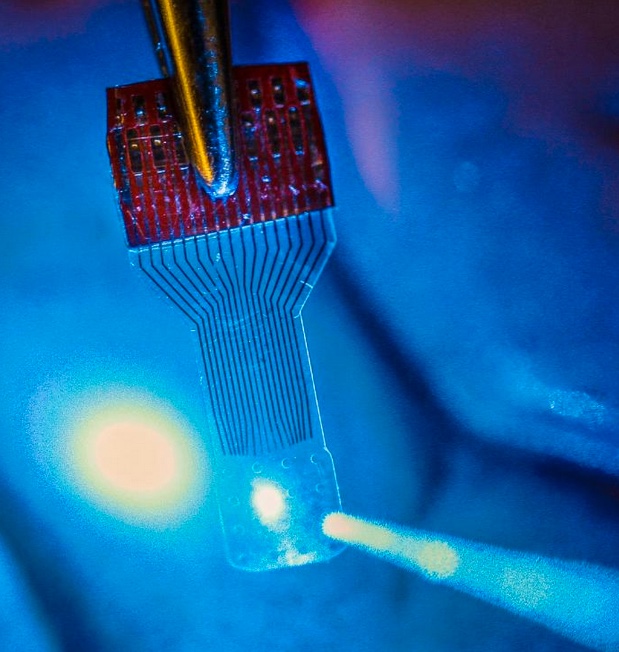

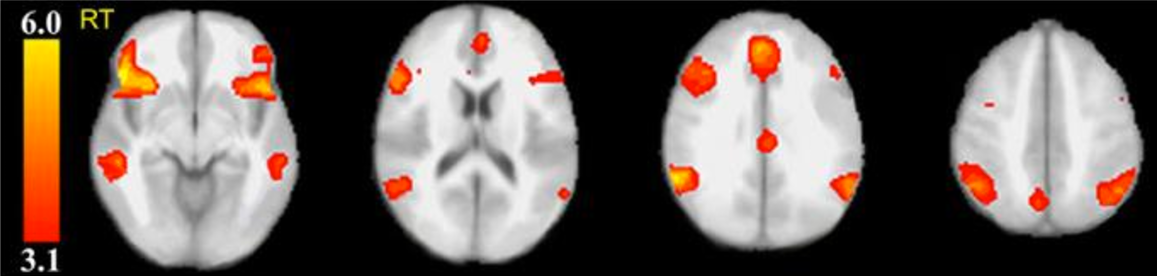

Significant clusters in the fMRI exam are located in the anterior cingulate cortex, bilateral inferior frontal, inferior parietal and medial temporal gyri, and the precuneus. (credit: Perelman School of Medicine at the University of Pennsylvania/Journal of Clinical Psychiatry)

Scanning people’s brains with fMRI (functional magnetic resonance imaging) was significantly more effective at spotting lies than a traditional polygraph test, researchers in the Perelman School of Medicine at the University of Pennsylvania found in a study published in the Journal of Clinical Psychiatry.

When someone is lying, areas of the brain linked to decision-making are activated and light up on an fMRI scan for experts to see. While laboratory studies showed fMRI’s ability to detect deception with up to 90 percent accuracy, estimates of polygraphs’ accuracy ranged wildly, between chance and 100 percent, depending on the study.

The Penn study is the first to compare the two modalities in the same individuals in a blinded and prospective fashion. The approach adds scientific data to the long-standing debate about this technology and builds the case for more studies investigating its potential real-life applications, such as evidence in criminal legal proceedings.

Neuroscientists better than polygraph examiners at detecting deception

Researchers from Penn’s departments of Psychiatry and Biostatistics and Epidemiology found that neuroscience experts without prior experience in lie detection, using fMRI data, were 24 percent more likely to detect deception than professional polygraph examiners reviewing polygraph recordings. In both fMRI and polygraph, participants took a standardized “concealed information” test.*

A polygraph monitors individuals’ electrical skin conductivity, heart rate, and respiration during a series of questions. The method is based on the assumption that incidents of lying are marked by upward or downward spikes in these measurements.

“Polygraph measures reflect complex activity of the peripheral nervous system that is reduced to only a few parameters, while fMRI is looking at thousands of brain clusters with higher resolution in both space and time. While neither type of activity is unique to lying, we expected brain activity to be a more specific marker, and this is what I believe we found,” said the study’s lead author, Daniel D. Langleben, MD, a professor of Psychiatry.

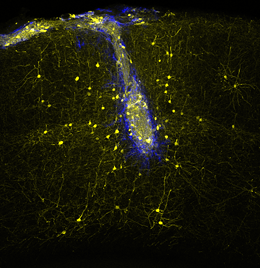

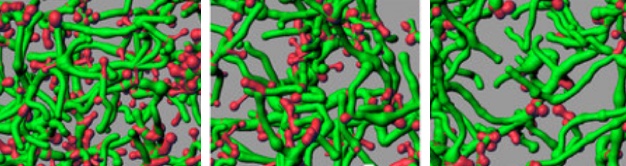

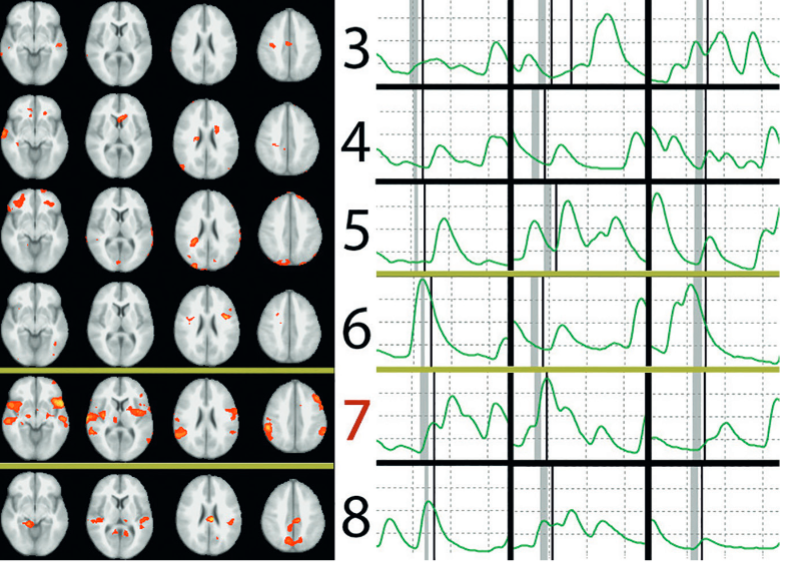

fMRI Correct and Polygraphy Incorrect. (Left) All 3 fMRI raters correctly identified number 7 as the concealed number. (Right) Representative fragments from the electrodermal activity polygraphy channel correspond to responses about the same concealed numbers. The gray bars mark the time of the polygraph examiner’s question (“Did you write the number [X]?”), and the thin black bars immediately following indicate the time of the participant’s “No” response. All 3 polygraph raters incorrectly identified number 6 as the Lie Item. (credit: Daniel D. Langleben et al./Journal of Clinical Psychiatry)

Neither fMRI nor polygraph experts were perfect: the paper also presents an example in which the scenario was reversed. Overall, however, fMRI experts were 24 percent more likely to detect the lie in any given participant.

Combination of technologies was 100 percent correct

Beyond the accuracy comparison, the authors made another important observation. In the 17 cases when polygraph and fMRI agreed on what the concealed number was, they were 100 percent correct. Such high precision of positive determinations could be especially important in United States and British criminal proceedings, where avoiding false convictions takes absolute precedence over catching the guilty, the authors said.

They cautioned that while this does suggest that the two modalities may be complementary if used in sequence, their study was not designed to test combined use of both modalities, and their unexpected observation needs to be confirmed experimentally before any practical conclusions can be drawn.
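The agreement rule can be sketched in a few lines of Python. This is only an illustration, not the authors' procedure: it assumes that each modality's call is the number picked by at least two of its three raters (as the abstract describes), and that a combined call is made only when the two modalities land on the same number. The function names `majority_pick` and `combined_call` are hypothetical.

```python
from collections import Counter

def majority_pick(ratings):
    """Return the number chosen by at least 2 of the raters, else None."""
    number, votes = Counter(ratings).most_common(1)[0]
    return number if votes >= 2 else None

def combined_call(fmri_ratings, polygraph_ratings):
    """Return a number only when both modalities' majority picks agree."""
    fmri = majority_pick(fmri_ratings)
    poly = majority_pick(polygraph_ratings)
    if fmri is not None and fmri == poly:
        return fmri
    return None  # no within-modality majority, or the modalities disagree

# The case in the figure above: all fMRI raters chose 7, all polygraph raters chose 6.
print(combined_call([7, 7, 7], [6, 6, 6]))  # None: the modalities disagree
```

Under this rule the figure's example would yield no combined determination at all, which is the sense in which agreement trades coverage for precision.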

The study was supported by the U.S. Army Research Office, No Lie MRI, Inc., and the University of Pennsylvania Center for MRI and Spectroscopy.

* To compare the two technologies, 28 participants were given the so-called “Concealed Information Test” (CIT). CIT is designed to determine whether a person has specific knowledge by asking carefully constructed questions, some of which have known answers, and looking for responses that are accompanied by spikes in physiological activity. Sometimes referred to as the Guilty Knowledge Test, CIT has been developed and used by polygraph examiners to demonstrate the effectiveness of their methods to subjects prior to the actual polygraph examination.

In the Penn study, a polygraph examiner asked participants to secretly write down a number between three and eight. Next, each person was administered the CIT while either hooked to a polygraph or lying inside an MRI scanner. Each participant took both tests a few hours apart, with the order varied across participants. During both sessions, they were instructed to answer “no” to questions about all the numbers, making one of the six answers a lie. The results were then evaluated separately by three polygraph and three neuroimaging experts and compared to determine which technology was better at detecting the fib.
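To make the arithmetic of the test concrete, here is a minimal Python sketch of the setup just described. It assumes the range three to eight is inclusive, which matches the six answers mentioned above; the names `CANDIDATE_NUMBERS`, `chance_accuracy` and `lie_items` are illustrative and do not come from the study.

```python
from fractions import Fraction

# The six numbers a participant could have written down (3 through 8 inclusive).
CANDIDATE_NUMBERS = list(range(3, 9))

def chance_accuracy(candidates=CANDIDATE_NUMBERS):
    """Probability that a rater guessing at random names the concealed number."""
    return Fraction(1, len(candidates))

def lie_items(concealed_number, candidates=CANDIDATE_NUMBERS):
    """Questions whose "no" answer is a lie: only the concealed number itself."""
    return [n for n in candidates if n == concealed_number]

print(len(CANDIDATE_NUMBERS))  # 6
print(chance_accuracy())       # 1/6
print(lie_items(7))            # [7]
```

A rater guessing blindly would therefore be right about one time in six, which is the baseline any detection method has to beat.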

Abstract of Polygraphy and Functional Magnetic Resonance Imaging in Lie Detection: A Controlled Blind Comparison Using the Concealed Information Test

Objective: Intentional deception is a common act that often has detrimental social, legal, and clinical implications. In the last decade, brain activation patterns associated with deception have been mapped with functional magnetic resonance imaging (fMRI), significantly expanding our theoretical understanding of the phenomenon. However, despite substantial criticism, polygraphy remains the only biological method of lie detection in practical use today. We conducted a blind, prospective, and controlled within-subjects study to compare the accuracy of fMRI and polygraphy in the detection of concealed information. Data were collected between July 2008 and August 2009.

Method: Participants (N = 28) secretly wrote down a number between 3 and 8 on a slip of paper and were questioned about what number they wrote during consecutive and counterbalanced fMRI and polygraphy sessions. The Concealed Information Test (CIT) paradigm was used to evoke deceptive responses about the concealed number. Each participant’s preprocessed fMRI images and 5-channel polygraph data were independently evaluated by 3 fMRI and 3 polygraph experts, who made an independent determination of the number the participant wrote down and concealed.

Results: Using a logistic regression, we found that fMRI experts were 24% more likely (relative risk = 1.24, P < .001) to detect the concealed number than the polygraphy experts. Incidentally, when 2 out of 3 raters in each modality agreed on a number (N = 17), the combined accuracy was 100%.
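For readers unfamiliar with the term, a relative risk is the ratio of two proportions. The Python sketch below shows that plain definition only. It is not the logistic regression the authors used, and the counts in it are placeholders chosen so that the ratio comes out to 1.24; they are not the study's data.

```python
def relative_risk(hits_a, total_a, hits_b, total_b):
    """Ratio of the success proportion in group A to that in group B."""
    if total_a <= 0 or total_b <= 0:
        raise ValueError("totals must be positive")
    if hits_b == 0:
        raise ValueError("group B has no hits, so the ratio is undefined")
    return (hits_a / total_a) / (hits_b / total_b)

# Hypothetical counts, NOT taken from the paper: 62 of 100 vs. 50 of 100 correct.
rr = relative_risk(62, 100, 50, 100)
print(f"relative risk = {rr:.2f}")  # relative risk = 1.24
```

A value of 1.24 means group A succeeds 24 percent more often than group B in relative terms; it does not mean a 24-percentage-point gap in accuracy.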

Conclusions: These data justify further evaluation of fMRI as a potential alternative to polygraphy. The sequential or concurrent use of psychophysiology and neuroimaging in lie detection also deserves new consideration.