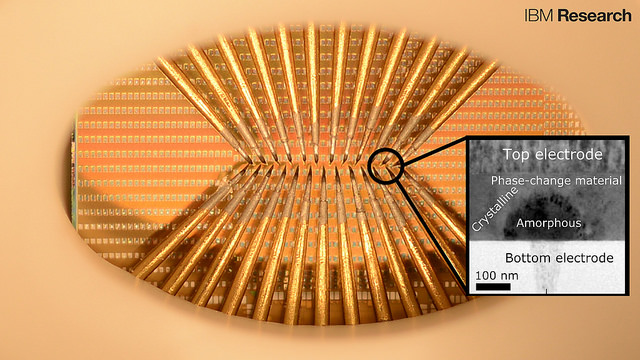

A prototype chip with large arrays of phase-change devices that store the state of artificial neuronal populations in their atomic configuration. In this prototype, the devices are accessed via an array of probes to allow for characterization and testing. The tiny squares are contact pads used to access the nanometer-scale phase-change cells (inset). Each set of probes can access a population of 100 cells. A single chip holds thousands to millions of these cells; in this photograph, they are accessed by the sharp needles of a probe card. (credit: IBM Research)

Scientists at IBM Research in Zurich have developed artificial neurons that emulate how neurons spike (fire). The goal is to create energy-efficient, high-speed, ultra-dense integrated neuromorphic (brain-like) technologies for applications in cognitive computing, such as unsupervised learning for detecting and analyzing patterns.

Applications could include internet-of-things sensors that collect and analyze volumes of weather data for faster forecasts, as well as systems that detect patterns in financial transactions.

The results of this research appeared today (Aug. 3) as a cover story in the journal Nature Nanotechnology.

Emulating neuron spiking

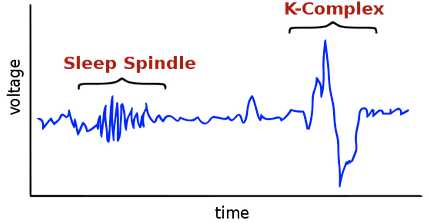

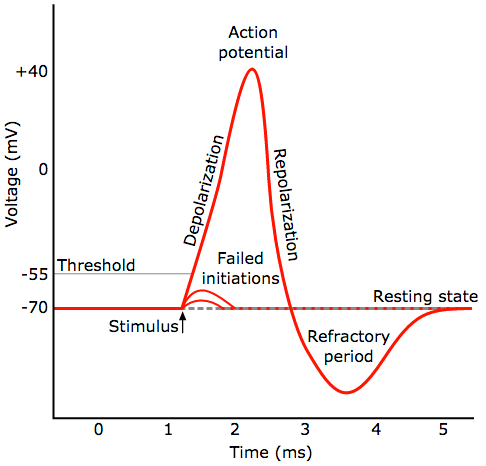

General pattern of a neural spike (action potential). A neuron fires (generates a rapid action potential, or voltage spike) when triggered by a stimulus, such as a signal from a synapse. (credit: Chris 73/Diberri CC)

IBM’s new neuron-like spiking mechanism is based on a recent IBM breakthrough in phase-change materials. Phase-change materials are already used for storing and processing digital data, for example in re-writable Blu-ray discs. IBM’s new phase-change materials are used instead for storing and processing analog data, like the synapses and neurons in our biological brains.

The new phase-change materials also overcome a problem in conventional computing, where separate memory and logic units slow down computation. These functions are combined in the new artificial neurons, just as they are in a biological neuron.

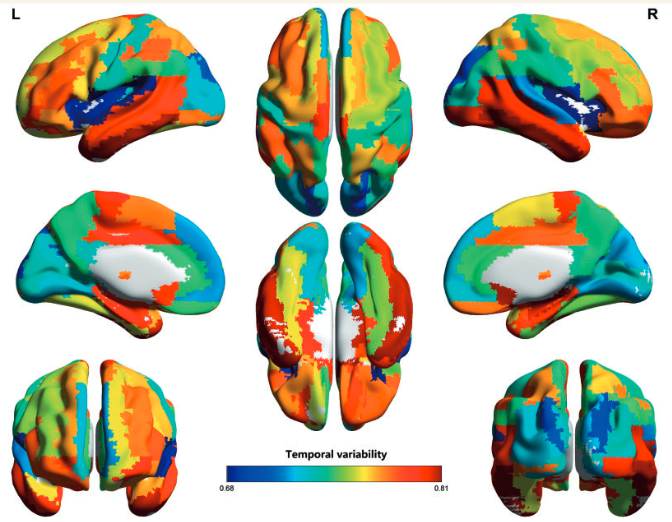

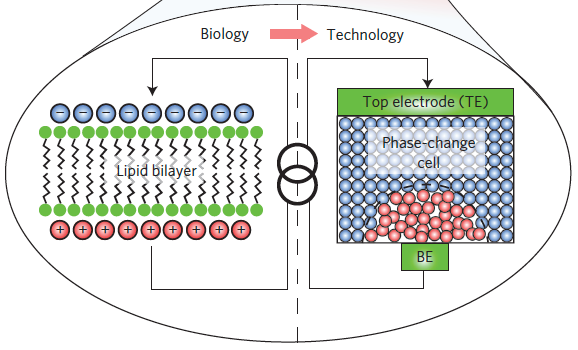

In biological neurons, a thin lipid-bilayer membrane separates the electrical charges inside the cell from those outside it. The membrane potential is altered by the arrival of excitatory and inhibitory postsynaptic potentials through the dendrites of the neuron, and upon sufficient excitation of the neuron (a phase change), an action potential, or spike, is generated. IBM’s new germanium-antimony-tellurium (GeSbTe or GST) phase-change material emulates this process. It has two stable states: an amorphous one (without a clearly defined structure) and a crystalline one (with a structure). (credit: Tomas Tuma et al./Nature Nanotechnology)
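The integrate-and-fire behavior described in this caption can be illustrated with a minimal Python sketch. It is a toy model, not IBM's implementation and not a simulation of GST device physics: the class name, the `threshold` and `reset_jitter` parameters, and the uniform random reset are all assumptions chosen for illustration. The internal `state` stands in for the membrane potential, incoming values stand in for postsynaptic potentials, and the random reset loosely mimics the variability that appears each time the cell is returned to its starting condition.

```python
import random


class StochasticIntegrateFireNeuron:
    """Toy integrate-and-fire neuron (hypothetical; not a GST device model)."""

    def __init__(self, threshold=1.0, reset_jitter=0.1, seed=None):
        self.threshold = threshold        # state level at which the neuron fires
        self.reset_jitter = reset_jitter  # spread of the random post-spike state
        self.rng = random.Random(seed)
        self.state = 0.0
        self._reset()

    def _reset(self):
        # Assumption: after each spike the state lands at a slightly different
        # starting point, which makes later spike times vary.
        self.state = self.rng.uniform(0.0, self.reset_jitter)

    def step(self, psp):
        """Integrate one postsynaptic potential; return True if the neuron fires."""
        # Excitatory inputs (psp > 0) raise the state, inhibitory ones lower it.
        self.state = max(0.0, self.state + psp)
        if self.state >= self.threshold:
            self._reset()
            return True
        return False


def count_spikes(neuron, inputs):
    """Feed a sequence of inputs to the neuron and count how often it fires."""
    return sum(neuron.step(x) for x in inputs)
```

Feeding a constant excitatory input, for example `count_spikes(StochasticIntegrateFireNeuron(seed=1), [0.1] * 1000)`, produces a roughly regular spike train whose exact timing depends on the random resets.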

Alternative to von-Neumann-based algorithms

Previous artificial neurons were built using CMOS-based circuits, the standard transistor technology we have in our computers. The new phase-change technology can reproduce similar functionality at reduced power consumption. The artificial neurons also have the advantage of functioning at nanometer-scale dimensions, and they feature native stochasticity (behavior based on random variables, simulating neurons).

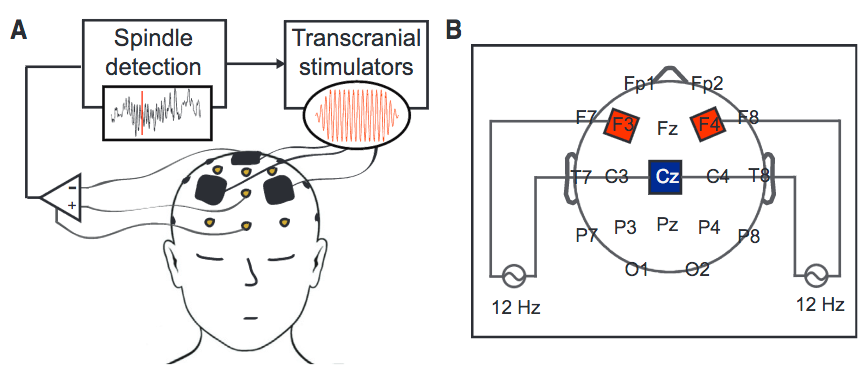

“Populations of stochastic phase-change neurons, combined with other nanoscale computational elements such as artificial synapses, could be a key enabler for the creation of a new generation of extremely dense neuromorphic computing systems,” said Tomas Tuma, a co-author of the paper.

“The relatively complex computational tasks, such as Bayesian inference, that stochastic neuronal populations can perform with collocated processing and storage render them attractive as a possible alternative to von-Neumann-based algorithms in future cognitive computers,” the IBM scientists state in the paper.

IBM scientists have organized hundreds of these artificial neurons into populations and used them to represent fast and complex signals. These artificial neurons have been shown to sustain billions of switching cycles, which would correspond to multiple years of operation at an update frequency of 100 Hz. The energy required for each neuron update was less than five picojoules and the average power less than 120 microwatts; for comparison, a 60-watt lightbulb draws 60 million microwatts.
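The endurance and power figures above are easy to sanity-check with a few lines of Python. The sketch below is only back-of-the-envelope arithmetic: the article says "billions" of cycles without giving an exact count, so the 10 billion used here is an illustrative assumption, and the function names are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # 31,557,600 seconds


def years_of_operation(cycles, update_hz=100.0):
    """Years needed to use up `cycles` switching cycles at a given update rate."""
    return cycles / update_hz / SECONDS_PER_YEAR


def cycles_for_years(years, update_hz=100.0):
    """Switching cycles consumed by `years` of continuous operation."""
    return years * SECONDS_PER_YEAR * update_hz


# Assumption: 10 billion cycles, chosen only as an example of "billions".
example_years = years_of_operation(10e9)   # roughly 3.2 years at 100 Hz
cycles_per_year = cycles_for_years(1.0)    # roughly 3.16 billion cycles

# Power comparison from the article: 60 W bulb vs. 120 microwatts.
bulb_watts = 60.0
neuron_watts = 120e-6
power_ratio = bulb_watts / neuron_watts    # roughly 500,000
```

At 100 Hz, one year of continuous operation uses a little over 3 billion cycles, so "multiple years" implies an endurance of several billion cycles or more. The lightbulb draws about 500,000 times the quoted average power.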

IBM Research | All-memristive neuromorphic computing with level-tuned neurons

Abstract of Stochastic phase-change neurons

Artificial neuromorphic systems based on populations of spiking neurons are an indispensable tool in understanding the human brain and in constructing neuromimetic computational systems. To reach areal and power efficiencies comparable to those seen in biological systems, electroionics-based and phase-change-based memristive devices have been explored as nanoscale counterparts of synapses. However, progress on scalable realizations of neurons has so far been limited. Here, we show that chalcogenide-based phase-change materials can be used to create an artificial neuron in which the membrane potential is represented by the phase configuration of the nanoscale phase-change device. By exploiting the physics of reversible amorphous-to-crystal phase transitions, we show that the temporal integration of postsynaptic potentials can be achieved on a nanosecond timescale. Moreover, we show that this is inherently stochastic because of the melt-quench-induced reconfiguration of the atomic structure occurring when the neuron is reset. We demonstrate the use of these phase-change neurons, and their populations, in the detection of temporal correlations in parallel data streams and in sub-Nyquist representation of high-bandwidth signals.
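The idea of a population representing a signal that no single neuron could follow can be illustrated with a toy Python simulation. This is a hedged sketch and not the method used in the paper: the neuron model, the `gain` and `reset_jitter` parameters, the sine-wave test signal, and the function names are all assumptions. Each simulated neuron fires only about once per signal period (fewer than the two samples per period a single regular sampler would need), yet the summed spike count of the out-of-phase population tracks the signal.

```python
import math
import random


def simulate_population(signal, n_neurons=2000, gain=0.05, reset_jitter=0.2, seed=0):
    """Return per-step spike counts for a population of toy stochastic neurons."""
    rng = random.Random(seed)
    # Random initial states put the neurons out of phase with each other.
    states = [rng.random() for _ in range(n_neurons)]
    counts = []
    for s in signal:
        fired = 0
        for i in range(n_neurons):
            states[i] += gain * s          # integrate the shared input
            if states[i] >= 1.0:           # threshold crossing: spike
                states[i] = rng.uniform(0.0, reset_jitter)  # stochastic reset
                fired += 1
        counts.append(fired)
    return counts


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


PERIOD = 40  # signal period in time steps (illustrative)
signal = [0.5 + 0.4 * math.sin(2 * math.pi * t / PERIOD) for t in range(400)]
counts = simulate_population(signal)

# Average number of spikes each neuron emits per signal period.
spikes_per_neuron_per_period = sum(counts) / 2000 / (len(signal) / PERIOD)
# How closely the population spike count follows the input signal.
tracking = pearson(signal, counts)
```

In this sketch the randomness of the initial states and resets is what keeps the neurons from synchronizing; if every neuron started and reset identically, they would all fire on the same steps and the population would carry no more information than one neuron.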