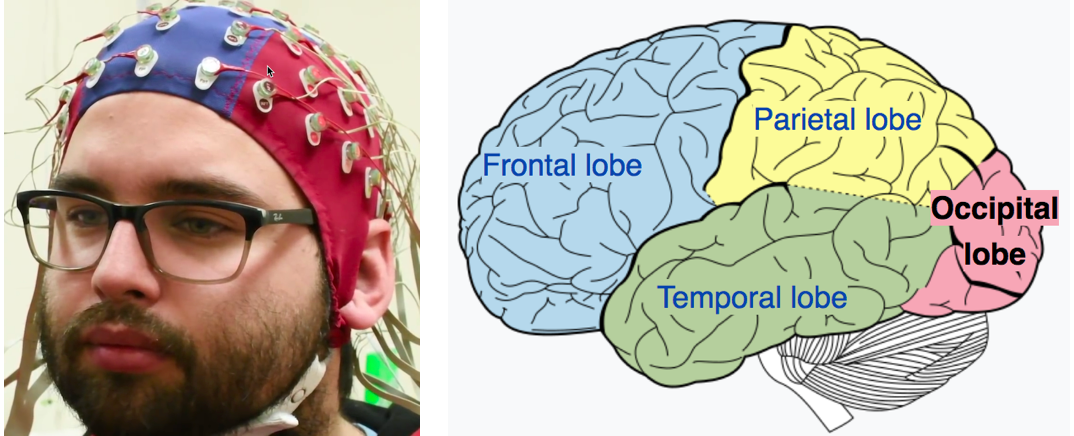

MIT Media Lab researcher Arnav Kapur demonstrates the AlterEgo device. It picks up neuromuscular facial signals generated by his thoughts; a bone-conduction headphone lets him privately hear responses from his personal devices. (credit: Lorrie Lejeune/MIT)

MIT researchers have invented a system that allows someone to communicate silently and privately with a computer or the internet simply by saying words in their head, without any observable facial muscle movement.

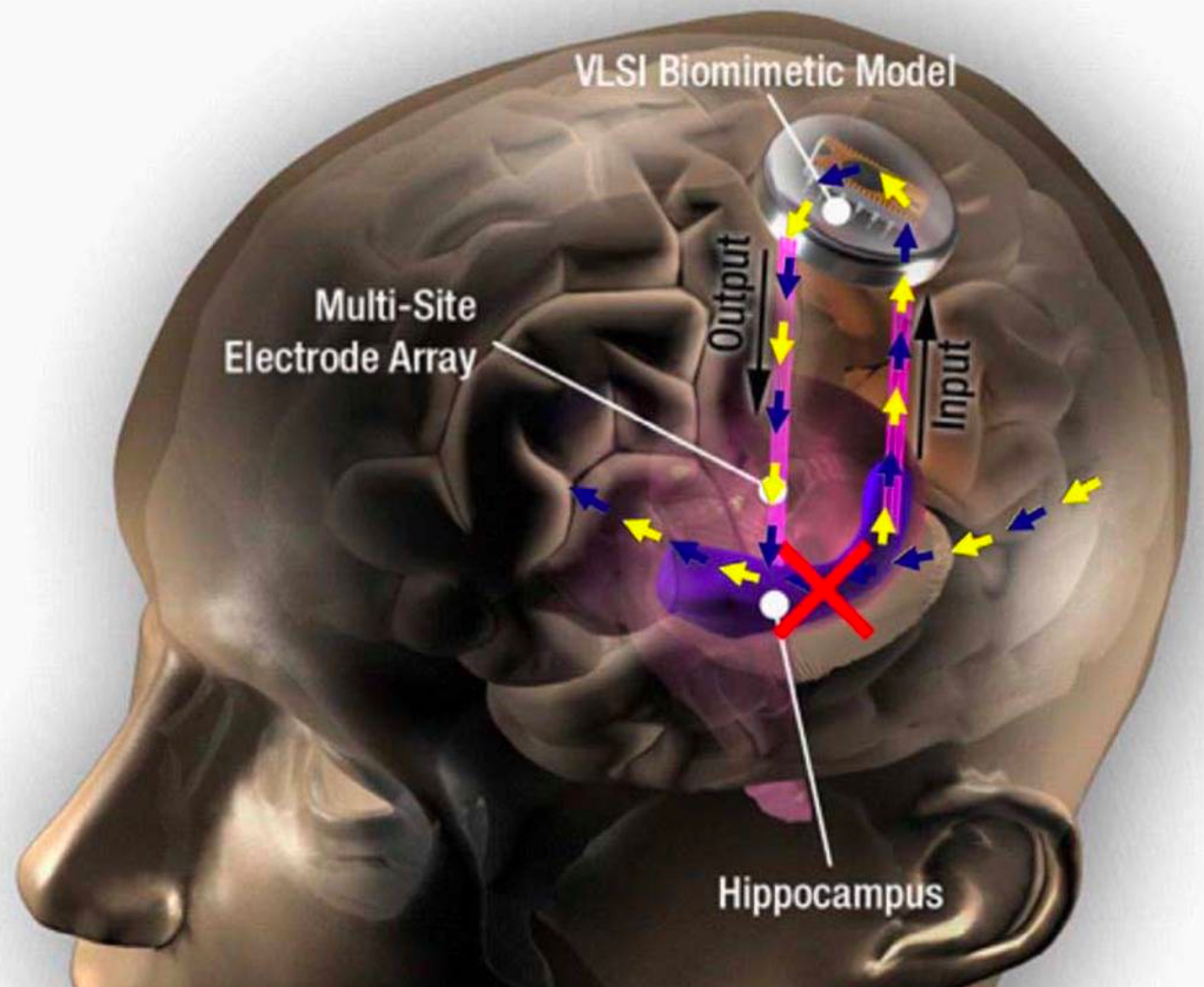

The AlterEgo system consists of a wearable device with electrodes that pick up otherwise undetectable neuromuscular subvocalizations — saying words “in your head” in natural language. The signals are fed to a neural network that is trained to identify subvocalized words from these signals. Bone-conduction headphones also transmit vibrations through the bones of the face to the inner ear to convey information to the user — privately and without interrupting a conversation. The device connects wirelessly to any external computing device via Bluetooth.
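The recognition path described above has three stages: electrode signals in, a trained classifier in the middle, and a recognized word out to a paired device. The toy sketch below illustrates that flow under heavy assumptions. The "signals" are synthetic sine waves, the four-word vocabulary and seven-channel count are hypothetical, the per-channel RMS feature is hand-picked, and a nearest-centroid classifier stands in for the neural network the researchers actually trained. It is not the MIT system and says nothing about its real accuracy.

```python
import math
import random

# Hypothetical vocabulary and channel count, chosen only for illustration.
VOCAB = ["yes", "no", "up", "down"]
N_CHANNELS = 7


def synth_signal(word_idx, rng, n_samples=50):
    """Fake multichannel signal: each word gets a distinct per-channel amplitude pattern plus noise."""
    signal = []
    for ch in range(N_CHANNELS):
        amp = 1.0 + ((word_idx + ch) % len(VOCAB))
        signal.append([amp * math.sin(0.3 * t + ch) + rng.gauss(0, 0.2) for t in range(n_samples)])
    return signal


def features(signal):
    """Root-mean-square energy of each channel (an assumed, deliberately simple feature)."""
    return [math.sqrt(sum(x * x for x in ch) / len(ch)) for ch in signal]


def train_centroids(examples):
    """examples: list of (feature_vector, label). Returns a dict mapping label -> mean feature vector."""
    sums, counts = {}, {}
    for feats, label in examples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, v in enumerate(feats):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lbl: [v / counts[lbl] for v in acc] for lbl, acc in sums.items()}


def classify(centroids, feats):
    """Stand-in for the neural network: pick the label whose centroid is nearest (Euclidean)."""
    return min(centroids, key=lambda lbl: math.dist(centroids[lbl], feats))


# Usage: train on synthetic examples, then measure word accuracy on fresh ones.
rng = random.Random(0)
train = [(features(synth_signal(i, rng)), w) for i, w in enumerate(VOCAB) for _ in range(20)]
centroids = train_centroids(train)
test = [(features(synth_signal(i, rng)), w) for i, w in enumerate(VOCAB) for _ in range(10)]
accuracy = sum(classify(centroids, f) == w for f, w in test) / len(test)
print(f"toy word accuracy: {accuracy:.2f}")
```

In a real system the recognized word would then be sent over Bluetooth to the paired computer, and any reply would be rendered as audio through the bone-conduction output; those stages are omitted here.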

A silent, discreet, bidirectional conversation with machines. “Our idea was: Could we have a computing platform that’s more internal, that melds human and machine in some ways and that feels like an internal extension of our own cognition?” says Arnav Kapur, a graduate student at the MIT Media Lab who led the development of the new system. Kapur is first author of an open-access paper on the research, presented in March at IUI ’18, the 23rd International Conference on Intelligent User Interfaces.

In one of the researchers’ experiments, subjects used the system to silently report opponents’ moves in a chess game and silently receive recommended moves from a chess-playing computer program. In another, subjects were able to undetectably answer difficult questions, such as computing the square roots of large numbers or recalling obscure facts. The researchers achieved a median word accuracy of 92%, a figure that is expected to improve. “I think we’ll achieve full conversation someday,” Kapur said.

Non-disruptive. “We basically can’t live without our cellphones, our digital devices,” says Pattie Maes, a professor of media arts and sciences and Kapur’s thesis advisor. “But at the moment, the use of those devices is very disruptive. If I want to look something up that’s relevant to a conversation I’m having, I have to find my phone and type in the passcode and open an app and type in some search keyword, and the whole thing requires that I completely shift attention from my environment and the people that I’m with to the phone itself.

“So, my students and I have for a very long time been experimenting with new form factors and new types of experience that enable people to still benefit from all the wonderful knowledge and services that these devices give us, but do it in a way that lets them remain in the present.”*

Even the tiniest signal to her jaw or larynx might be interpreted as a command. Keeping one hand on the sensitivity knob, she concentrated to erase mistakes the machine kept interpreting as nascent words.

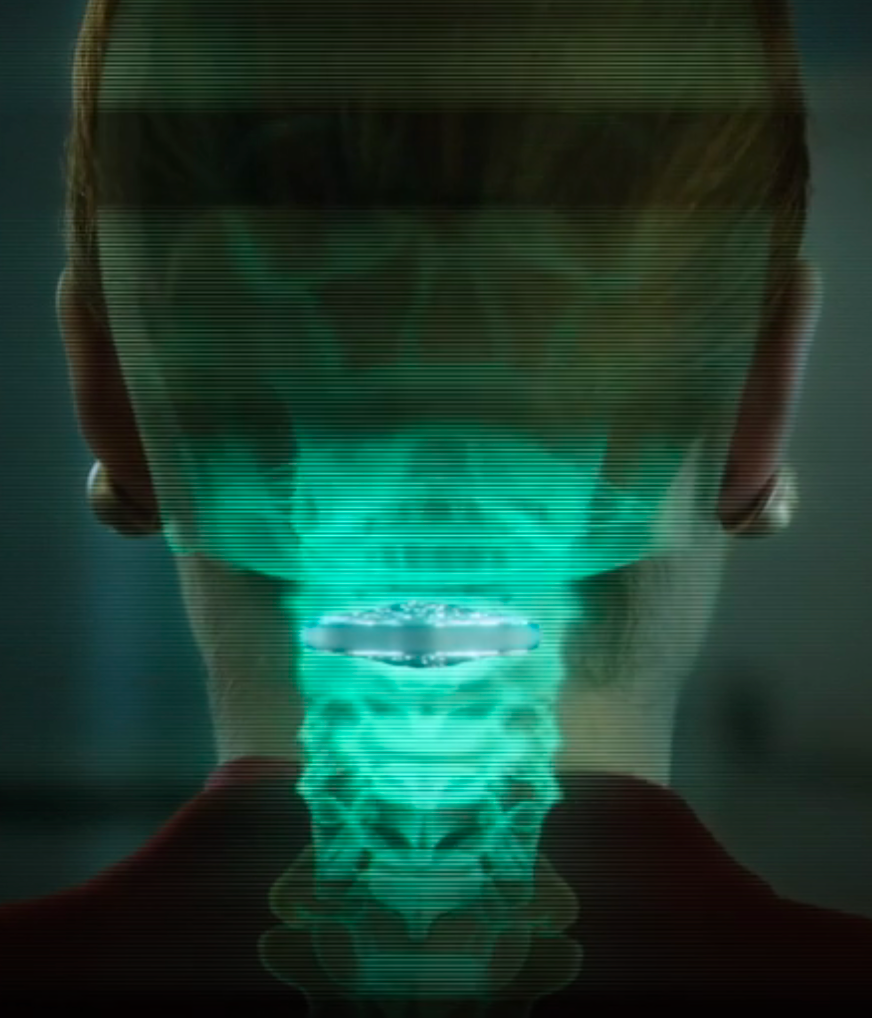

Few people used subvocals, for the same reason few ever became street jugglers. Not many could operate the delicate systems without tipping into chaos. Any normal mind kept intruding with apparent irrelevancies, many ascending to the level of muttered or almost-spoken words the outer consciousness hardly noticed, but which the device manifested visibly and in sound.

Tunes that pop into your head… stray associations you generally ignore… memories that wink in and out… impulses to action… often rising to tickle the larynx, the tongue, stopping just short of sound…

As she thought each of those words, lines of text appeared on the right, as if a stenographer were taking dictation from her subvocalized thoughts. Meanwhile, at the left-hand periphery, an extrapolation subroutine crafted little simulations. A tiny man with a violin. A face that smiled and closed one eye… It was well this device only read the outermost, superficial nervous activity, associated with the speech centers.

When invented, the sub-vocal had been hailed as a boon to pilots — until high-performance jets began plowing into the ground. We experience ten thousand impulses for every one we allow to become action. Accelerating the choice and decision process did more than speed reaction time. It also shortcut judgment.

Even as a computer input device, it was too sensitive for most people. Few wanted extra speed if it also meant the slightest sub-surface reaction could become embarrassingly real, in amplified speech or writing.

If they ever really developed a true brain to computer interface, the chaos would be even worse.

— From EARTH (1989) chapter 35 by David Brin (with permission)

IoT control. In the conference paper, the researchers suggest that an “internet of things” (IoT) controller “could enable a user to control home appliances and devices (switch on/off home lighting, television control, HVAC systems etc.) through internal speech, without any observable action.” Or schedule an Uber pickup.
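At minimum, a controller like the one the paper suggests has to map each recognized phrase to a device action. The sketch below assumes a hypothetical command vocabulary and a hypothetical in-memory `Home` object standing in for real appliances; it does not reflect any interface described in the paper. A real deployment would call an actual home-automation or ride-hailing API instead of setting attributes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Home:
    """Hypothetical in-memory stand-in for connected home appliances."""
    lights_on: bool = False
    tv_on: bool = False
    thermostat_c: float = 21.0
    log: List[str] = field(default_factory=list)


def _set(attr: str, value) -> Callable[[Home], None]:
    """Build an action that sets one attribute of the Home object."""
    def action(home: Home) -> None:
        setattr(home, attr, value)
    return action


def _nudge_thermostat(delta: float) -> Callable[[Home], None]:
    """Build an action that shifts the thermostat setpoint by delta degrees Celsius."""
    def action(home: Home) -> None:
        home.thermostat_c += delta
    return action


# Hypothetical phrases a silent-speech recognizer might emit.
COMMANDS: Dict[str, Callable[[Home], None]] = {
    "lights on": _set("lights_on", True),
    "lights off": _set("lights_on", False),
    "tv on": _set("tv_on", True),
    "tv off": _set("tv_on", False),
    "warmer": _nudge_thermostat(+1.0),
    "cooler": _nudge_thermostat(-1.0),
}


def dispatch(home: Home, phrase: str) -> bool:
    """Run the action for an exact-match phrase; log and ignore anything else."""
    action = COMMANDS.get(phrase.strip().lower())
    if action is None:
        home.log.append(f"ignored: {phrase!r}")
        return False
    action(home)
    home.log.append(f"ran: {phrase!r}")
    return True


home = Home()
for phrase in ["lights on", "warmer", "open the pod bay doors"]:
    dispatch(home, phrase)
print(home.lights_on, home.thermostat_c, home.log)
```

Ignoring unrecognized phrases rather than snapping them to the closest command is an assumed, conservative choice: since word recognition is imperfect, a stray or misheard word should do nothing rather than switch off the heating.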

Peripheral devices could also be directly interfaced with the system. “For instance, lapel cameras and smart glasses could directly communicate with the device and provide contextual information to and from the device. … The device also augments how people share and converse. In a meeting, the device could be used as a back-channel to silently communicate with another person.”

Applications of the technology could also include high-noise environments, like the flight deck of an aircraft carrier, or even places with a lot of machinery, like a power plant or a printing press, suggests Thad Starner, a professor in Georgia Tech’s College of Computing. “There’s a lot of places where it’s not a noisy environment but a silent environment. A lot of time, special-ops folks have hand gestures, but you can’t always see those. Wouldn’t it be great to have silent-speech for communication between these folks? The last one is people who have disabilities where they can’t vocalize normally.”

* Or users could, conceivably, simply zone out during boring meetings, checking texts, email messages, and Twitter (all converted to voice), or even replying by mentally selecting “smart reply”-style options.