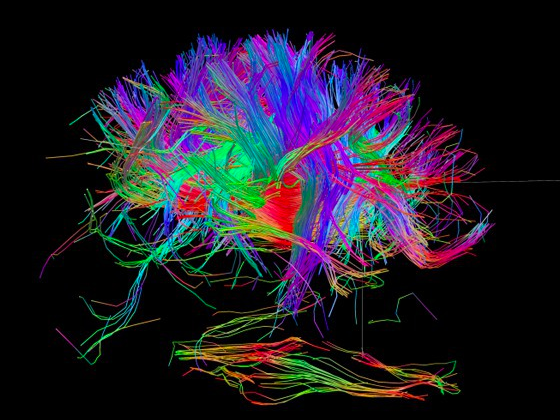

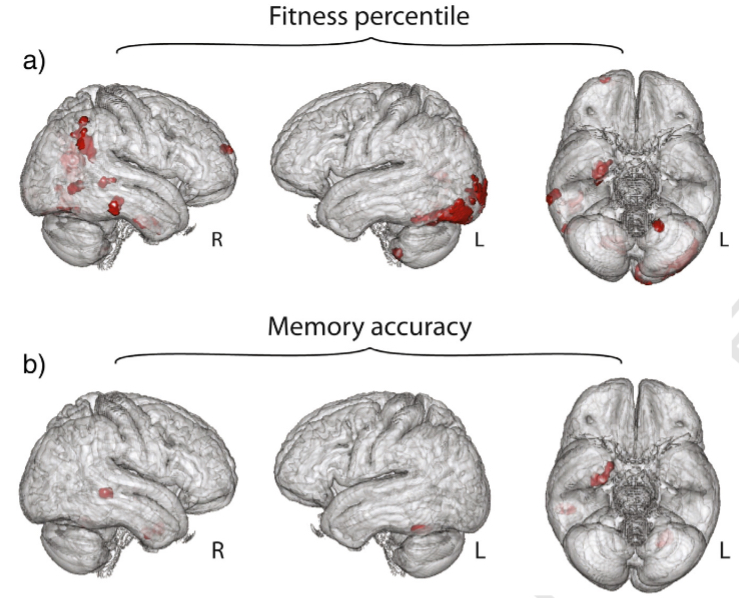

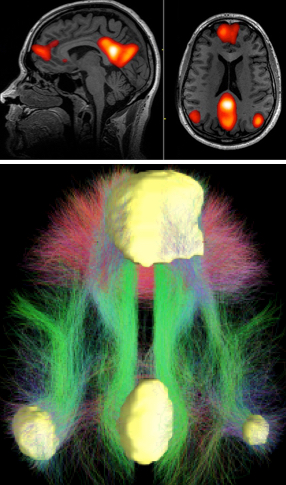

The brain’s default mode network (DMN) was the focus of the cognitive-stimulation experiment. Top: fMRI scans showing DMN regions; bottom: a diagram of typical connectivity between these regions. (credit: John Graner/Walter Reed National Military Medical Center, Andreas Horn et al./NeuroImage, Abigail G. Garrity/Am. J. Psych.)

Neuroscientists in Italy and the U.K. have developed cognitive-stimulation exercises and tested them in a month-long experiment with healthy aging adults. The exercises were based on studies of the network of brain regions that is active in the resting state, known as the “default mode network”* (DMN).

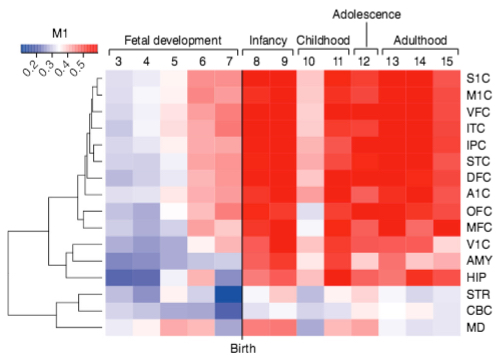

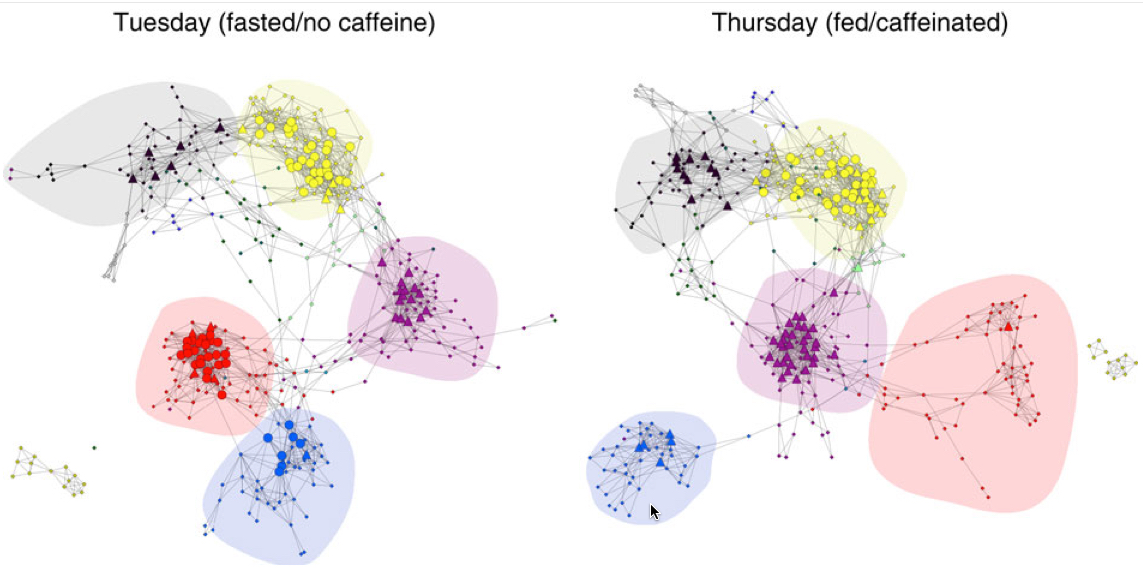

In a paper published in Brain Research Bulletin, the researchers explain that in aging (and at a pathological level in patients with Alzheimer’s disease, or AD), the posterior (back) region of the DMN is underactive while the anterior (front) region is overactive. In addition, the two regions become poorly connected with age. So as a proof of concept, working with screened and tested, mentally healthy adults over age 50, the researchers designed an experiment to improve functional connectivity within the posterior region and between the anterior and posterior regions.

Intensive mental exercises

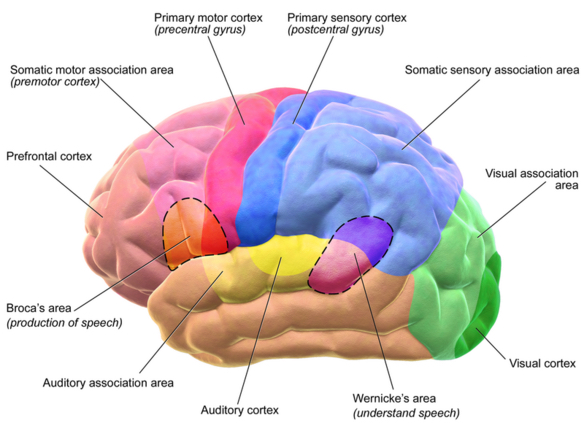

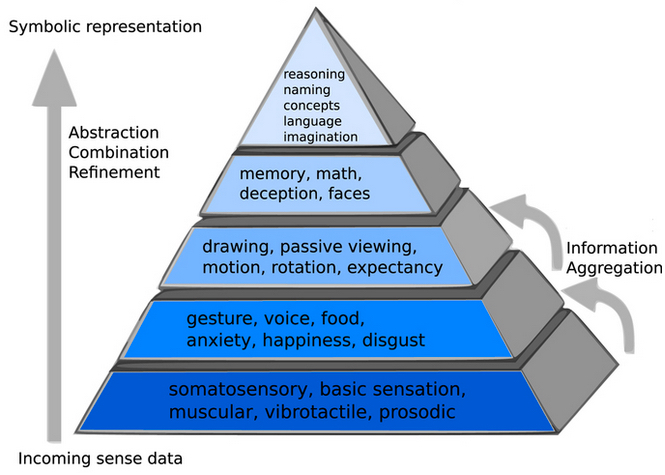

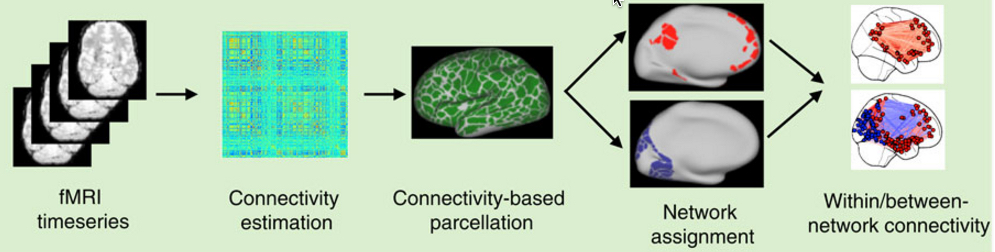

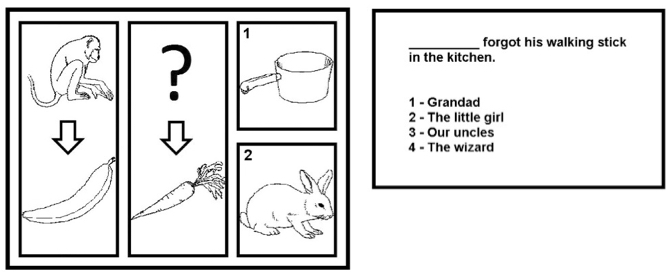

The intensive cognitive exercises were conducted over a period of up to 42 days using E-Prime 2.0 software from Psychology Software Tools. The computer-based exercises were chosen to be in the domains of “semantic processing, memory retrieval, logical reasoning, and executive processing,” the neuroscientists said, with “simultaneous activity in widespread neocortical, and mediotemporal and limbic areas” (the posterior component of the DMN). “Further exercises were then added to foster functional connectivity between anterior and posterior regions.”

Sample trials from the “sequence completion” (left) and “sentence completion” (right) tasks in the exercises. More difficult sequence-completion trials were characterized by abstract or indirect inter-image relations (e.g., “bear” and “bee” on the left, “cat” in the upper central position, “1: milk” and “2: cow” as the alternative answers). (credit: Matteo De Marco et al./Brain Research Bulletin and Psychology Software Tools)

An MRI protocol and a battery of neuropsychological tests were administered at baseline and at the end of the study.

The exercises were followed by fMRI exams. “Significant associations were found between task performance and gray-matter volume of multiple DMN core regions,” the authors note. “Functional regulation of resting-state connectivity within the posterior component of the DMN was found, but no change in connectivity between the posterior and the anterior components. … These findings suggest that the program devised may have a preventive and therapeutic role in association with early AD-type neurodegeneration.”

The researchers are affiliated with IRCCS Fondazione Ospedale and University of Modena in Italy and University of Sheffield in the U.K.

* “The default mode network is most commonly shown to be active when a person is not focused on the outside world and the brain is at wakeful rest, such as during daydreaming and mind-wandering, but it is also active when the individual is thinking about others, thinking about themselves, remembering the past, and planning for the future. The network activates “by default” when a person is not involved in a task. … The DMN can also be defined by the areas deactivated during external directed tasks compared to rest.” — Wikipedia 1/9/2016

Abstract of Cognitive stimulation of the default-mode network modulates functional connectivity in healthy aging

A cognitive-stimulation tool was created to regulate functional connectivity within the brain Default-Mode Network (DMN). Computerized exercises were designed based on the hypothesis that repeated task-dependent coactivation of multiple DMN regions would translate into regulation of resting-state network connectivity.

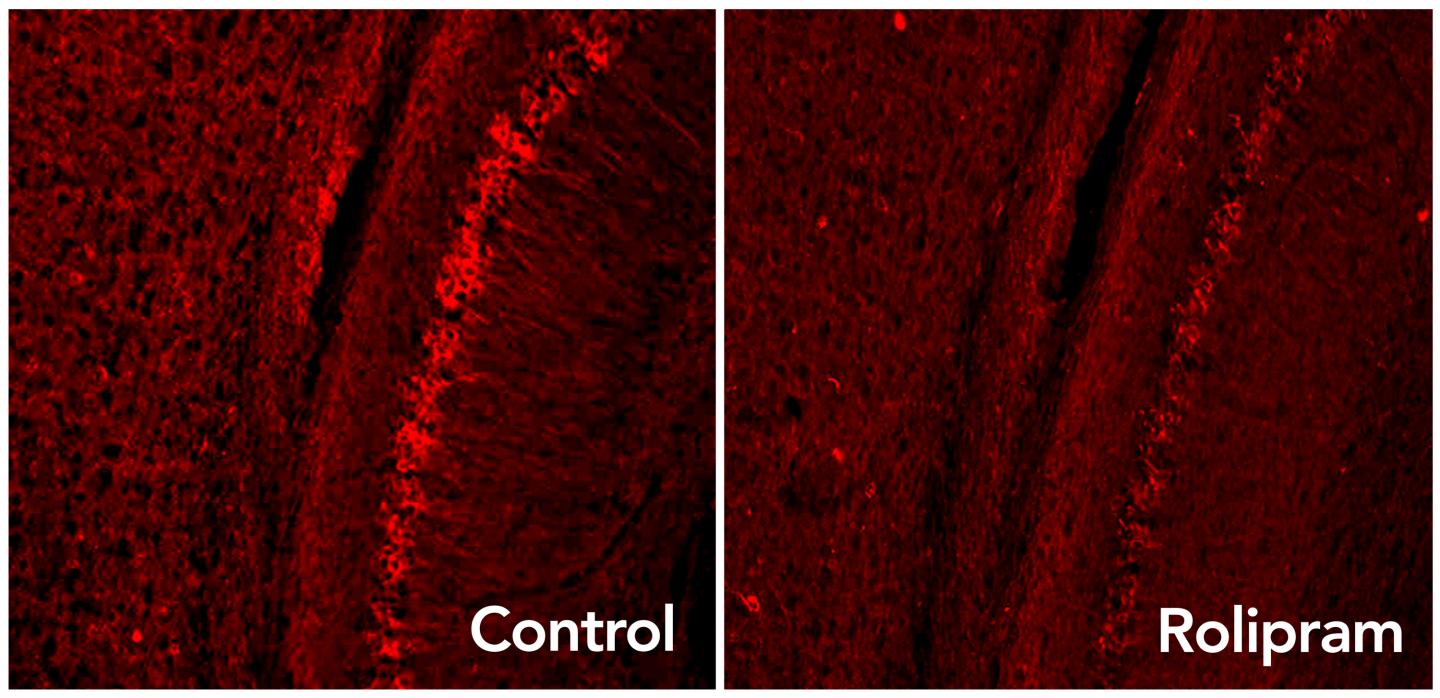

Forty seniors (mean age: 65.90 years; SD: 8.53) were recruited and assigned either to an experimental group (n = 21) who received one month of intensive cognitive stimulation, or to a control group (n = 19) who maintained a regime of daily-life activities explicitly focused on social interactions. An MRI protocol and a battery of neuropsychological tests were administered at baseline and at the end of the study. Changes in the DMN (measured via functional connectivity of posterior-cingulate seeds), in brain volumes, and in cognitive performance were measured with mixed models assessing group-by-timepoint interactions. Moreover, regression models were run to test gray-matter correlates of the various stimulation tasks.

Significant associations were found between task performance and gray-matter volume of multiple DMN core regions. Training-dependent up-regulation of functional connectivity was found in the posterior DMN component. This interaction was driven by a pattern of increased connectivity in the training group, while little or no up-regulation was seen in the control group. Minimal changes in brain volumes were found, but there was no change in cognitive performance.

The training-dependent regulation of functional connectivity within the posterior DMN component suggests that this stimulation program might exert a beneficial impact in the prevention and treatment of early AD neurodegeneration, in which this neurofunctional pathway is progressively affected by the disease.
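For readers unfamiliar with the two measurements named in the abstract, the sketch below illustrates the general idea in Python. It is not the authors' analysis pipeline: the study used mixed models on real fMRI data, whereas this example substitutes a plain Pearson correlation for seed-based functional connectivity and a difference of mean change scores for the group-by-timepoint interaction (the point estimate of that interaction in a simple two-group, two-timepoint design, without standard errors). All numbers and the function names `pearson_r` and `interaction_estimate` are made up for illustration.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length time series."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def interaction_estimate(training, control):
    """Point estimate of a group-by-timepoint interaction.

    Each argument is a list of (baseline, follow_up) pairs, one per
    participant. The result is the mean change in the training group
    minus the mean change in the control group.
    """
    change_training = mean(post - pre for pre, post in training)
    change_control = mean(post - pre for pre, post in control)
    return change_training - change_control


# Hypothetical toy signals, NOT data from the study: a "seed" region
# (e.g., posterior cingulate) and one other region over six time points.
seed = [0.1, 0.4, 0.3, 0.8, 0.6, 0.9]
target = [0.2, 0.5, 0.2, 0.7, 0.7, 1.0]
connectivity = pearson_r(seed, target)

# Hypothetical (baseline, follow-up) connectivity values per participant.
training_group = [(0.30, 0.45), (0.25, 0.35), (0.40, 0.50)]
control_group = [(0.32, 0.33), (0.28, 0.27), (0.38, 0.40)]
effect = interaction_estimate(training_group, control_group)

print(f"seed-to-target connectivity: {connectivity:.2f}")
print(f"interaction estimate: {effect:.2f}")
```

A positive interaction estimate in this toy setup would mean that connectivity rose more in the training group than in the control group, which is the pattern the abstract reports for the posterior DMN component.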