
People over age 50 are scoring better on cognitive tests than people of the same age did in the past, a trend that could be linked to rising education levels and increased use of technology in our daily lives, according to a new open-access study published in the journal PLOS ONE. But the study also showed that the average physical health of the older population has declined.

The study, by researchers at the International Institute for Applied Systems Analysis (IIASA) in Austria, relied on representative survey data from Germany that measured cognitive processing speed, physical fitness, and mental health in 2006 and again in 2012.

It found that cognitive test scores increased significantly over the six-year period (for men and women, and at all ages from 50 to 90), while physical functioning and mental health declined. The drop in physical health was steepest among low-educated men aged 50–64. The survey data were representative of the non-institutionalized German population who were mentally and physically able to take part in the tests.

Cognitive function normally begins to decline with age, and it is one of the key characteristics demographers use to understand why some population groups age more successfully than others, according to IIASA population experts.

Changing lifestyles

Previous studies have found elderly people to be in increasingly good health ("younger" in many ways than previous generations at the same chronological age), with physical and cognitive measures all improving over time. The new study is the first to show cognitive and physical function moving in opposite directions over time.

“We think that these divergent results can be explained by changing lifestyles,” says IIASA World Population Program researcher Nadia Steiber, author of the PLOS ONE study. “Life has become cognitively more demanding, with increasing use of communication and information technology also by older people, and people working longer in intellectually demanding jobs. At the same time, we are seeing a decline in physical activity and rising levels of obesity.”

A second study by IIASA population researchers, published last week in the journal Intelligence, found similar results using survey data from both England and Germany, suggesting that older people in England have also become smarter.

“On average, test scores of people aged 50+ today correspond to test scores from people 4–8 years younger and tested 6 years earlier,” says Valeria Bordone, a researcher at IIASA and the affiliated Wittgenstein Centre for Demography and Global Human Capital.

Both studies confirm the "Flynn effect," the rise in performance on standard IQ tests from one generation to the next. They also show that changes in the population's education levels explain part, but not all, of the effect.

“We show for the first time that although compositional changes of the older population in terms of education partly explain the Flynn effect, the increasing use of modern technology such as computers and mobile phones in the first decade of the 2000s also contributes considerably to its explanation,” says Bordone.

The researchers note that the findings apply to Germany and England. Future research may show whether the same trends hold in other countries.


Abstract of Population Aging at Cross-Roads: Diverging Secular Trends in Average Cognitive Functioning and Physical Health in the Older Population of Germany

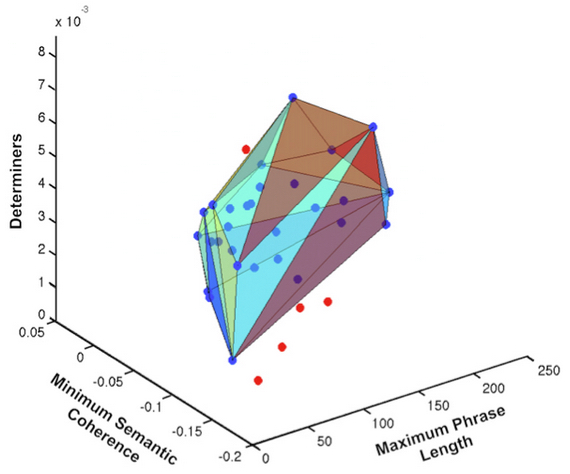

This paper uses individual-level data from the German Socio-Economic Panel to model trends in population health in terms of cognition, physical fitness, and mental health between 2006 and 2012. The focus is on the population aged 50–90. We use a repeated population-based cross-sectional design. As outcome measures, we use SF-12 measures of physical and mental health and the Symbol-Digit Test (SDT) that captures cognitive processing speed. In line with previous research we find a highly significant Flynn effect on cognition; i.e., SDT scores are higher among those who were tested more recently (at the same age). This result holds for men and women, all age groups, and across all levels of education. While we observe a secular improvement in terms of cognitive functioning, at the same time, average physical and mental health has declined. The decline in average physical health is shown to be stronger for men than for women and found to be strongest for low-educated, young-old men aged 50–64: the decline over the 6-year interval in average physical health is estimated to amount to about 0.37 SD, whereas average fluid cognition improved by about 0.29 SD. This pattern of results at the population-level (trends in average population health) stands in interesting contrast to the positive association of physical health and cognitive functioning at the individual-level. The findings underscore the multi-dimensionality of health and the aging process.

Abstract of Smarter every day: The deceleration of population ageing in terms of cognition

Cognitive decline correlates with age-associated health risks and has been shown to be a good predictor of future morbidity and mortality. Cognitive functioning can therefore be considered an important measure of differential aging across cohorts and population groups. Here, we investigate if and why individuals aged 50+ born into more recent cohorts perform better in terms of cognition than their counterparts of the same age born into earlier cohorts (Flynn effect). Based on two waves of English and German survey data, we show that cognitive test scores of participants aged 50+ in the later wave are higher compared with those of participants aged 50+ in the earlier wave. The mean scores in the later wave correspond to the mean scores in the earlier wave obtained by participants who were on average 4–8 years younger. The use of a repeat cross-sectional design overcomes potential bias from retest effects. We show for the first time that although compositional changes of the older population in terms of education partly explain the Flynn effect, the increasing use of modern technology (i.e., computers and mobile phones) in the first decade of the 2000s also contributes to its explanation.