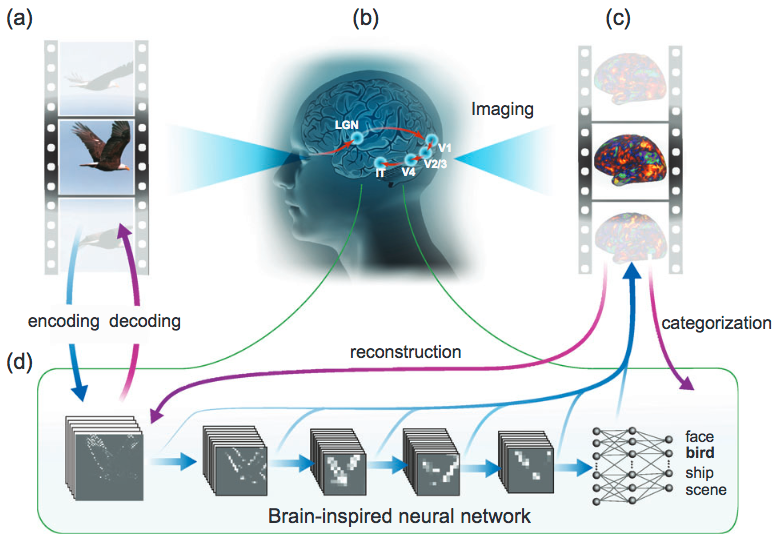

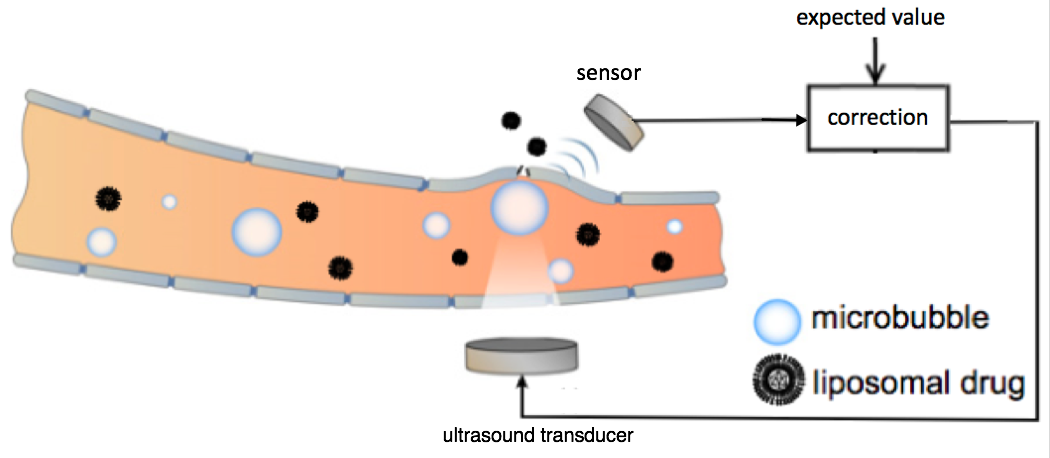

Schematic representation of the feedback-controlled focused ultrasound drug delivery system. Serving as the acoustic indicator of drug-delivery dosage, the microbubble emission signal was sensed and compared with the expected value. The difference was used as feedback to the ultrasound transducer for controlling the level of the ultrasound transmission. The ultrasound transducer and sensor were located outside the rat skull. The microbubbles were generated in the bloodstream at the target location in the brain. (credit: Tao Sun/Brigham and Women’s Hospital; adapted by KurzweilAI)

Researchers at Brigham and Women’s Hospital have developed a safer way to use focused ultrasound to temporarily open the blood-brain barrier* so that drugs for treating glioma brain tumors can be delivered, offering an alternative to invasive incision or radiation.

Focused ultrasound drug delivery to the brain uses “cavitation” — creating microbubbles — to temporarily open the blood-brain barrier. The problem with this method has been that if these bubbles destabilize and collapse, they could damage the critical vasculature in the brain.

To create a finer degree of control over the microbubbles and improve safety, the researchers placed a sensor outside of the rat brain to listen to ultrasound echoes bouncing off the microbubbles, as an indication of how stable the bubbles were.** That data was used to modify the ultrasound intensity, stabilizing the microbubbles to maintain safe ultrasound exposure.
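The feedback idea described above can be illustrated with a minimal sketch. This is not the researchers' controller, whose details are not given here; it assumes a plain proportional update, arbitrary units, and a toy linear bubble response. All names (`next_pressure`, `run_loop`, `toy_emission`) and the gain and limit values are hypothetical.

```python
def next_pressure(pressure, measured, target, gain=0.1, p_min=0.0, p_max=1.0):
    """Nudge the transmit level toward the target emission (proportional step).

    Assumption: a simple proportional rule; the actual control law may differ.
    """
    error = target - measured            # expected minus sensed emission
    updated = pressure + gain * error    # raise output if too quiet, lower if too loud
    return min(p_max, max(p_min, updated))  # clamp to a safe operating range


def run_loop(measure, target, pressure=0.2, steps=50, gain=0.1):
    """Repeatedly sense the emission and adjust the transmit level."""
    history = []
    for _ in range(steps):
        measured = measure(pressure)     # sensor reading at the current level
        pressure = next_pressure(pressure, measured, target, gain)
        history.append((pressure, measured))
    return history


def toy_emission(pressure):
    """Hypothetical stand-in for the bubbles: emission grows linearly with level."""
    return 2.0 * pressure


if __name__ == "__main__":
    history = run_loop(toy_emission, target=1.0)
    final_pressure, _ = history[-1]
    print(f"final transmit level: {final_pressure:.3f}")  # settles near 0.5
```

In this toy model the loop settles where the sensed emission matches the target. The clamp stands in for the safety limit: the transmit level can never leave the allowed range, however large the error.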

The team tested the approach in both healthy rats and in an animal model of glioma brain cancer. Further research will be needed to adapt the technique for humans, but the approach could offer improved safety and efficacy control for human clinical trials, which are now underway in Canada.

The research, published this week in the journal Proceedings of the National Academy of Sciences, was supported by the National Institutes of Health.

* The blood-brain barrier is an impassable obstacle for 98% of drugs, which it treats as pathogens and blocks from passing from patients’ bloodstream into the brain. With focused ultrasound, drugs can be administered using an intravenous injection of innocuous lipid-coated gas microbubbles.

** For the ultrasound transducer, the researchers combined two spherically curved transducers (operating at a resonant frequency of 274.3 kHz) to double the effective aperture size and provide significantly improved focusing in the axial direction.

Abstract of Closed-loop control of targeted ultrasound drug delivery across the blood–brain/tumor barriers in a rat glioma model

Cavitation-facilitated microbubble-mediated focused ultrasound therapy is a promising method of drug delivery across the blood–brain barrier (BBB) for treating many neurological disorders. Unlike ultrasound thermal therapies, during which magnetic resonance thermometry can serve as a reliable treatment control modality, real-time control of modulated BBB disruption with undetectable vascular damage remains a challenge. Here a closed-loop cavitation controlling paradigm that sustains stable cavitation while suppressing inertial cavitation behavior was designed and validated using a dual-transducer system operating at the clinically relevant ultrasound frequency of 274.3 kHz. Tests in the normal brain and in the F98 glioma model in vivo demonstrated that this controller enables reliable and damage-free delivery of a predetermined amount of the chemotherapeutic drug (liposomal doxorubicin) into the brain. The maximum concentration level of delivered doxorubicin exceeded levels previously shown (using uncontrolled sonication) to induce tumor regression and improve survival in rat glioma. These results confirmed the ability of the controller to modulate the drug delivery dosage within a therapeutically effective range, while improving safety control. It can be readily implemented clinically and potentially applied to other cavitation-enhanced ultrasound therapies.