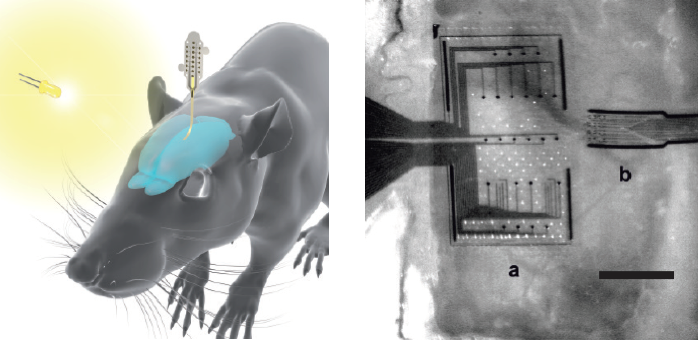

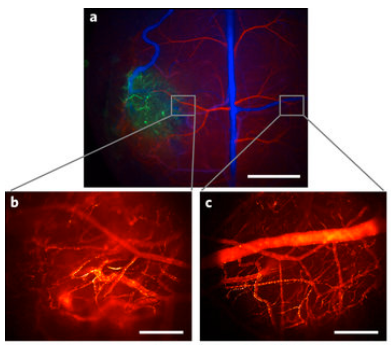

(a) High-resolution, high-speed quantum-dot shortwave infrared imaging at 60 frames per second was used to image the blood-vessel network of a mouse glioblastoma brain tumor (b) and compare it with the blood-vessel network in the opposite, healthy brain hemisphere (c). (credit: Oliver T. Bruns et al./ Nature Biomedical Engineering)

A team of researchers has created bright, glowing nanoparticles called quantum dots that can be injected into the body, where they emit light at shortwave infrared (SWIR) wavelengths that pass through the skin. This allows internal body structures, such as fine networks of blood vessels, to be imaged in vivo (in live animals) with high-speed video cameras for the first time.

The new findings are described in an open-access paper in the journal Nature Biomedical Engineering by Moungi Bawendi, MIT Lester Wolf Professor of Chemistry, and 22 other researchers.*

Near-infrared imaging of biological tissue, at wavelengths between 700 and 900 nanometers (billionths of a meter), is widely used because light in this range can penetrate tissue. Wavelengths of roughly 1,000 to 2,000 nanometers could provide even better results, however, because body tissues are more transparent in this longer-wavelength range.

The obstacle has been a lack of light-emitting materials that work at these longer wavelengths and are bright enough to be detected easily through the surrounding skin and muscle tissue.

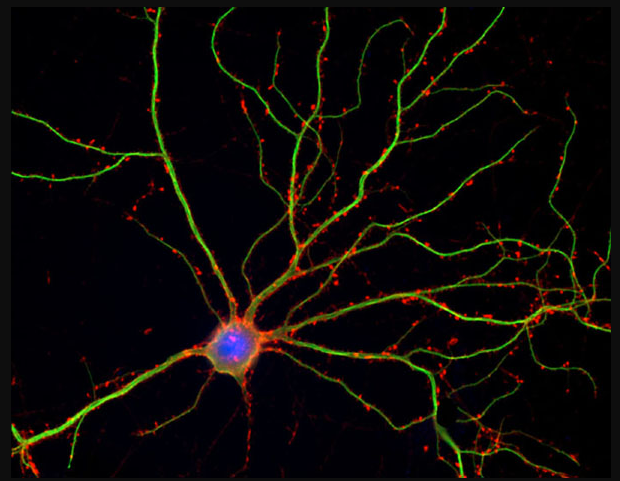

Live internal images of awake, moving mice

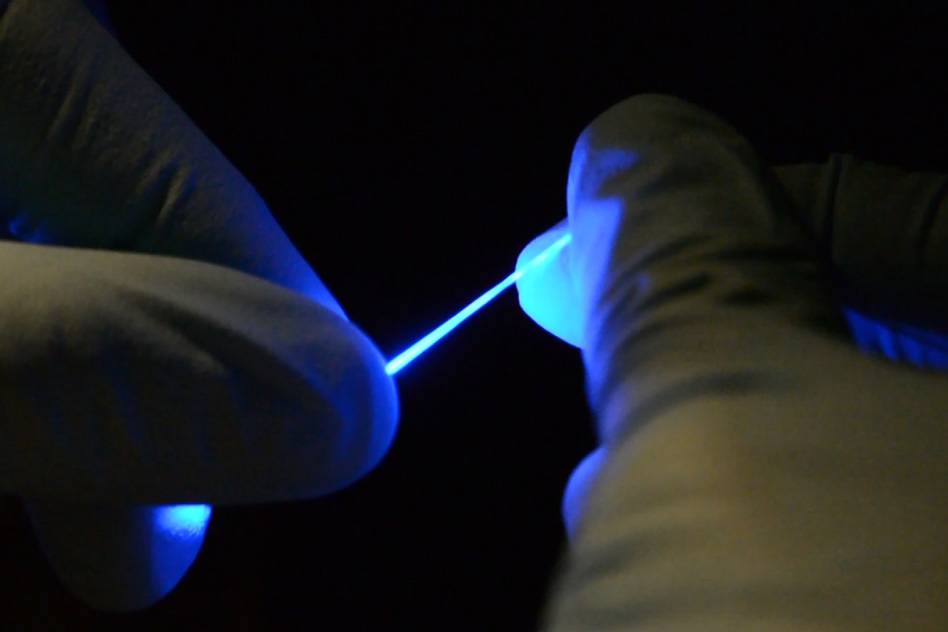

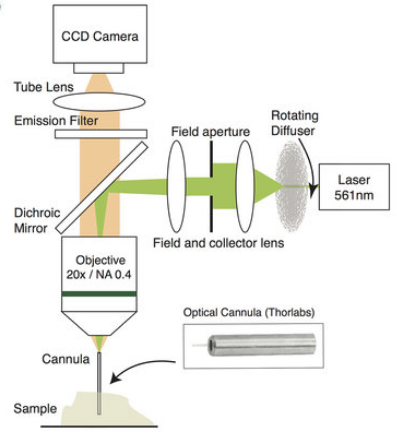

Contact-free video monitoring of heart and respiratory rates in mice injected with quantum dots coated with biocompatible lipid molecules. A newly developed camera is highly sensitive to shortwave infrared light. (credit: Oliver T. Bruns et al./ Nature Biomedical Engineering)

Now the team has succeeded in making particles that are “orders of magnitude better than previous materials, and that allow unprecedented detail in biological imaging,” says lead author Oliver T. Bruns, an MIT research scientist. The synthesis of these new particles was initially described in an open-access paper by researchers from the Bawendi group in Nature Communications last year.

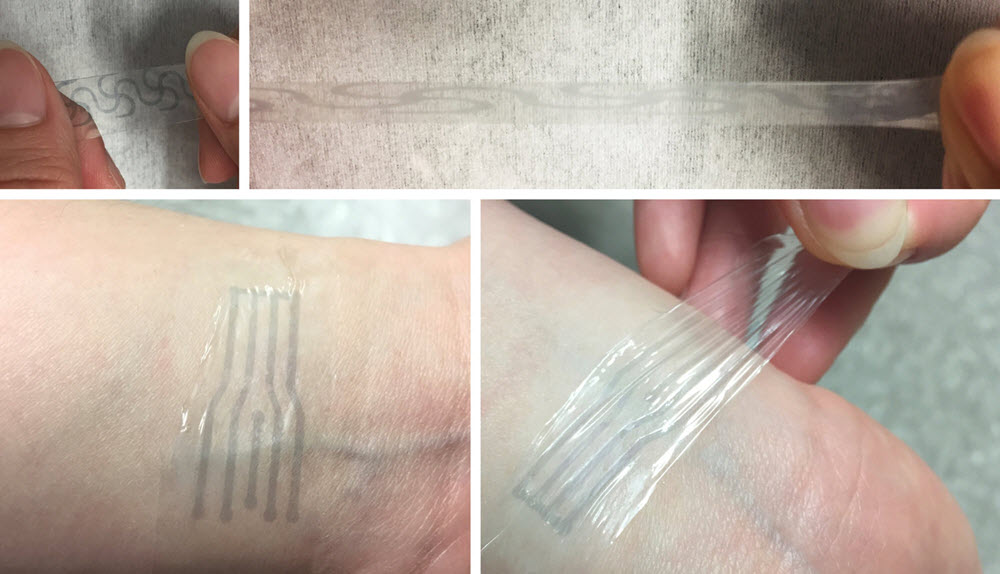

These new light-emitting nanoparticles are the first bright enough to allow imaging of internal organs in mice that are awake and moving, whereas previous methods required the animals to be anesthetized, Bruns says. Initial applications would be in preclinical animal research, because the compounds contain materials, such as indium arsenide, that are unlikely to be approved for use in humans. The researchers are also developing versions that would be safer for humans.

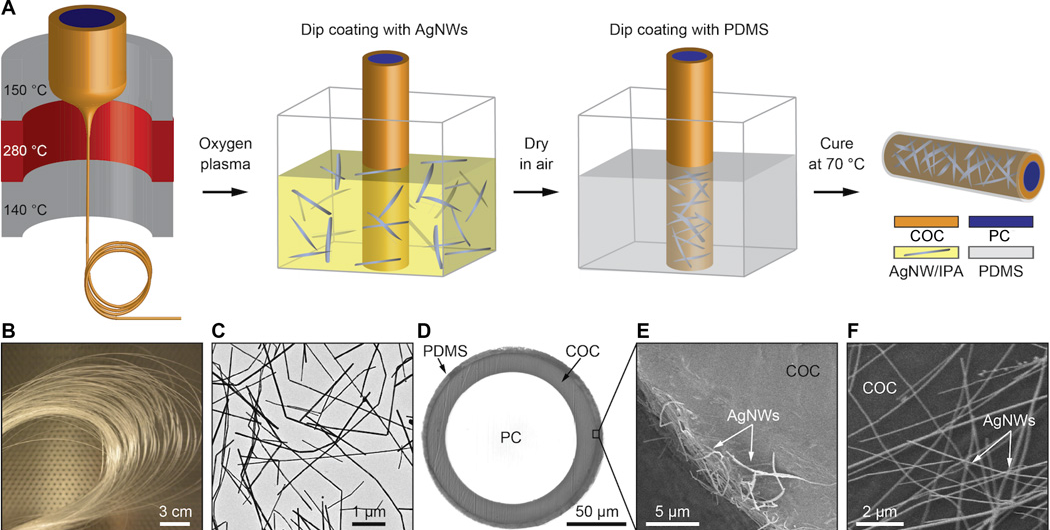

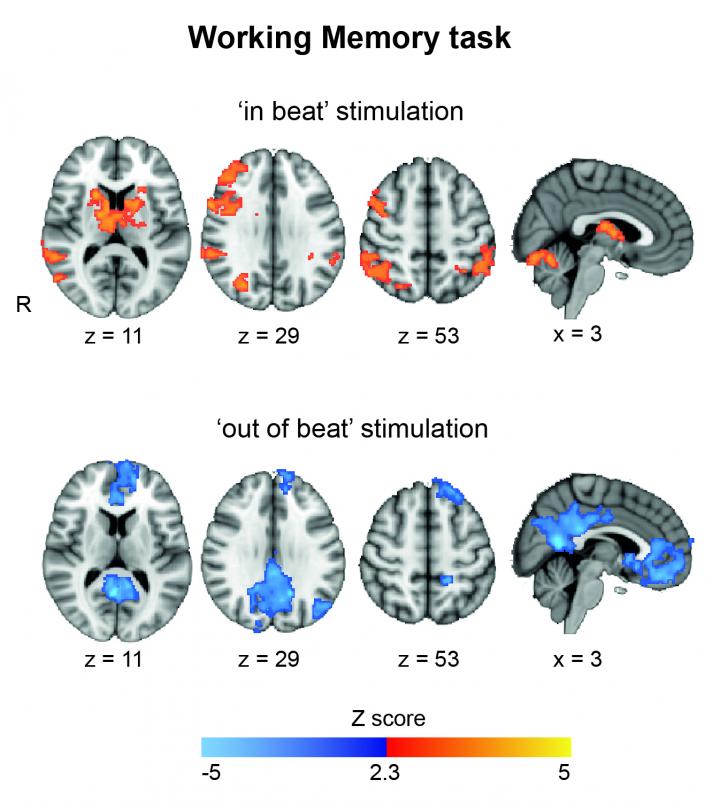

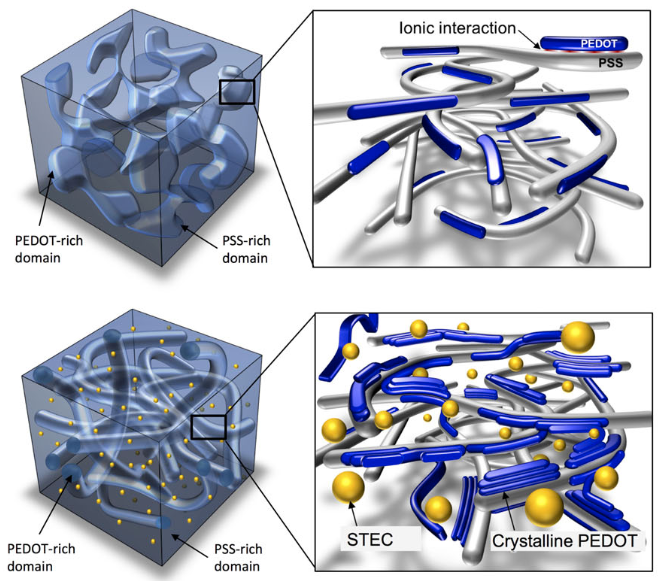

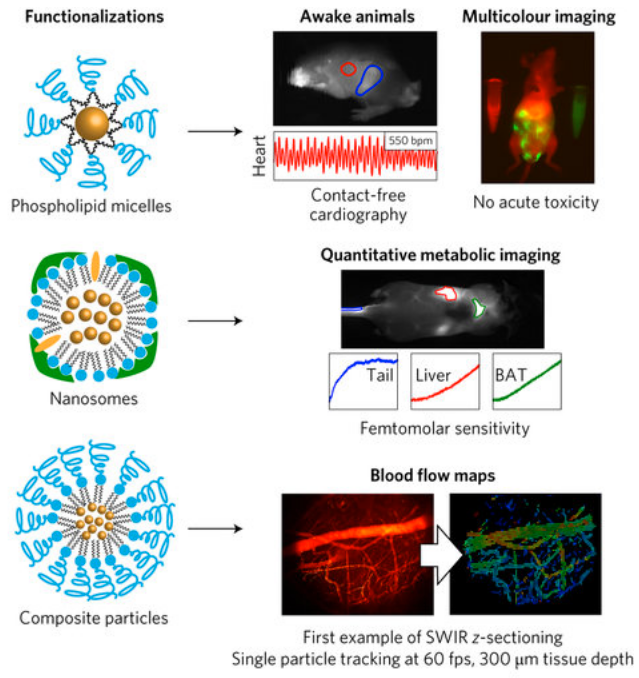

Quantum dots, made of semiconductor materials, emit light whose frequency can be precisely tuned by controlling the exact size and composition of the particles. These quantum dots were functionalized with three distinct surface coatings that tailor their physiological properties for specific shortwave infrared imaging applications. They are so bright that their emissions can be captured with very short exposure times. This makes it possible to produce not just single images but video that captures motion, such as the flow of blood, and thus to distinguish veins from arteries. (credit: Oliver T. Bruns et al./ Nature Biomedical Engineering)

Not only can the new method determine the direction of blood flow, Bruns says, it is detailed enough to track individual blood cells within that flow. “We can track the flow in each and every capillary, at super-high speed,” he says. “We can get a quantitative measure of flow, and we can do such flow measurements at very high resolution, over large areas.”

Such imaging could potentially be used, for example, to study how the blood flow pattern in a tumor changes as the tumor develops, which might lead to new ways of monitoring disease progression or responsiveness to a drug treatment. “This could give a good indication of how treatments are working that was not possible before,” he says.

* The team included members from Harvard Medical School, the Harvard T.H. Chan School of Public Health, Raytheon Vision Systems, and University Medical Center in Hamburg, Germany. The work was supported by the National Institutes of Health, the National Cancer Institute, the National Foundation for Cancer Research, the Warshaw Institute for Pancreatic Cancer Research, the Massachusetts General Hospital Executive Committee on Research, the Army Research Office through the Institute for Soldier Nanotechnologies at MIT, the U.S. Department of Defense, and the National Science Foundation.