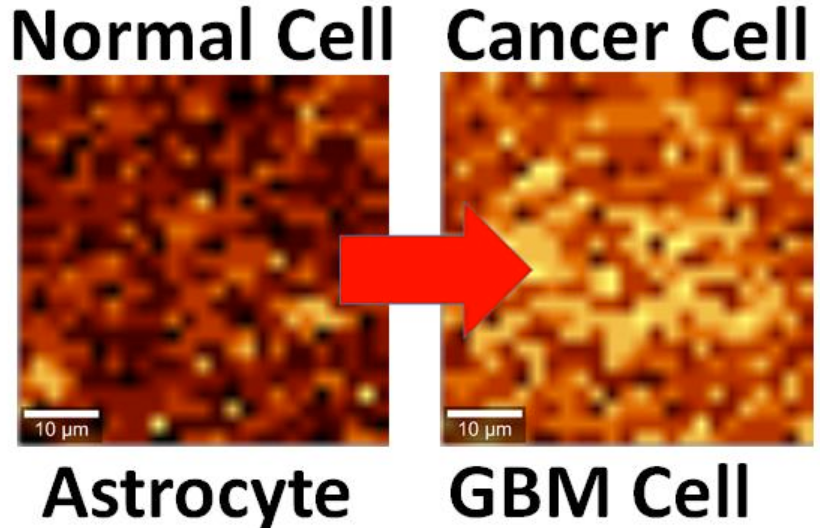

Brain cell culture. Left: a normal astrocyte brain cell; right: its cancerous Glioblastoma Multiforme (GBM) counterpart, imaged by Raman spectroscopy. (credit: B. Keisham et al./ACS Appl. Mater. Interfaces)

By interfacing brain cells with graphene, University of Illinois at Chicago researchers have differentiated a single hyperactive Glioblastoma Multiforme cancerous astrocyte cell from a normal cell in the lab — pointing the way to developing a simple, noninvasive tool for early cancer diagnosis.

In the study, reported in the journal ACS Applied Materials & Interfaces, the researchers looked at lab-cultured human brain astrocyte cells taken from a mouse model. They compared normal astrocytes with their cancerous counterpart, glioblastoma multiforme, a highly malignant brain tumor.

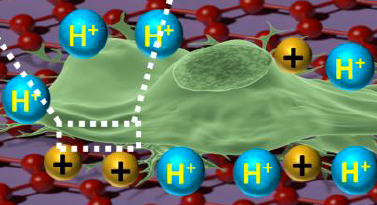

Illustration showing an astrocyte cell taken from a mouse brain draped over graphene (credit: B. Keisham et al./ACS Appl. Mater. Interfaces)

In a lab analysis, the cell is draped over graphene, explains Vikas Berry, associate professor and head of chemical engineering at UIC, who led the research along with Ankit Mehta, assistant professor of clinical neurosurgery in the UIC College of Medicine.

“The electric field around the cancer cell pushes away electrons in graphene’s electron cloud,” he said. This in turn changes the vibration energy of the carbon atoms in the graphene. The change in vibration energy caused by the cancerous condition can be pinpointed by Raman spectroscopy with a resolution of 300 nanometers, which makes it possible to determine the activity of a single cell. (Raman spectroscopy is a highly sensitive method commonly used in chemistry to identify molecules by how they scatter laser light.)
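At its core, the measurement described above means locating graphene's 2D Raman peak in each spectrum and comparing its position with a reference. The sketch below illustrates that idea under stated assumptions: the spectra are synthetic, noise-free Lorentzian peaks; the bare-graphene position (2679.6 cm⁻¹), the linewidth, and the 6 cm⁻¹ shift under the cell are hypothetical values chosen only for demonstration; and the peak center is estimated by three-point parabolic interpolation around the brightest sample. None of this is the researchers' actual analysis code.

```python
def lorentzian(x, center, hwhm, amplitude=1.0):
    """Lorentzian line shape, a common model for a single Raman peak."""
    return amplitude / (1.0 + ((x - center) / hwhm) ** 2)


def synthetic_spectrum(center, hwhm=15.0, start=2600.0, stop=2760.0, step=1.0):
    """Sample a hypothetical noise-free 2D peak on a uniform Raman-shift grid (cm^-1)."""
    n = int(round((stop - start) / step)) + 1
    xs = [start + i * step for i in range(n)]
    ys = [lorentzian(x, center, hwhm) for x in xs]
    return xs, ys


def peak_position(xs, ys):
    """Estimate the peak center: take the brightest sample and refine it with a
    parabola through that sample and its two neighbours (uniform grid assumed)."""
    i = max(range(len(ys)), key=ys.__getitem__)
    if i == 0 or i == len(ys) - 1:
        return xs[i]  # peak sits at the edge of the window; no refinement possible
    y_left, y_mid, y_right = ys[i - 1], ys[i], ys[i + 1]
    denom = y_left - 2.0 * y_mid + y_right
    if denom == 0.0:
        return xs[i]  # flat top; keep the sampled maximum
    offset = 0.5 * (y_left - y_right) / denom  # vertex offset in grid steps
    return xs[i] + offset * (xs[i + 1] - xs[i])


# Hypothetical positions for illustration only (cm^-1).
BARE_GRAPHENE_2D = 2679.6   # assumed 2D position with no cell on top
UNDER_CELL_2D = 2685.6      # assumed 2D position under a cell (+6 cm^-1)

ref_x, ref_y = synthetic_spectrum(BARE_GRAPHENE_2D)
cell_x, cell_y = synthetic_spectrum(UNDER_CELL_2D)

shift = peak_position(cell_x, cell_y) - peak_position(ref_x, ref_y)
print(f"2D shift under cell: {shift:.2f} cm^-1")  # prints 6.00
```

In a Raman map, the same estimate would be repeated for every pixel (the article cites a 300 nm resolution), and the shifts under a cell's footprint would be compared with those of bare graphene. A real analysis would more likely fit the full line shape by least squares and handle noise and baseline; the parabolic refinement here simply keeps the sketch dependency-free.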

“Graphene is the thinnest known material and is very sensitive to whatever happens on its surface,” Berry said. The nanomaterial is composed of a single layer of carbon atoms linked in a hexagonal chicken-wire pattern, and all the atoms share a cloud of electrons moving freely about the surface.

Patient biopsies planned

The technique is now being studied in a mouse model of cancer, with results that are “very promising,” Berry said. Experiments with patient biopsies would be further down the road. “Once a patient has brain tumor surgery, we could use this technique to see if the tumor relapses,” Berry said. “For this, we would need a cell sample we could interface with graphene and look to see if cancer cells are still present.”

The same technique may also work to differentiate between other types of cells or the activity of cells. “We may be able to use it with bacteria to quickly see if the strain is Gram-positive or Gram-negative,” Berry said. “We may be able to use it to detect sickle cells.”

Earlier this year, Berry and other coworkers introduced nanoscale ripples in graphene so that it conducts differently in perpendicular directions, a property useful for electronics. They wrinkled the graphene by draping it over a string of rod-shaped bacteria, then vacuum-shrinking the germs. “We took the earlier work and sort of flipped it over,” Berry said. “Instead of laying graphene on cells, we laid cells on graphene and studied graphene’s atomic vibrations.”

Funding was provided by UIC.

Abstract of Cancer Cell Hyperactivity and Membrane Dipolarity Monitoring via Raman Mapping of Interfaced Graphene: Toward Non-Invasive Cancer Diagnostics

Ultrasensitive detection, mapping, and monitoring of the activity of cancer cells is critical for treatment evaluation and patient care. Here, we demonstrate that a cancer cell’s glycolysis-induced hyperactivity and enhanced electronegative membrane (from sialic acid) can sensitively modify the second-order overtone of in-plane phonon vibration energies (2D) of interfaced graphene via a hole-doping mechanism. By leveraging ultrathin graphene’s high quantum capacitance and responsive phononics, we sensitively differentiated the activity of interfaced Glioblastoma Multiforme (GBM) cells, a malignant brain tumor, from that of human astrocytes at a single-cell resolution. GBM cell’s high surface electronegativity (potential ∼310 mV) and hyperacidic-release induces hole-doping in graphene with a 3-fold higher 2D vibration energy shift of approximately 6 ± 0.5 cm⁻¹ than astrocytes. From molecular dipole-induced quantum coupling, we estimate that the sialic acid density on the cell membrane increases from one molecule per ∼17 nm² to one molecule per ∼7 nm². Furthermore, graphene phononic response also identified enhanced acidity of cancer cell’s growth medium. Graphene’s phonon-sensitive platform to determine interfaced cell’s activity/chemistry will potentially open avenues for studying activity of other cancer cell types, including metastatic tumors, and characterizing different grades of their malignancy.
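A little arithmetic puts the abstract's figures into more familiar units. The sketch below uses only the numbers quoted above plus the standard conversion E = hcν̃ between wavenumber and energy. It assumes that "3-fold higher" means the GBM shift is three times the astrocyte shift, and that the ∼17 nm² figure belongs to astrocytes and ∼7 nm² to GBM cells; the variable names are illustrative.

```python
# Arithmetic on the figures quoted in the abstract; nothing here is measured data.

H_C_EV_CM = 1.239841984e-4  # Planck constant times speed of light, in eV*cm


def wavenumber_to_mev(nu_cm):
    """Energy E = h*c*nu of a vibrational shift given in cm^-1, returned in meV."""
    return nu_cm * H_C_EV_CM * 1e3


def per_um2(nm2_per_molecule):
    """Molecules per square micrometre from the area per molecule in nm^2 (1 um^2 = 1e6 nm^2)."""
    return 1e6 / nm2_per_molecule


gbm_shift_cm = 6.0                  # quoted 2D shift for GBM cells (cm^-1)
astro_shift_cm = gbm_shift_cm / 3   # assumes "3-fold higher" means GBM = 3 x astrocyte

astro_density = per_um2(17.0)       # assumed astrocyte value: ~1 sialic acid per 17 nm^2
gbm_density = per_um2(7.0)          # assumed GBM value: ~1 sialic acid per 7 nm^2

print(f"GBM 2D shift: {gbm_shift_cm:.1f} cm^-1 = {wavenumber_to_mev(gbm_shift_cm):.3f} meV")
print(f"Astrocyte 2D shift: ~{astro_shift_cm:.1f} cm^-1 = {wavenumber_to_mev(astro_shift_cm):.3f} meV")
print(f"Sialic acid: {astro_density:,.0f} -> {gbm_density:,.0f} per um^2 "
      f"({gbm_density / astro_density:.1f}x denser)")
```

Run as written, this prints a GBM shift of about 0.744 meV, an astrocyte shift of about 2.0 cm⁻¹ (0.248 meV), and a sialic acid density rising from roughly 58,824 to 142,857 molecules per µm², about 2.4 times denser.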