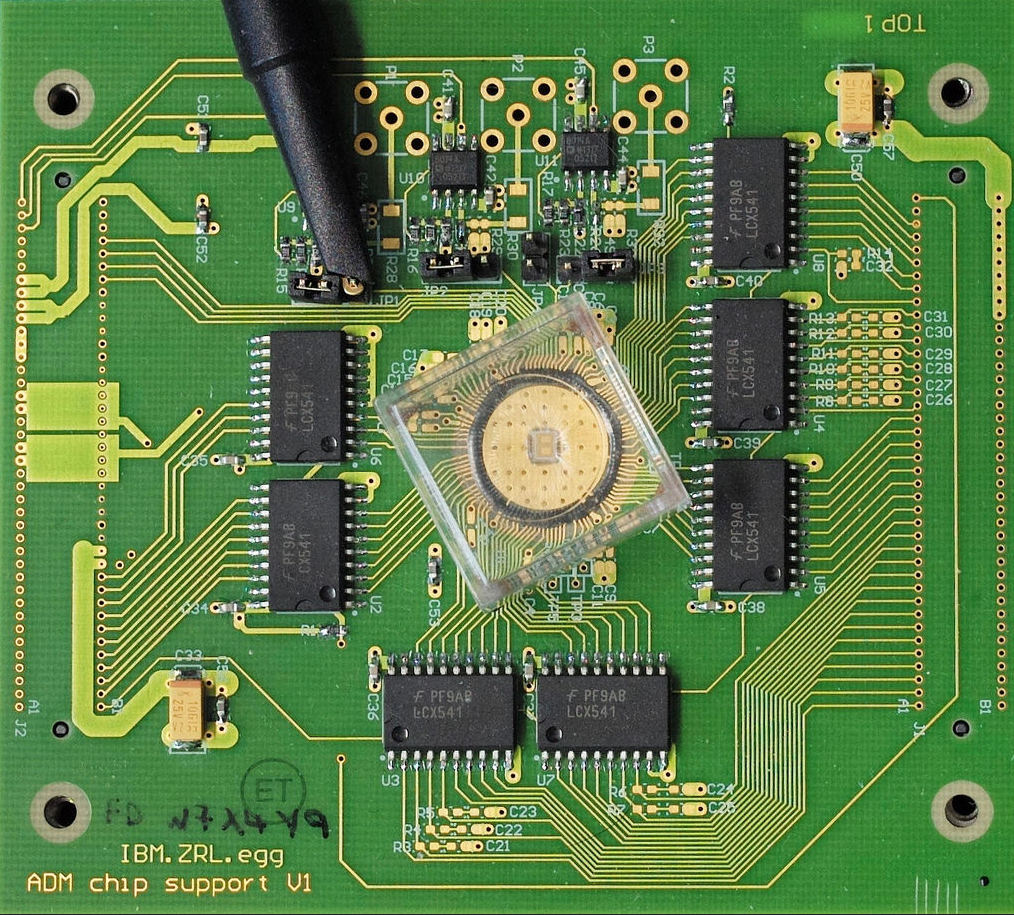

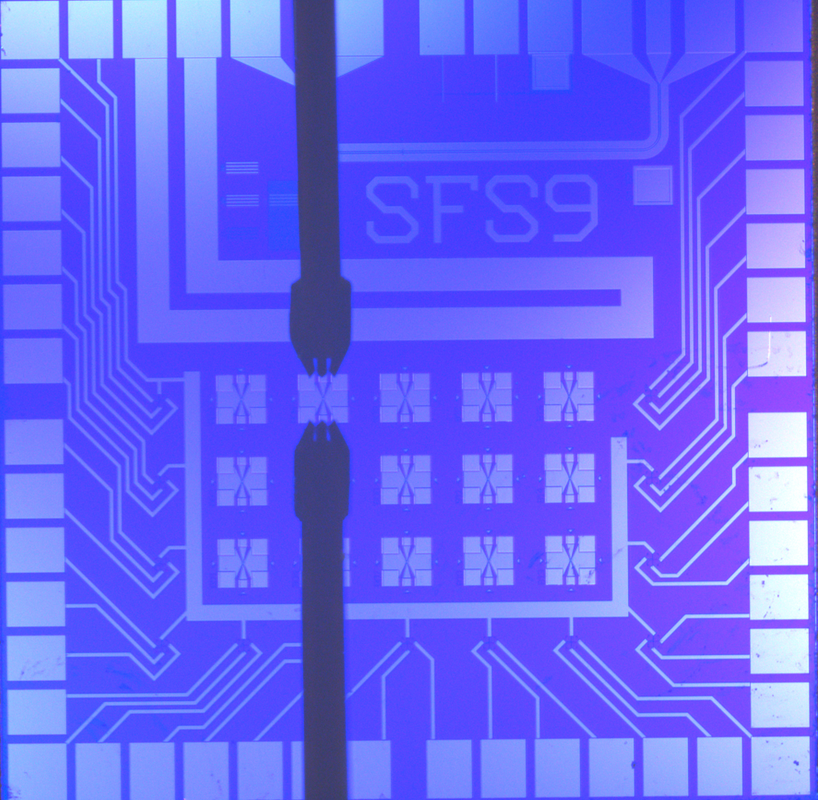

NIST’s artificial synapse, designed for neuromorphic computing, mimics the operation of a switch between two neurons. One artificial synapse is located at the center of each X. This chip is 1 square centimeter in size. (The thick black vertical lines are electrical probes used for testing.) (credit: NIST)

A superconducting “synapse” that “learns” like a biological system, operating like the human brain, has been built by researchers at the National Institute of Standards and Technology (NIST).

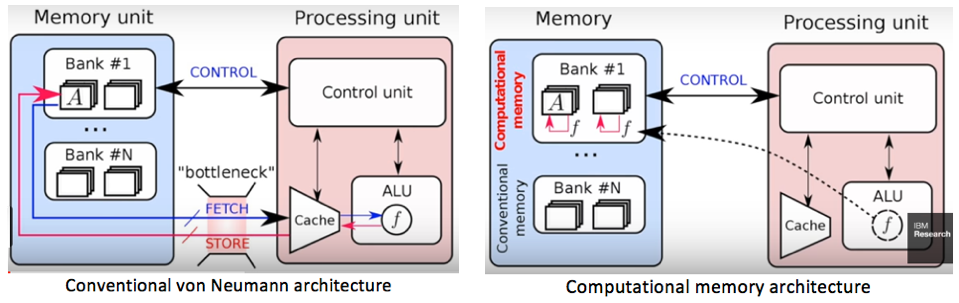

The NIST switch, described in an open-access paper in Science Advances, provides a missing link for neuromorphic (brain-like) computers, according to the researchers. Such “non-von Neumann architecture” future computers could significantly speed up analysis and decision-making for applications such as self-driving cars and cancer diagnosis.

The research is supported by the Intelligence Advanced Research Projects Activity (IARPA) Cryogenic Computing Complexity Program, which was launched in 2014 with the goal of paving the way to “a new generation of superconducting supercomputer development beyond the exascale.”*

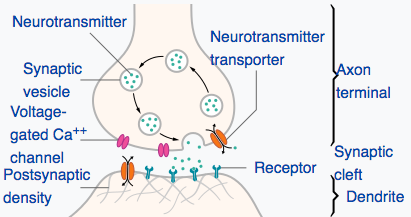

A synapse is a connection or switch between two neurons, controlling transmission of signals. (credit: NIST)

NIST’s artificial synapse is a metallic cylinder 10 micrometers in diameter — about 10 times larger than a biological synapse. It simulates a real synapse by processing incoming electrical spikes (pulsed current from a neuron) and customizing spiking output signals. The more firing between cells (or processors), the stronger the connection. That process enables both biological and artificial synapses to maintain old circuits and create new ones.

Dramatically faster and lower-energy than human synapses

But the NIST synapse has two unique features that the researchers say are superior to human synapses and to other artificial synapses:

- With Josephson plasma frequencies above 100 GHz, it can fire much faster than the human brain: about 1 billion times per second, compared to a brain cell’s rate of about 50 times per second.

- It uses only about one ten-thousandth as much energy as a human synapse. The spiking energy is less than 1 attojoule**, roughly equivalent to the minuscule chemical energy bonding two atoms in a molecule, compared to the roughly 10 femtojoules (10,000 attojoules) per synaptic event in the human brain. Current neuromorphic platforms are orders of magnitude less efficient than the human brain. “We don’t know of any other artificial synapse that uses less energy,” NIST physicist Mike Schneider said.
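The two comparisons above reduce to simple ratios. A minimal Python sketch, using only the approximate figures quoted in this article (the variable names are illustrative, and the NIST energy is taken at its stated upper bound of 1 attojoule):

```python
# Approximate figures quoted in the article.
ATTOJOULE = 1e-18   # joules
FEMTOJOULE = 1e-15  # joules

nist_spike_energy_j = 1 * ATTOJOULE      # upper bound: "less than 1 attojoule"
brain_event_energy_j = 10 * FEMTOJOULE   # "roughly 10 femtojoules"

nist_rate_hz = 1e9    # "1 billion times per second"
neuron_rate_hz = 50   # "about 50 times per second"

# How many times more energy a biological synaptic event uses.
energy_ratio = brain_event_energy_j / nist_spike_energy_j
# How many times faster the artificial synapse can fire.
rate_ratio = nist_rate_hz / neuron_rate_hz

print(f"Energy per event, brain vs. NIST synapse: about {energy_ratio:,.0f}x")
print(f"Firing rate, NIST synapse vs. neuron: about {rate_ratio:,.0f}x")
```

Because the spiking energy is quoted only as an upper bound, the energy ratio computed here is a lower bound on the advantage.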

Superconducting devices mimicking brain cells and transmission lines have been developed, but until now, efficient synapses — a crucial piece — have been missing. The new Josephson junction-based artificial synapse would be used in neuromorphic computers made of superconducting components (which can transmit electricity without resistance), so they would be more efficient than designs based on semiconductors or software. Data would be transmitted, processed, and stored in units of magnetic flux.

The brain is especially powerful for tasks like image recognition because it processes data both in sequence and simultaneously and it stores memories in synapses all over the system. A conventional computer processes data only in sequence and stores memory in a separate unit.

The new NIST artificial synapses combine small size, superfast spiking signals, and low energy needs, and could be stacked into dense 3D circuits for creating large systems. They could provide a unique route to a far more complex and energy-efficient neuromorphic system than has been demonstrated with other technologies, according to the researchers.

Nature News does raise some concerns about the research, quoting neuromorphic-technology experts: “Millions of synapses would be necessary before a system based on the technology could be used for complex computing; it remains to be seen whether it will be possible to scale it to this level. … The synapses can only operate at temperatures close to absolute zero, and need to be cooled with liquid helium. … [T]his might make the chips impractical for use in small devices, although a large data centre might be able to maintain them. … We don’t yet understand enough about the key properties of the [biological] synapse to know how to use them effectively.”

Inside a superconducting synapse

The NIST synapse is a customized Josephson junction***, long used in NIST voltage standards. These junctions are a sandwich of superconducting materials with an insulator as a filling. When an electrical current through the junction exceeds a level called the critical current, voltage spikes are produced.

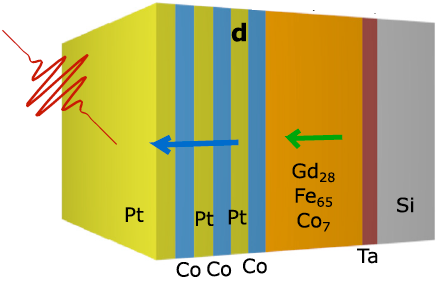

Illustration showing the basic operation of NIST’s artificial synapse, based on a Josephson junction. Very weak electrical current pulses are used to control the number of nanoclusters (green) pointing in the same direction. Shown here: a “magnetically disordered state” (left) vs. “magnetically ordered state” (right). (credit: NIST)

Each artificial synapse uses standard niobium electrodes but has a unique filling made of nanoscale clusters (“nanoclusters”) of manganese in a silicon matrix. The nanoclusters — about 20,000 per square micrometer — act like tiny bar magnets with “spins” that can be oriented either randomly or in a coordinated manner. The number of nanoclusters pointing in the same direction can be controlled, which affects the superconducting properties of the junction.
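For a sense of scale, the quoted density can be combined with the 10-micrometer diameter given earlier for the synapse. The Python sketch below is a rough estimate only: it assumes the nanocluster layer spans the full circular cross-section of the cylinder and that the density is uniform, neither of which is stated in the article.

```python
import math

# Figures quoted in the article.
diameter_um = 10.0            # synapse diameter, micrometers
density_per_um2 = 20_000      # nanoclusters per square micrometer

# Assumption: the barrier covers the whole circular cross-section.
area_um2 = math.pi * (diameter_um / 2) ** 2
nanocluster_count = density_per_um2 * area_um2

print(f"Cross-section: about {area_um2:.1f} square micrometers")
print(f"Estimated nanoclusters per synapse: about {nanocluster_count:,.0f}")
```

Under those assumptions, each synapse would hold on the order of a million nanoclusters.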

Diagram of circuit used in the simulation. The blue and red areas represent pre- and post-synapse neurons, respectively. The X symbol represents the Josephson junction. (credit: Michael L. Schneider et al./Science Advances)

The synapse rests in a superconducting state, except when it’s activated by incoming current and starts producing voltage spikes. Researchers apply current pulses in a magnetic field to boost the magnetic ordering — that is, the number of nanoclusters pointing in the same direction.

This magnetic effect progressively reduces the critical current level, making it easier to create a normal conductor and produce voltage spikes. The critical current is the lowest when all the nanoclusters are aligned. The process is also reversible: Pulses are applied without a magnetic field to reduce the magnetic ordering and raise the critical current. This design, in which different inputs alter the spin alignment and resulting output signals, is similar to how the brain operates.
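The training behavior described above can be caricatured in a few lines of Python. This is a toy sketch, not the researchers' model: it assumes the critical current falls linearly as magnetic order rises from 0 (random spins) to 1 (fully aligned), and the class name, current values, and step size are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToySynapse:
    """Toy model of a magnetic Josephson junction synapse (illustrative only)."""

    order: float = 0.0        # magnetic order: 0 = random spins, 1 = fully aligned
    ic_max_ua: float = 100.0  # hypothetical critical current when disordered (microamps)
    ic_min_ua: float = 20.0   # hypothetical critical current when fully ordered (microamps)

    def critical_current_ua(self) -> float:
        # Assumed linear relation: more magnetic order means a lower critical current.
        return self.ic_max_ua - (self.ic_max_ua - self.ic_min_ua) * self.order

    def train(self, field_on: bool, step: float = 0.25) -> None:
        # A pulse with a magnetic field raises the order; a pulse without one lowers it.
        delta = step if field_on else -step
        self.order = min(1.0, max(0.0, self.order + delta))

    def spikes(self, input_current_ua: float) -> bool:
        # Voltage spikes appear only when the input exceeds the critical current.
        return input_current_ua > self.critical_current_ua()


synapse = ToySynapse()
print(synapse.spikes(60.0))        # disordered: 60 uA is below the critical current
for _ in range(3):
    synapse.train(field_on=True)   # three training pulses in a magnetic field
print(synapse.critical_current_ua(), synapse.spikes(60.0))
```

In this picture the critical current plays the role of the synaptic weight: the same input that produced no output before training produces spikes afterward, and pulses without a field undo the change.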

Synapse behavior can also be tuned by changing how the device is made and its operating temperature. By making the nanoclusters smaller, researchers can reduce the pulse energy needed to raise or lower the magnetic order of the device. Raising the operating temperature slightly from minus 271.15 degrees C (minus 456.07 degrees F) to minus 269.15 degrees C (minus 452.47 degrees F), for example, results in more and higher voltage spikes.
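On the kelvin scale, those two operating points are 2 K and 4 K. A quick Python check of the conversions quoted above (the function names are illustrative):

```python
def celsius_to_kelvin(celsius: float) -> float:
    """Convert degrees Celsius to kelvins."""
    return celsius + 273.15


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# The two operating temperatures quoted in the article.
for celsius in (-271.15, -269.15):
    kelvin = celsius_to_kelvin(celsius)
    fahrenheit = celsius_to_fahrenheit(celsius)
    print(f"{celsius} C = {kelvin:.2f} K = {fahrenheit:.2f} F")
```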

* Future exascale supercomputers would run at 10^18 flops, or 1 exaflops (“flops” = floating point operations per second), or more. The current fastest supercomputer, the Sunway TaihuLight, operates at about 0.1 exaflops; zettascale computers, the next step beyond exascale, would run 10,000 times faster than that.

** An attojoule is 10^-18 joule, a unit of energy, and is one-thousandth of a femtojoule.

*** The Josephson effect is the phenomenon of supercurrent — i.e., a current that flows indefinitely long without any voltage applied — across a device known as a Josephson junction, which consists of two superconductors coupled by a weak link. — Wikipedia
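The scale arithmetic in the first footnote can be checked directly. A short Python sketch using the standard SI prefixes (exa = 10^18, zetta = 10^21) and the approximate Sunway TaihuLight figure of 0.1 exaflops quoted there; integer arithmetic is used to keep the comparison exact:

```python
EXA = 10**18     # SI prefix exa
ZETTA = 10**21   # SI prefix zetta

# Approximate figure from the footnote: 0.1 exaflops.
taihulight_flops = EXA // 10

# "10,000 times faster than that"
zettascale_flops = 10_000 * taihulight_flops

print(f"TaihuLight: {taihulight_flops / EXA} exaflops")
print(f"10,000x TaihuLight: {zettascale_flops // ZETTA} zettaflops")
print(f"Zettascale vs. exascale: {ZETTA // EXA}x")
```

So a machine 10,000 times faster than a 0.1-exaflops system sits at the zettascale threshold, which is 1,000 times the exascale threshold.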

Abstract of Ultralow power artificial synapses using nanotextured magnetic Josephson junctions

Neuromorphic computing promises to markedly improve the efficiency of certain computational tasks, such as perception and decision-making. Although software and specialized hardware implementations of neural networks have made tremendous accomplishments, both implementations are still many orders of magnitude less energy efficient than the human brain. We demonstrate a new form of artificial synapse based on dynamically reconfigurable superconducting Josephson junctions with magnetic nanoclusters in the barrier. The spiking energy per pulse varies with the magnetic configuration, but in our demonstration devices, the spiking energy is always less than 1 aJ. This compares very favorably with the roughly 10 fJ per synaptic event in the human brain. Each artificial synapse is composed of a Si barrier containing Mn nanoclusters with superconducting Nb electrodes. The critical current of each synapse junction, which is analogous to the synaptic weight, can be tuned using input voltage spikes that change the spin alignment of Mn nanoclusters. We demonstrate synaptic weight training with electrical pulses as small as 3 aJ. Further, the Josephson plasma frequencies of the devices, which determine the dynamical time scales, all exceed 100 GHz. These new artificial synapses provide a significant step toward a neuromorphic platform that is faster, more energy-efficient, and thus can attain far greater complexity than has been demonstrated with other technologies.