The four-day Moogfest festival in Durham, North Carolina, next weekend (May 18–21) explores the future of technology, art, and music. Here are some of the sessions that may be especially interesting to KurzweilAI readers. Full #Moogfest2017 Program Lineup.

Culture and Technology

(credit: Google)

The Magenta team from Google Brain will bring its work to life through an interactive demo plus workshops on the creation of art and music through artificial intelligence.

Magenta is a Google Brain project to ask and answer the questions, “Can we use machine learning to create compelling art and music? If so, how? If not, why not?” It is first a research project to advance the state of the art and creativity in music, video, image, and text generation; second, Magenta is building a community of artists, coders, and machine learning researchers.

The interactive demo will walk through an improvisation alongside the machine learning models, much like the AI Jam Session. The workshop will cover how to use the open source library to build and train models and interact with them via MIDI.

Technical reference: Magenta: Music and Art Generation with Machine Intelligence

TEDx Talks | Music and Art Generation using Machine Learning | Curtis Hawthorne | TEDxMountainViewHighSchool

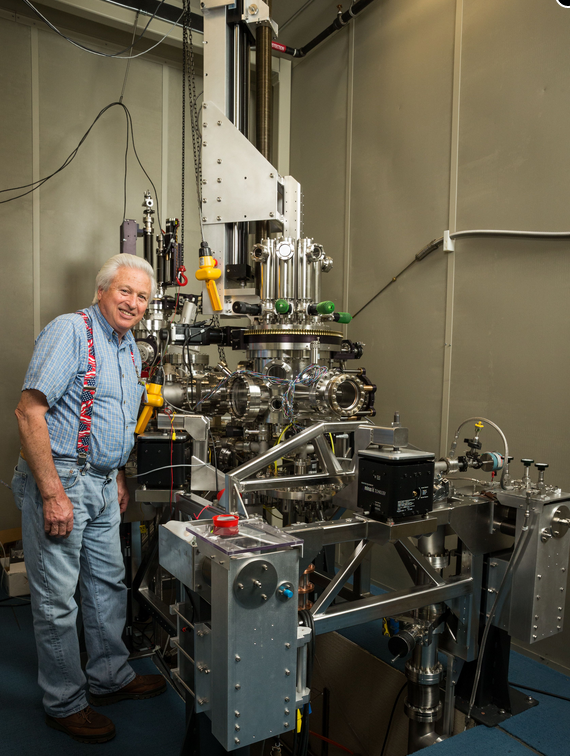

Miguel Nicolelis (credit: Duke University)

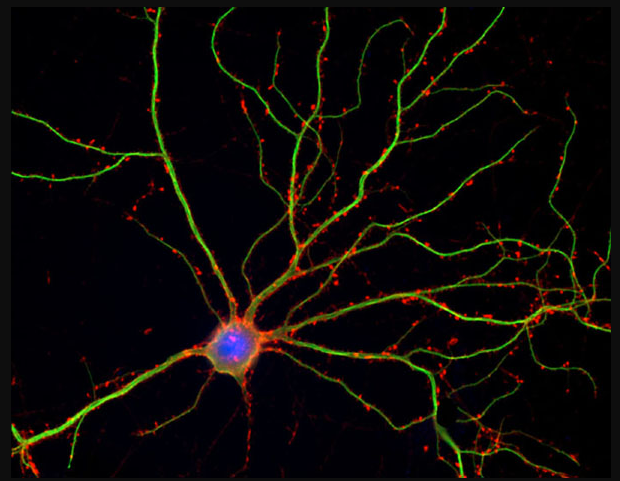

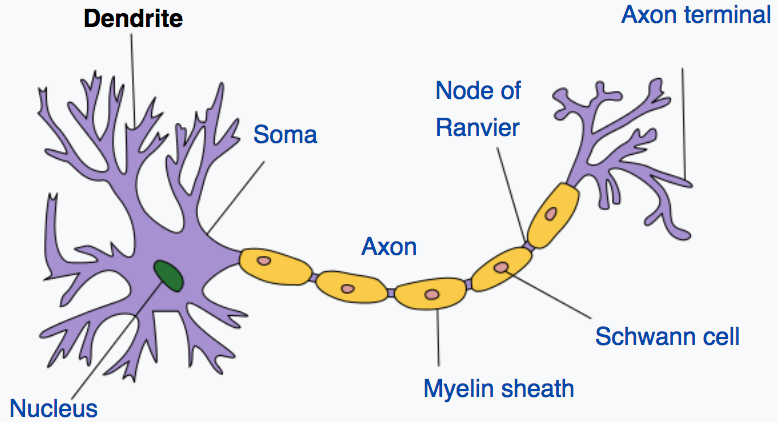

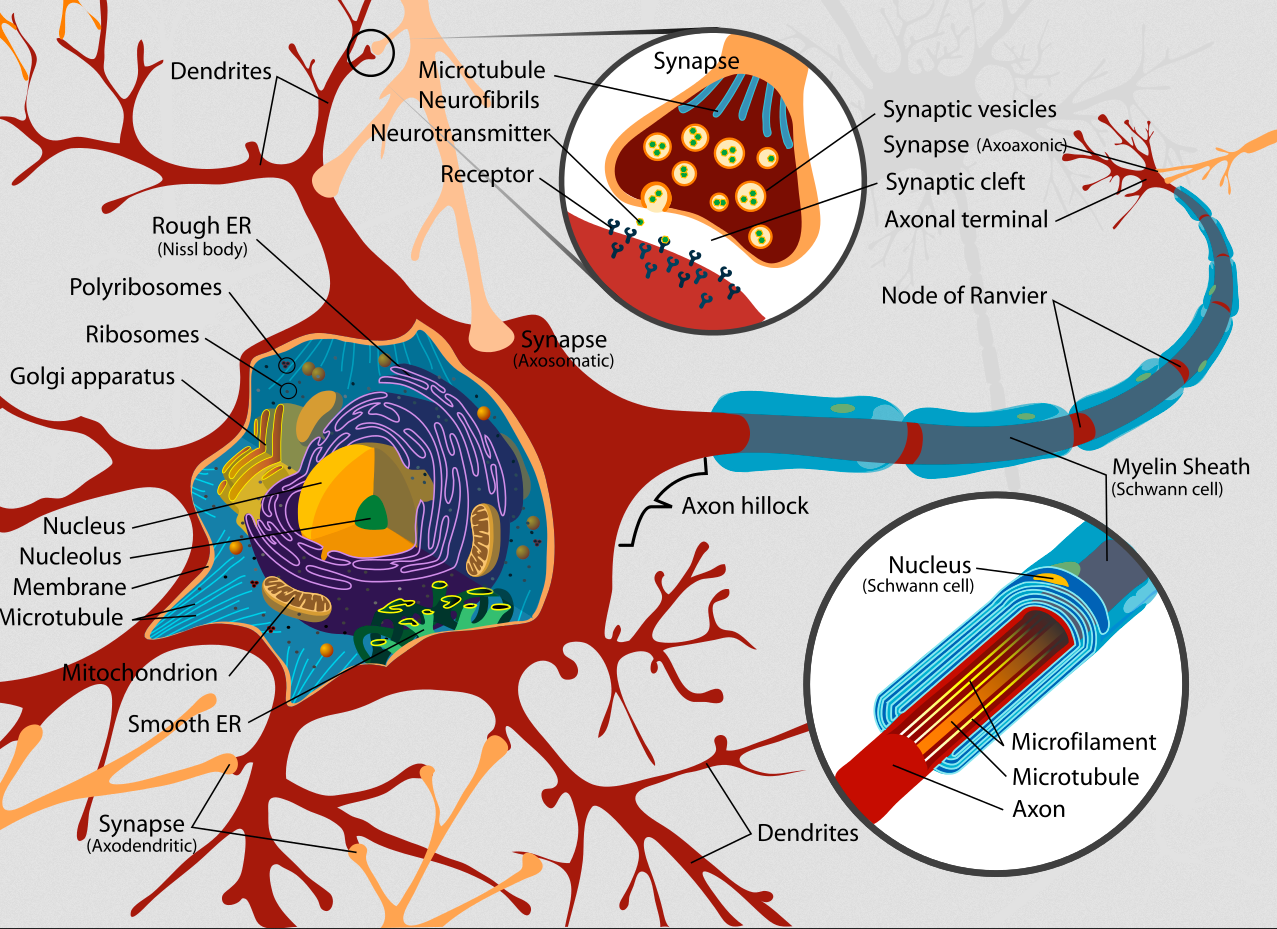

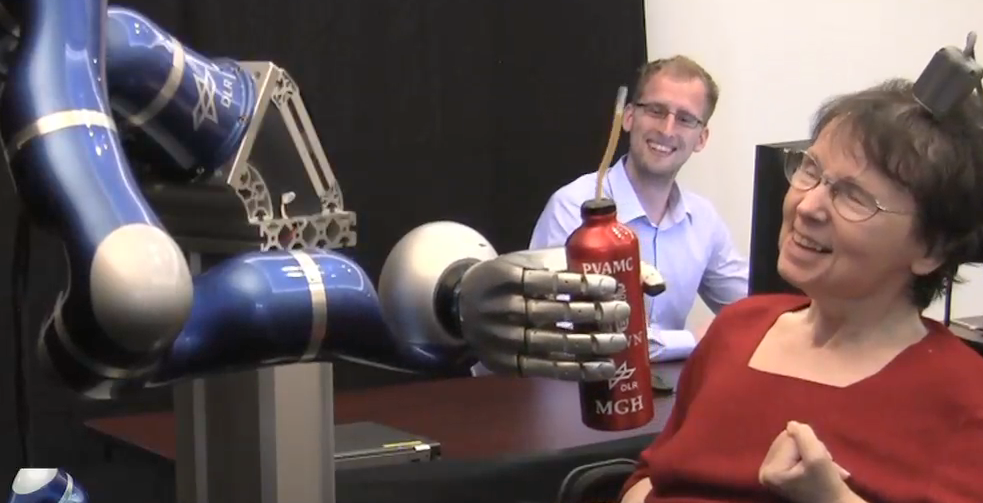

Miguel A. L. Nicolelis, MD, PhD will discuss state-of-the-art research on brain-machine interfaces, which make it possible for the brains of primates to interact directly and in a bi-directional way with mechanical, computational and virtual devices. He will review a series of recent experiments using real-time computational models to investigate how ensembles of neurons encode motor information. These experiments have revealed that brain-machine interfaces can be used not only to study fundamental aspects of neural ensemble physiology, but also as an experimental paradigm for testing the design of novel neuroprosthetic devices.

He will also explore research that raises the hypothesis that the properties of a robot arm, or other neurally controlled tools, can be assimilated by brain representations as if they were extensions of the subject’s own body.

Theme: Transhumanism

Dervishes at Royal Opera House with Matthew Herbert (credit: ?)

Andy Cavatorta (MIT Media Lab) will present a conversation and workshop on a range of topics including the four-century history of music and performance at the forefront of technology. Known as the inventor of Bjork’s Gravity Harp, he has collaborated on numerous projects to create instruments using new technologies that coerce expressive music out of fire, glass, gravity, tiny vortices, underwater acoustics, and more. His instruments explore technologically mediated emotion and opportunities to express the previously inexpressible.

Theme: Instrument Design

Berklee College of Music

Michael Bierylo (credit: Moogfest)

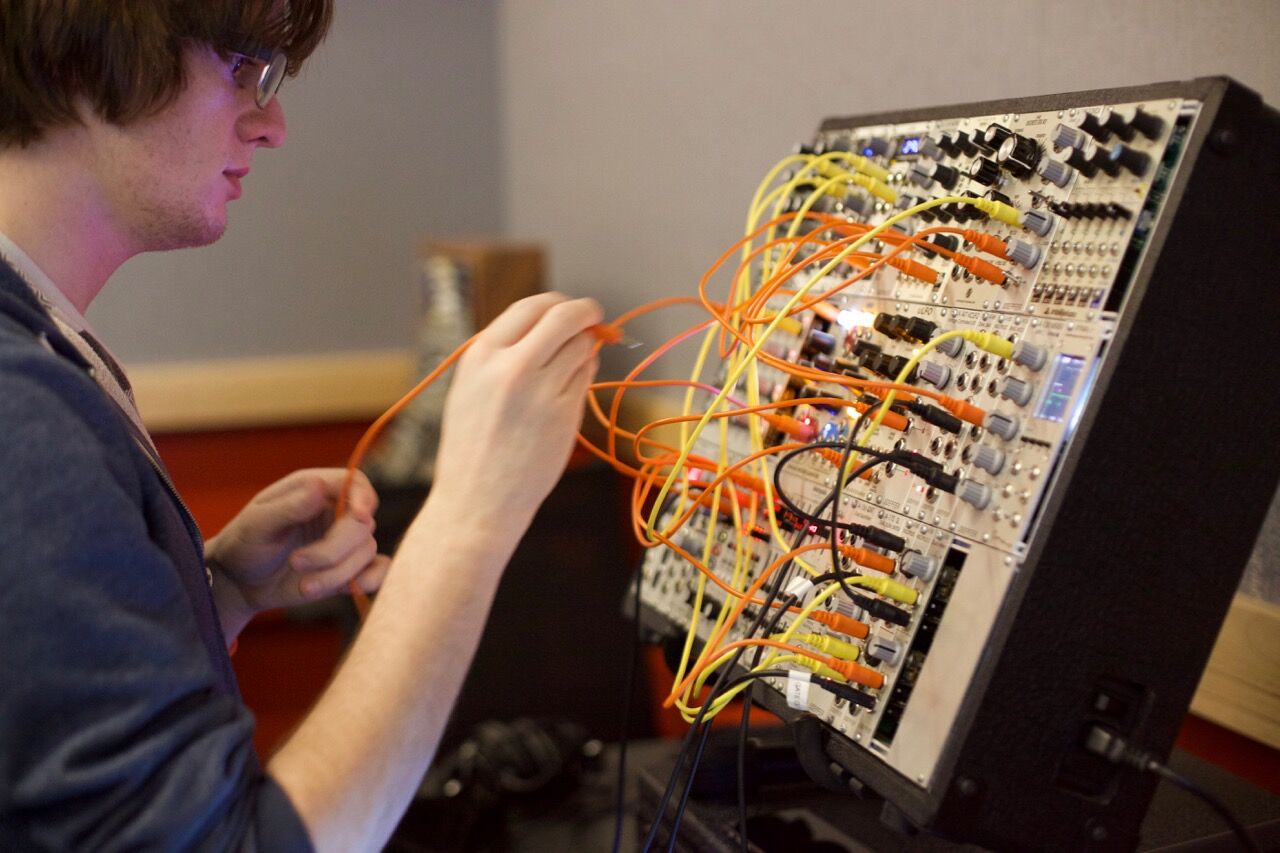

Michael Bierylo will present his Modular Synthesizer Ensemble alongside the Csound workshops from fellow Berklee Professor Richard Boulanger.

Csound is a sound and music computing system originally developed at the MIT Media Lab. It is most accurately described as a compiler: software that takes textual instructions in the form of source code and converts them into object code, which is a stream of numbers representing audio. Although it has a strong tradition as a tool for composing electro-acoustic pieces, composers and musicians use it for any kind of music that can be made with the help of a computer. It has traditionally been used in a non-interactive, score-driven context, but nowadays it is mostly used in a real-time context.
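To make the idea of "textual instructions in, a stream of numbers out" concrete, here is a minimal Python sketch. It is not Csound and does not use Csound syntax: the four-column score format, the `render` function, and the 8000 Hz sample rate are all assumptions made up for this illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed; kept low for brevity)


def render(score: str) -> list:
    """Turn lines of 'start duration frequency amplitude' into audio samples.

    The score format is hypothetical, invented for this sketch.
    """
    notes = []
    for line in score.strip().splitlines():
        start, dur, freq, amp = (float(x) for x in line.split())
        notes.append((start, dur, freq, amp))

    # The output buffer is long enough to hold the last-ending note.
    total_seconds = max(start + dur for start, dur, _, _ in notes)
    samples = [0.0] * round(total_seconds * SAMPLE_RATE)

    for start, dur, freq, amp in notes:
        first = round(start * SAMPLE_RATE)
        for i in range(round(dur * SAMPLE_RATE)):
            if first + i >= len(samples):
                break  # guard against rounding past the end of the buffer
            t = i / SAMPLE_RATE
            # Each note is a plain sine wave mixed (added) into the buffer.
            samples[first + i] += amp * math.sin(2 * math.pi * freq * t)
    return samples


# Two half-second notes, one after the other.
SCORE = """
0.0 0.5 440 0.5
0.5 0.5 660 0.5
"""

samples = render(SCORE)
print(len(samples), "samples")
```

The resulting list of floats is the "stream of numbers representing audio" in its simplest form; writing it to a sound file or sending it to an audio device would be a separate step.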

Michael Bierylo serves as the Chair of the Electronic Production and Design Department, which offers students the opportunity to combine performance, composition, and orchestration with computer, synthesis, and multimedia technology in order to explore the limitless possibilities of musical expression.

Berklee College of Music | Electronic Production and Design (EPD) at Berklee College of Music

Chris Ianuzzi (credit: William Murray)

Chris Ianuzzi, a synthesist of Ciani-Musica and past collaborator with pioneers such as Vangelis and Peter Baumann, will present a daytime performance and sound exploration workshops with the B11 brain interface and the NeuroSky headset, a brainwave-sensing headset.

Theme: Hacking Systems

Argus Project (credit: Moogfest)

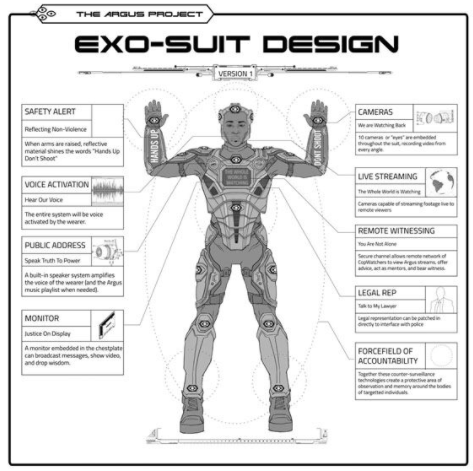

The Argus Project from Gan Golan and Ron Morrison of NEW INC is a wearable sculpture, video installation and counter-surveillance training, which directly intersects the public debate over police accountability. According to ancient Greek myth, Argus Panoptes was a giant with 100 eyes who served as an eternal watchman, both for – and against – the gods.

By embedding an array of camera “eyes” into a full body suit of tactical armor, the Argus exo-suit creates a “force field of accountability” around the bodies of those targeted. While some see filming the police as a confrontational or subversive act, it is, in fact, a deeply democratic one. The act of bearing witness to the actions of the state – and showing them to the world – strengthens our society and institutions. The Argus Project is not so much about an individual hero, but the Citizen Body as a whole. A presentation about the project will take place between music acts as part of the Protest Stage.

Argus Exo Suit Design (credit: Argus Project)

Theme: Protest

Found Sound Nation (credit: Moogfest)

Democracy’s Exquisite Corpse from Found Sound Nation and Moogfest, an immersive installation housed within a completely customized geodesic dome, is a multi-person instrument and music-based round-table discussion. Artists, activists, innovators, festival attendees and community engage in a deeply interactive exploration of sound as a living ecosystem and primal form of communication.

Within the dome, there are 9 unique stations, each with their own distinct set of analog or digital sound-making devices. Each person’s set of devices is chained to the person sitting next to them, so that everybody’s musical actions and choices affect the person next to them, and thus affect everyone else at the table. This instrument is a unique experiment in how technology and the instinctive language of sound can play a role in the shaping of a truly collective unconscious.

Theme: Protest

(credit: Land Marking)

Land Marking, from Halsey Burgund and Joe Zibkow of MIT Open Doc Lab, is a mobile-based music/activist project that augments the physical landscape of protest events with a layer of location-based audio contributed by event participants in real-time. The project captures the audioscape and personal experiences of temporary, but extremely important, expressions of discontent and desire for change.

Land Marking will be teaming up with the Protest Stage to allow Moogfest attendees to contribute their thoughts on protests and tune into an evolving mix of commentary and field recordings from others throughout downtown Durham. Land Marking is available on select apps.

Theme: Protest

Taeyoon Choi (credit: Moogfest)

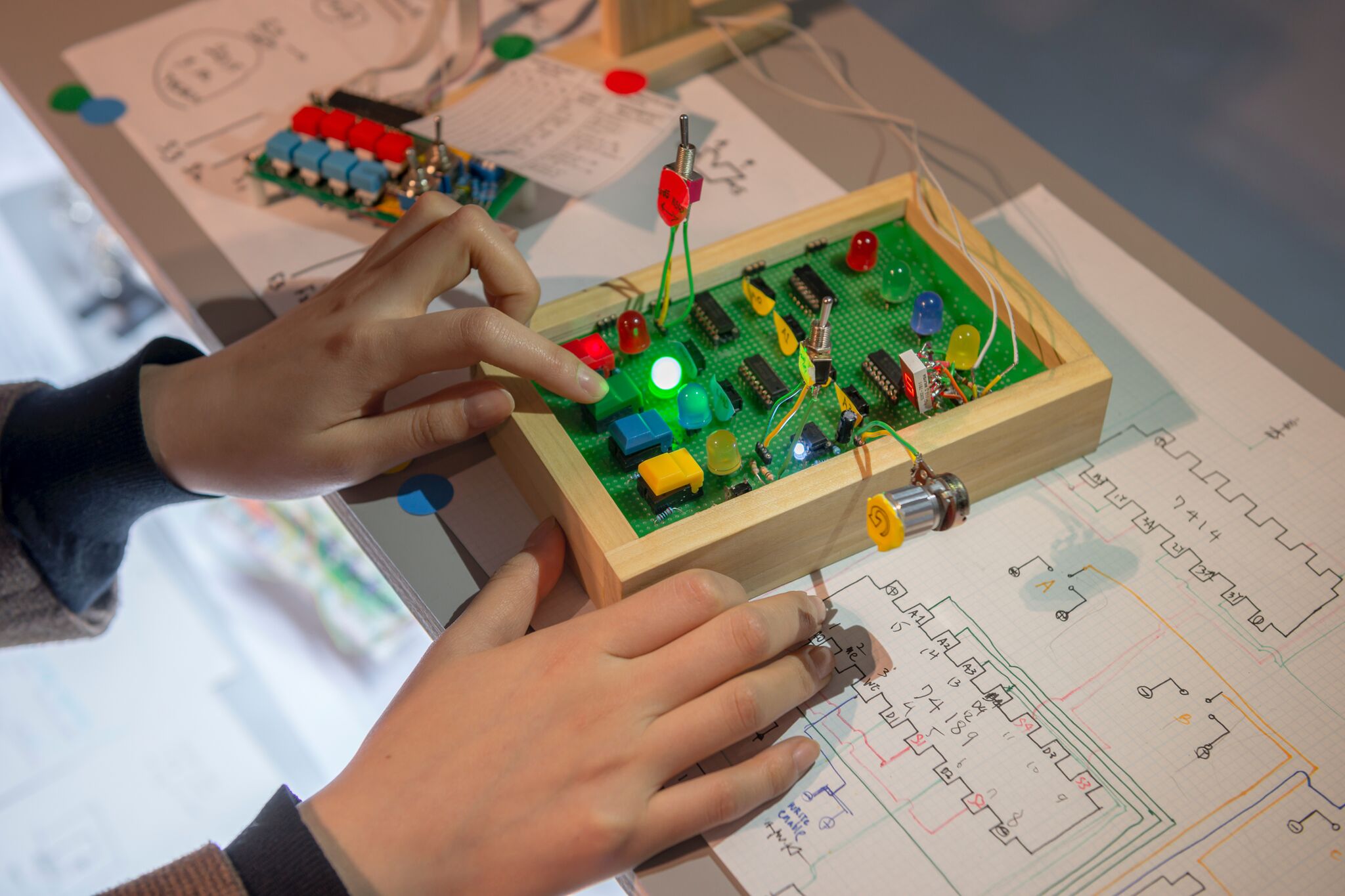

Taeyoon Choi, an artist and educator based in New York and Seoul, will be leading a Sign Making Workshop as one of the Future Thought leaders on the Protest Stage. His art practice involves performance, electronics, drawings and storytelling that often lead to interventions in public spaces.

Taeyoon will also participate in the Handmade Computer workshop to build a 1 Bit Computer, which demonstrates how binary numbers and boolean logic can be configured to create more complex components. On their own these components aren’t capable of computing anything particularly useful, but a computer is said to be Turing complete if it includes all of them, at which point it has the extraordinary ability to carry out any possible computation. He has participated in numerous workshops at festivals around the world, from Korea to Scotland, but primarily at the School for Poetic Computation (SFPC), an artist-run school co-founded by Taeyoon in NYC. Taeyoon Choi’s Handmade Computer projects.
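As a rough illustration of how boolean logic can be configured into more complex components, the Python sketch below builds an adder out of a single primitive gate. It is not taken from the workshop: the choice of NAND as the starting gate and every function name here are assumptions made for this example.

```python
def nand(a: int, b: int) -> int:
    """The only primitive gate in this sketch; inputs and output are 0 or 1."""
    return 0 if (a and b) else 1


# Familiar gates expressed purely in terms of NAND.
def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    return and_(or_(a, b), nand(a, b))


def half_adder(a: int, b: int):
    """Add two bits; returns (sum_bit, carry_bit)."""
    return xor(a, b), and_(a, b)


def full_adder(a: int, b: int, carry_in: int):
    """Add two bits plus an incoming carry; returns (sum_bit, carry_out)."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_(c1, c2)


def add_bits(x: list, y: list) -> list:
    """Add two equal-length binary numbers given least-significant bit first."""
    carry = 0
    out = []
    for a, b in zip(x, y):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    return out + [carry]


# 5 + 3 with bits listed least-significant first: 5 = [1,0,1,0], 3 = [1,1,0,0]
result = add_bits([1, 0, 1, 0], [1, 1, 0, 0])
print(result)  # [0, 0, 0, 1, 0], which is 8
```

Each layer only calls the layer beneath it, which is the point of the exercise: a one-bit building block, repeated and wired together, ends up doing multi-bit arithmetic.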

Theme: Protest

(credit: Moogfest)

irlbb, from Vivan Thi Tang, connects individuals after IRL (in real life) interactions and creates community that otherwise would have been missed. With a customized beta of the app for Moogfest 2017, irlbb presents a unique engagement opportunity.

Theme: Protest

Ryan Shaw and Michael Clamann (credit: Duke University)

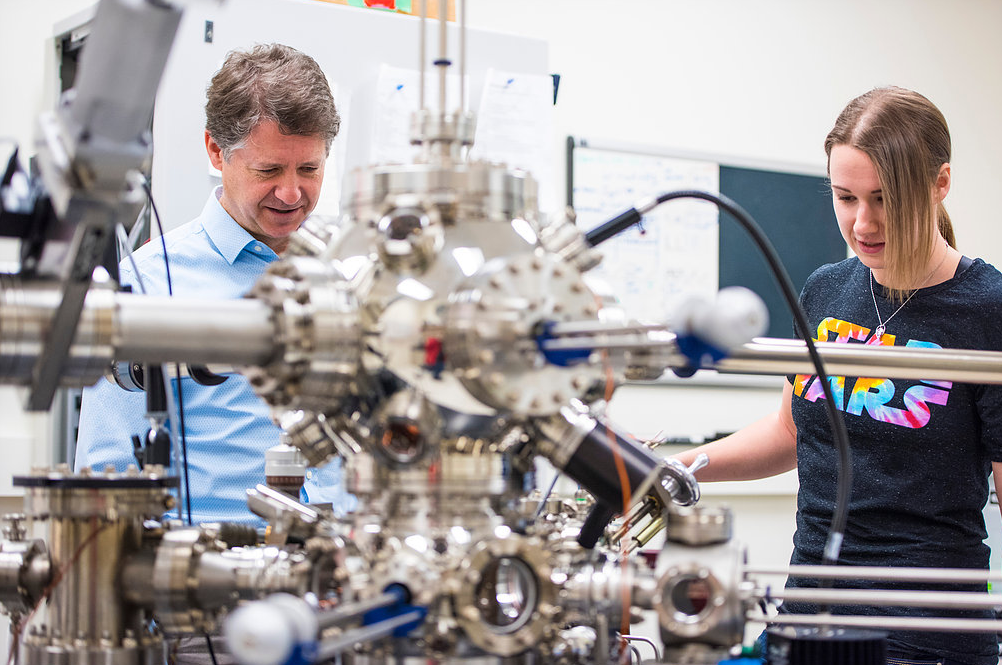

Duke Professors Ryan Shaw and Michael Clamann will lead a daily science pub talk series on topics that include future medicine, humans and anatomy, and quantum physics.

Ryan is a pioneer in mobile health—the collection and dissemination of information using mobile and wireless devices for healthcare–working with faculty at Duke’s Schools of Nursing, Medicine and Engineering to integrate mobile technologies into first-generation care delivery systems. These technologies afford researchers, clinicians, and patients a rich stream of real-time information about individuals’ biophysical and behavioral health in everyday environments.

Michael Clamann is a Senior Research Scientist in the Humans and Autonomy Lab (HAL) within the Robotics Program at Duke University, an Associate Director at UNC’s Collaborative Sciences Center for Road Safety, and the Lead Editor for Robotics and Artificial Intelligence for Duke’s SciPol science policy tracking website. In his research, he works to better understand the complex interactions between robots and people and how they influence system effectiveness and safety.

Theme: Hacking Systems

Dave Smith (credit: Moogfest)

Dave Smith, the iconic instrument innovator and Grammy-winner, will lead Moogfest’s Instruments Innovators program and host a headlining conversation with a leading artist revealed in next week’s release. He will also host a masterclass.

As the original founder of Sequential Circuits in the mid-70s, Dave designed the Prophet-5, the world’s first fully programmable polyphonic synth and the first musical instrument with an embedded microprocessor. From the late 1980s through the early 2000s he worked to develop next-level synths with the likes of the Audio Engineering Society, Yamaha, Korg, and Seer Systems (for Intel). Realizing the limitations of software, Dave returned to hardware and started Dave Smith Instruments (DSI), which released the Evolver hybrid analog/digital synthesizer in 2002. Since then the DSI product lineup has grown to include the Prophet-6, OB-6, Pro 2, Prophet 12, and Prophet ’08 synthesizers, as well as the Tempest drum machine, co-designed with friend and fellow electronic instrument designer Roger Linn.

Theme: Future Thought

Dave Rossum, Gerhard Behles, and Lars Larsen (credit: Moogfest)

E-mu Systems Founder Dave Rossum, Ableton CEO Gerhard Behles, and LZX Founder Lars Larsen will take part in conversations as part of the Instruments Innovators program.

Driven by the creative and technological vision of electronic music pioneer Dave Rossum, Rossum Electro-Music creates uniquely powerful tools for electronic music production and is the culmination of Dave’s 45 years designing industry-defining instruments and transformative technologies. Starting with his co-founding of E-mu Systems, Dave provided the technological leadership that resulted in what many consider the premier professional modular synthesizer system, the E-mu Modular System, which became an instrument of choice for numerous recording studios, educational institutions, and artists as diverse as Frank Zappa, Leon Russell, and Hans Zimmer. In the following years, he worked on developing the Emulator keyboards and racks (e.g., the Emulator II), the Emax samplers, the legendary SP-12 and SP-1200 sampling drum machines, the Proteus sound modules, and the Morpheus Z-Plane Synthesizer.

Gerhard Behles co-founded Ableton in 1999 with Robert Henke and Bernd Roggendorf. Prior to this he had been part of electronic music act “Monolake” alongside Robert Henke, but his interest in how technology drives the way music is made diverted his energy towards developing music software. He was fascinated by how dub pioneers such as King Tubby ‘played’ the recording studio, and began to shape this concept into a music instrument that became Ableton Live.

LZX Industries was born in 2008 out of the Synth DIY scene when Lars Larsen of Denton, Texas and Ed Leckie of Sydney, Australia began collaborating on the development of a modular video synthesizer. At that time, analog video synthesizers were inaccessible to artists outside of a handful of studios and universities. It was their continuing mission to design creative video instruments that (1) stay within the financial means of the artists who wish to use them, (2) honor and preserve the legacy of 20th century toolmakers, and (3) expand the boundaries of possibility. Since 2015, LZX Industries has focused on the research and development of new instruments, user support, and community building.

Science

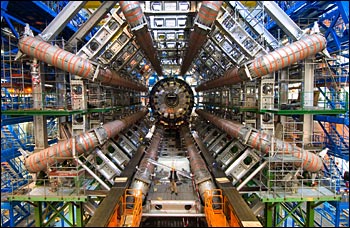

ATLAS detector (credit: Kaushik De, Brookhaven National Laboratory)

ATLAS @ CERN. The full ATLAS @ CERN program will be led by Duke University Professors Mark Kruse and Katherine Hayles along with ATLAS @ CERN Physicist Steven Goldfarb.

The program will include a “Virtual Visit” to the Large Hadron Collider — the world’s largest and most powerful particle accelerator — via a live video session, a ½ day workshop analyzing and understanding LHC data, and a “Science Fiction versus Science Fact” live debate.

The ATLAS experiment is designed to exploit the full discovery potential and the huge range of physics opportunities that the LHC provides. Physicists test the predictions of the Standard Model, which encapsulates our current understanding of what the building blocks of matter are and how they interact, resulting in such discoveries as the Higgs boson. By pushing the frontiers of knowledge, the experiment seeks to answer fundamental questions such as: What are the basic building blocks of matter? What are the fundamental forces of nature? Could there be a greater underlying symmetry to our universe?

“Atlas Boogie” (referencing Higgs Boson):

ATLAS Experiment | The ATLAS Boogie

(credit: Kate Shaw)

Kate Shaw (ATLAS @ CERN), PhD, in her keynote, titled “Exploring the Universe and Impacting Society Worldwide with the Large Hadron Collider (LHC) at CERN,” will dive into the present-day and future impacts of the LHC on society. She will also share findings from the work she has done promoting particle physics in developing countries through her Physics without Frontiers program.

Theme: Future Thought

Arecibo (credit: Joe Davis/MIT)

In his keynote, Joe Davis (MIT) will trace the history of several projects centered on ideas about extraterrestrial communications that have given rise to new scientific techniques and inspired new forms of artistic practice. He will present his “swansong” — an interstellar message that is intended explicitly for human beings rather than for aliens.

Theme: Future Thought

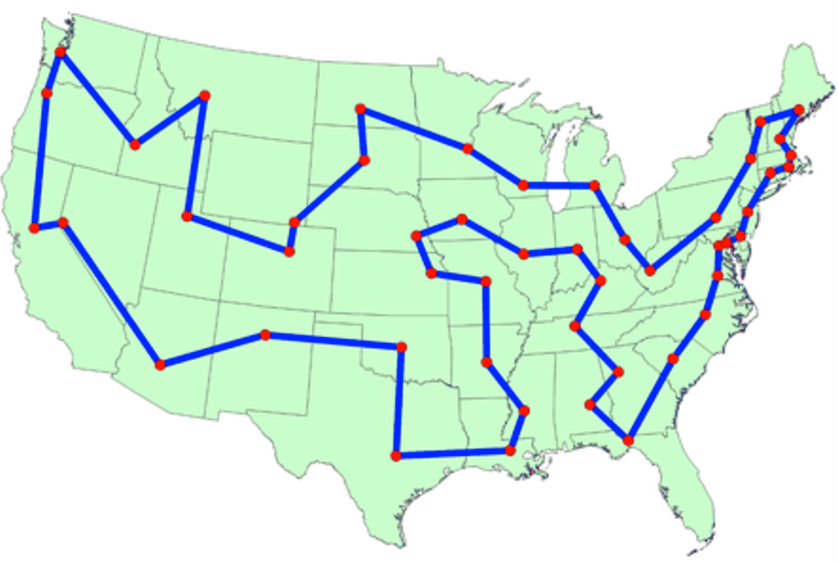

Immortality bus (credit: Zoltan Istvan)

Zoltan Istvan (Immortality Bus), the former U.S. Presidential candidate for the Transhumanist Party and leader of the Transhumanist movement, will explore the path to immortality through science, with the purpose of using science and technology to radically enhance the human being and human experience. His futurist work has reached over 100 million people, some of it due to the Immortality Bus, which he recently drove across America with embedded journalists aboard. The bus is shaped like a giant coffin to raise life extension awareness.

Zoltan Istvan | 1-min Hightlight Video for Zoltan Istvan Transhumanism Documentary IMMORTALITY OR BUST

Theme: Transhumanism/Biotechnology

(credit: Moogfest)

Marc Fleury and members of the Church of Space (Park Krausen, Ingmar Koch, and Christof Veillon) return to Moogfest for a second year to present an expanded and varied program with daily explorations in modern physics with music and the occult, Illuminati performances, theatrical rituals to ERIS, and a Sunday Mass in their own dedicated “Church” venue.

Theme: Techno-Shamanism
