A “zoolophone” with animal-shaped keys, designed automatically by a computer algorithm. The tone of each key is comparable to that of professionally made instruments, demonstrating an algorithm for computationally designing an object’s vibrational properties and sounds. (Changxi Zheng/Columbia Engineering)

Computer scientists at Columbia Engineering, Harvard, and MIT have demonstrated that acoustic properties — both sound and vibration — can be controlled by 3D-printing specific shapes.

They designed an optimization algorithm and used computational methods and digital fabrication to alter the shape of 2D and 3D objects, creating what looks like a simple children’s musical instrument: a xylophone with keys in the shape of zoo animals.

Practical uses

“Our discovery could lead to a wealth of possibilities that go well beyond musical instruments,” says Changxi Zheng, assistant professor of computer science at Columbia Engineering, who led the research team.

“Our algorithm could lead to ways to build less noisy computer fans and bridges that don’t amplify vibrations under stress, and advance the construction of micro-electro-mechanical resonators whose vibration modes are of great importance.”

Zheng, who works in the area of dynamic, physics-based computational sound for immersive environments, wanted to see whether he could use computation and digital fabrication to actively control an object’s acoustic properties, in particular its vibration.

Zheng’s team decided to focus on simplifying the slow, complicated, manual process of designing “idiophones” — musical instruments that produce sounds through vibrations in the instrument itself, not through strings or reeds.

The surface vibration and resulting sounds depend on the idiophone’s shape in a complex way, so designing shapes that produce desired sound characteristics is not straightforward. As a result, idiophone forms have so far been limited to well-understood designs, such as bars tuned by carefully drilling dimples into their undersides.
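A textbook idealization shows why shape matters so much. For a thin, uniform bar with both ends free (an Euler-Bernoulli beam), mode frequencies are proportional to the squares of the roots of cos(x)·cosh(x) = 1, so the overtones land at roughly 2.76 and 5.40 times the fundamental rather than at whole-number multiples. The sketch below assumes that idealized bar (it is not the zoolophone’s vibration model) and finds the roots by bisection; the function name `free_free_beam_roots` is hypothetical.

```python
import math


def free_free_beam_roots(n_modes):
    """Return the first n_modes nonzero roots x of cos(x) * cosh(x) = 1.

    These are the dimensionless wavenumbers (beta * L) of an idealized
    uniform Euler-Bernoulli beam with both ends free.
    """

    def f(x):
        return math.cos(x) * math.cosh(x) - 1.0

    roots = []
    for k in range(1, n_modes + 1):
        # The k-th root lies close to (2k + 1) * pi / 2; bracket it by +/- 0.5.
        center = (2 * k + 1) * math.pi / 2
        lo, hi = center - 0.5, center + 0.5
        for _ in range(100):  # bisection: keep the half that contains the sign change
            mid = 0.5 * (lo + hi)
            if f(lo) * f(mid) <= 0.0:
                hi = mid
            else:
                lo = mid
        roots.append(0.5 * (lo + hi))
    return roots


roots = free_free_beam_roots(3)
# Frequency scales with x**2, so each mode's ratio to the fundamental is (x_n / x_1) ** 2.
ratios = [(x / roots[0]) ** 2 for x in roots]
print([round(r, 3) for r in ratios])  # [1.0, 2.757, 5.404]
```

Removing material from the underside shifts these ratios, which is how bar makers tune them; for an arbitrary animal-shaped key there is no such closed-form equation, which is where numerical modeling comes in.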

Optimizing sound properties

To demonstrate their new technique, the team settled on building a “zoolophone,” a metallophone with playful animal shapes. (A metallophone is an idiophone made of tuned metal bars that are struck to make sound, such as a glockenspiel.)

Their algorithm optimized the geometry of the instrument’s keys, shaped as colorful lions, turtles, elephants, giraffes, and more, to achieve the desired pitch and amplitude for each one; the keys were then 3D-printed.

“Our zoolophone’s keys are automatically tuned to play notes on a scale with overtones and frequency of a professionally produced xylophone,” says Zheng, whose team spent nearly two years developing new computational methods, borrowing concepts from computer graphics, acoustic modeling, mechanical engineering, and 3D printing.

“By automatically optimizing the shape of 2D and 3D objects through deformation and perforation, we were able to produce such professional sounds that our technique will enable even novices to design metallophones with unique sound and appearance.”

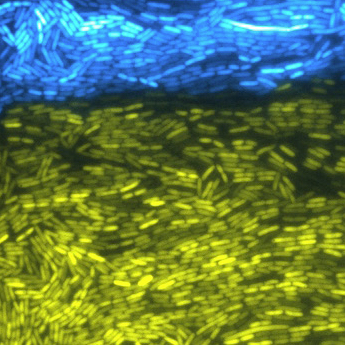

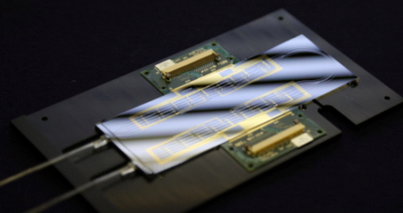

3D metallophone cups automatically created by computers (credit: Changxi Zheng/Columbia Engineering)

The zoolophone represents fundamental research into understanding the complex relationships between an object’s geometry and its material properties, and the vibrations and sounds it produces when struck.

While previous algorithms attempted to optimize either amplitude (loudness) or frequency, the zoolophone required optimizing both simultaneously to fully control its acoustic properties. Creating realistic musical sounds also required controlling overtones: secondary frequencies above the fundamental that contribute to the timbre associated with notes played on a professionally produced instrument.
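To see why optimizing frequency alone is not enough, consider a score that compares a candidate key’s spectrum with a target spectrum on both axes at once. The sketch below is a hypothetical objective, not the one used in the paper: each spectrum is assumed to be a list of (frequency in Hz, amplitude) pairs matched partial by partial, pitch error is measured in cents, and `amp_weight` is an assumed weight that balances the two terms.

```python
import math


def spectrum_loss(predicted, target, amp_weight=1.0):
    """Hypothetical mismatch score between two spectra.

    Each spectrum is a list of (frequency_hz, amplitude) pairs, matched by
    position (fundamental first, then overtones). Frequency error is measured
    in cents so it does not depend on the register; amplitude error is a
    squared difference scaled by amp_weight.
    """
    if len(predicted) != len(target):
        raise ValueError("spectra must list the same number of partials")
    freq_term = 0.0
    amp_term = 0.0
    for (f_p, a_p), (f_t, a_t) in zip(predicted, target):
        cents = 1200.0 * math.log2(f_p / f_t)  # pitch error in cents (100 = one semitone)
        freq_term += cents ** 2
        amp_term += (a_p - a_t) ** 2
    return freq_term + amp_weight * amp_term


# Hypothetical target for one key: a fundamental plus one overtone.
target = [(440.0, 1.0), (1320.0, 0.3)]
# Correct pitches, but the overtone is too loud.
right_pitch_wrong_loudness = [(440.0, 1.0), (1320.0, 0.5)]
print(spectrum_loss(right_pitch_wrong_loudness, target))
```

A frequency-only objective would rate the second candidate as a perfect match even though its overtone is too loud; the amplitude term is what catches that.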

Finding the optimal shape that produces the desired sound when struck proved to be the core computational difficulty, because the search space for optimizing amplitude and frequency together is immense. To improve the chances of finding the best shape, Zheng and his colleagues developed a new, fast stochastic optimization method, which they called Latin Complement Sampling (LCS).
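The article does not describe how LCS works internally. As a generic illustration of stochastic sampling over a space of shape parameters, the sketch below uses plain Latin hypercube sampling, a standard stratified sampling technique; it is not LCS. The names `latin_hypercube`, `sample_search`, and `toy_objective` are hypothetical, and the toy objective merely stands in for a real sound-matching score.

```python
import random


def latin_hypercube(n_samples, bounds, rng):
    """Draw n_samples points in a box using Latin hypercube sampling.

    bounds is a list of (low, high) pairs, one per shape parameter. Each
    parameter's range is split into n_samples equal strata, and every
    stratum is used exactly once for that parameter.
    """
    columns = []
    for low, high in bounds:
        width = (high - low) / n_samples
        # One random point inside each stratum, then shuffle the stratum order
        # so different parameters are paired up randomly.
        col = [low + (i + rng.random()) * width for i in range(n_samples)]
        rng.shuffle(col)
        columns.append(col)
    return [list(point) for point in zip(*columns)]


def sample_search(objective, bounds, n_samples=64, seed=0):
    """Evaluate the objective at Latin hypercube samples and return the best point."""
    rng = random.Random(seed)
    candidates = latin_hypercube(n_samples, bounds, rng)
    return min(candidates, key=objective)


def toy_objective(p):
    # Stand-in for "how far is this shape's sound from the target?"; minimum at (0.3, 0.7).
    return (p[0] - 0.3) ** 2 + (p[1] - 0.7) ** 2


best = sample_search(toy_objective, bounds=[(0.0, 1.0), (0.0, 1.0)], n_samples=256)
print(best)
```

Stratifying each parameter guarantees that every slice of its range is tried once, which spreads a fixed budget of evaluations more evenly than independent random draws. That matters when, as in a real design problem, each evaluation would likely involve an expensive vibration simulation.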

The method takes as input a shape and user-specified frequency and amplitude spectra (for instance, users can specify which shape produces which note) and optimizes the shape through deformation and perforation to produce the desired sounds. According to the team, LCS outperformed alternative optimization methods and can be applied to a variety of other problems.
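To make those inputs concrete, here is a minimal sketch of how a user’s note assignments might be turned into target spectra. It assumes 12-tone equal temperament with A4 = 440 Hz and a first overtone at three times the fundamental, a ratio xylophone bars are often tuned to; the function names, amplitude values, and the mapping of animals to notes are hypothetical, not the paper’s actual input format.

```python
def note_to_hz(midi_note, a4_hz=440.0):
    """Equal-tempered frequency of a MIDI note number (69 = A4)."""
    return a4_hz * 2.0 ** ((midi_note - 69) / 12.0)


def target_spectrum(midi_note, overtone_ratios=(3.0,), amplitudes=(1.0, 0.3)):
    """Hypothetical target: a fundamental plus overtones at the given ratios.

    Returns a list of (frequency_hz, amplitude) pairs, fundamental first.
    """
    f0 = note_to_hz(midi_note)
    freqs = [f0] + [f0 * r for r in overtone_ratios]
    if len(amplitudes) != len(freqs):
        raise ValueError("need one amplitude per partial")
    return list(zip(freqs, amplitudes))


# Hypothetical assignment of key shapes to notes: C, D, E, F starting at C5 (MIDI 72).
keys = {"lion": 72, "turtle": 74, "elephant": 76, "giraffe": 77}
targets = {name: target_spectrum(note) for name, note in keys.items()}
print(targets["lion"])
```

Keeping the target as plain data means the same shape optimizer can be pointed at a different scale or timbre simply by changing the note map or the overtone ratios.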

“Acoustic design of objects today remains slow and expensive,” Zheng notes. “We would like to explore computational design algorithms to improve the process for better controlling an object’s acoustic properties, whether to achieve desired sound spectra or to reduce undesired noise. This project underscores our first step toward this exciting direction in helping us design objects in a new way.”

Zheng, whose previous work in computer graphics includes synthesizing realistic sounds that are automatically synchronized to simulated motions, has already been contacted by researchers interested in applying his approach to micro-electro-mechanical systems (MEMS), in which vibrations filter RF signals.

Their paper, “Computational Design of Metallophone Contact Sounds,” will be presented at SIGGRAPH Asia on November 4 in Kobe, Japan.

The work at Columbia Engineering was supported in part by the National Science Foundation (NSF) and Intel; the work at Harvard and MIT was supported by NSF, the Air Force Research Laboratory, and DARPA.