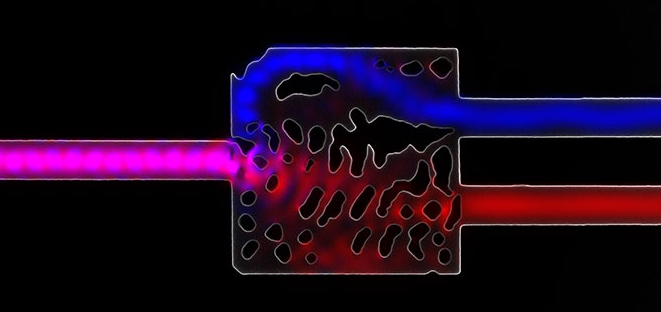

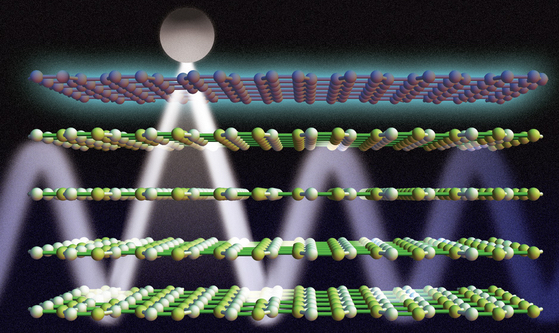

Researchers have shown that a DC voltage applied to layers of graphene and boron nitride can be used to control light emission from a nearby atom. Here, graphene is represented by a maroon-colored top layer; boron nitride is represented by yellow-green lattices below the graphene; and the atom is represented by a grey circle. A low applied DC voltage (shown in blue) allows the light to propagate inside the boron nitride, forming a tightly confined waveguide for optical signals. (credit: Anshuman Kumar Srivastava and Jose Luis Olivares/MIT)

In a new discovery that could lead to chips that combine optical and electronic components, researchers at MIT, IBM, and two universities have found a way to combine light and sound in a single material system. According to the researchers, this approach has far lower losses than building such devices separately and then interconnecting them.

Light interacting with graphene produces plasmons (collective oscillations of electrons), while light interacting with hexagonal boron nitride (hBN) produces phonons (sound “particles”). MIT’s Nicholas Fang and his colleagues found that when the materials are combined in a certain way, the plasmons and phonons can couple, producing a strong resonance.

The properties of the graphene allow precise control over light, while hBN provides very strong confinement and guidance of the light. Combining the two makes it possible to create new “metamaterials” that marry the advantages of both, the researchers say.

The paper is co-authored by MIT associate professor of mechanical engineering Nicholas Fang, graduate student Anshuman Kumar, and colleagues at IBM’s T.J. Watson Research Center, Hong Kong Polytechnic University, and the University of Minnesota.

According to Phaedon Avouris, a researcher at IBM and co-author of the paper, “The combination of these two materials provides a unique system that allows the manipulation of optical processes.”

The two materials are structurally similar — both composed of hexagonal arrays of atoms that form two-dimensional sheets — but they each interact with light quite differently. The researchers found that these interactions can be complementary, and can couple in ways that afford a great deal of control over the behavior of light.

The hybrid material blocks light when a particular voltage is applied to the graphene layer. When a different voltage is applied, a special kind of emission and propagation, called “hyperbolicity,” occurs. This phenomenon has not been seen before in optical systems, Fang says.

Nanoscale optical waveguides

The result: an extremely thin sheet of material can interact strongly with light, allowing beams to be guided, funneled, and controlled by different voltages applied to the sheet.

The combined materials create a tuned system that can be adjusted to allow light only of certain specific wavelengths or directions to propagate, they say.

These properties should make it possible, Fang says, to create tiny optical waveguides about 20 nanometers in size, the same size range as the smallest features that can now be produced in microchips.

“Our work paves the way for using 2-D material heterostructures for engineering new optical properties on demand,” says co-author Tony Low, a researcher at IBM and the University of Minnesota.

Single-molecule optical resolution

Another potential application, Fang says, comes from the ability to switch a light beam on and off at the material’s surface. Because the material naturally works at mid-infrared wavelengths, this could open new avenues for infrared spectroscopy. “It could even enable single-molecule resolution,” Fang says, referring to biomolecules placed on the hybrid material’s surface.

Sheng Shen, an assistant professor of mechanical engineering at Carnegie Mellon University who was not involved in this research, says, “This work represents significant progress on understanding tunable interactions of light in graphene-hBN.” The work is “pretty critical” for providing the understanding needed to develop optoelectronic or photonic devices based on graphene and hBN, he says, and “could provide direct theoretical guidance on designing such types of devices. … I am personally very excited about this novel theoretical work.”

The research team also included Kin Hung Fung of Hong Kong Polytechnic University. The work was supported by the National Science Foundation and the Air Force Office of Scientific Research.

Abstract of Tunable Light–Matter Interaction and the Role of Hyperbolicity in Graphene–hBN System

Hexagonal boron nitride (hBN) is a natural hyperbolic material, which can also accommodate highly dispersive surface phonon-polariton modes. In this paper, we examine theoretically the mid-infrared optical properties of graphene–hBN heterostructures derived from their coupled plasmon–phonon modes. We find that the graphene plasmon couples differently with the phonons of the two Reststrahlen bands, owing to their different hyperbolicity. This also leads to distinctively different interaction between an external quantum emitter and the plasmon–phonon modes in the two bands, leading to substantial modification of its spectrum. The coupling to graphene plasmons allows for additional gate tunability in the Purcell factor and narrow dips in its emission spectra.
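The abstract’s point that the two Reststrahlen bands differ in “hyperbolicity” can be illustrated with a minimal sketch, assuming the standard uniaxial Lorentz-oscillator model of hBN’s permittivity. The parameter values below (high-frequency permittivities, TO/LO phonon frequencies, damping) are typical literature numbers used here as an assumption; they are not taken from this paper, and the sketch leaves out the graphene layer and its plasmon coupling entirely. In the lower band only the out-of-plane permittivity is negative (commonly called type I), while in the upper band only the in-plane permittivity is negative (type II).

```python
# Minimal sketch: classify hBN's optical response at a given frequency using a
# uniaxial Lorentz-oscillator permittivity model.
# ASSUMPTION: parameter values are typical literature numbers, not from the paper.
# Graphene (and plasmon-phonon coupling) is NOT modeled here.
from dataclasses import dataclass


@dataclass(frozen=True)
class Oscillator:
    eps_inf: float  # high-frequency permittivity
    w_to: float     # transverse-optical phonon frequency (cm^-1)
    w_lo: float     # longitudinal-optical phonon frequency (cm^-1)
    gamma: float    # damping rate (cm^-1)

    def eps(self, w: float) -> complex:
        # eps(w) = eps_inf * (w_LO^2 - w^2 - i*gamma*w) / (w_TO^2 - w^2 - i*gamma*w)
        num = self.w_lo**2 - w**2 - 1j * self.gamma * w
        den = self.w_to**2 - w**2 - 1j * self.gamma * w
        return self.eps_inf * num / den


# In-plane (x, y) and out-of-plane (z) phonon resonances of hBN (assumed values).
IN_PLANE = Oscillator(eps_inf=4.87, w_to=1370.0, w_lo=1610.0, gamma=5.0)
OUT_OF_PLANE = Oscillator(eps_inf=2.95, w_to=780.0, w_lo=830.0, gamma=4.0)


def classify(w: float) -> str:
    """Label the response at wavenumber w (cm^-1) by the signs of Re(eps)."""
    ex = IN_PLANE.eps(w).real
    ez = OUT_OF_PLANE.eps(w).real
    if ex > 0 and ez < 0:
        return "type I hyperbolic"   # only the out-of-plane component is negative
    if ex < 0 and ez > 0:
        return "type II hyperbolic"  # only the in-plane components are negative
    if ex > 0 and ez > 0:
        return "dielectric"          # outside both Reststrahlen bands
    return "metallic"                # both negative (not reached with these values)


if __name__ == "__main__":
    for w in (800.0, 1000.0, 1500.0):
        print(w, classify(w))
```

Running it prints “type I hyperbolic” at 800 cm⁻¹ (lower band), “dielectric” at 1000 cm⁻¹ (between the bands), and “type II hyperbolic” at 1500 cm⁻¹ (upper band). This sign change between the bands is what the abstract means by “different hyperbolicity,” and the paper’s claim is that the graphene plasmon couples differently to the phonons in each.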