American and Korean researchers are creating an artificial nerve system for robots and humans. (credit: Kevin Craft)

Researchers at Stanford University and Seoul National University have developed an artificial sensory nerve system that’s a step toward creating artificial skin for prosthetic limbs, restoring sensation to amputees, and giving robots human-like reflexes.*

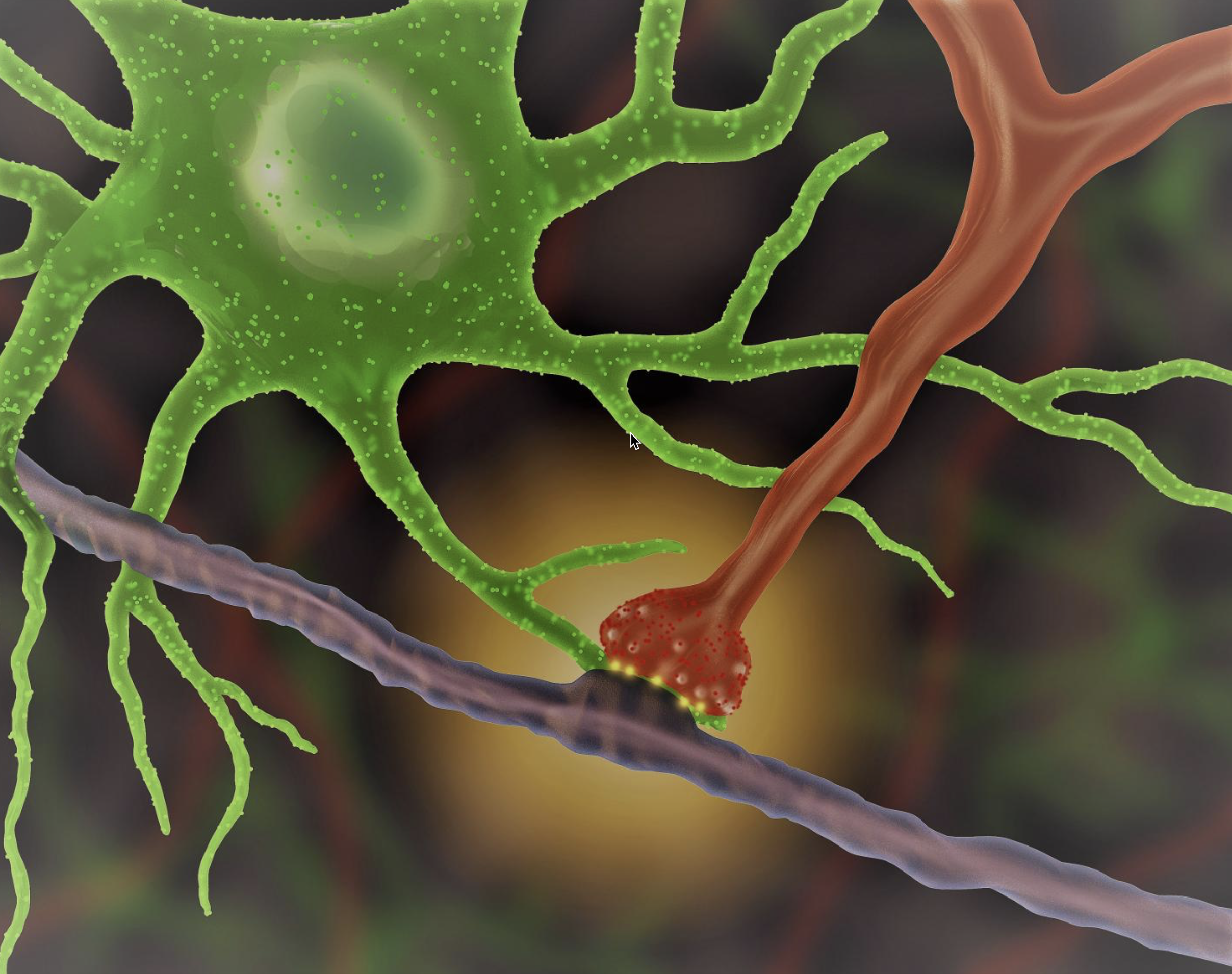

Their rudimentary artificial nerve circuit integrates three previously developed components: a touch-pressure sensor, a flexible electronic neuron, and an artificial synaptic transistor modeled on human synapses.

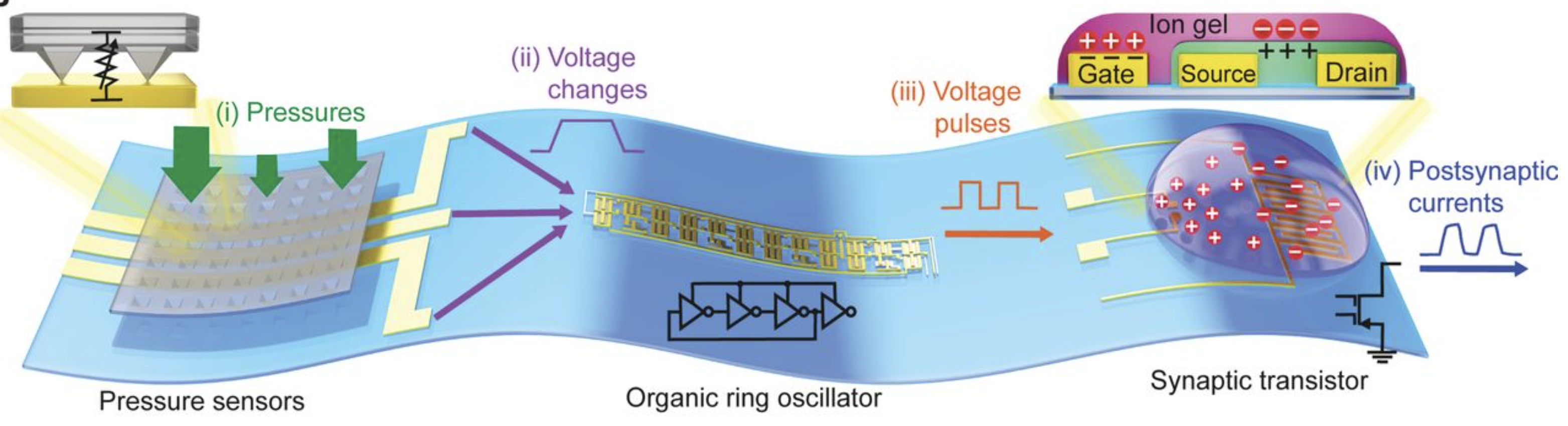

Here’s how the artificial nerve circuit works:

(Biological model) Pressures applied to afferent (sensory) mechanoreceptors (pressure sensors, in this case) in the finger change the receptor potential (voltage) of each mechanoreceptor. The receptor potential changes combine and initiate action potentials in the nerve fiber, connected to a heminode in the chest. The nerve fiber forms synapses with interneurons in the spinal cord. Action potentials from multiple nerve fibers combine through the synapses and contribute to information processing (via postsynaptic potentials). (credit: Yeongin Kim (Stanford University), Alex Chortos (Stanford University), Wentao Xu (Seoul National University), Zhenan Bao (Stanford University), Tae-Woo Lee (Seoul National University))

(Artificial model) Illustration of a corresponding artificial afferent nerve system made of pressure sensors, an organic ring oscillator (simulates a neuron), and a transistor that simulates a synapse. (Only one ring oscillator connected to a synaptic transistor is shown here for simplicity.) Colors of parts match corresponding colors in the biological version. (credit: Yeongin Kim (Stanford University), Alex Chortos (Stanford University), Wentao Xu (Seoul National University), Zhenan Bao (Stanford University), Tae-Woo Lee (Seoul National University))

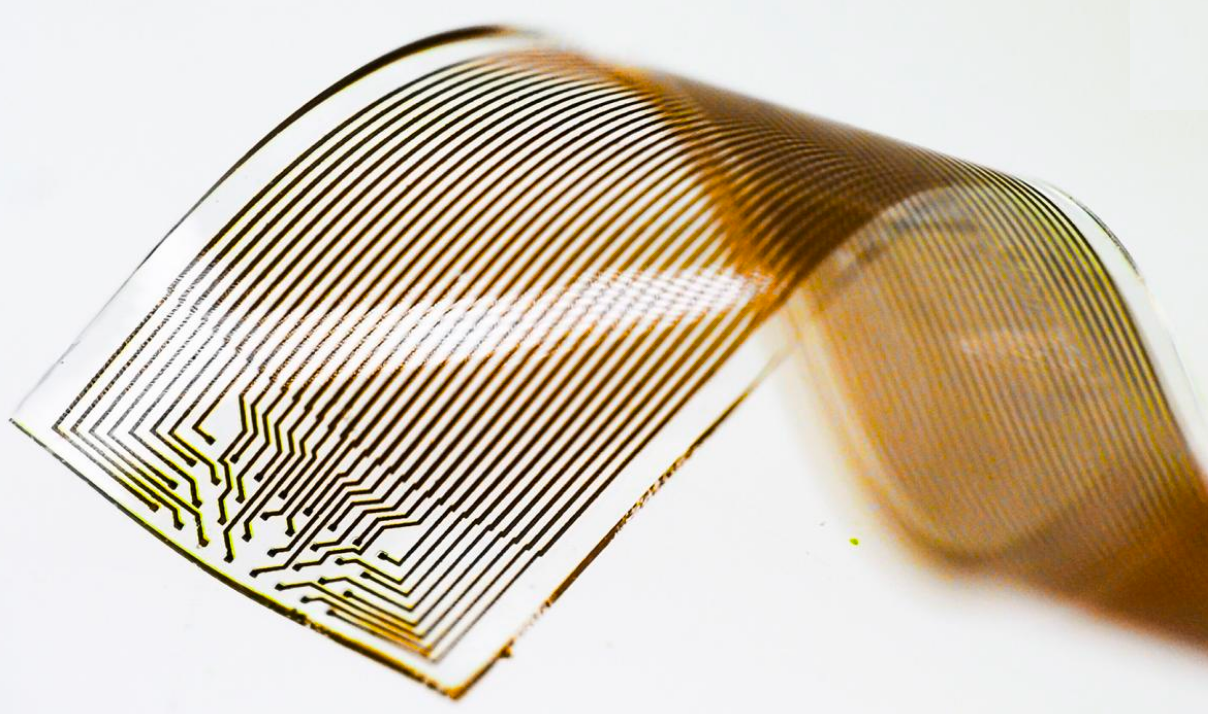

(Photo) Artificial sensor, artificial neuron, and artificial synapse. (credit: Yeongin Kim (Stanford University), Alex Chortos (Stanford University), Wentao Xu (Seoul National University), Zhenan Bao (Stanford University), Tae-Woo Lee (Seoul National University))
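The signal chain described above (pressure sensors driving a ring oscillator, whose pulses are then combined by a synaptic transistor) can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not the researchers' device model: the saturating pressure-to-frequency curve, the leaky-integrator "synapse," and every parameter value (`f_max`, `half_pressure`, `tau`, `gain`) are hypothetical choices made for illustration.

```python
import math


def oscillator_frequency(pressure_kpa, f_min=0.0, f_max=100.0, half_pressure=20.0):
    """Toy saturating map from pressure (kPa) to oscillator frequency (Hz).

    Assumption: higher pressure yields a higher pulse rate, leveling off at f_max.
    """
    if pressure_kpa <= 0:
        return f_min
    return f_min + (f_max - f_min) * pressure_kpa / (pressure_kpa + half_pressure)


def spike_times(frequency_hz, duration_s):
    """Evenly spaced pulse times for an oscillator running at a fixed frequency."""
    if frequency_hz <= 0:
        return []
    period = 1.0 / frequency_hz
    count = int(duration_s * frequency_hz)
    return [k * period for k in range(count)]


def postsynaptic_current(pressures_kpa, duration_s=1.0, dt=0.001, tau=0.05, gain=1.0):
    """Combine pulses from several sensors with a leaky integrator.

    Each pulse adds `gain` to the output, which decays with time constant `tau`.
    This stands in for the synaptic transistor; it is not a physical model.
    """
    steps = int(round(duration_s / dt))
    pulses = [0] * steps
    for pressure in pressures_kpa:
        for t in spike_times(oscillator_frequency(pressure), duration_s):
            index = min(int(round(t / dt)), steps - 1)
            pulses[index] += 1

    decay = math.exp(-dt / tau)
    current = 0.0
    trace = []
    for pulse_count in pulses:
        current = current * decay + gain * pulse_count
        trace.append(current)
    return trace


if __name__ == "__main__":
    light = postsynaptic_current([5.0])
    firm = postsynaptic_current([50.0])
    print("peak output, light touch:", max(light))
    print("peak output, firm touch:", max(firm))
```

In this sketch, a firmer touch produces faster pulses, and faster pulses pile up before the previous ones decay, so the integrated output rises. Passing several pressures at once mimics multiple sensors feeding one synapse.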

Experiments with the artificial nerve circuit

In a demonstration experiment, the researchers used the artificial nerve circuit to activate the twitch reflex in the knee of a cockroach.

A cockroach (A) with an attached artificial mechanosensory nerve was used in this experiment. The artificial afferent nerve (B) was connected to the biological motor (movement) nerves of a detached insect leg (B, lower right) to demonstrate a hybrid reflex arc (such as a knee reflex). Applied pressure caused a reflex movement of the leg. A force gauge (C) was used to measure the force of the reflex movements of the detached insect leg. (credit: Yeongin Kim (Stanford University), Alex Chortos (Stanford University), Wentao Xu (Seoul National University), Zhenan Bao (Stanford University), Tae-Woo Lee (Seoul National University))

The researchers did another experiment that showed how the artificial nerve system could be used to identify letters in the Braille alphabet.

Improving robot and human sensory abilities

The researchers “used a knee reflex as an example of how more-advanced artificial nerve circuits might one day be part of an artificial skin that would give prosthetic devices or robots both senses and reflexes,” noted Chiara Bartolozzi, Ph.D., of Istituto Italiano di Tecnologia, writing in a Science commentary on the research.

Tactile information from artificial tactile systems “can improve the interaction of a robot with objects,” says Bartolozzi, who is involved in research with the iCub robot.

“In this scenario, objects can be better recognized because touch complements the information gathered from vision about the shape of occluded or badly illuminated regions of the object, such as its texture or hardness. Tactile information also allows objects to be better manipulated — for example, by exploiting contact and slip detection to maintain a stable but gentle grasp of fragile or soft objects (see the photo). …

“Information about shape, softness, slip, and contact forces also greatly improves the usability of upper-limb prosthetics in fine manipulation. … The advantage of the technology devised by Kim et al. is the possibility of covering at a reasonable cost larger surfaces, such as fingers, palms, and the rest of the prosthetic device.

“Safety is enhanced when sensing contacts inform the wearer that the limb is encountering obstacles. The acceptability of the artificial hand by the wearer is also improved because the limb is perceived as part of the body, rather than as an external device. Lower-limb prostheses can take advantage of the same technology, which can also provide feedback about the distribution of the forces at the foot while walking.”

Next research steps

Next, the researchers plan to create artificial skin coverings for prosthetic devices, which will require new devices to detect heat and other sensations, the ability to embed them into flexible circuits, and then a way to interface all of this with the brain. They also hope to create low-power artificial sensor nets to cover robots. The idea is to make robots more agile by providing some of the same feedback that humans derive from their skin.

“We take skin for granted but it’s a complex sensing, signaling and decision-making system,” said Zhenan Bao, Ph.D., a Stanford professor of chemical engineering and one of the senior authors. “This artificial sensory nerve system is a step toward making skin-like sensory neural networks for all sorts of applications.”

This milestone is part of Bao’s quest to mimic how skin can stretch, repair itself, and, most remarkably, act like a smart sensory network that knows not only how to transmit pleasant sensations to the brain, but also when to order the muscles to react reflexively to make prompt decisions.

The synaptic transistor is the brainchild of Tae-Woo Lee of Seoul National University, who spent his sabbatical year in Bao’s Stanford lab to initiate the collaborative work.

Reference: Science May 31. Source: Stanford University and Seoul National University.

* This work was funded by the Ministry of Science and ICT, Korea; by Seoul National University (SNU); by Samsung Electronics; by the National Nanotechnology Coordinated Infrastructure; and by the Stanford Nano Shared Facilities (SNSF). Patents related to this work are planned.
