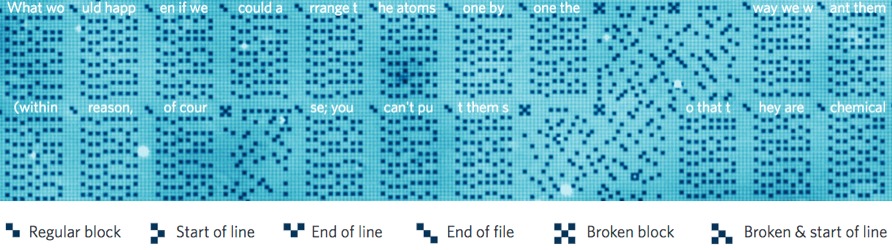

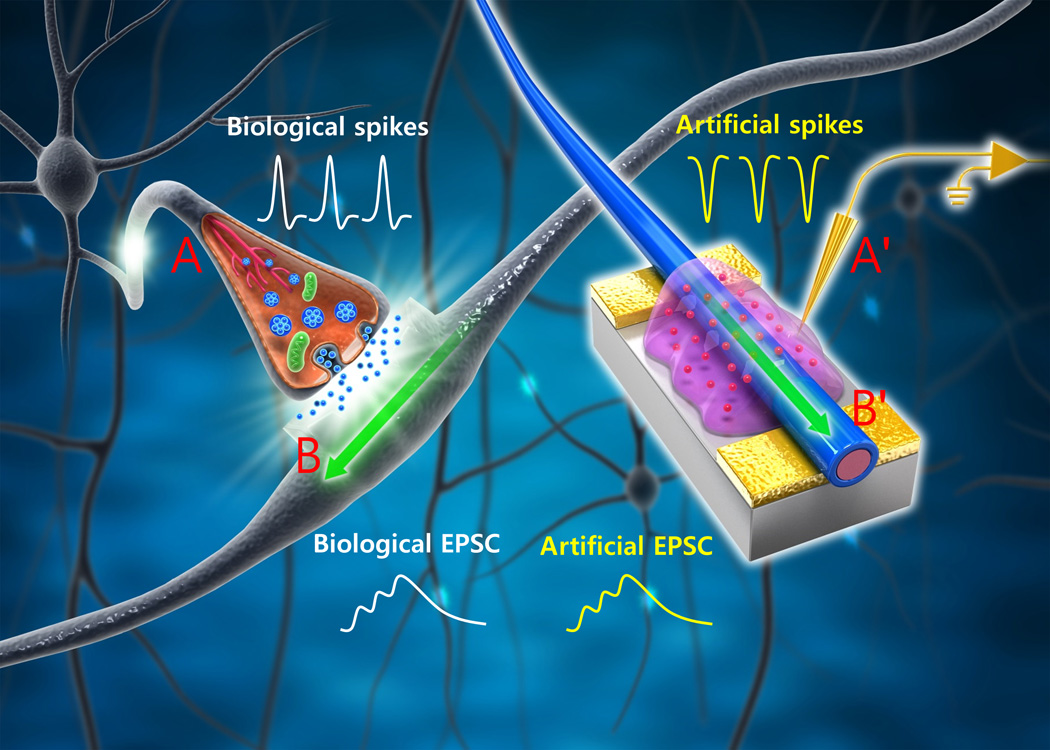

Crash-avoidance technologies now available in non-luxury vehicles include Lane Departure Warning (LDW), Forward Collision Warning (FCW), and Blind Spot Monitoring (BSM). (credit: Corey D. Harper et al./Accident Analysis and Prevention)

U.S. National Highway Traffic Safety Administration chief Mark Rosekind said at a conference today (July 20) that the government “will not abandon efforts to speed the development of self-driving cars … to reduce the 94 percent of car crashes attributed to human error, despite a fatal accident involving a Tesla Model S operating on an autopilot system,” Reuters reports. But autonomous vehicles must be “much safer” than human drivers before they are deployed on U.S. roads, he added.

However, in a study published in the journal Accident Analysis and Prevention, Carnegie Mellon College of Engineering researchers conclude that partially automated crash-avoidance technologies already on the market are a practical interim solution.

These technologies, which include forward collision warning and avoidance, lane departure warning, blind spot monitoring, and partially autonomous braking or controls, are already available in non-luxury vehicles such as the Honda Accord and Mazda CX-9. If they were deployed in all light-duty vehicles, they could prevent or reduce the severity of up to 1.3 million crashes a year, including 10,100 fatal crashes, according to the study.

“While there is much discussion about driverless vehicles, we have demonstrated that even with partial automation, there are financial and safety benefits,” says Chris T. Hendrickson, director of the Carnegie Mellon Traffic21 Institute.

When the team compared the cost of equipping cars with these safety technologies to the expected annual reduction in crash costs (based on government and insurance-industry data), they found a net economic benefit, in addition to lives saved, in two scenarios:

- In the perfect-world scenario, in which all relevant crashes are avoided with these technologies, there is an annual net benefit of about $202 billion, or $861 per vehicle.

- In the more conservative scenario, which counts only the crash reductions actually observed in vehicles equipped with blind spot monitoring, lane departure warning, and forward collision warning systems, there is still an annual net benefit of about $4 billion, or $20 per vehicle (and falling prices could increase the net benefit over time).
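The net-benefit figures above come from simple subtraction: expected annual crash-cost savings minus the annual cost of equipping the fleet. The sketch below redoes that step with the rounded lower-bound numbers quoted in the study's abstract (about $18 billion in benefits and about $13 billion in costs). Note that subtracting the rounded values gives $5 billion rather than the reported "about $4 billion"; the unrounded inputs are not given here. The fleet size used for the per-vehicle step is a hypothetical round number chosen for illustration, not a figure from the study.

```python
# Lower-bound scenario, rounded figures from the study's abstract
# (billions of US dollars per year).
ANNUAL_BENEFIT_BN = 18.0  # "about $18 billion" in avoided crash costs
ANNUAL_COST_BN = 13.0     # "about $13 billion" to equip all light-duty vehicles (2015 prices)

# HYPOTHETICAL fleet size for illustration only; NOT taken from the study.
HYPOTHETICAL_FLEET = 200_000_000


def net_benefit(benefit_bn: float, cost_bn: float) -> float:
    """Annual net benefit: expected crash-cost reduction minus equipment cost."""
    return benefit_bn - cost_bn


def per_vehicle(net_bn: float, fleet_size: int) -> float:
    """Spread an annual net benefit (in billions of dollars) evenly across a fleet."""
    return net_bn * 1e9 / fleet_size


# Rounded inputs give $5 billion; the study reports "about $4 billion"
# because its underlying (unrounded) figures differ slightly.
net = net_benefit(ANNUAL_BENEFIT_BN, ANNUAL_COST_BN)
print(f"net benefit from rounded inputs: ${net:.0f} billion")

# Using the study's reported ~$4 billion and the hypothetical fleet size:
print(f"per-vehicle net benefit: ${per_vehicle(4.0, HYPOTHETICAL_FLEET):.0f}")
```

The same two functions apply to the upper-bound scenario; only the inputs change.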

Carnegie Mellon’s Technologies for Safe and Efficient Transportation (T-SET) University Transportation Center, the National Science Foundation, and the Hillman Foundation funded the project.

Abstract of Cost and benefit estimates of partially-automated vehicle collision avoidance technologies

Many light-duty vehicle crashes occur due to human error and distracted driving. Partially-automated crash avoidance features offer the potential to reduce the frequency and severity of vehicle crashes that occur due to distracted driving and/or human error by assisting in maintaining control of the vehicle or issuing alerts if a potentially dangerous situation is detected. This paper evaluates the benefits and costs of fleet-wide deployment of blind spot monitoring, lane departure warning, and forward collision warning crash avoidance systems within the US light-duty vehicle fleet. The three crash avoidance technologies could collectively prevent or reduce the severity of as many as 1.3 million U.S. crashes a year including 133,000 injury crashes and 10,100 fatal crashes. For this paper we made two estimates of potential benefits in the United States: (1) the upper bound fleet-wide technology diffusion benefits by assuming all relevant crashes are avoided and (2) the lower bound fleet-wide benefits of the three technologies based on observed insurance data. The latter represents a lower bound because the technologies will improve over time and their costs will fall with economies of scale and technical advances. All three technologies could collectively provide a lower bound annual benefit of about $18 billion if equipped on all light-duty vehicles. With 2015 pricing of safety options, the total annual costs to equip all light-duty vehicles with the three technologies would be about $13 billion, resulting in an annual net benefit of about $4 billion or a $20 per vehicle net benefit. By assuming all relevant crashes are avoided, the total upper bound annual net benefit from all three technologies combined is about $202 billion or an $861 per vehicle net benefit, at current technology costs.
The technologies we are exploring in this paper represent an early form of vehicle automation and a positive net benefit suggests the fleet-wide adoption of these technologies would be beneficial from an economic and social perspective.