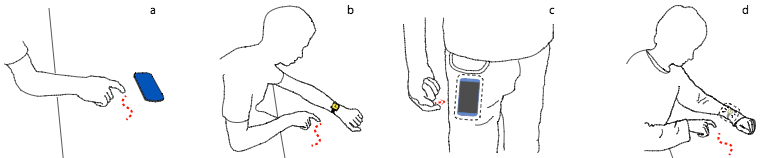

A 22W laser used for adaptive optics on the Very Large Telescope in Chile. A suite of similar lasers could be used to cloak our planet’s transit around the Sun. (credit: ESO/G. Hüdepohl)

Two astronomers at Columbia University suggest, in an open-access paper in Monthly Notices of the Royal Astronomical Society, that we could use lasers to hide the Earth from an advanced extraterrestrial civilization. Powerful laser beams aimed at a specific star where aliens might live would mask our planet as it transits the Sun, as seen from that star.

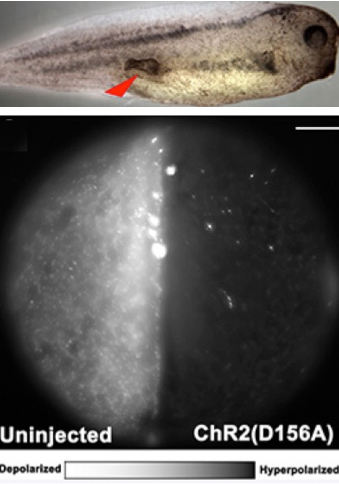

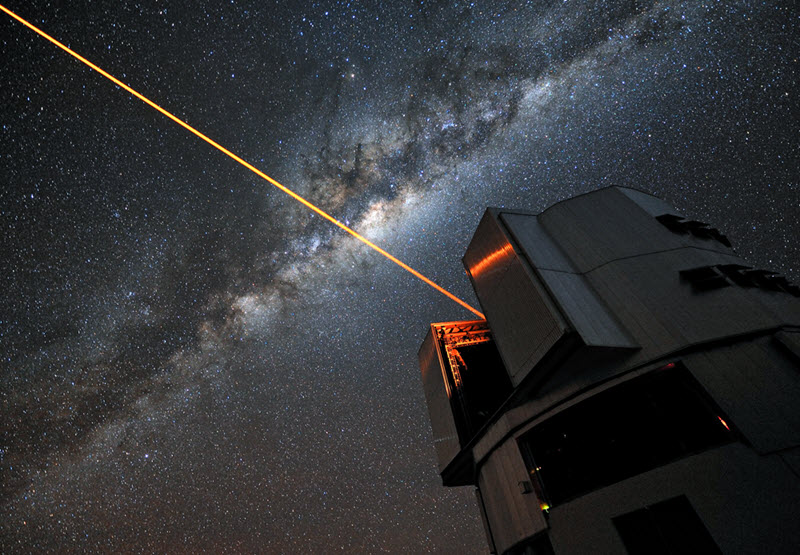

The idea comes from the method NASA's Kepler mission uses to search for exoplanets (planets around other stars): it looks for transits (a planet crossing in front of its star), each identified by a tiny decrease in the star's brightness.*

To detect exoplanets, NASA’s Kepler measures the light from a star. When a planet crosses in front of a star, the event is called a transit. The planet is usually too small to see, but it can produce a small change in a star’s brightness of about 1/10,000 (100 parts per million), lasting for 2 to 16 hours. (credit: NASA Ames)
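The size of that dip follows from simple geometry: while the planet is fully in front of the star, the fractional drop in brightness is roughly the ratio of the two disk areas, (R_p/R_s)^2. A minimal Python sketch, assuming a uniformly bright stellar disk (real stars are limb-darkened) and using standard radii that are not given in the article:

```python
# Geometric transit depth: the fraction of the stellar disk hidden by the planet.
# Assumes a uniformly bright star (no limb darkening) and a full, central transit.

R_EARTH_M = 6.371e6  # mean Earth radius, metres (standard value)
R_SUN_M = 6.957e8    # nominal solar radius, metres (standard value)


def transit_depth(planet_radius_m: float, star_radius_m: float) -> float:
    """Return the fractional drop in brightness, (Rp / Rs) ** 2."""
    return (planet_radius_m / star_radius_m) ** 2


if __name__ == "__main__":
    depth = transit_depth(R_EARTH_M, R_SUN_M)
    print(f"Earth-Sun transit depth: {depth:.2e} ({depth * 1e6:.0f} ppm)")
```

For the Earth and Sun this gives roughly 84 ppm, the same order as the ~100 ppm quoted in the caption.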

Kepler has confirmed more than 1,000 planets using this technique, tens of them similar in size to the Earth. Kipping and Teachey speculate that alien scientists could use the same approach to find Earth, which lies in the Sun's “habitable zone” (the range of distances where the temperature is right for liquid water, making it a promising place for life) and so might well attract their interest.*

How to cloak our Earth from aliens

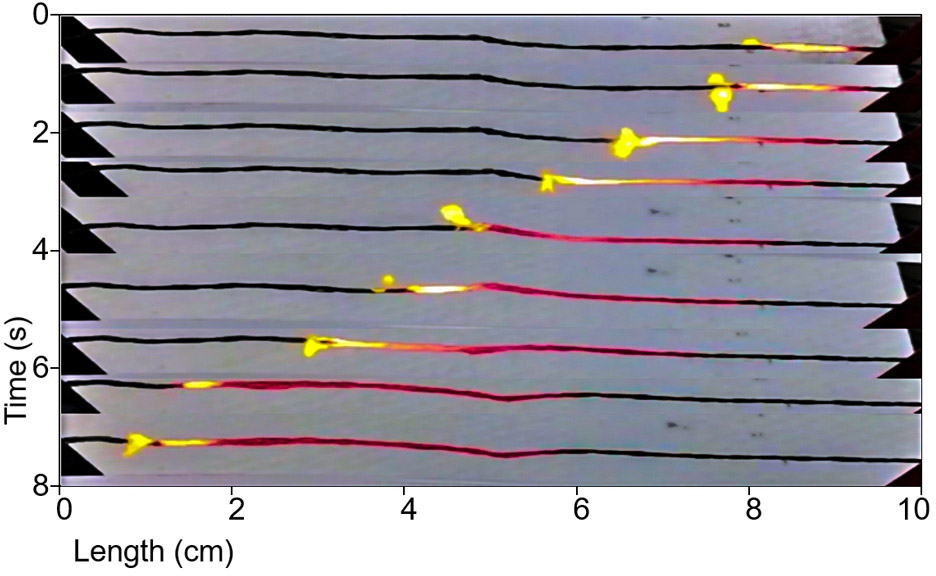

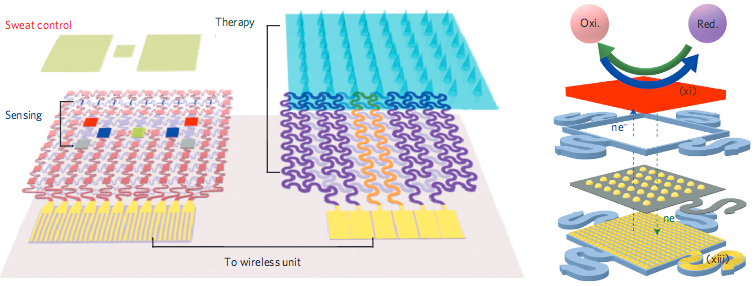

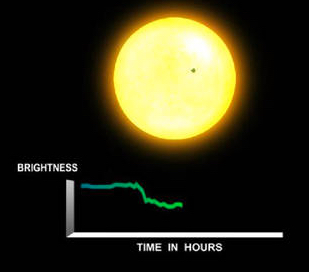

Columbia Professor David Kipping and graduate student Alex Teachey suggest that transits could be masked by controlled laser emission, with the beam directed at the star where the suspected aliens might be located. When the planet’s transit takes place, the laser would be switched on to compensate for the dip in light.**

Illustration (not to scale) of the transit cloaking device. To cloak the Earth, a laser beam (orange) is fired from the night side of the Earth (blue circle) toward a target star (“receiver”) during the transit. (credit: David M. Kipping and Alex Teachey/MNRAS)

According to the authors, a continuous 30 MW laser emitting for about 10 hours once a year would be enough to eliminate the transit signal, at least in visible light. The energy needed is comparable to that collected by the International Space Station's solar arrays in a year. A chromatic (multi-wavelength) cloak, effective at all solar wavelengths, is more challenging and would need a large array of tunable lasers with a total power of 250 MW.***
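A rough consistency check shows why megawatts can be enough. Seen from a distant star, the missing light equals the transit depth times the Sun's flux, depth × L☉/(4πd²); a laser of power P confined to a beam of solid angle Ω delivers P/(Ωd²) there, so the distance cancels and P = depth × L☉ × Ω/(4π). This is not the authors' calculation: it is a sketch assuming a single-wavelength, diffraction-limited beam modelled as a uniform cone of half-angle 1.22λ/D, and the 600 nm wavelength and 10 m aperture are hypothetical choices.

```python
import math

# Standard constants (not taken from the article).
L_SUN_W = 3.828e26  # nominal solar luminosity, watts
R_EARTH_M = 6.371e6
R_SUN_M = 6.957e8


def beam_solid_angle_sr(wavelength_m: float, aperture_m: float) -> float:
    """Solid angle of a diffraction-limited beam, modelled as a uniform cone
    with half-angle 1.22 * lambda / D (small-angle approximation)."""
    half_angle = 1.22 * wavelength_m / aperture_m
    return math.pi * half_angle ** 2


def cloak_power_w(depth: float, solid_angle_sr: float,
                  luminosity_w: float = L_SUN_W) -> float:
    """Laser power whose received flux equals the starlight blocked in transit.

    Missing flux at distance d: depth * L / (4 pi d^2).
    Laser flux at distance d:   P / (Omega d^2).
    Equating them, d cancels:   P = depth * L * Omega / (4 pi).
    """
    return depth * luminosity_w * solid_angle_sr / (4 * math.pi)


def energy_mwh(power_w: float, hours: float) -> float:
    """Energy in megawatt-hours for a constant power sustained for `hours`."""
    return power_w * hours / 1e6


if __name__ == "__main__":
    depth = (R_EARTH_M / R_SUN_M) ** 2
    omega = beam_solid_angle_sr(600e-9, 10.0)  # hypothetical 600 nm laser, 10 m aperture
    print(f"Required laser power: {cloak_power_w(depth, omega) / 1e6:.0f} MW")
    print(f"30 MW for 10 h per year: {energy_mwh(30e6, 10):.0f} MWh")
```

Under these assumptions the result is about 43 MW, the same order as the authors' ~30 MW figure, and 30 MW sustained for 10 hours amounts to 300 MWh (about 1.1 × 10^12 J) per year. A larger aperture narrows the beam and lowers the power needed, at the cost of a smaller footprint at the target.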

“Alternatively, we could cloak only the atmospheric signatures associated with biological activity, such as oxygen, which is achievable with a peak laser power of just 160 kW per transit. To another civilization, this should make the Earth appear as if life never took hold on our world”, said Teachey.

Cool Worlds Lab/Columbia University | A Cloaking Device for Planets

Broadcasting our existence: the METI (message SETI) approach

The lasers could also be used to broadcast our existence by altering the Sun's light during a transit to make it obviously artificial, for example by distorting the normal U-shaped transit light curve (the plot of brightness vs. time during the transit). The authors suggest that we could even transmit information by modulating the laser beams at the same time, providing a way to send messages to aliens.

However, several prominent scientists, including Stephen Hawking, have cautioned against humanity broadcasting our presence to intelligent life on other planets. Hawking and others are concerned that extraterrestrials might wish to take advantage of the Earth’s resources, and that their visit, rather than being benign, could be as devastating as when Europeans first traveled to the Americas. (See Are you ready for contact with extraterrestrial intelligence? and METI: should we be shouting at the cosmos?)

Perhaps aliens have had the same thought. The two astronomers propose that the Search for Extraterrestrial Intelligence (SETI), which currently looks mainly for alien radio signals, could be broadened to search for artificial star transits. Such signatures could also be readily searched for in NASA's archival data from Kepler transit surveys.

* Once detected, the planet’s orbital size can be calculated from the period (how long it takes the planet to orbit once around the star) and the mass of the star using Kepler’s Third Law of planetary motion. The size of the planet is found from the depth of the transit (how much the brightness of the star drops) and the size of the star. From the orbital size and the temperature of the star, the planet’s characteristic temperature can be calculated. From this, the question of whether or not the planet is habitable (not necessarily inhabited) can be answered. — Kepler and K2, NASA Mission Overview
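The chain of inferences in this footnote can be sketched in a few lines of Python. The sketch assumes a circular orbit, a planet mass negligible next to the star's, a uniformly bright stellar disk, and a blackbody planet that spreads absorbed heat over its whole surface; the constants are standard values, and the 0.3 albedo in the example is an illustrative figure for Earth, none of them taken from the article.

```python
import math

# Standard constants (not taken from the article).
G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
M_SUN_KG = 1.989e30
R_SUN_M = 6.957e8
T_SUN_K = 5772.0    # solar effective temperature, kelvin
DAY_S = 86400.0


def orbital_radius_m(period_s: float, star_mass_kg: float) -> float:
    """Kepler's third law with the planet's mass neglected: a^3 = G M P^2 / (4 pi^2)."""
    return (G * star_mass_kg * period_s ** 2 / (4 * math.pi ** 2)) ** (1 / 3)


def planet_radius_m(depth: float, star_radius_m: float) -> float:
    """Invert depth = (Rp / Rs)^2 for a uniformly bright stellar disk."""
    return star_radius_m * math.sqrt(depth)


def equilibrium_temp_k(star_temp_k: float, star_radius_m: float,
                       orbit_m: float, albedo: float = 0.0) -> float:
    """Blackbody equilibrium temperature with heat spread over the whole planet:
    T_eq = T_star * sqrt(R_star / (2 a)) * (1 - albedo)^(1/4)."""
    return star_temp_k * math.sqrt(star_radius_m / (2 * orbit_m)) * (1 - albedo) ** 0.25


if __name__ == "__main__":
    a = orbital_radius_m(365.25 * DAY_S, M_SUN_KG)  # one-year orbit, Sun-like star
    rp = planet_radius_m(8.4e-5, R_SUN_M)           # ~84 ppm transit depth
    t_eq = equilibrium_temp_k(T_SUN_K, R_SUN_M, a, albedo=0.3)
    print(f"a = {a:.3e} m, Rp = {rp:.3e} m, T_eq = {t_eq:.0f} K")
```

For Earth-like inputs this recovers an orbit of about 1 AU, a radius close to Earth's, and an equilibrium temperature near 255 K; the real surface is warmer because the greenhouse effect is ignored here.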

** It's not clear what indicators might lead to such a suspicion, aside from a confirmed SETI detection. It would be interesting to calculate the required number and locations of lasers, their operating schedule, and their power requirements for a worst-case scenario (assuming potential threats from certain types of stars, or from all stars). Such a calculation would need to consider the laser beam divergence angle, beam flux gradients, and the maximum distance of stars that could see the transit (only stars lying within about one degree of a planet's ecliptic plane can see it transit), based on assumed maximum alien telescope resolving power.

[UPDATE 1/3/2016: Kipping correction: "within about one degree" and added "based on assumed maximum alien telescope resolving power"]

[UPDATE 1/3/2016: from Kipping regarding beam divergence angle, flux gradients, and the primary focus of the paper: “Beam shaping, through the use of multiple beams, can produce effectively isotropic radiation within the beam width. Unless the target is very close, the beam width typically encompasses the entire alien solar system by the time it reaches, due to beam divergence. So we don’t even really need to know the position of the target planet that well (although we likely do anyway thanks to our detection methods). A common misunderstanding of our paper is to erroneously assume that we are advocating that humanity should build this for the Earth, but actually we are pointing out that if even our current technology can pull off a pretty effective cloak then other more advanced civilizations may be able to hide from us perfectly.”]
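Kipping's point about divergence can be illustrated with the diffraction limit, under which a beam spreads with a full angle of roughly 2 × 1.22λ/D. The sketch below assumes an ideal diffraction-limited beam with no atmospheric or pointing losses; the 600 nm wavelength, the apertures, and the 100 light-year target distance are hypothetical values, not figures from the paper.

```python
# Far-field diameter of a diffraction-limited laser beam.
# Assumptions: full divergence angle 2 * 1.22 * lambda / D, distance far
# beyond the emitting aperture, no atmospheric or pointing losses.

AU_M = 1.495978707e11     # astronomical unit, metres
LIGHT_YEAR_M = 9.4607e15  # light-year, metres


def beam_diameter_m(distance_m: float, wavelength_m: float, aperture_m: float) -> float:
    """Approximate beam diameter after travelling `distance_m`."""
    return 2 * 1.22 * wavelength_m / aperture_m * distance_m


if __name__ == "__main__":
    d = 100 * LIGHT_YEAR_M  # hypothetical target 100 light-years away
    for aperture in (0.1, 1.0, 10.0):
        width_au = beam_diameter_m(d, 600e-9, aperture) / AU_M
        print(f"D = {aperture:5.1f} m -> beam about {width_au:.1f} AU across")
```

At 100 light-years, a 1 m aperture gives a beam roughly 9 AU across and a 0.1 m aperture roughly 90 AU, wide enough to cover a whole planetary system, while a 10 m aperture narrows it to about 1 AU. Narrower beams need less power for the same received flux but require better knowledge of where the target planet is.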

*** For example, a chromatic cloak effective against the NIRSpec instrument planned for the James Webb Space Telescope, covering 0.6 to 5 µm, would require approximately 6000 monochromatic lasers in the array.
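The footnote does not say how the figure of 6000 was derived. One plausible reading, offered only as an assumption, is one laser per spectral resolution element at constant resolving power R = λ/Δλ, which gives N = R ln(λ_max/λ_min). The sketch takes R ≈ 2700, NIRSpec's high-resolution mode; the function name is hypothetical.

```python
import math


def lasers_needed(lambda_min_um: float, lambda_max_um: float, resolving_power: float) -> int:
    """Count spectral resolution elements across a band at constant
    resolving power R = lambda / delta_lambda, assuming one laser per element.

    Integrating d(lambda) / (lambda / R) from lambda_min to lambda_max gives
    N = R * ln(lambda_max / lambda_min).
    """
    return math.ceil(resolving_power * math.log(lambda_max_um / lambda_min_um))


if __name__ == "__main__":
    # Hypothetical: NIRSpec high-resolution mode, R ~ 2700, over 0.6-5 um.
    print(lasers_needed(0.6, 5.0, 2700))
```

This yields roughly 5700 lasers, consistent with the footnote's "approximately 6000"; the spacing the authors actually assumed may differ.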

Abstract of A Cloaking Device for Transiting Planets

The transit method is presently the most successful planet discovery and characterization tool at our disposal. Other advanced civilizations would surely be aware of this technique and appreciate that their home planet’s existence and habitability is essentially broadcast to all stars lying along their ecliptic plane. We suggest that advanced civilizations could cloak their presence, or deliberately broadcast it, through controlled laser emission. Such emission could distort the apparent shape of their transit light curves with relatively little energy, due to the collimated beam and relatively infrequent nature of transits. We estimate that humanity could cloak the Earth from Kepler-like broadband surveys using an optical monochromatic laser array emitting a peak power of ∼30 MW for ∼10 hours per year. A chromatic cloak, effective at all wavelengths, is more challenging requiring a large array of tunable lasers with a total power of ∼250 MW. Alternatively, a civilization could cloak only the atmospheric signatures associated with biological activity on their world, such as oxygen, which is achievable with a peak laser power of just ∼160 kW per transit. Finally, we suggest that the time of transit for optical SETI is analogous to the water-hole in radio SETI, providing a clear window in which observers may expect to communicate. Accordingly, we propose that a civilization may deliberately broadcast their technological capabilities by distorting their transit to an artificial shape, which serves as both a SETI beacon and a medium for data transmission. Such signatures could be readily searched in the archival data of transit surveys.