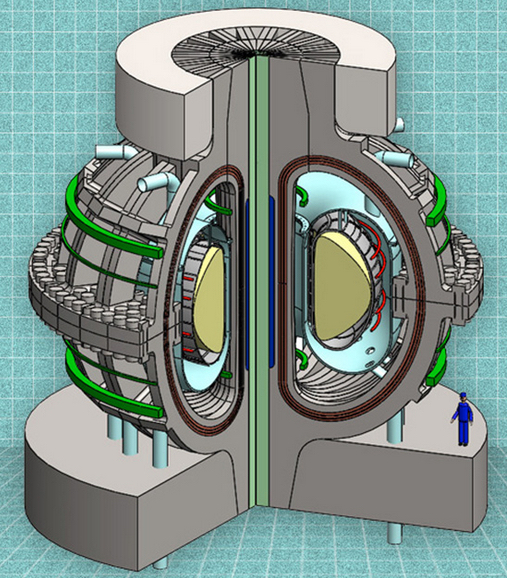

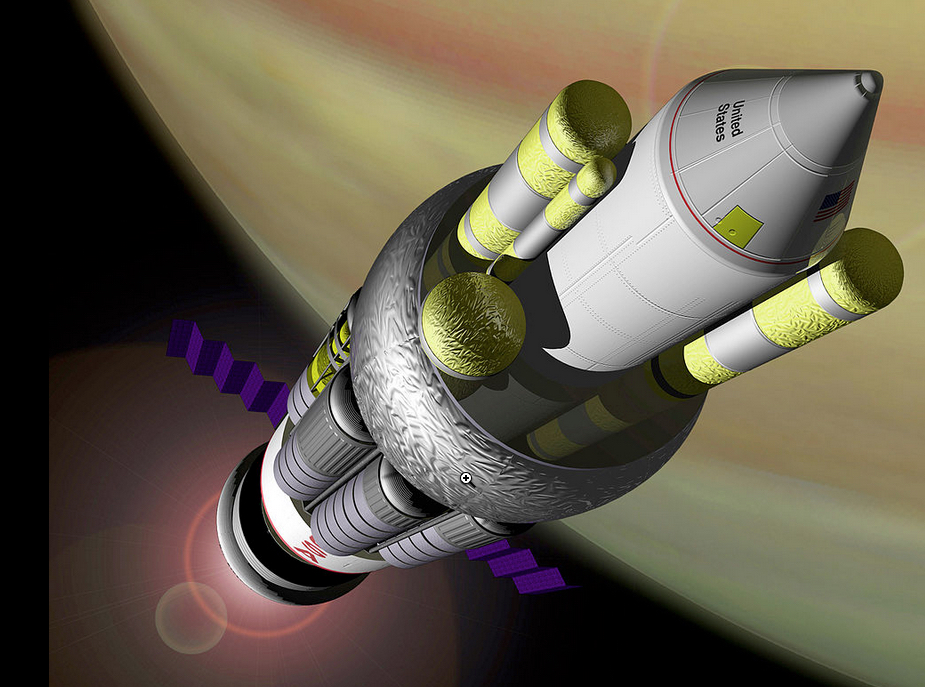

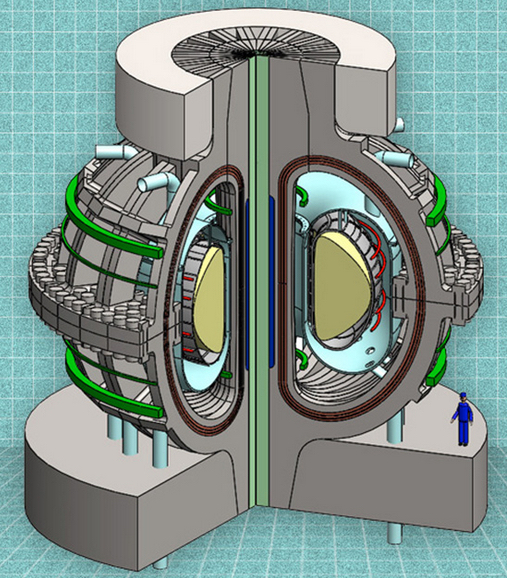

A cutaway view of the proposed ARC reactor (credit: MIT ARC team)

MIT plans to create a new compact version of a tokamak fusion reactor with the goal of producing practical fusion power, which could offer a nearly inexhaustible energy resource in as little as a decade.

Fusion, the nuclear reaction that powers the sun, involves fusing hydrogen nuclei to form helium, releasing enormous amounts of energy.

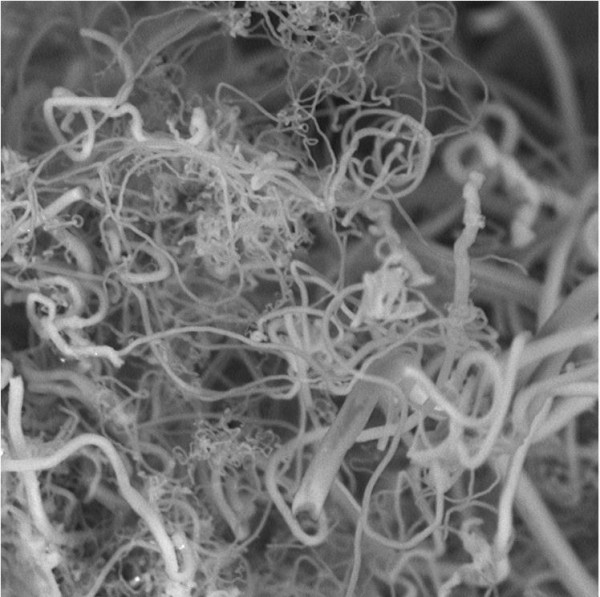

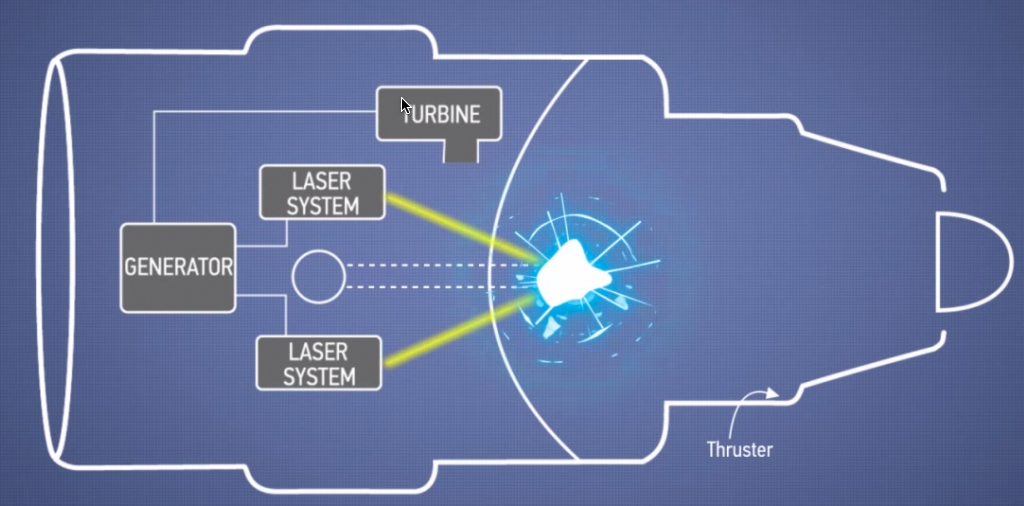

The new fusion reactor, called ARC, would take advantage of new, commercially available superconductors — rare-earth barium copper oxide (REBCO) superconducting tapes (the dark brown areas in the illustration above) — to produce stronger magnetic field coils, according to Dennis Whyte, a professor of Nuclear Science and Engineering and director of MIT’s Plasma Science and Fusion Center.

The stronger magnetic field makes it possible to produce the required magnetic confinement of the superhot plasma — that is, the working material of a fusion reaction — but in a much smaller device than those previously envisioned. The reduction in size, in turn, makes the whole system less expensive and faster to build, and also allows for some ingenious new features in the power plant design.

The proposed reactor is described in a paper in the journal Fusion Engineering and Design, co-authored by Whyte, PhD candidate Brandon Sorbom, and 11 others at MIT.

Power plant prototype

The new reactor is designed for basic research on fusion and also as a potential prototype power plant that could produce 270 MW of electrical power. The basic reactor concept and its associated elements are based on well-tested and proven principles developed over decades of research at MIT and around the world, the team says. For example, an experimental tokamak was built at Princeton Plasma Physics Laboratory circa 1980.

The hard part has been confining the superhot plasma — an electrically charged gas — while heating it to temperatures hotter than the cores of stars. This is where the magnetic fields are so important — they effectively trap the heat and particles in the hot center of the device.

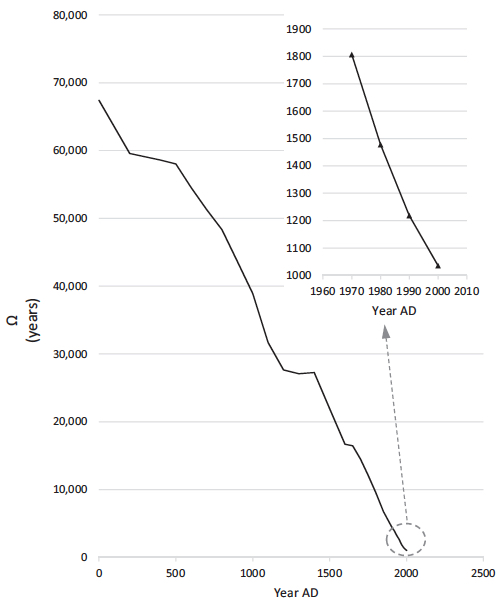

Most characteristics of a system tend to vary in proportion to changes in its dimensions, but the effect of the magnetic field on fusion reactions is far more extreme: the achievable fusion power increases with the fourth power of the magnetic field. Doubling the field, for example, would produce a 16-fold increase in fusion power.

Tenfold boost in power

The new superconductors are strong enough to increase fusion power by about a factor of 10 compared to standard superconducting technology, Sorbom says. This dramatic improvement leads to a cascade of potential improvements in reactor design.
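The fourth-power scaling and the roughly tenfold figure can be related with a short calculation. The sketch below is illustrative only: the function names are hypothetical, and it assumes everything other than the magnetic field (size, plasma shape, operating regime) is held fixed.

```python
def fusion_power_gain(field_ratio: float) -> float:
    """Relative fusion power for a relative increase in magnetic field.

    Assumes fusion power is proportional to B**4 with all else held fixed.
    """
    return field_ratio ** 4


def field_ratio_for_gain(power_gain: float) -> float:
    """Inverse relation: field increase needed for a given power gain."""
    return power_gain ** 0.25


if __name__ == "__main__":
    # Doubling the field gives 2**4 = 16 times the fusion power.
    print(f"2x field  -> {fusion_power_gain(2.0):.0f}x power")
    # A tenfold power gain needs a field about 1.78 times stronger.
    print(f"10x power -> {field_ratio_for_gain(10.0):.2f}x field")
```

Under this assumption, a factor-of-10 gain in fusion power corresponds to a field that is a little under twice as strong, not ten times as strong.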

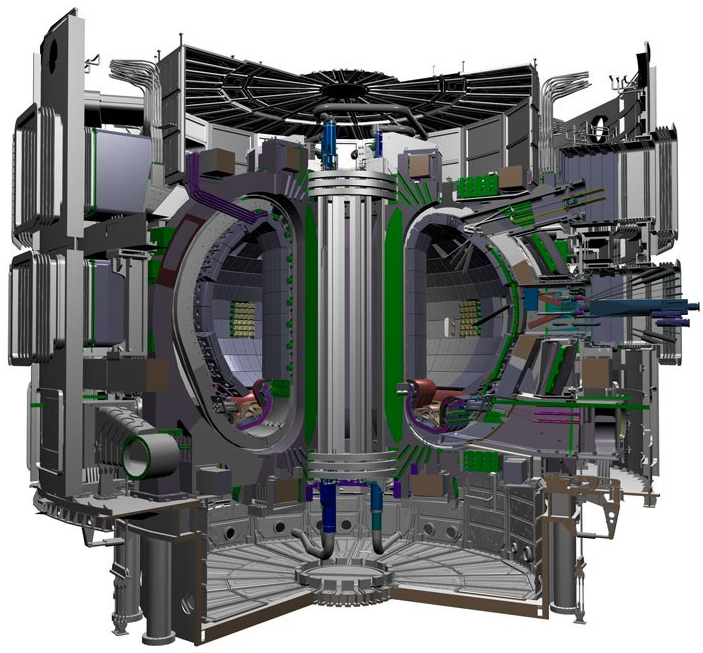

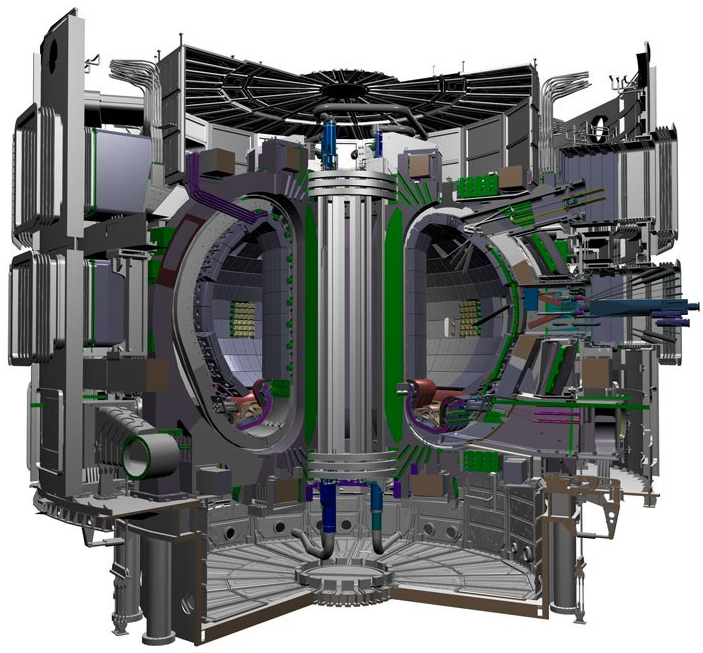

ITER, the world’s largest tokamak, is expected to be completed in 2019, with deuterium-tritium operations in 2027 and 2000–4000 MW of fusion power onto the grid in 2040 (credit: ITER Organization)

The world’s most powerful planned fusion reactor, a huge device called ITER that is under construction in France, is expected to cost around $40 billion. Sorbom and the MIT team estimate that the new design, about half the diameter of ITER (which was designed before the new superconductors became available), would produce about the same power at a fraction of the cost, in a shorter construction time, and with the same physics.
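A rough back-of-the-envelope check shows how "half the diameter" and "about the same power" can fit together. This sketch rests on two assumptions that are not stated in the article: that fusion power at a given field is proportional to plasma volume, and that volume scales with the cube of the linear size for geometrically similar devices. The function name is hypothetical.

```python
def relative_fusion_power(field_power_gain: float, size_ratio: float) -> float:
    """Fusion power of a scaled device relative to a reference device.

    field_power_gain: power gain from the stronger field at fixed size
                      (the article quotes about 10).
    size_ratio:       linear size relative to the reference
                      (the article quotes about one half).
    Assumes power is proportional to volume, and volume to size cubed.
    """
    volume_ratio = size_ratio ** 3
    return field_power_gain * volume_ratio


if __name__ == "__main__":
    # 10 * 0.5**3 = 1.25, i.e. roughly the same power from 1/8 the volume.
    print(relative_fusion_power(field_power_gain=10.0, size_ratio=0.5))
```

Under these simplified assumptions, the eightfold loss in volume is roughly cancelled by the tenfold gain from the stronger field.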

Another key advance in the new design is a method for removing the fusion power core from the donut-shaped reactor without having to dismantle the entire device. That makes it especially well-suited for research aimed at further improving the system by using different materials or designs to fine-tune the performance.

In addition, as with ITER, the new superconducting magnets would enable the reactor to operate in a sustained way, producing a steady power output, unlike today’s experimental reactors, which can operate for only a few seconds at a time before their copper coils overheat.

Liquid protection

Another key advantage is that most of the solid blanket materials used to surround the fusion chamber in such reactors are replaced by a liquid material that can easily be circulated and replaced, eliminating the need for costly replacement procedures as the materials degrade over time.

As currently designed, the reactor should be capable of producing about three times as much electricity as is needed to keep it running, but the design could probably be improved to raise that ratio to about five or six, Sorbom says. So far, no fusion reactor has produced as much energy as it consumes, so this kind of net energy production would be a major breakthrough in fusion technology, the team says.
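To see what these ratios mean for usable output, the sketch below converts a ratio of electricity produced to electricity consumed into the share of output left over for the grid. It is a simplified illustration with a hypothetical function name, and it assumes the plant's own consumption is the only draw on its output.

```python
def net_output_fraction(electric_gain: float) -> float:
    """Share of gross electricity left after powering the plant itself.

    electric_gain is electricity produced divided by electricity needed
    to keep the reactor running (3 as designed, 5 to 6 if improved).
    """
    if electric_gain <= 0:
        raise ValueError("electric_gain must be positive")
    return 1.0 - 1.0 / electric_gain


if __name__ == "__main__":
    for gain in (3.0, 5.0, 6.0):
        print(f"gain {gain:.0f}: {net_output_fraction(gain):.0%} of output is net")
```

At a ratio of 3, about two thirds of the electricity generated would be available for export. At 5 or 6, that share rises to roughly 80 percent or more.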

The design could produce a reactor that would provide electricity to about 100,000 people, the team says. Devices of similar complexity and size have been built within about five years, they add.

“Fusion energy is certain to be the most important source of electricity on earth in the 22nd century, but we need it much sooner than that to avoid catastrophic global warming,” says David Kingham, CEO of Tokamak Energy Ltd. in the UK, who was not connected with this research. “This paper shows a good way to make quicker progress,” he says.

The MIT research, Kingham says, “shows that going to higher magnetic fields, an MIT specialty, can lead to much smaller (and hence cheaper and quicker-to-build) devices.” The work is of “exceptional quality,” he says; “the next step … would be to refine the design and work out more of the engineering details, but already the work should be catching the attention of policy makers, philanthropists and private investors.”

The research was supported by the U.S. Department of Energy and the National Science Foundation.

Abstract of ARC: A compact, high-field, fusion nuclear science facility and demonstration power plant with demountable magnets

The affordable, robust, compact (ARC) reactor is the product of a conceptual design study aimed at reducing the size, cost, and complexity of a combined fusion nuclear science facility (FNSF) and demonstration fusion Pilot power plant. ARC is a ∼200–250 MWe tokamak reactor with a major radius of 3.3 m, a minor radius of 1.1 m, and an on-axis magnetic field of 9.2 T. ARC has rare earth barium copper oxide (REBCO) superconducting toroidal field coils, which have joints to enable disassembly. This allows the vacuum vessel to be replaced quickly, mitigating first wall survivability concerns, and permits a single device to test many vacuum vessel designs and divertor materials. The design point has a plasma fusion gain of Qp ≈ 13.6, yet is fully non-inductive, with a modest bootstrap fraction of only ∼63%. Thus ARC offers a high power gain with relatively large external control of the current profile. This highly attractive combination is enabled by the ∼23 T peak field on coil achievable with newly available REBCO superconductor technology. External current drive is provided by two innovative inboard RF launchers using 25 MW of lower hybrid and 13.6 MW of ion cyclotron fast wave power. The resulting efficient current drive provides a robust, steady state core plasma far from disruptive limits. ARC uses an all-liquid blanket, consisting of low pressure, slowly flowing fluorine lithium beryllium (FLiBe) molten salt. The liquid blanket is low-risk technology and provides effective neutron moderation and shielding, excellent heat removal, and a tritium breeding ratio ≥ 1.1. The large temperature range over which FLiBe is liquid permits an output blanket temperature of 900 K, single phase fluid cooling, and a high efficiency helium Brayton cycle, which allows for net electricity generation when operating ARC as a Pilot power plant.
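A few derived quantities follow directly from the figures quoted in the abstract. The sketch below is illustrative, not taken from the paper: it assumes the plasma gain Qp is the ratio of fusion power to external power delivered to the plasma, and that the two RF systems listed are the only external power sources. The constant and function names are hypothetical.

```python
# Values quoted in the abstract.
MAJOR_RADIUS_M = 3.3
MINOR_RADIUS_M = 1.1
LOWER_HYBRID_MW = 25.0
ION_CYCLOTRON_MW = 13.6
PLASMA_GAIN_QP = 13.6


def aspect_ratio(major_radius_m: float, minor_radius_m: float) -> float:
    """Tokamak aspect ratio: major radius divided by minor radius."""
    return major_radius_m / minor_radius_m


def external_power_mw(*sources_mw: float) -> float:
    """Total external RF power, summed over the listed sources."""
    return sum(sources_mw)


def implied_fusion_power_mw(plasma_gain: float, external_mw: float) -> float:
    """Fusion power implied by Qp = P_fusion / P_external (assumed definition)."""
    return plasma_gain * external_mw


if __name__ == "__main__":
    ext = external_power_mw(LOWER_HYBRID_MW, ION_CYCLOTRON_MW)
    print(f"aspect ratio:   {aspect_ratio(MAJOR_RADIUS_M, MINOR_RADIUS_M):.1f}")
    print(f"external power: {ext:.1f} MW")
    print(f"fusion power:   {implied_fusion_power_mw(PLASMA_GAIN_QP, ext):.0f} MW")
```

Under these assumptions the design point corresponds to an aspect ratio of about 3 and a fusion power of roughly 525 MW.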