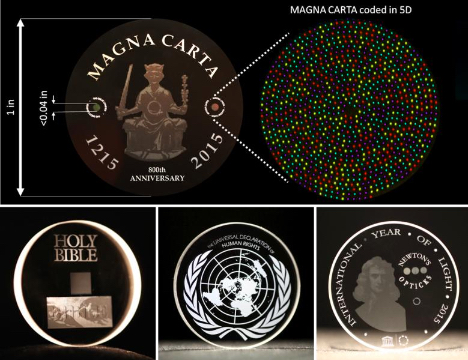

Documents captured in nanostructured glass, expected to last billions of years (credit: University of Southampton)

Scientists at the University of Southampton Optoelectronics Research Centre (ORC) have developed the first digital data storage system capable of creating archives that can survive for billions of years.

Using nanostructured glass, the system offers a capacity of 360 TB per disc, thermal stability up to 1,000°C, and a virtually unlimited lifetime at room temperature (or 13.8 billion years at 190°C).

As a “highly stable and safe form of portable memory,” the technology opens up a new era of “eternal” data archiving that could be essential for coping with the accelerating amount of information currently being created and stored, the scientists say.* The system could be especially useful for organizations with big archives, such as national archives, museums, and libraries, according to the scientists.

Superman memory crystal

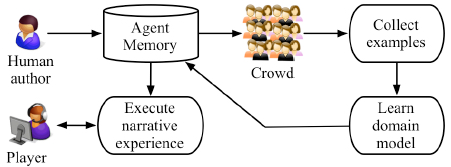

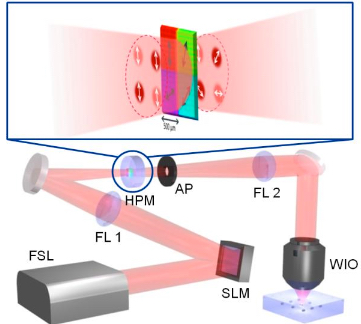

5D optical storage writing setup. FSL: femtosecond laser; SLM: spatial light modulator; FL1 and FL2: Fourier lenses; HPM: half-wave plate matrix; AP: aperture; WIO: water immersion objective. Inset: Linearly polarized light (white arrows) with different intensity levels propagates simultaneously through each half-wave plate segment with a different slow-axis orientation (black arrows). The colors of the rectangles indicate four different intensity levels. (credit: University of Southampton)

The recording system uses an ultrafast laser to produce extremely short (femtosecond) and intense pulses of light. The file is written in three to 18 layers of nanostructured dots, with the layers separated by five micrometers (a micrometer is one millionth of a meter), in fused quartz. The glass has been dubbed a “Superman memory crystal,” after the “memory crystals” used in the Superman films. The self-assembled nanostructures change the way light travels through the glass, modifying its polarization, which can then be read by a combination of an optical microscope and a polarizer similar to that found in Polaroid sunglasses.

The recording method is described as “5D” because the information encoding is in five dimensions — three-dimensional position plus size and orientation.
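To make the five dimensions concrete, here is a minimal Python sketch of how a byte stream could be mapped onto dots. It assumes, purely for illustration, four slow-axis orientations and two size (retardance) levels per dot, which gives three bits per dot. Those level counts, and the names `encode` and `decode`, are hypothetical and are not taken from the researchers' system. The three spatial dimensions are implied by the order in which the dots are written.

```python
from typing import List, Tuple

# Illustrative assumptions, not figures from the article:
N_ORIENTATIONS = 4  # distinct slow-axis orientations per dot
N_RETARDANCES = 2   # distinct size (retardance) levels per dot
BITS_PER_DOT = 3    # log2(N_ORIENTATIONS * N_RETARDANCES)

# One dot carries (orientation_index, retardance_index).
Dot = Tuple[int, int]


def encode(data: bytes) -> List[Dot]:
    """Split the bit stream into 3-bit symbols, one symbol per dot."""
    bits = "".join(f"{byte:08b}" for byte in data)
    # Pad with zeros so the length is a multiple of BITS_PER_DOT.
    bits += "0" * (-len(bits) % BITS_PER_DOT)
    dots = []
    for i in range(0, len(bits), BITS_PER_DOT):
        symbol = int(bits[i:i + BITS_PER_DOT], 2)      # 0..7
        dots.append(divmod(symbol, N_RETARDANCES))     # (orientation, retardance)
    return dots


def decode(dots: List[Dot], n_bytes: int) -> bytes:
    """Rebuild the original bytes from the per-dot symbols."""
    bits = "".join(
        f"{orientation * N_RETARDANCES + retardance:03b}"
        for orientation, retardance in dots
    )
    return bytes(int(bits[i:i + 8], 2) for i in range(0, n_bytes * 8, 8))


if __name__ == "__main__":
    message = b"UDHR"
    dots = encode(message)
    print(len(dots), "dots:", dots)
    print(decode(dots, len(message)))
```

In this sketch, a reader only needs to measure two optical properties of each dot to recover three bits; adding more distinguishable orientations or size levels would raise the number of bits per dot.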

So far, the researchers have saved major documents from human history, such as the Universal Declaration of Human Rights (UDHR), Newton’s Opticks, Magna Carta, and the King James Bible, as digital copies. A copy of the UDHR encoded in 5D data storage was recently presented to UNESCO by the ORC at the International Year of Light (IYL) closing ceremony in Mexico.

The team is now looking for industry partners to further develop and commercialize this technology.

The researchers will present their research at the photonics industry’s SPIE conference (SPIE is the International Society for Optical Engineering) in San Francisco on Wednesday, Feb. 17.

* In 2008, the International Data Corporation [found] that total capacity of data stored is increasing by around 60% each year. As a result, more than 39,000 exabytes of data will be generated by 2020. This amount of data will cause a series of problems, and one of the main ones will be power consumption. Data centers in the U.S. accounted for 1.5% of total U.S. electricity consumption in 2010. According to a report by the Natural Resources Defense Council, the power consumption of all data centers in the U.S. will reach roughly 140 billion kilowatt-hours per year by 2020. This amount of electricity is equivalent to that generated by roughly thirteen Heysham 2 nuclear power stations (one of the biggest stations in the UK, net 1240 MWe).

Most of these data centers are built on hard-disk drives (HDDs), with only a few designed around optical discs. HDDs are the most popular solution for digital data storage, according to the International Data Corporation. However, HDDs are not an energy-efficient option for data archiving; the loading energy consumption is around 0.04 W/GB. In addition, HDDs are an unsatisfactory candidate for long-term storage because of the short lifetime of the hardware, which requires transferring data every two years to avoid any loss.

— Jingyu Zhang et al. Eternal 5D data storage by ultrafast laser writing in glass. Proceedings of the SPIE OPTO 2016
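The figures in the note above can be checked with a few lines of arithmetic. The Python sketch below is illustrative only: it assumes a power station running continuously at its net output all year, decimal units (1 TB = 1,000 GB), and the function names are hypothetical. Applying the quoted 0.04 W/GB figure to a 360 TB archive is a comparison made here for illustration, not one made by the researchers.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def station_equivalents(annual_kwh: float, net_mwe: float) -> float:
    """Number of stations needed to generate annual_kwh in one year.

    Assumes each station runs continuously at net_mwe (100% capacity factor).
    """
    station_kwh_per_year = net_mwe * 1000 * HOURS_PER_YEAR  # MW -> kW, times hours
    return annual_kwh / station_kwh_per_year


def hdd_power_watts(capacity_tb: float, watts_per_gb: float = 0.04) -> float:
    """Power drawn by HDD storage of the given capacity at the quoted W/GB."""
    return capacity_tb * 1000 * watts_per_gb  # TB -> GB (decimal), times W/GB


if __name__ == "__main__":
    stations = station_equivalents(annual_kwh=140e9, net_mwe=1240)
    print(f"Heysham 2 equivalents: {stations:.1f}")

    watts = hdd_power_watts(capacity_tb=360)
    print(f"HDD power for 360 TB: {watts / 1000:.1f} kW")
```

Under these assumptions the first figure comes out at about 12.9 stations, consistent with the "roughly thirteen" quoted above, and HDDs holding 360 TB would draw about 14.4 kW.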

Abstract of Eternal 5D data storage by ultrafast laser writing in glass

Femtosecond laser writing in transparent materials has attracted considerable interest due to new science and a wide range of applications from laser surgery, 3D integrated optics and optofluidics to geometrical phase optics and ultra-stable optical data storage. A decade ago it was discovered that under certain irradiation conditions self-organized subwavelength structures with record small features of 20 nm could be created in the volume of silica glass. On the macroscopic scale the self-assembled nanostructure behaves as a uniaxial optical crystal with negative birefringence. The optical anisotropy, which results from the alignment of nano-platelets, referred to as form birefringence, is of the same order of magnitude as positive birefringence in crystalline quartz. The two independent parameters describing birefringence, the slow axis orientation (4th dimension) and the strength of retardance (5th dimension), are explored for the optical encoding of information in addition to three spatial coordinates. The slow axis orientation and the retardance are independently manipulated by the polarization and intensity of the femtosecond laser beam. The data optically encoded into five dimensions is successfully retrieved by quantitative birefringence measurements. The storage allows unprecedented parameters including hundreds of terabytes per disc data capacity and thermal stability up to 1000°C. Even at elevated temperatures of 160°C, the extrapolated decay time of nanogratings is comparable with the age of the Universe – 13.8 billion years. The demonstrated recording of the digital documents, which will survive the human race, including the eternal copies of the King James Bible and Magna Carta, is a vital step towards an eternal archive.