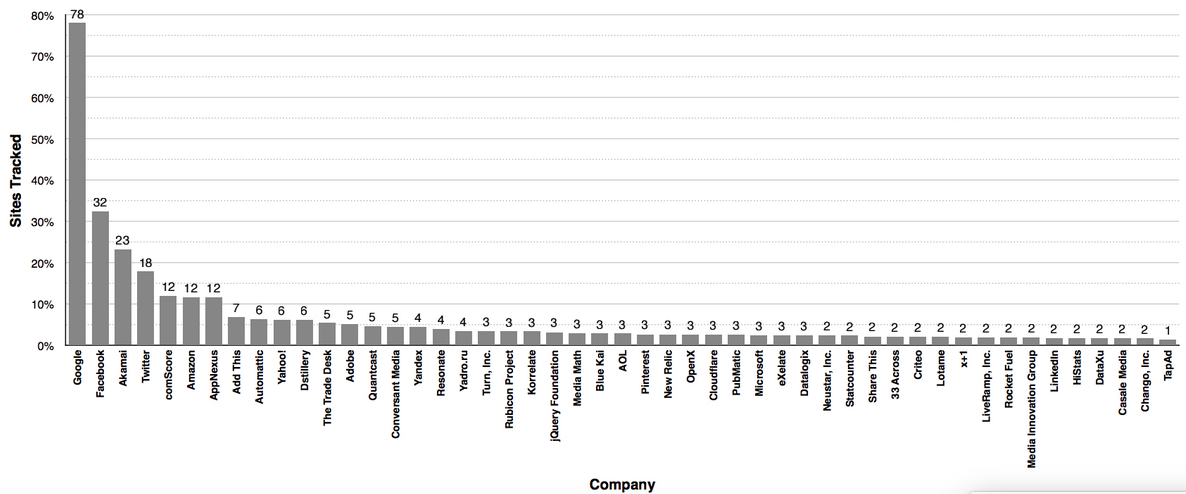

Columbia University engineering researchers have developed a new “circulator” technology that can double WiFi speed while reducing the size of wireless devices. It does this by requiring only one antenna (instead of two, for transmitter and receiver) and by using conventional CMOS chips instead of resorting to large, expensive magnetic components.

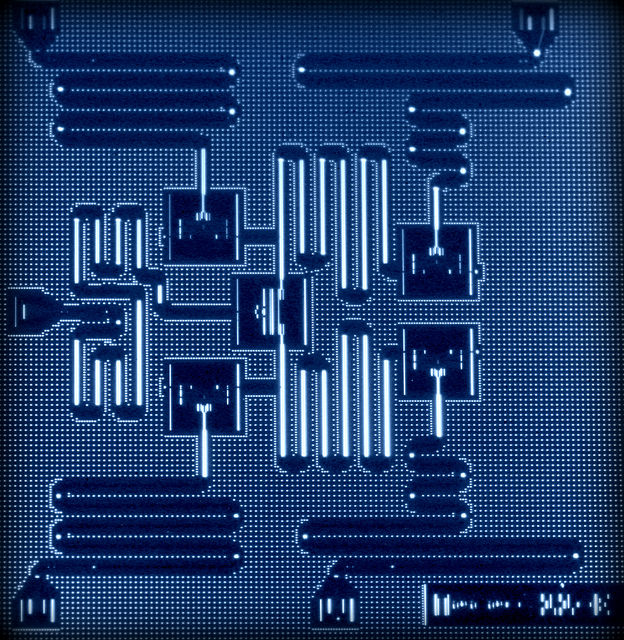

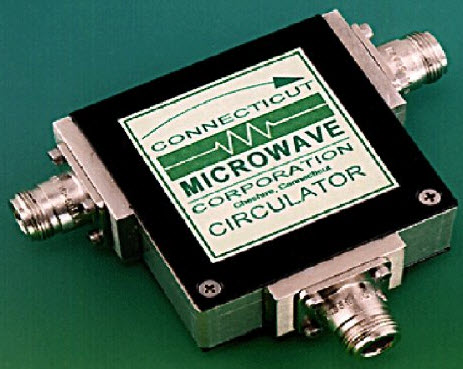

Current bulky circulator design (credit: Connecticut Microwave Corporation)

Columbia engineers previously invented a “full-duplex” radio integrated circuit on a conventional CMOS chip. “Full duplex” means simultaneous transmission and reception at the same frequency in a wireless radio, unlike “half-duplex” (transmitting and receiving at different times, used by current cell phones and other wireless devices). Full duplex also allows for faster transmission speeds.

The new circulator technology could further miniaturize future WiFi and other wireless devices (see “Lighter, cheaper radio-wave device could double the useful bandwidth in wireless communications,” which describes an earlier circulator developed by University of Texas at Austin engineers that was not integrated on a CMOS chip).

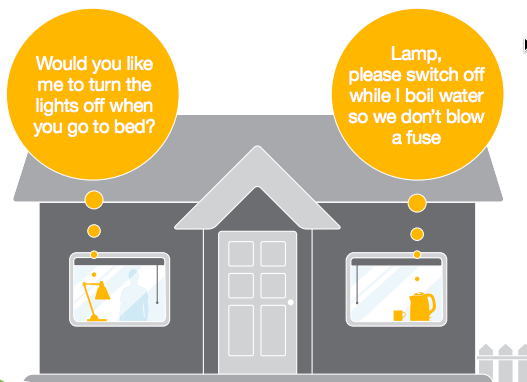

“Full-duplex communications, where the transmitter and the receiver operate at the same time and at the same frequency, has become a critical research area and now we’ve shown that WiFi capacity can be doubled on a nanoscale silicon chip with a single antenna,” said Electrical Engineering Associate Professor Harish Krishnaswamy, director of the Columbia High-Speed and Mm-wave IC (CoSMIC) Lab. “This has enormous implications for devices like smartphones and tablets.”

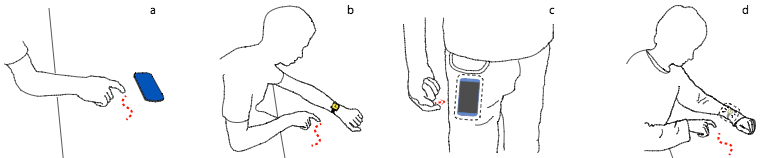

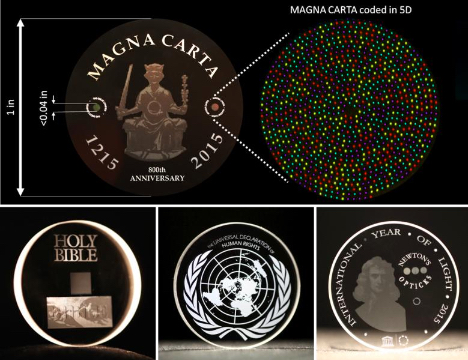

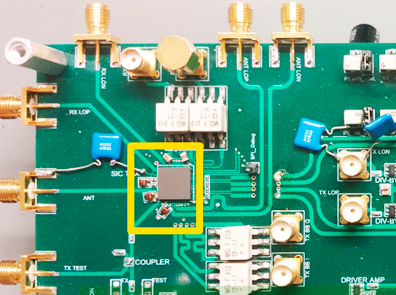

Prototype of first CMOS full-duplex receiver IC with integrated magnetic-free circulator (credit: Negar Reiskarimian, Columbia Engineering)

By combining circulator and full-duplex technologies, “this technology could revolutionize the field of telecommunications,” he said. “Our circulator is the first to be put on a silicon chip, and we get literally orders of magnitude better performance than prior work.”

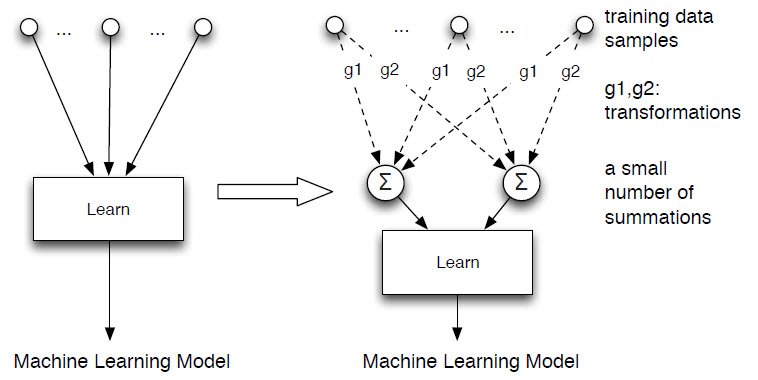

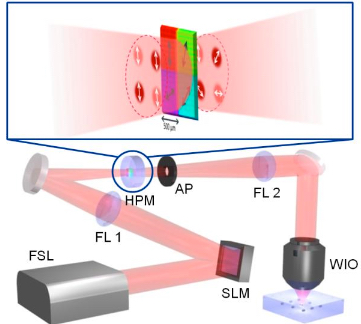

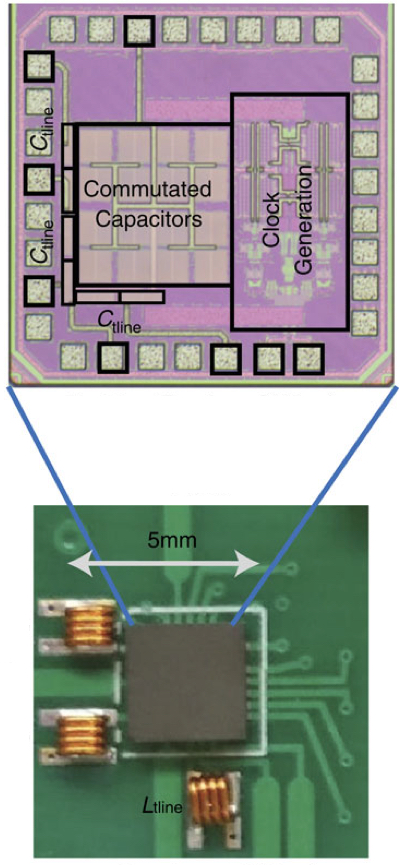

How to embed circulator technology on a CMOS chip

CMOS circulator IC on a printed-circuit board, interfaced with off-chip inductors (credit: Negar Reiskarimian, Columbia Engineering)

A circulator allows a single antenna to both transmit and receive. To do that, it has to “break” Lorentz reciprocity, a fundamental physical characteristic of most electronic structures that requires electromagnetic waves to travel in the same manner in both the forward and reverse directions.
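One way to picture reciprocity is with a scattering matrix, where entry `S[i][j]` is the fraction of a wave entering port `j` that exits port `i`. A reciprocal device has a symmetric matrix; an ideal circulator does not. The sketch below is a textbook idealization (lossless, perfectly matched), not a model of the Columbia chip, and the port labels (0 = transmitter, 1 = antenna, 2 = receiver) and function names are assumptions made for illustration.

```python
# Idealized scattering matrices. S[i][j] = amplitude leaving port i
# for a unit wave entering port j. Port labels are illustrative assumptions.
TX, ANT, RX = 0, 1, 2

# Ideal 3-port circulator: TX -> ANT, ANT -> RX, RX -> TX.
CIRCULATOR = [
    [0, 0, 1],
    [1, 0, 0],
    [0, 1, 0],
]

# Ideal 2-port through-line, a simple reciprocal device for contrast.
THROUGH_LINE = [
    [0, 1],
    [1, 0],
]


def is_reciprocal(s):
    """A network is reciprocal when its S-matrix equals its transpose."""
    n = len(s)
    return all(s[i][j] == s[j][i] for i in range(n) for j in range(n))


def outputs_for(s, in_port):
    """Amplitudes leaving every port for a unit wave entering in_port."""
    return [s[out][in_port] for out in range(len(s))]


if __name__ == "__main__":
    print("through-line reciprocal:", is_reciprocal(THROUGH_LINE))
    print("circulator reciprocal:  ", is_reciprocal(CIRCULATOR))
    print("wave into TX exits at:  ", outputs_for(CIRCULATOR, TX))
    print("wave into ANT exits at: ", outputs_for(CIRCULATOR, ANT))
```

In this idealization, the transmit signal reaches only the antenna and the received signal reaches only the receiver, which is why one antenna can serve both paths.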

The traditional way of breaking Lorentz reciprocity and building radio-frequency circulators has been to use magnetic materials such as ferrites, which lose reciprocity when an external magnetic field is applied. But these materials are not compatible with silicon chip technology, and ferrite circulators are bulky and expensive.

Krishnaswamy and his team were able to design a highly miniaturized circulator that uses switches to rotate the signal across a set of capacitors to emulate the non-reciprocal “twist” of the signal that is seen in ferrite materials.

“Being able to put the circulator on the same chip as the rest of the radio has the potential to significantly reduce the size of the system, enhance its performance, and introduce new functionalities critical to full duplex,” says PhD student Jin Zhou, who integrated the circulator with a full-duplex receiver.

Circulator circuits and components have applications in many different scenarios, from radio-frequency full-duplex communications and radar to building isolators that prevent high-power transmitters from being damaged by back-reflections from the antenna. The ability to break reciprocity also opens up new possibilities in radio-frequency signal processing that are yet to be discovered.

The circulator research was published April 15 in an open-access paper in Nature Communications. A paper detailing the single-chip full-duplex radio with the circulator and additional echo cancellation was presented at the 2016 IEEE International Solid-State Circuits Conference on February 2.

The work has been funded by the DARPA Microsystems Technology Office and the National Science Foundation.

Abstract of Magnetic-free non-reciprocity based on staggered commutation

Lorentz reciprocity is a fundamental characteristic of the vast majority of electronic and photonic structures. However, non-reciprocal components such as isolators, circulators and gyrators enable new applications ranging from radio frequencies to optical frequencies, including full-duplex wireless communication and on-chip all-optical information processing. Such components today dominantly rely on the phenomenon of Faraday rotation in magneto-optic materials. However, they are typically bulky, expensive and not suitable for insertion in a conventional integrated circuit. Here we demonstrate magnetic-free linear passive non-reciprocity based on the concept of staggered commutation. Commutation is a form of parametric modulation with very high modulation ratio. We observe that staggered commutation enables time-reversal symmetry breaking within very small dimensions (λ/1,250 × λ/1,250 in our device), resulting in a miniature radio-frequency circulator that exhibits reduced implementation complexity, very low loss, strong non-reciprocity, significantly enhanced linearity and real-time reconfigurability, and is integrated in a conventional complementary metal–oxide–semiconductor integrated circuit for the first time.

Abstract of Receiver with integrated magnetic-free N-path-filter-based non-reciprocal circulator and baseband self-interference cancellation for full-duplex wireless

Full-duplex (FD) is an emergent wireless communication paradigm where the transmitter (TX) and the receiver (RX) operate at the same time and at the same frequency. The fundamental challenge with FD is the tremendous amount of TX self-interference (SI) at the RX. Low-power applications relax FD system requirements [1], but an FD system with -6dBm transmit power, 10MHz signal bandwidth and 12dB NF budget still requires 86dB of SI suppression to reach the -92dBm noise floor. Recent research has focused on techniques for integrated self-interference cancellation (SIC) in FD receivers [1-3]. Open challenges include achieving the challenging levels of SIC through multi-domain cancellation, and low-loss shared-antenna (ANT) interfaces with high TX-to-RX isolation. Shared-antenna interfaces enable compact form factor, translate easily to MIMO, and ease system design through channel reciprocity.
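The 86dB and -92dBm figures in the abstract can be reproduced with a standard link-budget calculation. The sketch below assumes the usual room-temperature thermal noise density of about -174 dBm/Hz, which the abstract does not state explicitly; the function names are illustrative.

```python
import math

# Assumption: thermal noise density kT at roughly 290 K, in dBm per hertz.
THERMAL_NOISE_DBM_PER_HZ = -174.0


def noise_floor_dbm(bandwidth_hz, noise_figure_db):
    """Receiver noise floor: kT density + 10*log10(bandwidth) + noise figure."""
    return (THERMAL_NOISE_DBM_PER_HZ
            + 10.0 * math.log10(bandwidth_hz)
            + noise_figure_db)


def required_si_suppression_db(tx_power_dbm, bandwidth_hz, noise_figure_db):
    """Suppression needed to push TX self-interference down to the noise floor."""
    return tx_power_dbm - noise_floor_dbm(bandwidth_hz, noise_figure_db)


if __name__ == "__main__":
    # Values quoted in the abstract: -6 dBm TX power, 10 MHz bandwidth, 12 dB NF.
    floor = noise_floor_dbm(10e6, 12.0)
    suppression = required_si_suppression_db(-6.0, 10e6, 12.0)
    print(f"noise floor: {floor:.0f} dBm")                 # -92 dBm
    print(f"required SI suppression: {suppression:.0f} dB")  # 86 dB
```

The 10 MHz bandwidth contributes 70 dB over the per-hertz density, so the floor is -174 + 70 + 12 = -92 dBm, and the gap from the -6 dBm transmit power is 86 dB.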