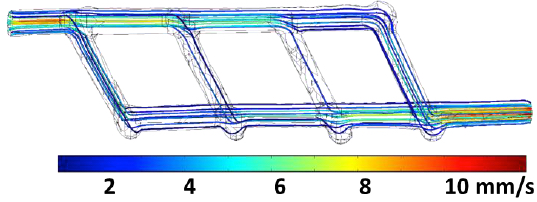

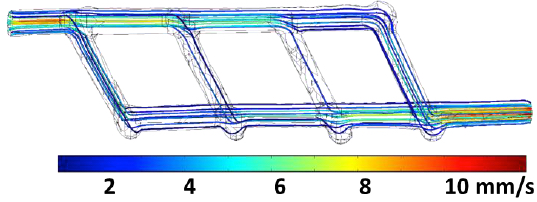

A miniature 3D-printed network of microchannels designed to link an artery to a tissue implant so that blood flow can deliver oxygen and nutrients. Inlet and outlet are ~1 millimeter in diameter; the multiple smaller vessels are ~600 to 800 microns in diameter. Flow streamlines are color-coded by flow rate. Flow rate at the inlet is 0.12 mL/min. (credit: Renganaden Sooppan et al./Tissue Engineering Part C: Methods)

Scientists have designed an innovative structure containing an intricate microchannel network of simulated blood vessels that addresses one of the biggest challenges in regenerative medicine: how to deliver oxygen and nutrients to all cells in an artificial organ or tissue implant that takes days or weeks to grow in the lab prior to surgery.

The new study was performed by a research team led by Jordan Miller, assistant professor of bioengineering at Rice University, and Pavan Atluri, assistant professor of surgery at the University of Pennsylvania (Penn).

Stayin’ alive, stayin’ alive …

Miller explained that one of the hurdles of engineering large artificial tissues, such as livers or kidneys, is keeping the cells inside them alive. Tissue engineers have typically relied on the body’s own ability to grow blood vessels, for example by implanting engineered tissue scaffolds inside the body and waiting for blood vessels from nearby tissues to spread via arborization to the engineered constructs.

But that process can take weeks, and cells deep inside the constructs often starve or die from lack of oxygen before they’re reached by the slow-approaching blood vessels.

“What a surgeon needs in order to do transplant surgery isn’t just a mass of cells; the surgeon needs a vessel inlet and an outlet that can be directly connected to arteries and veins,” he said.

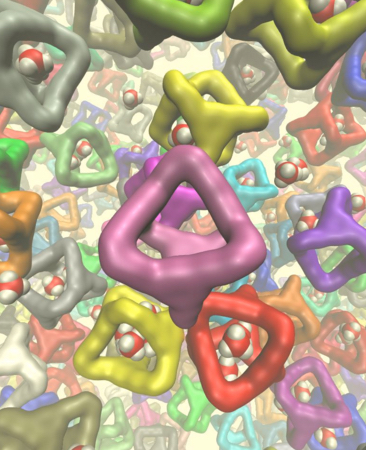

3D-printing pastry-inspired sugar glass to form an intricate microchannel capillary lattice

“We wondered if there were a way to implant a 3-D printed construct where we could connect host arteries directly to the construct and get perfusion [blood flow] immediately. In this study, we are taking the first step toward applying an analogy from transplant surgery to 3-D printed constructs we make in the lab.”

Miller turned to a method he had pioneered in 2012, inspired by the intricate sugar glass cages that pastry chefs craft to garnish desserts.

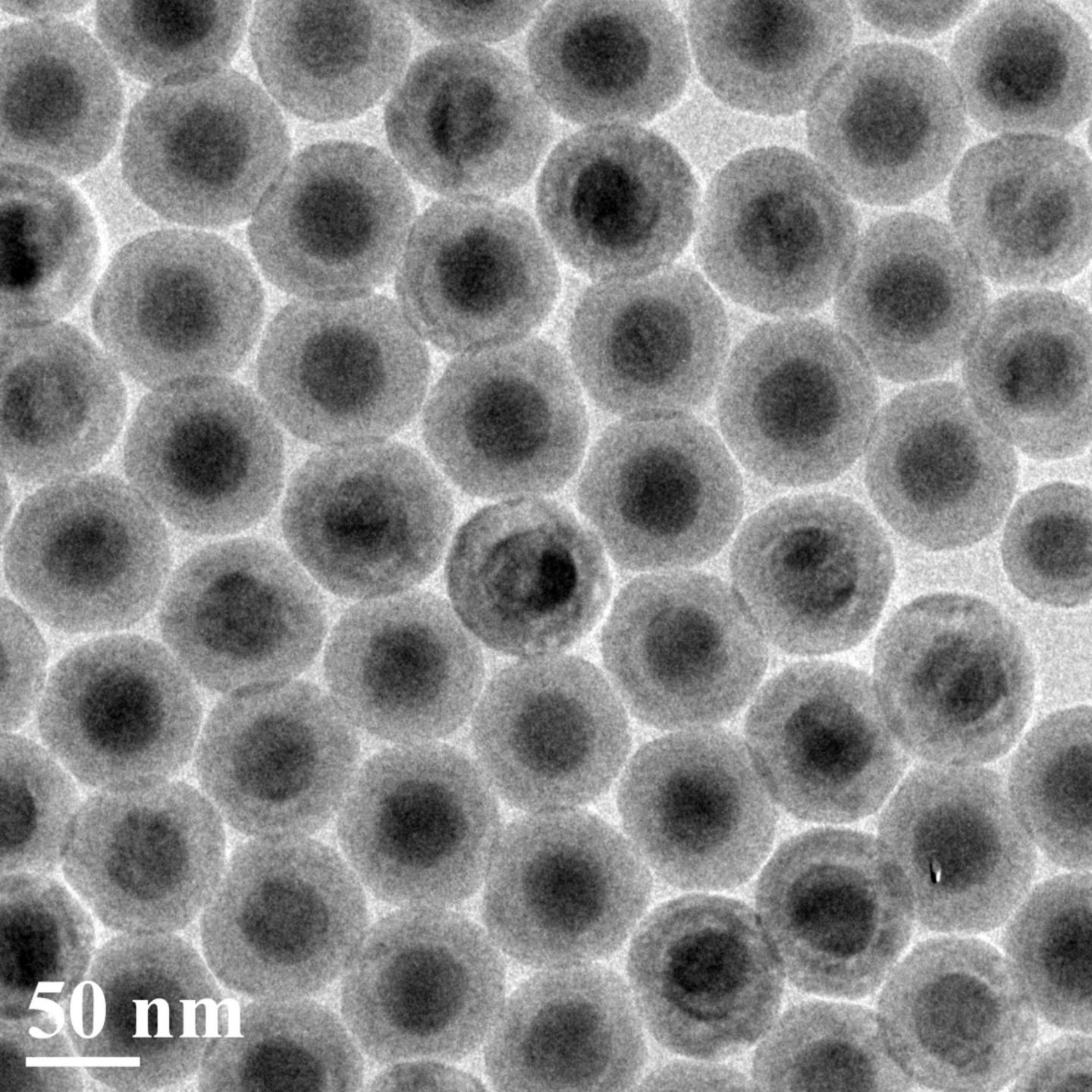

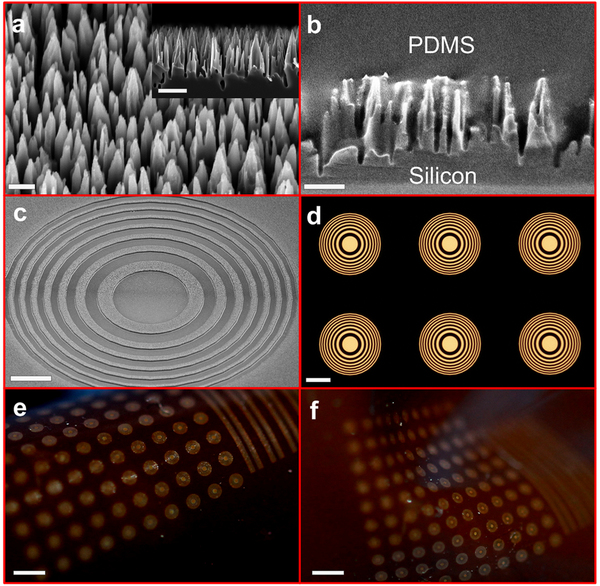

Description of sugar glass printing and initial flow testing. A: Extrusion print head in the process of printing a sugar glass lattice. B: Final sugar lattice prior to casting. The lattice contains a network of filaments supported by a surrounding well. Red line denotes the outer edge of the well that will be filled with PDMS silicone gel during casting. C: Schematic of printed sugar glass network. Drawing on the left denotes sugar filaments after printing, while the figure on the right shows trimmed filaments prior to casting. D: Final cast PDMS gel with microchannel network. (credit: Renganaden Sooppan et al./Tissue Engineering Part C: Methods)

Using an open-source 3-D printer to lay down individual filaments of sugar glass one layer at a time, the researchers printed a lattice of would-be blood vessels. Once the sugar hardened, they placed it in a mold and poured in silicone gel. After the gel cured, Miller’s team dissolved the sugar, leaving behind a network of small channels in the silicone.

“They don’t yet look like the blood vessels found in organs, but they have some of the key features relevant for a transplant surgeon,” Miller said. “We created a construct that has one inlet and one outlet, which are about 1 millimeter in diameter, and these main vessels branch into multiple smaller vessels, which are about 600 to 800 microns.”
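To give a feel for these dimensions, the short Python sketch below estimates the mean flow velocity at the 1-millimeter inlet from the 0.12 mL/min inlet flow rate given in the figure caption, and a rough Reynolds number. This is only a back-of-the-envelope illustration, not a calculation from the paper: the function names are hypothetical, and the blood density and viscosity are assumed textbook-style values rather than figures reported by the researchers.

```python
import math

# Assumed, typical whole-blood properties (NOT taken from the study).
ASSUMED_BLOOD_DENSITY_KG_M3 = 1060.0
ASSUMED_BLOOD_VISCOSITY_PA_S = 3.5e-3


def mean_velocity_m_per_s(flow_ml_per_min: float, diameter_mm: float) -> float:
    """Mean velocity v = Q / A for flow through a circular channel."""
    flow_m3_per_s = flow_ml_per_min * 1e-6 / 60.0   # 1 mL = 1e-6 m^3
    radius_m = diameter_mm * 1e-3 / 2.0
    area_m2 = math.pi * radius_m ** 2
    return flow_m3_per_s / area_m2


def reynolds_number(velocity_m_per_s: float, diameter_mm: float) -> float:
    """Re = rho * v * D / mu, using the assumed fluid properties above."""
    diameter_m = diameter_mm * 1e-3
    return (ASSUMED_BLOOD_DENSITY_KG_M3 * velocity_m_per_s * diameter_m
            / ASSUMED_BLOOD_VISCOSITY_PA_S)


if __name__ == "__main__":
    # Values from the article: 0.12 mL/min through a ~1 mm inlet.
    v = mean_velocity_m_per_s(0.12, 1.0)
    re = reynolds_number(v, 1.0)
    print(f"mean inlet velocity: {v * 1000:.2f} mm/s")
    print(f"approximate Reynolds number: {re:.2f}")
```

Under these assumptions the inlet velocity comes out at a few millimeters per second and the Reynolds number is below 1, which would correspond to smooth, laminar flow. The velocities in the smaller 600-to-800-micron branches are not estimated here, because the article does not say how the flow divides among them.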

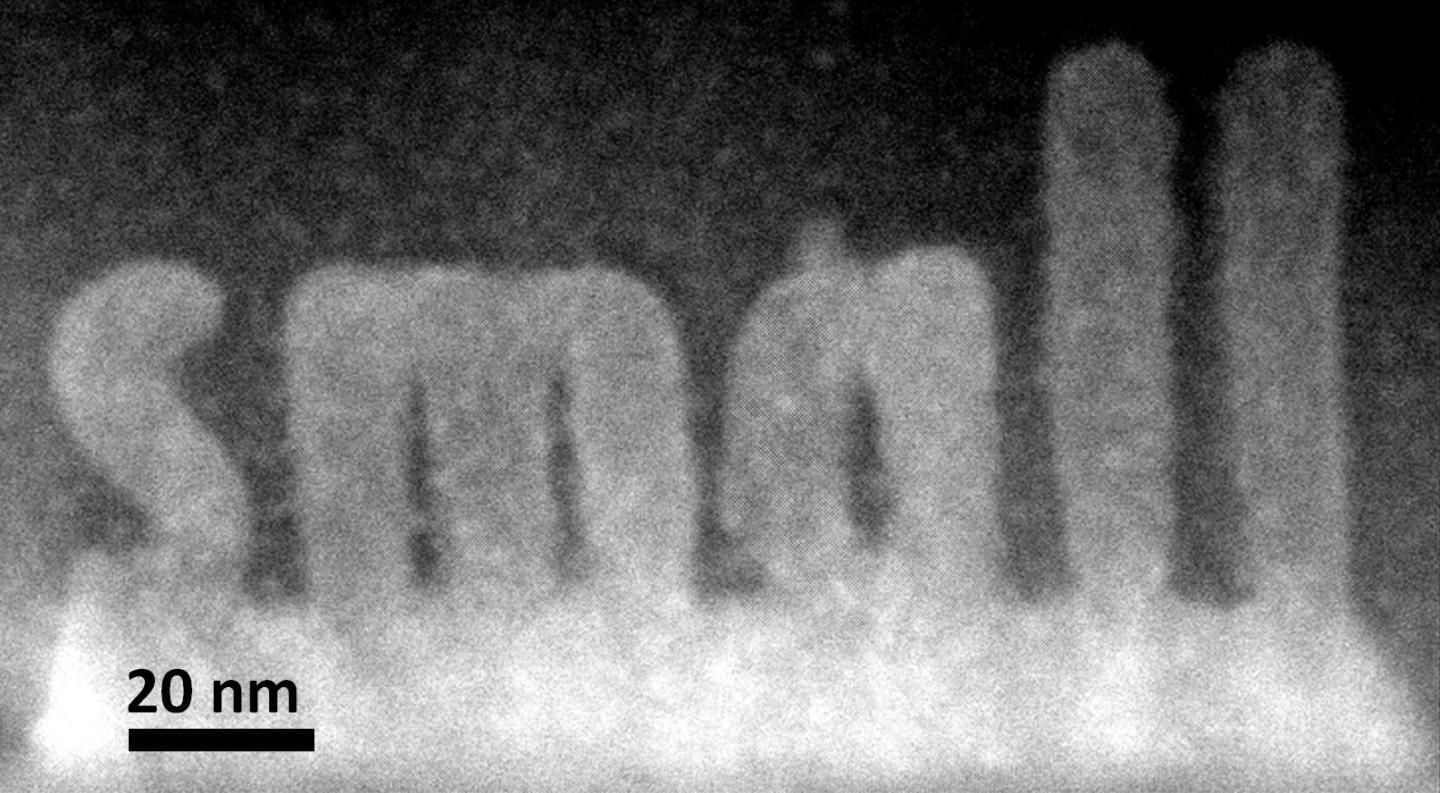

Passing the surgeon-oriented test: normal blood flow

Collaborating surgeons at Penn in Atluri’s group then connected the inlet and outlet of the engineered gel to a major (femoral) artery in a small animal model. Using Doppler imaging technology, the team observed and measured blood flow through the construct and found that it withstood physiologic pressures and remained open and unobstructed for up to three hours.

They found that blood flowed normally through test constructs that were surgically connected to native blood vessels.

“This study provides a first step toward developing a transplant model for tissue engineering where the surgeon can directly connect arteries to an engineered tissue,” Miller said. “In the future, we aim to utilize a biodegradable material that also contains live cells next to these perfusable vessels for direct transplantation and monitoring long term.”

The report was published as an open-access paper in the journal Tissue Engineering Part C: Methods.

Abstract of Tissue Engineering Part C: Methods

The field of tissue engineering has advanced the development of increasingly biocompatible materials to mimic the extracellular matrix of vascularized tissue. However, a majority of studies instead rely on a multi-day inosculation between engineered vessels and host vasculature, rather than the direct connection of engineered microvascular networks with host vasculature. We have previously demonstrated that the rapid casting of 3D printed sacrificial carbohydrate glass is an expeditious and reliable method of creating scaffolds with 3D microvessel networks. Here, we describe a new surgical technique to directly connect host femoral arteries to patterned microvessel networks. Vessel networks were connected in vivo in a rat femoral artery graft model. We utilized laser Doppler imaging to monitor hind limb ischemia for several hours after implantation and thus measured the vascular patency of implants that were anastomosed to the femoral artery. This study may provide a method to overcome the challenge of rapid oxygen and nutrient delivery to engineered vascularized tissues implanted in vivo.