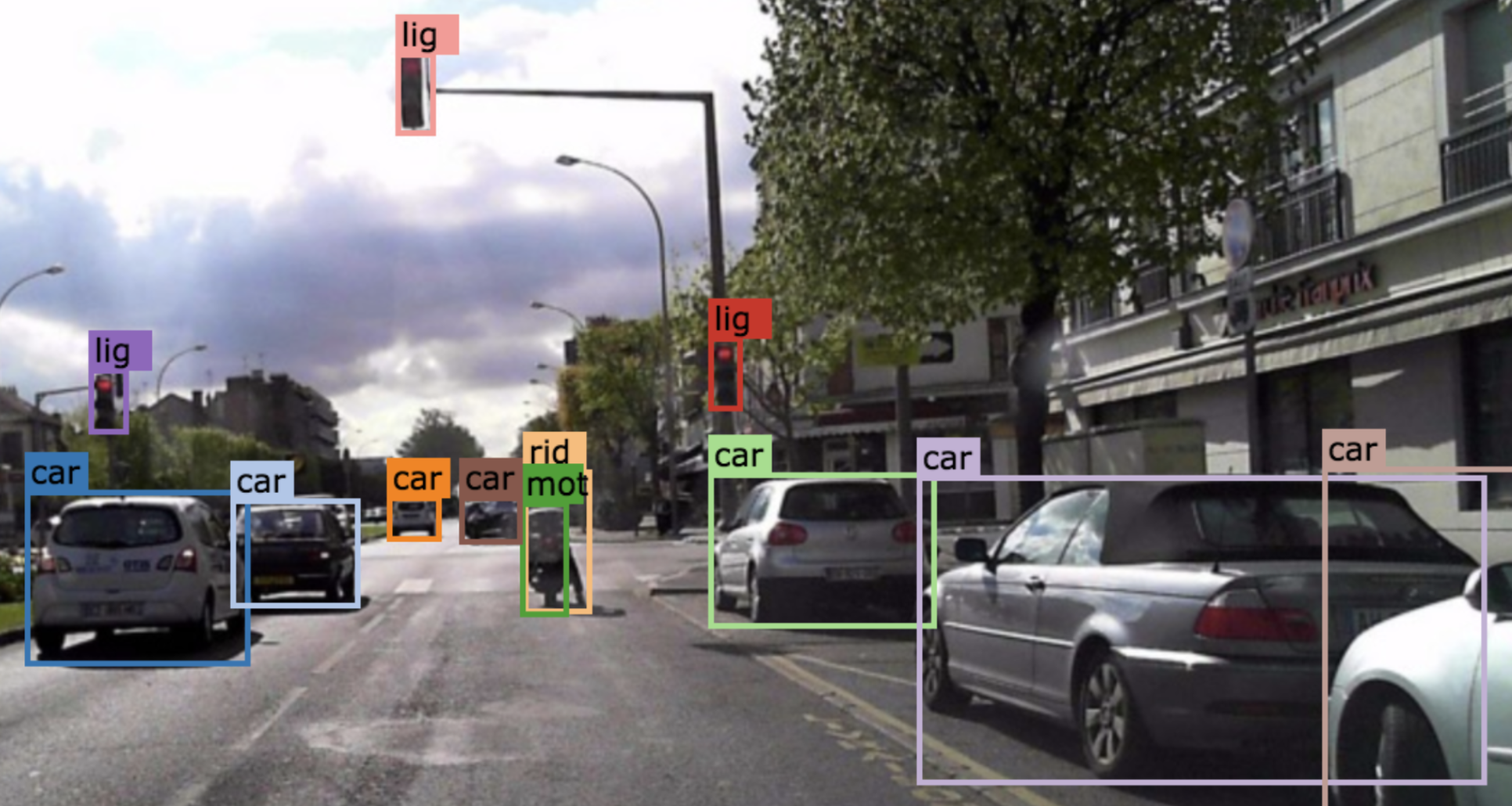

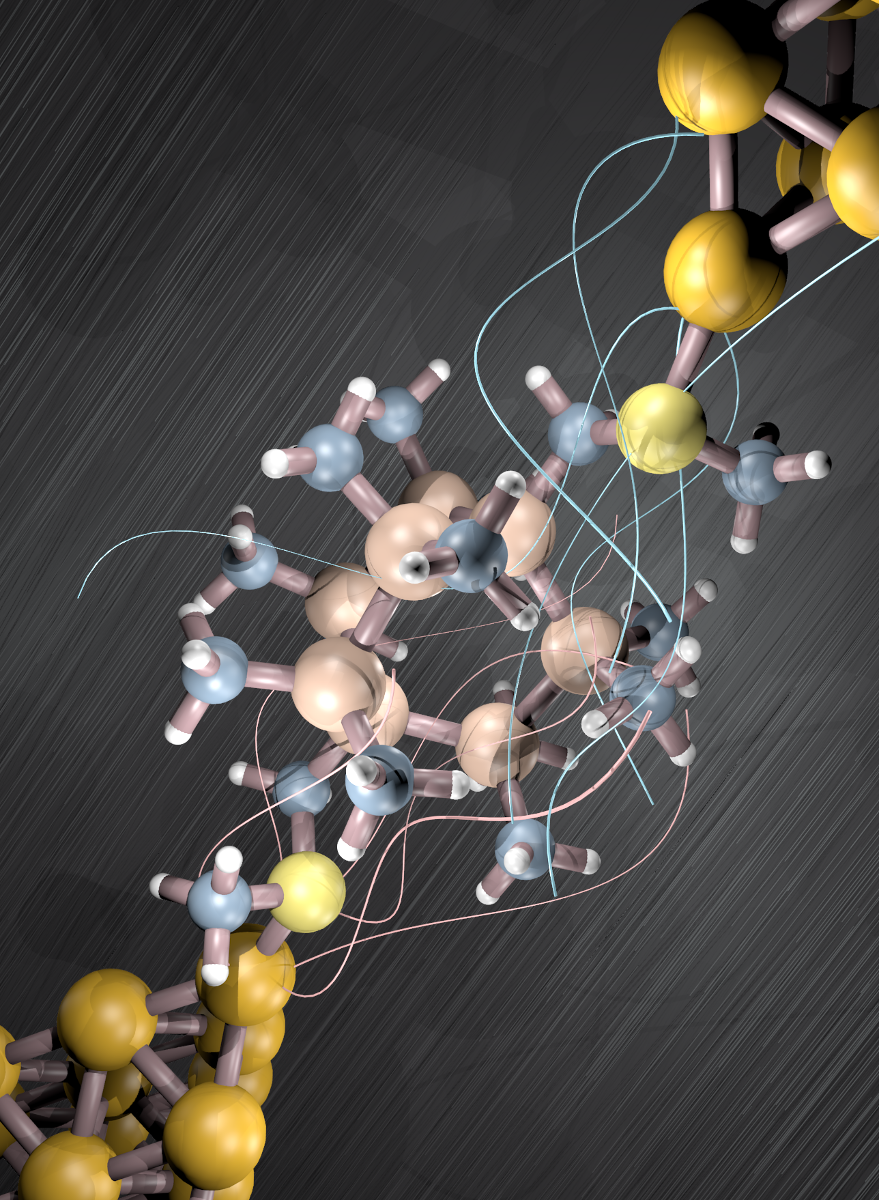

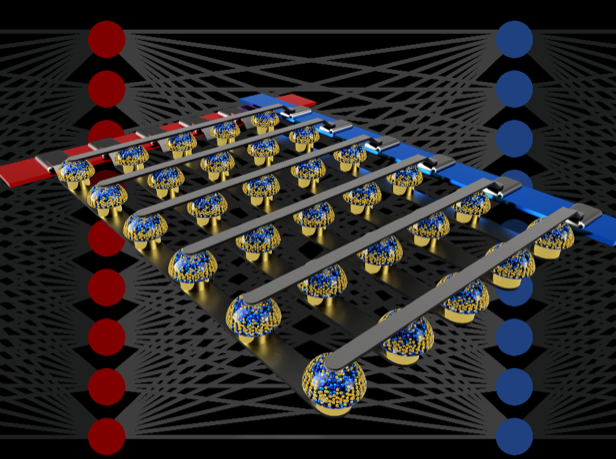

Crossbar arrays of non-volatile memories can accelerate the training of neural networks by performing computation at the actual location of the data. (credit: IBM Research)

Imagine advanced artificial intelligence (AI) running on your smartphone — instantly presenting the information that’s relevant to you in real time. Or a supercomputer that requires hundreds of times less energy.

The IBM Research AI team has demonstrated a new approach that they believe is a major step toward those scenarios.

Deep neural networks normally require fast, powerful graphics processing unit (GPU) hardware accelerators to deliver the needed speed and computational accuracy, such as the GPUs used in the just-announced Summit supercomputer. But GPUs are highly energy-intensive, which makes them expensive to use and limits their future growth, the researchers explain in a recent paper published in Nature.

Analog memory replaces software, overcoming the “von Neumann bottleneck”

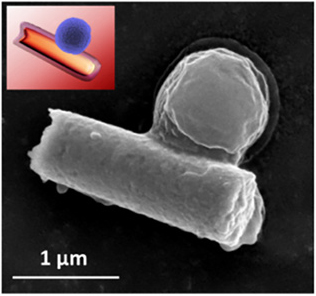

Instead, the IBM researchers used large arrays of non-volatile analog memory devices (which use continuously variable signals rather than binary 0s and 1s) to perform computations. Those arrays allowed the researchers to create, in hardware, the same scale and precision of AI calculations that are achieved by more energy-intensive systems in software, but running hundreds of times faster and at hundreds of times lower power — without sacrificing the ability to create deep learning systems.*

The trick was to replace conventional von Neumann architecture, which is “constrained by the time and energy spent moving data back and forth between the memory and the processor (the ‘von Neumann bottleneck’),” the researchers explain in the paper. “By contrast, in a non-von Neumann scheme, computing is done at the location of the data [in memory], with the strengths of the synaptic connections (the ‘weights’) stored and adjusted directly in memory.”

“Delivering the future of AI will require vastly expanding the scale of AI calculations,” they note. “Instead of shipping digital data on long journeys between digital memory chips and processing chips, we can perform all of the computation inside the analog memory chip. We believe this is a major step on the path to the kind of hardware accelerators necessary for the next AI breakthroughs.”**

Given these encouraging results, the IBM researchers have already started exploring the design of prototype hardware accelerator chips, as part of an IBM Research Frontiers Institute project, they said.

Ref.: Nature. Source: IBM Research

* “From these early design efforts, we were able to provide, as part of our Nature paper, initial estimates for the potential of such [non-volatile memory]-based chips for training fully-connected layers, in terms of the computational energy efficiency (28,065 GOP/sec/W) and throughput-per-area (3.6 TOP/sec/mm²). These values exceed the specifications of today’s GPUs by two orders of magnitude. Furthermore, fully-connected layers are a type of neural network layer for which actual GPU performance frequently falls well below the rated specifications. … Analog non-volatile memories can efficiently accelerate [the algorithms] at the heart of many recent AI advances. These memories allow the ‘multiply-accumulate’ operations used throughout these algorithms to be parallelized in the analog domain, at the location of weight data, using underlying physics. Instead of large circuits to multiply and add digital numbers together, we simply pass a small current through a resistor into a wire, and then connect many such wires together to let the currents build up. This lets us perform many calculations at the same time, rather than one after the other.”
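The analog multiply-accumulate described in this note can be illustrated with a short digital simulation. This is only a sketch under simplifying assumptions, not the researchers' implementation: each memory device is modeled as an ideal resistor with a fixed conductance, and the function name `crossbar_currents` and all numeric values are hypothetical. Each device contributes a current equal to its conductance times the voltage on its row (Ohm's law), and the currents that share a column wire add up (Kirchhoff's current law), which amounts to a matrix-vector product.

```python
def crossbar_currents(conductances, voltages):
    """Simulate the column currents of an idealized crossbar array.

    conductances[i][j]: conductance (siemens) of the device joining
                        input row i to output column j (the "weight").
    voltages[i]:        voltage (volts) applied to input row i.
    Returns one summed current (amperes) per column wire.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one voltage per row is required")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law: device current = conductance * voltage.
            # Kirchhoff's current law: currents on a shared wire add.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array; the values are for illustration only.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]
print(crossbar_currents(G, V))
```

In the physical array every device conducts at once, so the nested loop above collapses into a single parallel step. The simulation also ignores the device-to-device variability that the second note discusses.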

** “By combining long-term storage in phase-change memory (PCM) devices, near-linear update of conventional complementary metal-oxide semiconductor (CMOS) capacitors and novel techniques for cancelling out device-to-device variability, we finessed these imperfections and achieved software-equivalent DNN accuracies on a variety of different networks. These experiments used a mixed hardware-software approach, combining software simulations of system elements that are easy to model accurately (such as CMOS devices) together with full hardware implementation of the PCM devices. It was essential to use real analog memory devices for every weight in our neural networks, because modeling approaches for such novel devices frequently fail to capture the full range of device-to-device variability they can exhibit.”