Oculus announced Monday (April 30) that its much-anticipated Oculus Go virtual-reality headset is now available, priced at $199 (32GB storage version).

Oculus Go is a standalone headset: it needs neither a tethered PC, as Oculus Rift does, nor an inserted phone, as Gear VR and other consumer VR devices do. It features a high-resolution 2560 x 1440-pixel (538 ppi) display, a hand controller, and built-in surround sound and a microphone. (Ars Technica has an excellent review, including technical details.)

More than 1,000 games and experiences for the Oculus Go are already available. Two notable examples: Anshar Online, a futuristic Ready Player One-style multiplayer space shooter, and Oculus Rooms, a social lounge where friends (as avatars) can watch TV or movies together on a 180-inch virtual screen and play Hasbro board games, redefining the solitary VR experience.

Next-gen Oculus VR headsets

Today (May 2) at F8, Facebook’s developer conference, the company revealed a prototype Oculus VR headset called “Half Dome.” It widens the field of view from Oculus Go’s 100 degrees to 140 degrees, so you see more of the visual world in your periphery. Its variable-focus displays move up and down depending on what you’re focusing on, making objects that are close to the wearer’s eyes appear much sharper and crisper, Engadget reports.

Apple’s super-high-resolution wireless VR-AR headset

Meanwhile, Apple is working on a powerful wireless headset for AR and VR, planned for release in 2020, CNET reported Friday, citing sources (Apple has not commented).* Code-named T288, the headset will feature a super-high-resolution 8K** display for each eye (each with four times as many pixels as today’s 4K TVs), making the VR and AR images look highly lifelike, way beyond any devices currently available on the market.

To handle that extremely high resolution (and the associated data rate) while connecting to an external processor box, T288 will use WiGig, a high-speed, short-range 60GHz wireless technology capable of data transfer rates up to 7 Gbit/s. The headset would also use a smaller, faster, more power-efficient 5-nanometer-node processor, to be designed by Apple.
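To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. The 8K dimensions come from the Wikipedia footnote below and the 7 Gbit/s peak rate from the report; the color depth (24 bits per pixel) and refresh rate (60 frames per second) are assumptions chosen for illustration only, not reported specifications of T288.

```python
# Pixel dimensions: 8K UHD (from the article's footnote) and standard 4K UHD.
WIDTH_8K, HEIGHT_8K = 7680, 4320
WIDTH_4K, HEIGHT_4K = 3840, 2160

# Illustrative assumptions, NOT from the CNET report.
BITS_PER_PIXEL = 24       # assumed color depth
FRAMES_PER_SECOND = 60    # assumed refresh rate
DISPLAYS = 2              # one display per eye, as reported

WIGIG_GBPS = 7.0          # peak WiGig rate cited in the article


def pixels(width, height):
    """Total pixel count of one display."""
    return width * height


def uncompressed_gbps(width, height, bits_per_pixel, fps, displays):
    """Raw (uncompressed) video data rate in Gbit/s."""
    return pixels(width, height) * bits_per_pixel * fps * displays / 1e9


# How many times more pixels an 8K display has than a 4K display.
pixel_ratio = pixels(WIDTH_8K, HEIGHT_8K) / pixels(WIDTH_4K, HEIGHT_4K)

# Raw data rate for both eyes under the assumptions above.
raw_gbps = uncompressed_gbps(
    WIDTH_8K, HEIGHT_8K, BITS_PER_PIXEL, FRAMES_PER_SECOND, DISPLAYS
)

# Factor by which the raw stream exceeds the cited WiGig peak rate.
compression_needed = raw_gbps / WIGIG_GBPS

print(f"8K vs 4K pixel ratio: {pixel_ratio:.1f}x")
print(f"Uncompressed rate for two 8K displays: {raw_gbps:.1f} Gbit/s")
print(f"Reduction needed to fit {WIGIG_GBPS:.0f} Gbit/s: {compression_needed:.1f}x")
```

Under these assumptions, the raw stream for two 8K displays comes to roughly 96 Gbit/s, well over ten times WiGig's cited peak rate, which suggests the link would have to rely on compression or similar techniques. Different assumed frame rates or color depths would change the numbers proportionally.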

A patent application for VR/AR experiences in vehicles

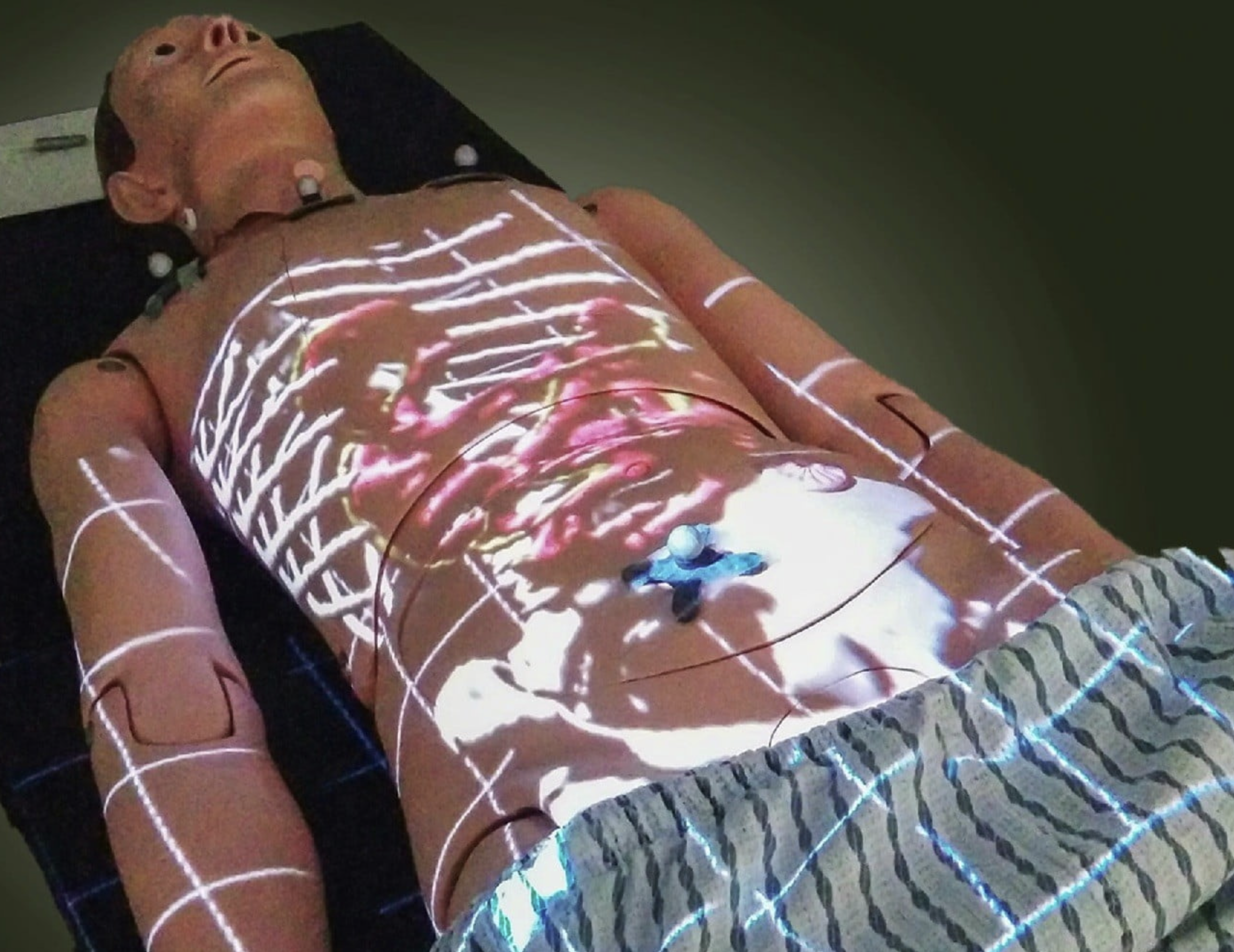

In related news, Apple has applied for a patent for a system using virtual reality (VR) and augmented reality (AR) to help alleviate both motion sickness and boredom in a moving vehicle.

The patent application covers three cases: experiences and environments using VR, AR, and mixed reality (VR + AR + the real world). Apple claims that the design will reduce nausea from vehicle movement (or from perceived movement in a virtual-reality experience).

The application also claims to provide motion or acceleration effects as entertainment, delivered via the vehicle seat, vehicle movement, or on-board systems. Examples include audio effects, using a heating/cooling fan to blow “wind,” and moving or shaking the seat.

For example, imagine riding in a car on a road that morphs into a prehistoric theme park (such as the Jurassic World: Blue VR experience from Universal Pictures and Felix & Paul Studios, which was announced Monday to coincide with the release of Oculus Go).

* At its June 2017 Worldwide Developers Conference (WWDC), Apple unveiled ARKit to enable developers to create augmented-reality apps for Apple products. Apple will hold its 29th WWDC on June 4–8 in San Jose.

** “8K resolution (8K UHD), is the current highest ultra high definition television (UHD TV) resolution in digital television and digital cinematography,” according to Wikipedia. “8K refers to the horizontal resolution, 7,680 pixels, forming the total image dimensions (7680×4320), otherwise known as 4320p. … It has four times as many pixels [as 4K], or 16 times as many pixels as Full HD. … 8K is speculated to become a mainstream consumer display resolution around 2023.”

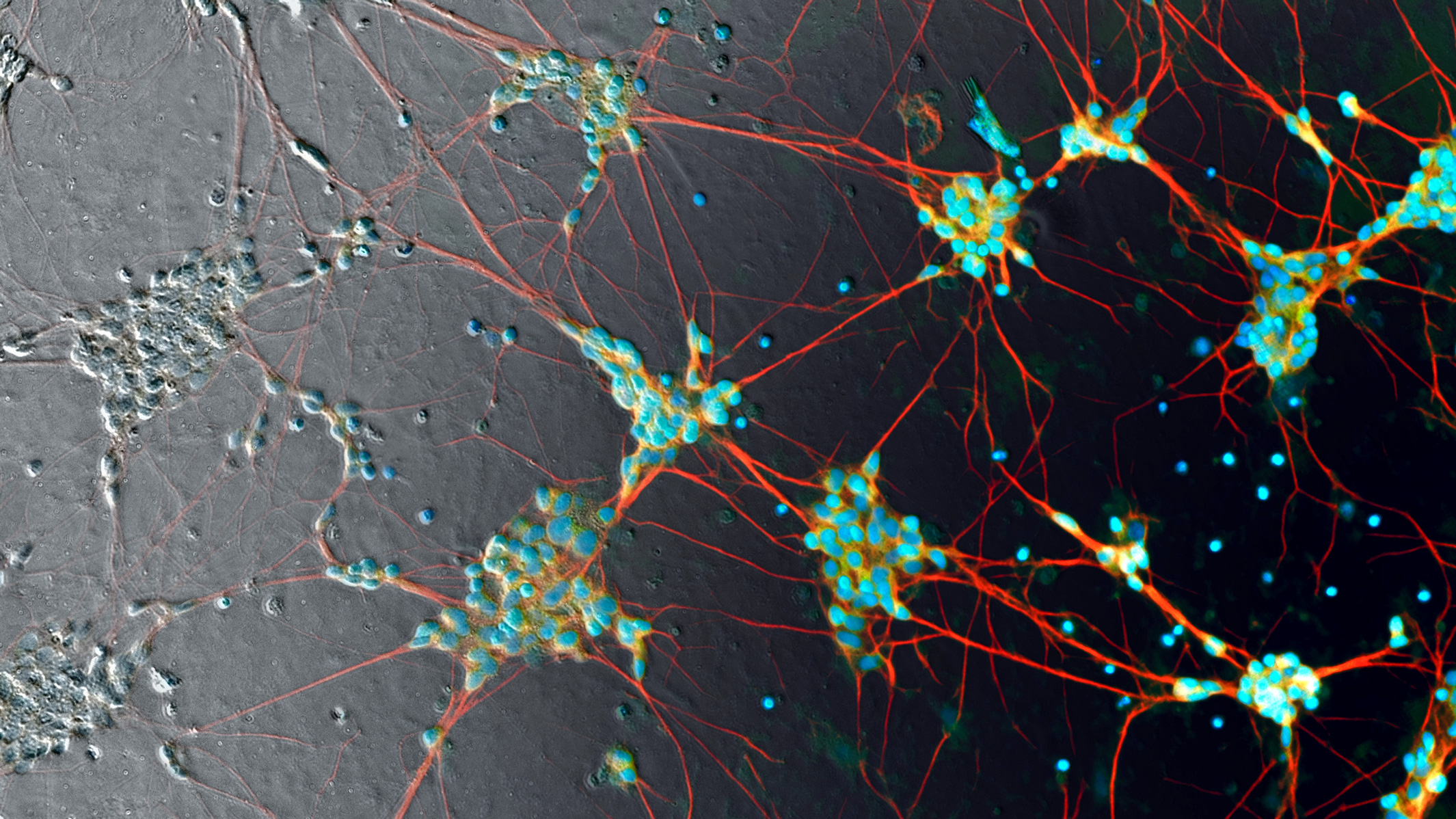

N-terminated surface of III-V nitrides from first-principles calculations. PMA ranges from 24.1 meV/u.c. in Fe/BN to 53.7 meV/u.c. in Fe/InN. Symmetry-protected degeneracy between x2 − y2 and xy orbitals and its lift by the spin-orbit coupling play a dominant role. As a consequence, PMA in Fe/III-V nitride thin films is dominated by first-order perturbation of the spin-orbit coupling, instead of second-order in conventional transition metal/oxide thin films. This game-changing scenario would also open a new field of magnetism on transition metal/nitride interfaces.
