An (unshielded) view of Mars (credit: SpaceX)

A NASA-funded study of rodents exposed to highly energetic charged particles — similar to the galactic cosmic rays that will bombard astronauts during extended spaceflights — found that the rodents developed long-term memory deficits, anxiety, depression, and impaired decision-making (not to mention long-term cancer risk).

The study by University of California, Irvine (UCI) scientists* appeared Oct. 10 in Nature’s open-access Scientific Reports. It follows an earlier study, published in May of last year in the open-access journal Science Advances, that showed somewhat shorter-term brain effects of galactic cosmic rays.

The rodents were subjected to charged-particle irradiation (ionized oxygen and titanium nuclei) at the NASA Space Radiation Laboratory at New York’s Brookhaven National Laboratory.

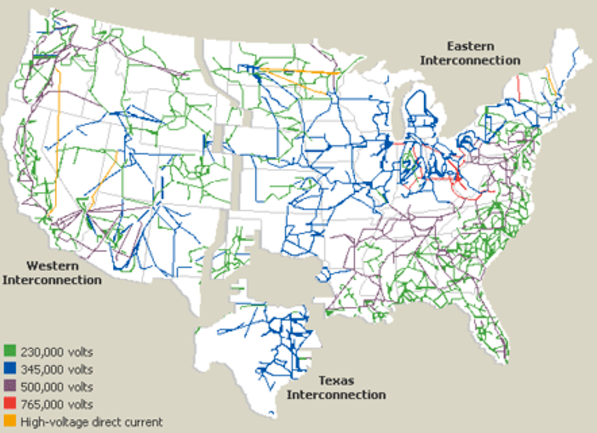

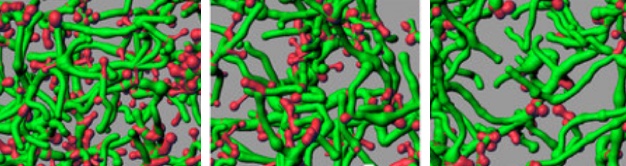

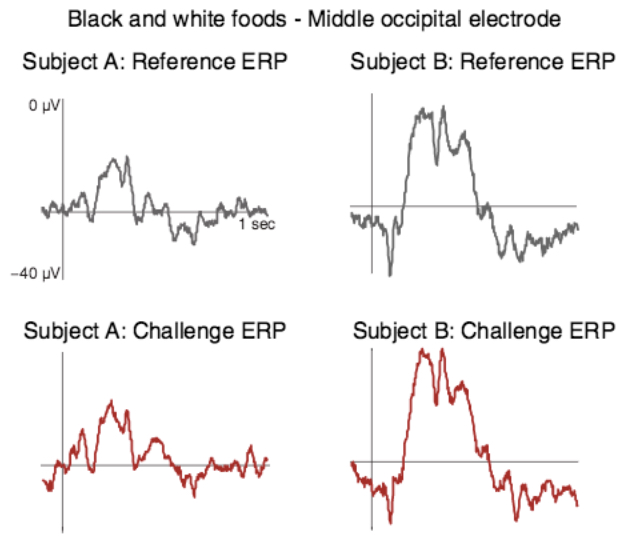

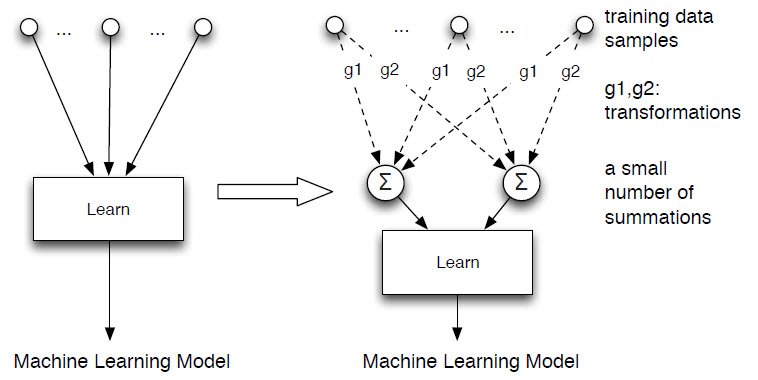

Digital imaging revealed a reduction of dendrites (green) and spines (red) on neurons of irradiated rodents, disrupting the transmission of signals among brain cells and thus impairing the brain’s neural network. Left: dendrites in unirradiated brains. Center: dendrites exposed to 0.05 Gy** ionized oxygen. Right: dendrites exposed to 0.30 Gy ionized oxygen. (credit: Vipan K. Parihar et al./Scientific Reports)

Six months after exposure, the researchers still found significant levels of brain inflammation and damage to neurons, poor performance on behavioral tasks designed to test learning and memory, and reduced “fear extinction” (an active process in which the brain suppresses prior unpleasant and stressful associations) — leading to elevated anxiety.

Similar but more severe cognitive dysfunction (“chemo brain”) is common in brain cancer patients who have received high-dose, photon-based radiation treatments.

“The space environment poses unique hazards to astronauts,” said Charles Limoli, a professor of radiation oncology in UCI’s School of Medicine. “Exposure to these particles can lead to a range of potential central nervous system complications that can occur during and persist long after actual space travel. Many of these adverse consequences to cognition may continue and progress throughout life.”

NASA health hazards advisory

“During a 360-day round trip [to Mars], an astronaut would receive a dose of about 662 millisieverts (0.662 Gy) [about twice the highest dose used in the UCI rodent experiment] according to data from the Radiation Assessment Detector (RAD) … piggybacking on Curiosity,” said Cary Zeitlin, PhD, a principal scientist in the Southwest Research Institute’s Space Science and Engineering Division and lead author of an article published in the journal Science in 2013. “In terms of accumulated dose, it’s like getting a whole-body CT scan once every five or six days [for a year],” he said in a NASA press release. There’s also the added risk from increased radiation during periodic solar storms.
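The figures in that quote can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the 662 mSv and 360-day figures quoted above and the 0.30 Gy top dose from the rodent experiment, and it follows this article's simplification of reading 1 Sv as 1 Gy. In general the two units differ by a radiation weighting factor, so the last ratio is a rough comparison, not a dosimetry result. The function and constant names are made up for this example.

```python
# Figures quoted in the article (not independently verified here).
ROUND_TRIP_DOSE_MSV = 662.0   # RAD-based estimate for the round trip
TRIP_DAYS = 360               # round-trip duration used in the quote
UCI_MAX_DOSE_GY = 0.30        # highest oxygen-ion dose in the rodent study


def daily_dose_msv(total_msv: float, days: int) -> float:
    """Average dose per day, assuming the dose accrues evenly over the trip."""
    return total_msv / days


def dose_over_interval_msv(total_msv: float, days: int, interval_days: int) -> float:
    """Dose accumulated over `interval_days` at the average daily rate."""
    return daily_dose_msv(total_msv, days) * interval_days


daily = daily_dose_msv(ROUND_TRIP_DOSE_MSV, TRIP_DAYS)
print(f"Average dose: {daily:.2f} mSv/day")  # 1.84 mSv/day

# "A whole-body CT scan once every five or six days" implies this per-scan dose:
for interval in (5, 6):
    per_scan = dose_over_interval_msv(ROUND_TRIP_DOSE_MSV, TRIP_DAYS, interval)
    print(f"Dose accumulated in {interval} days: {per_scan:.1f} mSv")  # 9.2, 11.0

# ASSUMPTION: 1 Sv is read as 1 Gy, as the article does in "662 mSv (0.662 Gy)".
trip_dose_gy = ROUND_TRIP_DOSE_MSV / 1000.0
ratio_to_uci = trip_dose_gy / UCI_MAX_DOSE_GY
print(f"Trip dose vs. highest UCI dose: {ratio_to_uci:.1f}x")  # 2.2x
```

Under these assumptions, the quote's comparison corresponds to a per-scan dose of roughly 9 to 11 mSv, and the round-trip dose comes out at a little over twice the highest dose used in the rodent experiment.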

In addition, as dramatized in the movie The Martian (and explained in this analysis), there’s also a radiation risk on the surface of Mars, although it is lower than in space, thanks to the atmosphere and to nighttime shielding of solar radiation by the planet itself.

In October 2015, the NASA Office of Inspector General issued a health hazards report related to space exploration, including a human mission to Mars.

“There’s going to be some risk of radiation, but it’s not deadly,” claimed SpaceX CEO Elon Musk on Sept. 27 in an announcement of plans to establish a permanent, self-sustaining civilization of a million people on Mars (with an initial flight as soon as 2024). “There will be some slightly increased risk of cancer, but I think it’s relatively minor. … Are you prepared to die? If that’s OK, you’re a candidate for going.”

Sightseers expose themselves to galactic cosmic radiation on Europa, a moon of Jupiter, shown in the background (credit: SpaceX)

Not to be one-upped by Musk, President Obama said in an op-ed on the CNN blog on Oct. 11 (perhaps channeling JFK) that “we have set a clear goal vital to the next chapter of America’s story in space: sending humans to Mars by the 2030s and returning them safely to Earth, with the ultimate ambition to one day remain there for an extended time.”

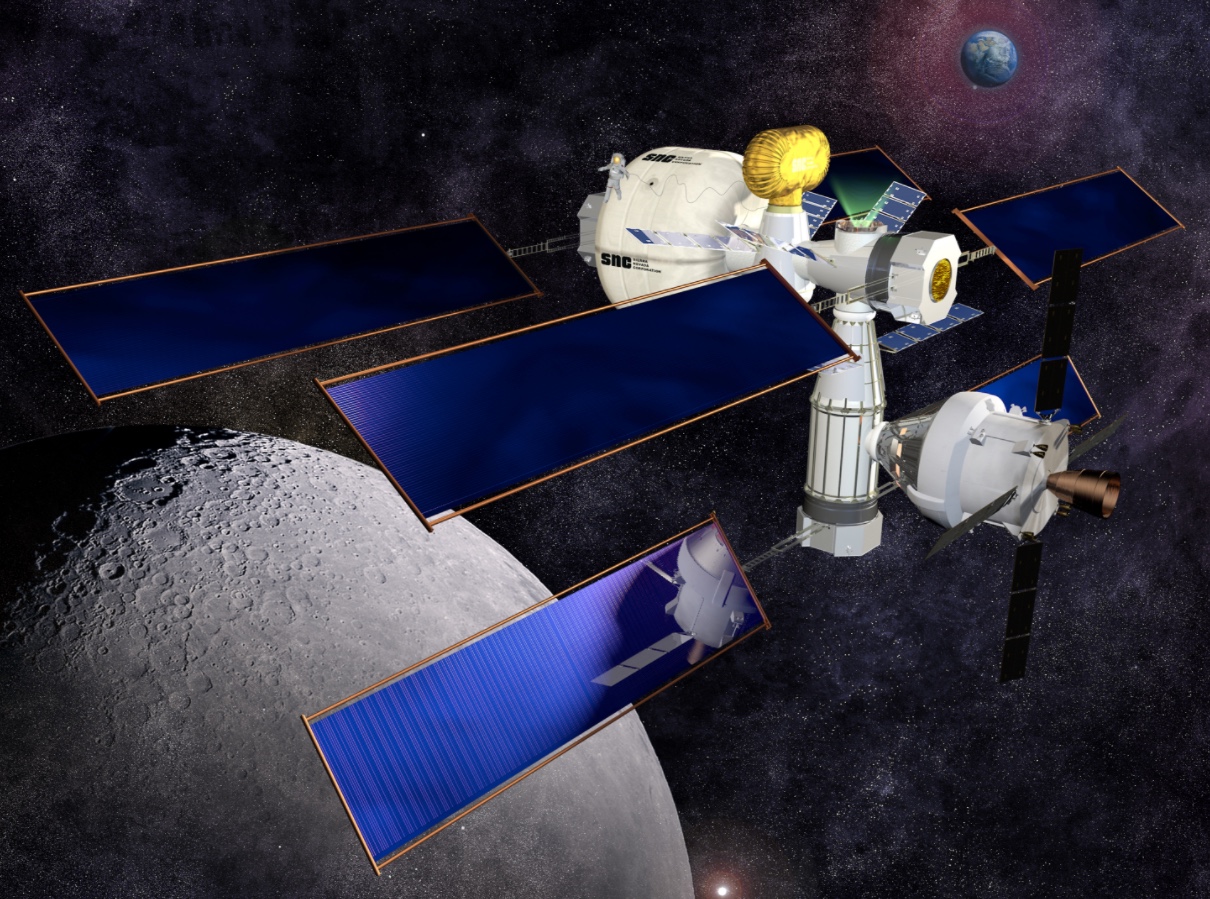

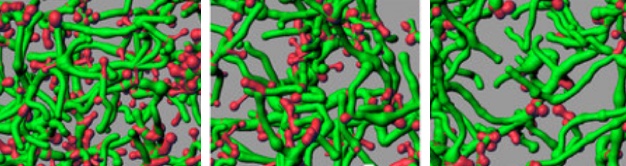

In a follow-up explainer, NASA Administrator Charles Bolden and John Holdren, Director of the White House Office of Science and Technology Policy, announced that in August NASA selected six companies, under the Next Space Technologies for Exploration Partnerships-2 (NextSTEP-2) program, to produce ground prototypes for deep space habitat modules. The explainer does not mention plans for avoiding astronaut brain damage, and the NextSTEP-2 illustrations don’t appear to address it either.

Concept image of Sierra Nevada Corporation’s habitation prototype, based on its Dream Chaser cargo module. No multi-ton shielding is apparent. (credit: Sierra Nevada)

Hitchhiking on an asteroid

So what are the solutions (if any)? Material shielding can be effective against galactic cosmic rays, but it’s expensive and impractical for space travel. For instance, a NASA design study for a large space station envisioned four metric tons of shielding per square meter to drop radiation exposure to 2.5 millisieverts (mSv), or 0.0025 Gy, annually. For comparison, the annual global average dose from natural background radiation is 2.4 mSv (3.6 mSv in the U.S., including X-rays), according to a 2008 United Nations report.
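To get a feel for why four metric tons per square meter is impractical, here is a rough sketch that multiplies that areal density by the outer surface area of a habitat. The habitat is a purely hypothetical closed cylinder (2 m radius, 8 m long); those dimensions are assumptions chosen for illustration and do not come from the NASA study or from any NextSTEP-2 design.

```python
import math

# From the NASA design study cited in the article.
SHIELD_AREAL_DENSITY_T_PER_M2 = 4.0  # metric tons of shielding per square meter


def cylinder_surface_area_m2(radius_m: float, length_m: float) -> float:
    """Outer surface area of a closed cylinder: side wall plus two end caps."""
    side = 2 * math.pi * radius_m * length_m
    caps = 2 * math.pi * radius_m ** 2
    return side + caps


def shield_mass_tonnes(area_m2: float,
                       areal_density: float = SHIELD_AREAL_DENSITY_T_PER_M2) -> float:
    """Total shielding mass if the whole surface is covered at the given density."""
    return area_m2 * areal_density


# HYPOTHETICAL habitat dimensions, for illustration only.
HABITAT_RADIUS_M = 2.0
HABITAT_LENGTH_M = 8.0

area = cylinder_surface_area_m2(HABITAT_RADIUS_M, HABITAT_LENGTH_M)
mass = shield_mass_tonnes(area)
print(f"Surface area: {area:.0f} m^2")       # about 126 m^2
print(f"Shielding mass: {mass:.0f} tonnes")  # about 503 tonnes
```

Even for a module this small, covering the full surface at the study's density would take on the order of 500 metric tons of shielding, which is the scale of the problem the alternatives try to avoid.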

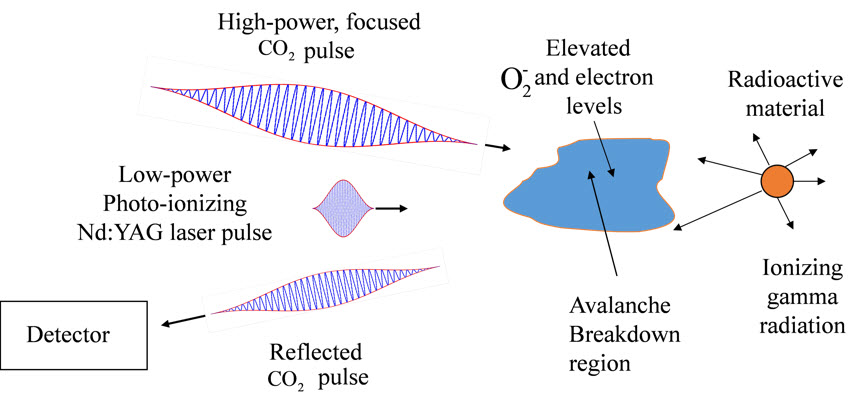

Various alternative shielding schemes have been proposed. NASA scientist Geoffrey A. Landis suggested in a 1991 paper the use of magnetic deflection of charged radiation particles (imitating the Earth’s magnetosphere***). Improvements in superconductors since 1991 may make this more practical today, and possibly more so in the future.

In a 2011 paper in Acta Astronautica, Gregory Matloff of New York City College of Technology suggested that a Mars-bound spacecraft could tunnel into an asteroid for shielding, as long as the asteroid is at least 33 feet wide (if the asteroid were especially iron-rich, the necessary width would be smaller), National Geographic reported.

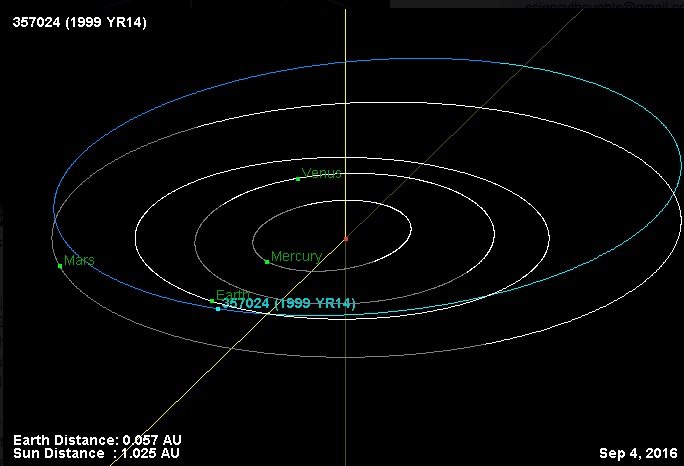

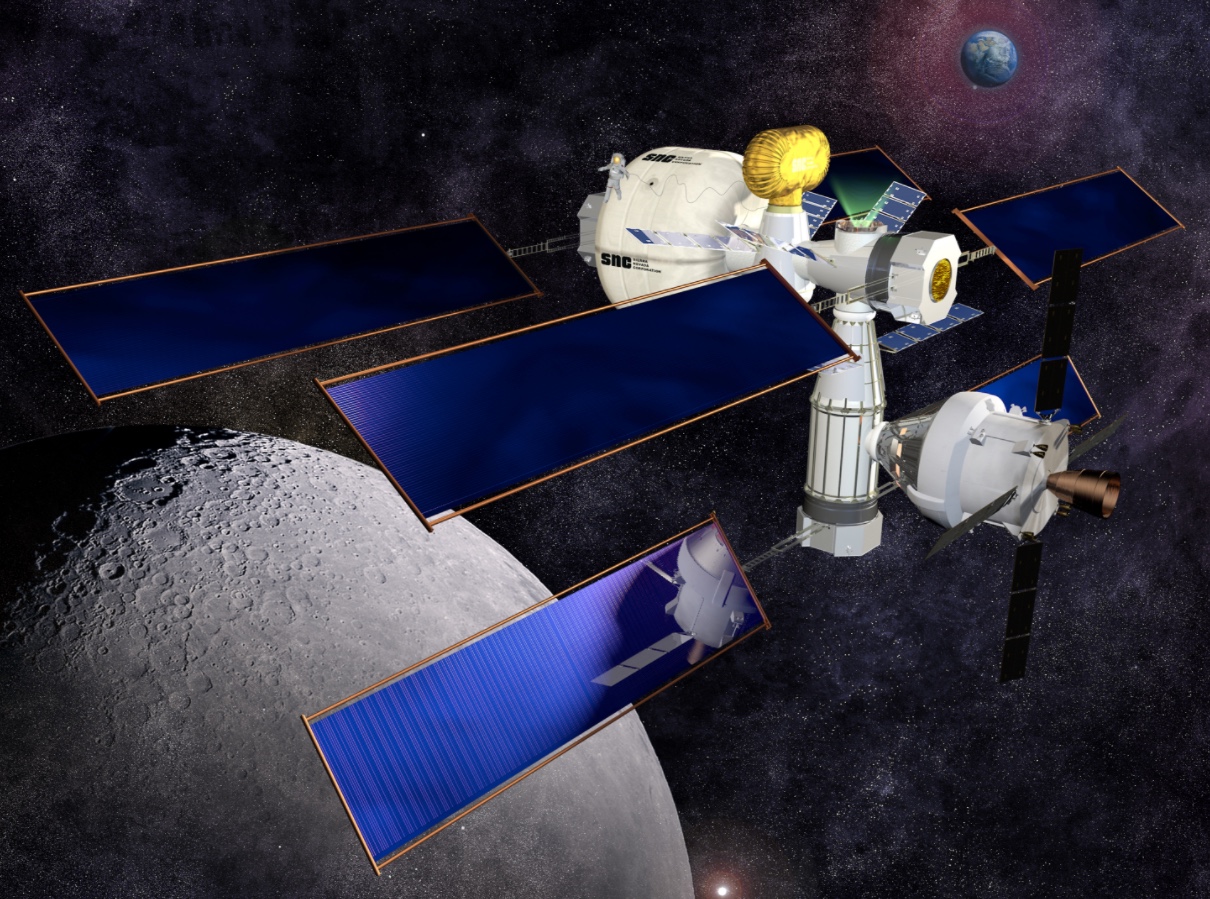

The calculated orbit of (357024) 1999 YR14 (credit: Lowell Observatory Near-Earth-Object Search)

“There are five known asteroids that fit the criteria and will pass from Earth to Mars before the year 2100. … The asteroids 1999YR14 and 2007EE26, for example, will both pass Earth in 2086, and they’ll make the journey to Mars in less than a year,” he said. Downside: it would be five years before either asteroid would swing around Mars as it heads back toward Earth.

Meanwhile, future preventive treatments may help. Limoli’s group is working on pharmacological strategies involving compounds that scavenge free radicals and protect neurotransmission.

* An Eastern Virginia Medical School researcher also contributed to the study.

** The Scientific Reports paper shows these values in centigray (cGy), one hundredth (0.01) of a gray (Gy), the SI derived unit of absorbed dose and specific energy (energy per unit mass). The unit is usually associated with ionizing radiation such as gamma rays or X-rays.

*** Astronauts working for extended periods on the International Space Station do not face the same level of bombardment with galactic cosmic rays because they are still within the Earth’s protective magnetosphere. Astronauts on Apollo and Skylab missions received on average 1.2 mSv (0.0012 Gy) per day and 1.4 mSv (0.0014 Gy) per day respectively, according to a NASA study.

Abstract of Cosmic radiation exposure and persistent cognitive dysfunction

The Mars mission will result in an inevitable exposure to cosmic radiation that has been shown to cause cognitive impairments in rodent models, and possibly in astronauts engaged in deep space travel. Of particular concern is the potential for cosmic radiation exposure to compromise critical decision making during normal operations or under emergency conditions in deep space. Rodents exposed to cosmic radiation exhibit persistent hippocampal and cortical based performance decrements using six independent behavioral tasks administered between separate cohorts 12 and 24 weeks after irradiation. Radiation-induced impairments in spatial, episodic and recognition memory were temporally coincident with deficits in executive function and reduced rates of fear extinction and elevated anxiety. Irradiation caused significant reductions in dendritic complexity, spine density and altered spine morphology along medial prefrontal cortical neurons known to mediate neurotransmission interrogated by our behavioral tasks. Cosmic radiation also disrupted synaptic integrity and increased neuroinflammation that persisted more than 6 months after exposure. Behavioral deficits for individual animals correlated significantly with reduced spine density and increased synaptic puncta, providing quantitative measures of risk for developing cognitive impairment. Our data provide additional evidence that deep space travel poses a real and unique threat to the integrity of neural circuits in the brain.

Abstract of What happens to your brain on the way to Mars

As NASA prepares for the first manned spaceflight to Mars, questions have surfaced concerning the potential for increased risks associated with exposure to the spectrum of highly energetic nuclei that comprise galactic cosmic rays. Animal models have revealed an unexpected sensitivity of mature neurons in the brain to charged particles found in space. Astronaut autonomy during long-term space travel is particularly critical as is the need to properly manage planned and unanticipated events, activities that could be compromised by accumulating particle traversals through the brain. Using mice subjected to space-relevant fluences of charged particles, we show significant cortical- and hippocampal-based performance decrements 6 weeks after acute exposure. Animals manifesting cognitive decrements exhibited marked and persistent radiation-induced reductions in dendritic complexity and spine density along medial prefrontal cortical neurons known to mediate neurotransmission specifically interrogated by our behavioral tasks. Significant increases in postsynaptic density protein 95 (PSD-95) revealed major radiation-induced alterations in synaptic integrity. Impaired behavioral performance of individual animals correlated significantly with reduced spine density and trended with increased synaptic puncta, thereby providing quantitative measures of risk for developing cognitive decrements. Our data indicate an unexpected and unique susceptibility of the central nervous system to space radiation exposure, and argue that the underlying radiation sensitivity of delicate neuronal structure may well predispose astronauts to unintended mission-critical performance decrements and/or longer-term neurocognitive sequelae.