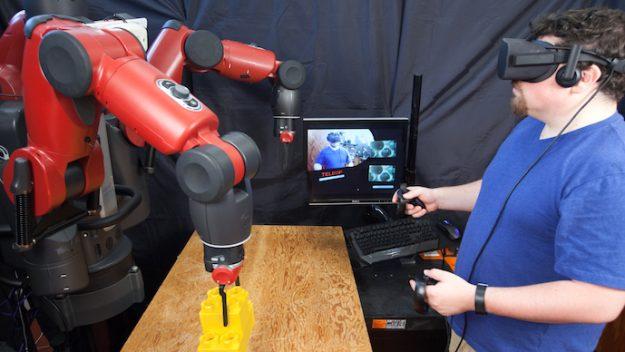

A new VR system from MIT’s Computer Science and Artificial Intelligence Laboratory could make it easy for factory workers to telecommute. (credit: Jason Dorfman, MIT CSAIL)

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a virtual-reality (VR) system that lets you teleoperate a robot using an Oculus Rift or HTC Vive VR headset.

CSAIL’s “Homunculus Model” system (named for the classic notion of a small human sitting inside the brain and controlling the actions of the body) embeds you in a VR control room with multiple sensor displays, making it feel like you’re inside the robot’s head. By using gestures, you can control the robot’s matching movements to perform various tasks.

The system can be connected either via a wired local network or via a wireless network connection over the Internet. (The team demonstrated that the system could pilot a robot from hundreds of miles away, testing it on a hotel’s wireless network in Washington, DC, to control a Baxter robot at MIT.)

According to CSAIL postdoctoral associate Jeffrey Lipton, lead author on an open-access arXiv paper about the system (presented this week at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) in Vancouver), “By teleoperating robots from home, blue-collar workers would be able to telecommute and benefit from the IT revolution just as white-collar workers do now.”

Jobs for video-gamers too

The researchers imagine that such a system could even help employ jobless video-gamers by “game-ifying” manufacturing positions. (Users with gaming experience had the most ease with the system, the researchers found in tests.)

Homunculus Model system. A Baxter robot (left) is outfitted with a stereo camera rig and various end-effector devices. A virtual control room (user’s view, center), generated on an Oculus Rift CV1 headset (right), allows the user to feel like they are inside Baxter’s head while operating it. Using VR device controllers, including Razer Hydra hand trackers used for inputs (right), users can interact with controls that appear in the virtual space — opening and closing the hand grippers to pick up, move, and retrieve items. A user can plan movements based on the distance between the arm’s location marker and their hand while looking at the live display of the arm. (credit: Jeffrey I. Lipton et al./arXiv).

To make these movements possible, the human’s space is mapped into the virtual space, and the virtual space is then mapped into the robot space to provide a sense of co-location.
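The chained mapping described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the `ScaleOffset` class, the scale factors, and the offsets are all hypothetical, and rotation is left out for brevity. It only shows how a human-to-virtual mapping and a virtual-to-robot mapping compose into a single human-to-robot mapping.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ScaleOffset:
    """Hypothetical mapping p -> scale * p + offset (no rotation, for brevity)."""
    scale: float
    offset: Vec3

    def apply(self, p: Vec3) -> Vec3:
        # Scale each coordinate, then shift it by the offset.
        return tuple(self.scale * c + o for c, o in zip(p, self.offset))

    def then(self, other: "ScaleOffset") -> "ScaleOffset":
        # Compose so that result.apply(p) == other.apply(self.apply(p)).
        return ScaleOffset(
            scale=other.scale * self.scale,
            offset=tuple(
                other.scale * o + oo for o, oo in zip(self.offset, other.offset)
            ),
        )


# Assumed, illustrative values only; the article does not give real ones.
human_to_virtual = ScaleOffset(scale=1.0, offset=(0.0, -1.0, 0.0))
virtual_to_robot = ScaleOffset(scale=0.5, offset=(0.5, 0.0, 0.25))

# Human space -> virtual space -> robot space, collapsed into one mapping.
human_to_robot = human_to_virtual.then(virtual_to_robot)

hand_in_human_space = (0.25, 1.5, 0.5)
hand_in_robot_space = human_to_robot.apply(hand_in_human_space)
print(hand_in_robot_space)
```

Composing the two mappings once, rather than applying them one after the other on every frame, is a common design choice in tracking code. Whether the CSAIL system does this is not stated in the article.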

The team demonstrated the Homunculus Model system using the Baxter humanoid robot from Rethink Robotics, but the approach could work on other robot platforms, the researchers said.

In tests involving pick-and-place, assembly, and manufacturing tasks (such as “pick an item and stack it for assembly”), CSAIL’s Homunculus Model system had a 100% success rate, compared with a 66% success rate for state-of-the-art automated systems. The CSAIL system was also better at grasping objects 95% of the time and 57% faster at doing tasks.*

“This contribution represents a major milestone in the effort to connect the user with the robot’s space in an intuitive, natural, and effective manner,” says Oussama Khatib, a computer science professor at Stanford University who was not involved in the paper.

The team plans to eventually focus on making the system more scalable, with many users and different types of robots that are compatible with current automation technologies.

* The Homunculus Model system solves a delay problem with existing systems, which perform 3D reconstruction on a GPU or CPU, introducing delay. In the Homunculus Model system, 3D reconstruction from the stereo HD cameras is instead done by the human’s visual cortex, so the user constantly receives visual feedback from the virtual world with minimal latency (delay). This also avoids user fatigue and the nausea of motion sickness (known as simulator sickness), which is generated by “unexpected incongruities, such as delays or relative motions, between proprioception and vision [that] can lead to the nausea,” the researchers explain in the paper.

MITCSAIL | Operating Robots with Virtual Reality

Abstract of Baxter’s Homunculus: Virtual Reality Spaces for Teleoperation in Manufacturing

Expensive specialized systems have hampered development of telerobotic systems for manufacturing systems. In this paper we demonstrate a telerobotic system which can reduce the cost of such systems by leveraging commercial virtual reality (VR) technology and integrating it with existing robotics control software. The system runs on a commercial gaming engine using off-the-shelf VR hardware. This system can be deployed on multiple network architectures from a wired local network to a wireless network connection over the Internet. The system is based on the homunculus model of mind wherein we embed the user in a virtual reality control room. The control room allows for multiple sensor displays and dynamic mapping between the user and robot, and does not require the production of duals for the robot or its environment. The control room is mapped to a space inside the robot to provide a sense of co-location within the robot. We compared our system with state-of-the-art automation algorithms for assembly tasks, showing a 100% success rate for our system compared with a 66% success rate for automated systems. We demonstrate that our system can be used for pick and place, assembly, and manufacturing tasks.