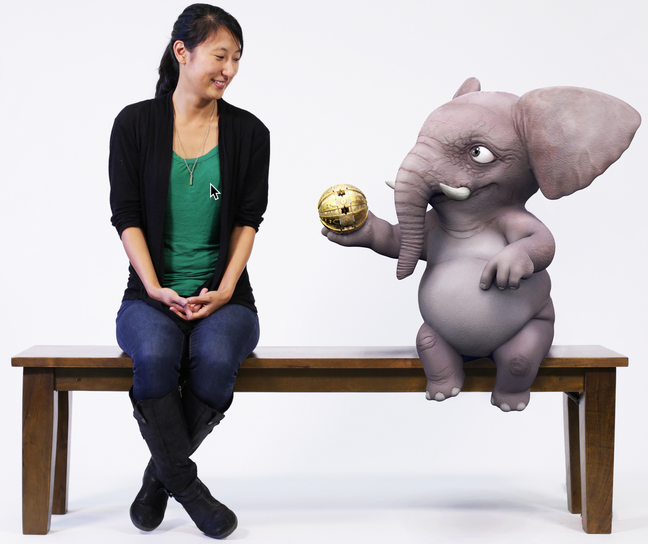

Magic Bench (credit: Disney Research)

Disney Research has created what it describes as the first shared, combined augmented/mixed-reality experience. Instead of first-person head-mounted displays or handheld devices, it shows participants a mirrored image of themselves on a large screen, so a whole group can share the magical experience.

Sit on Disney Research’s Magic Bench and you might, for example, see an elephant hand you a glowing orb, hear its voice, and feel it sit down next to you. Or you might get rained on and find yourself underwater.

How it works

Flowchart of the Magic Bench installation (credit: Disney Research)

People seated on the Magic Bench can see themselves on a large video display in front of them. The scene is reconstructed using a combined depth sensor/video camera (Microsoft Kinect) to image participants, bench, and surroundings. An image of the participants is projected on a large screen, allowing them to occupy the same 3D space as a computer-generated character or object. The system can also infer participants’ gaze.*
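
The article does not spell out the reconstruction math. As a hedged illustration only, assuming a standard pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (none of these come from Disney's work), each depth pixel from a sensor like the Kinect can be lifted into a 3D point, which is what lets real people and CG characters share one coordinate frame. The hypothetical `backproject` function below also negates X to produce a mirror-style view; that is one simple way to get a mirrored display, not necessarily the one Magic Bench uses.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float, mirror: bool = True) -> List[Point3]:
    """Lift a depth image (metres, 0.0 = no reading) into camera-space 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    With mirror=True, X is negated, which after projection is the same as
    flipping the rendered image horizontally about the principal point.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column, z = depth
            if z <= 0.0:                     # sensor returned no measurement
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((-x if mirror else x, y, z))
    return points


# Toy 2x2 depth map with one missing pixel; the intrinsics are made up.
print(backproject([[2.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5))
# → [(1.0, -1.0, 2.0), (1.0, 1.0, 2.0), (-1.0, 1.0, 2.0)]
```

A real system would also have to register the depth and color cameras, which sit at slightly different positions. That offset is exactly what produces the depth shadows mentioned in the footnote.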

Speakers and haptic actuators built into the bench add to the experience; in the elephant example, the bench vibrates when the elephant sits down.

The research team will present and demonstrate the Magic Bench at SIGGRAPH 2017, the Computer Graphics and Interactive Techniques Conference, which began Sunday, July 30 in Los Angeles.

* To eliminate depth shadows that occur in areas where the depth sensor has no corresponding line of sight with the color camera, a modified algorithm creates a 2D backdrop, according to the researchers. The 3D and 2D reconstructions are positioned in virtual space and populated with 3D characters and effects in such a way that the resulting real-time rendering is a seamless composite, fully capable of interacting with virtual physics, light, and shadows.
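
To make the depth-shadow problem concrete, here is a minimal per-pixel compositing sketch. It is an assumption-laden stand-in, not the researchers' modified algorithm: pixels with no valid depth (marked `0.0`) are pushed back onto a flat backdrop at a hypothetical constant `backdrop_depth`, and a CG pixel is shown only where it exists and lies closer to the camera than the real surface. Without the fallback, a depth-shadow pixel would have depth 0, so no CG pixel could ever pass the `d_cg < d_real` test there and the character would show holes.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def composite(real_rgb: List[RGB], real_depth: List[float],
              cg_rgb: List[Optional[RGB]], cg_depth: List[float],
              backdrop_depth: float) -> List[RGB]:
    """Depth-test a rendered CG layer against a camera image, pixel by pixel.

    real_depth uses 0.0 for depth-shadow pixels (no valid measurement).
    cg_rgb is None wherever no CG content was rendered.
    """
    out: List[RGB] = []
    for cam, d_real, cg, d_cg in zip(real_rgb, real_depth, cg_rgb, cg_depth):
        # Depth shadow: treat the camera color as lying on a flat backdrop.
        if d_real <= 0.0:
            d_real = backdrop_depth
        # Occlusion test: the CG pixel wins only if it exists and is closer.
        if cg is not None and d_cg < d_real:
            out.append(cg)
        else:
            out.append(cam)
    return out


print(composite(
    real_rgb=[(10, 10, 10), (20, 20, 20), (30, 30, 30)],
    real_depth=[1.0, 0.0, 3.0],          # middle pixel is a depth shadow
    cg_rgb=[(255, 0, 0), (255, 0, 0), None],
    cg_depth=[2.0, 2.0, 1.0],
    backdrop_depth=4.0,                  # hypothetical backdrop distance
))
# → [(10, 10, 10), (255, 0, 0), (30, 30, 30)]
```

In the example, the first pixel keeps the camera color because the person (1.0 m) is in front of the CG object (2.0 m), while the depth-shadow pixel now correctly shows the CG object in front of the backdrop.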


Abstract of Magic Bench

Mixed Reality (MR) and Augmented Reality (AR) create exciting opportunities to engage users in immersive experiences, resulting in natural human-computer interaction. Many MR interactions are generated around a first-person Point of View (POV). In these cases, the user views the environment, which is digitally displayed through either a head-mounted display or a handheld computing device. One drawback of such conventional AR/MR platforms is that the experience is user-specific. Moreover, these platforms require the user to wear and/or hold an expensive device, which can be cumbersome and alter interaction techniques. We create a solution for multi-user interactions in AR/MR, where a group can share the same augmented environment with any computer-generated (CG) asset and interact in a shared story sequence through a third-person POV. Our approach is to instrument the environment, leaving the user unburdened by any equipment and creating a seamless walk-up-and-play experience. We demonstrate this technology in a series of vignettes featuring humanoid animals. Participants can not only see and hear these characters, they can also feel them on the bench through haptic feedback. Many of the characters also interact with users directly, either through speech or touch. In one vignette, an elephant hands a participant a glowing orb. This demonstrates HCI in its simplest form: a person walks up to a computer, and the computer hands the person an object.
