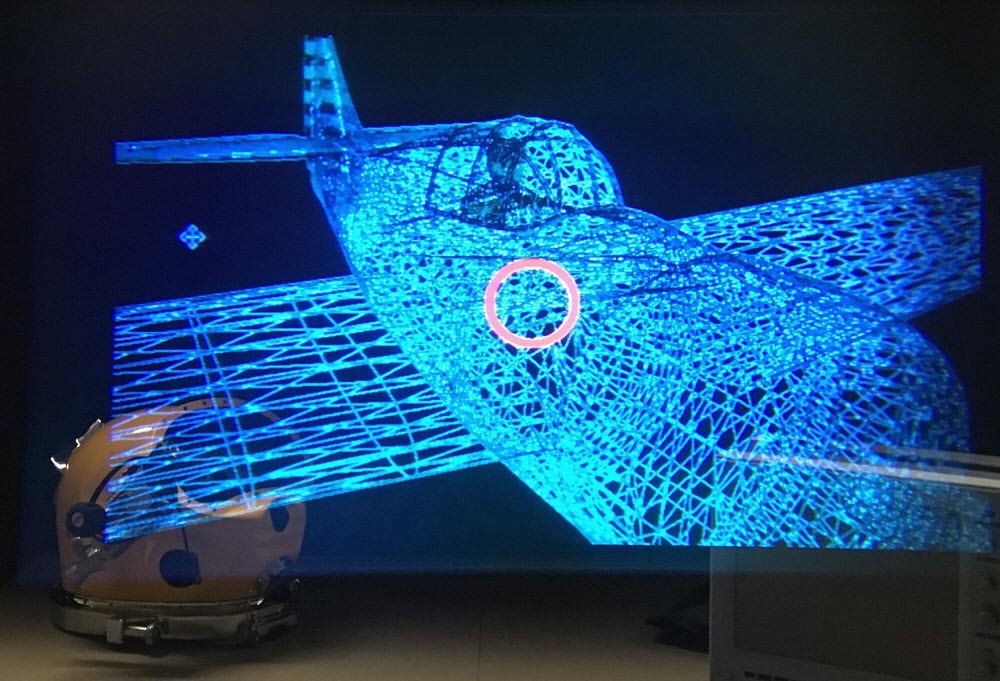

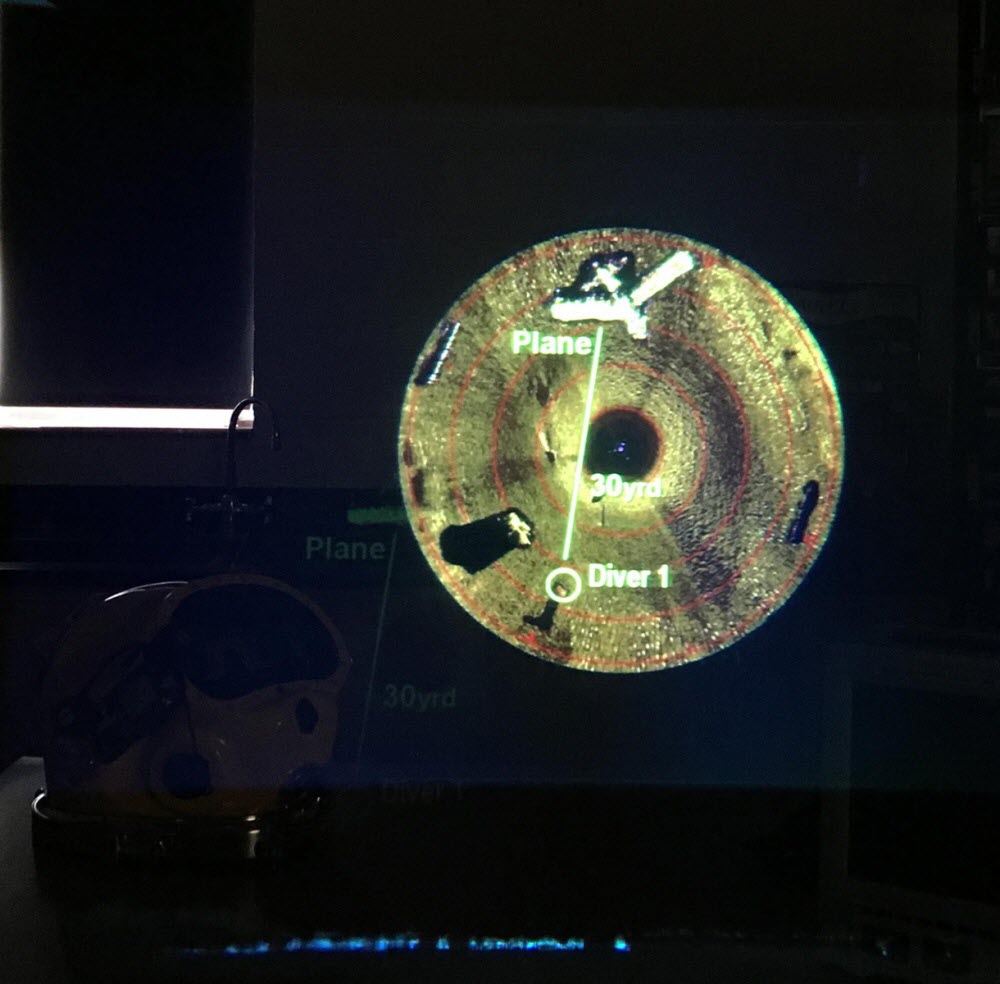

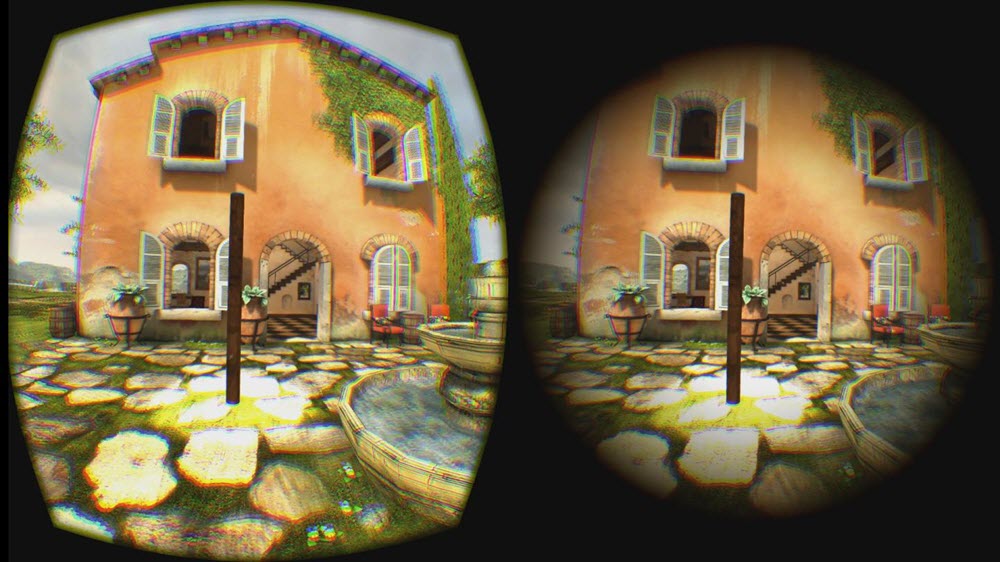

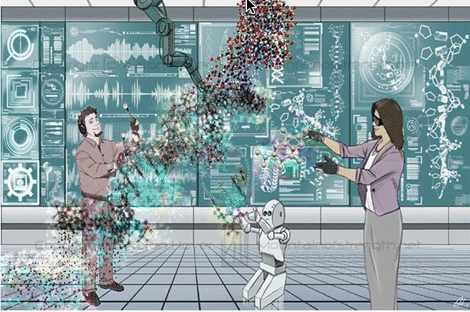

NYU-X Holodeck (credit: Winslow Burleson and Armanda Lewis)

In an open-access paper in the International Journal of Artificial Intelligence in Education, Winslow Burleson, PhD, MSE, associate professor at the New York University Rory Meyers College of Nursing, suggests that advanced cyberlearning environments involving VR and AI innovations are needed to solve society’s “wicked challenges”*: entrenched and seemingly intractable societal problems.

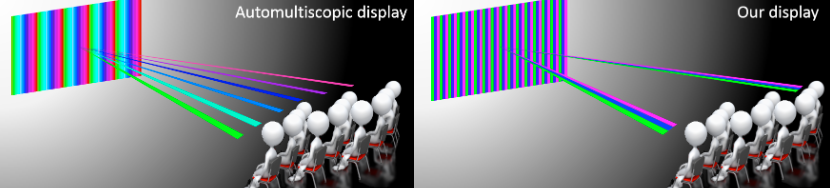

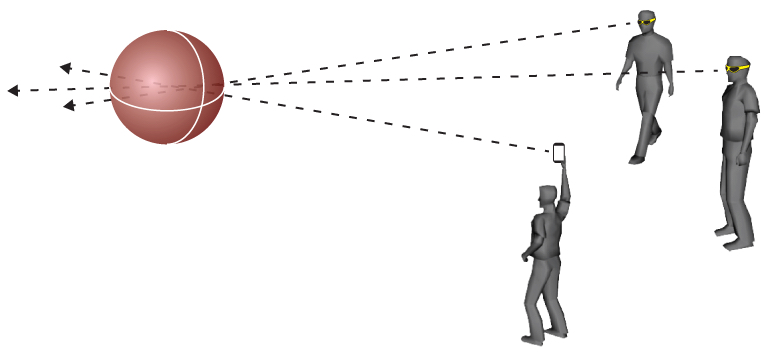

Burleson and co-author Armanda Lewis imagine such technology in a year 2041 Holodeck, which Burleson’s NYU-X Lab is currently developing in prototype form, in collaboration with colleagues at NYU Courant, Tandon, Steinhardt, and Tisch.

“The ‘Holodeck’ will support a broad range of transdisciplinary collaborations, integrated education, research, and innovation by providing a networked software/hardware infrastructure that can synthesize visual, audio, physical, social, and societal components,” said Burleson.

It’s intended as a model for the future of cyberlearning experience, integrating visual, audio, and physical (haptics, objects, real-time fabrication) components, with shared computation, integrated distributed data, immersive visualization, and social interaction to make possible large-scale synthesis of learning, research, and innovation.

This reminds me of the book Education and Ecstasy, written in 1968 by George B. Leonard, a respected editor for LOOK magazine and, in many respects, a pioneer in what has become the transhumanism movement. That book laid out the justification and promise of advanced educational technology in the classroom for an entire generation. Other writers, such as Harry S. Broudy in The Real World of the Public Schools (1972), followed, arguing that we cannot afford “master teachers” in every classroom, but still need to do far better, both then and now.

Today, theories and models of automated planning using computers in complex situations are advanced, and “wicked” social simulations can demonstrate the “potholes” in proposed action scenarios. Virtual realities, holodecks, interactive games, and robotic and/or AI assistants offer “sandboxes” for learning and for sharing that learning with others. Leonard’s vision, proposed in 1968 for the year 2000, has not yet been realized. However, by 2041, according to these authors, it just might be.

— Warren E. Lacefield, Ph.D. President/CEO Academic Software, Inc.; Associate Professor (retired), Evaluation, Measurement, and Research Program, Department of Educational Leadership, Research, and Technology, Western Michigan University (aka “Asiwel” on KurzweilAI)

Key aspects of the Holodeck: personal stories and interactive experiences that make it a rich environment; open streaming content that makes it real and compelling; and contributions that personalize the learning experience. The goal is to create a networked infrastructure and communication environment where “wicked challenges” can be iteratively explored and re-solved, utilizing visual, acoustic, and physical sensory feedback, human dynamics, and social collaboration.

Burleson and Lewis envision that in 2041, learning is unlimited — each individual can create a teacher, team, community, world, galaxy or universe of their own.

* In the late 1960s, urban planners Horst Rittel and Melvin Webber began formulating the concept of “wicked problems” or “wicked challenges”: problems so vexing in the realm of social and organizational planning that they could not be successfully ameliorated with traditional linear, analytical, systems-engineering types of approaches.

These “wicked challenges” are poorly defined, abstruse, and connected to strong moral, political and professional issues. Some examples might include: “How should we deal with crime and violence in our schools?”, “How should we wage the ‘War on Terror’?”, or “What is good national immigration policy?”

“Wicked problems,” by their very nature, are strongly stakeholder dependent; there is often little consensus even about what the problem is, let alone how to deal with it. The challenges themselves are ever-shifting sets of inherently complex, interacting issues evolving in a dynamic social context. Often, new forms of “wicked challenges” emerge as a result of trying to understand and treat just one challenge in isolation.

Abstract of Optimists’ Creed: Brave New Cyberlearning, Evolving Utopias (Circa 2041)

This essay imagines the role that artificial intelligence innovations play in the integrated living, learning and research environments of 2041. Here, in 2041, in the context of increasingly complex wicked challenges, whose solutions by their very nature continue to evade even the most capable experts, society and technology have co-evolved to embrace cyberlearning as an essential tool for envisioning and refining utopias–non-existent societies described in considerable detail. Our society appreciates that evolving these utopias is critical to creating and resolving wicked challenges and to better understanding how to create a world in which we are actively “learning to be” – deeply engaged in intrinsically motivating experiences that empower each of us to reach our full potential. Since 2015, Artificial Intelligence in Education (AIED) has transitioned from what was primarily a research endeavour, with educational impact involving millions of user/learners, to serving, now, as a core contributor to democratizing learning (Dewey 2004) and active citizenship for all (billions of learners throughout their lives). An expansive experiential super computing cyberlearning environment, we affectionately call the “Holodeck,” supports transdisciplinary collaboration and integrated education, research, and innovation, providing a networked software/hardware infrastructure that synthesizes visual, audio, physical, social, and societal components. The Holodeck’s large-scale integration of learning, research, and innovation, through real-world problem solving and teaching others what you have learned, effectively creates a global meritocratic network with the potential to resolve society’s wicked challenges while empowering every citizen to realize her or his full potential.