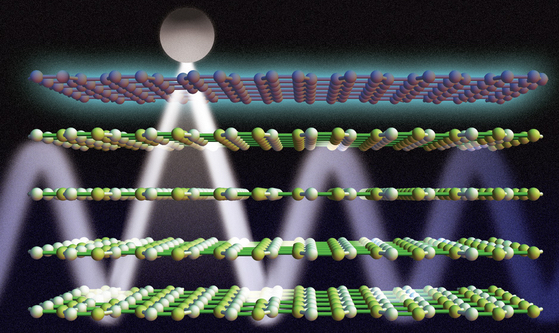

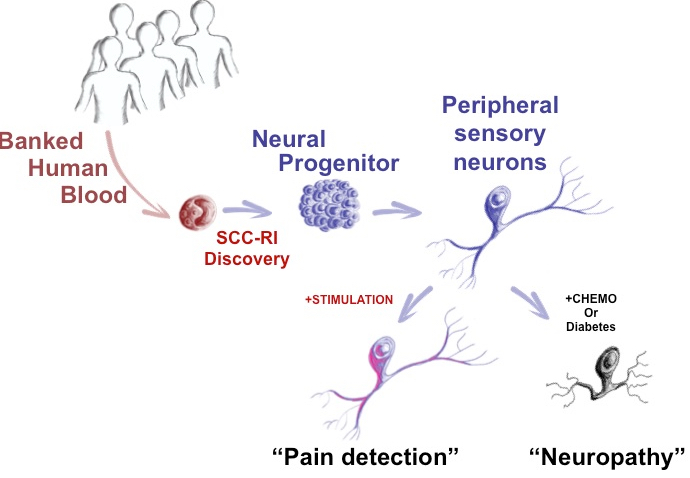

McMaster University scientists have discovered how to make adult sensory neurons from a patient’s blood sample to measure pain (credit: McMaster University)

Stem-cell scientists at McMaster University have developed a way to directly convert adult human blood cells to sensory neurons, providing the first objective measure of how patients may feel things like pain, temperature, and pressure, the researchers reveal in an open-access paper in the journal Cell Reports.

Currently, scientists and physicians have a limited understanding of the complex issue of pain and how to treat it. “The problem is that unlike blood, a skin sample or even a tissue biopsy, you can’t take a piece of a patient’s neural system,” said Mick Bhatia, director of the McMaster Stem Cell and Cancer Research Institute and research team leader. “It runs like complex wiring throughout the body and portions cannot be sampled for study.

“Now we can take easy to obtain blood samples, and make the main cell types of neurological systems in a dish that is specialized for each patient,” said Bhatia. “We can actually take a patient’s blood sample, as routinely performed in a doctor’s office, and with it we can produce one million sensory neurons, [which] make up the peripheral nerves. We can also make central nervous system cells.”

Testing pain drugs

The new technology has “broad and immediate applications,” said Bhatia. It allows researchers to understand disease and improve treatments by asking questions such as: Why do some people feel pain while others feel numbness? Is this genetic? Can the neuropathy that diabetic patients experience be mimicked in a dish?

It also paves the way for the discovery of new pain drugs that do more than just numb the perception of pain. Bhatia noted that non-specific opioids, used for decades, are still in use today. “If I was a patient and I was feeling pain or experiencing neuropathy, the prized pain drug for me would target the peripheral nervous system neurons, but do nothing to the central nervous system, thus avoiding addictive drug side effects,” said Bhatia.

“Until now, no one’s had the ability and required technology to actually test different drugs to find something that targets the peripheral nervous system, and not the central nervous system, in a patient-specific, or personalized manner.”

A patient time machine

Bhatia’s team also successfully tested their process with cryopreserved (frozen) blood. Because many clinical trials collect and freeze blood samples, this gives them “almost a bit of a time machine”: they can run tests on neurons created from blood samples of patients in past clinical trials, whose responses and outcomes have already been recorded.

In the future, the process may have prognostic (predictive diagnostic) potential, Bhatia explained: one might be able to look at a patient with type 2 diabetes and predict whether they will develop neuropathy by running lab tests on neural cells derived from that patient’s own blood sample.

“This bench-to-bedside research is very exciting and will have a major impact on the management of neurological diseases, particularly neuropathic pain,” said Akbar Panju, medical director of the Michael G. DeGroote Institute for Pain Research and Care, a clinician and professor of medicine.

“This research will help us understand the response of cells to different drugs and different stimulation responses, and allow us to provide individualized or personalized medical therapy for patients suffering with neuropathic pain.”

This research was supported by the Canadian Institutes of Health Research, Ontario Institute of Regenerative Medicine, Marta and Owen Boris Foundation, J.P. Bickell Foundation, the Ontario Brain Institute, and Brain Canada.

Pain insensitivity

In related news, an international team of researchers co-led by the University of Cambridge reported Monday in the journal Nature Genetics that they have identified a gene, PRDM12, that is essential to the production of pain-sensing neurons in humans. Rare individuals — around one in a million people in the UK — are born unable to feel pain, in a condition known as congenital insensitivity to pain (CIP). These people accumulate numerous self-inflicted injuries, often leading to reduced lifespan.

The researchers hope that this newly identified gene could be an excellent candidate for drug development.

Abstract of Single Transcription Factor Conversion of Human Blood Fate to NPCs with CNS and PNS Developmental Capacity

The clinical applicability of direct cell fate conversion depends on obtaining tissue from patients that is easy to harvest, store, and manipulate for reprogramming. Here, we generate induced neural progenitor cells (iNPCs) from neonatal and adult peripheral blood using single-factor OCT4 reprogramming. Unlike fibroblasts that share molecular hallmarks of neural crest, OCT4 reprogramming of blood was facilitated by SMAD+GSK-3 inhibition to overcome restrictions on neural fate conversion. Blood-derived (BD) iNPCs differentiate in vivo and respond to guided differentiation in vitro, producing glia (astrocytes and oligodendrocytes) and multiple neuronal subtypes, including dopaminergic (CNS related) and nociceptive neurons (peripheral nervous system [PNS]). Furthermore, nociceptive neurons phenocopy chemotherapy-induced neurotoxicity in a system suitable for high-throughput drug screening. Our findings provide an easily accessible approach for generating human NPCs that harbor extensive developmental potential, enabling the study of clinically relevant neural diseases directly from patient cohorts.