Injecting specially prepared human adult stem cells directly into the brains of chronic stroke patients proved safe and effective in restoring motor (muscle) function in a small clinical trial led by Stanford University School of Medicine investigators.

The 18 patients had suffered their first and only stroke between six months and three years before receiving the injections, which involved drilling a small hole through their skulls.

For most patients, at least a full year had passed since their stroke, well past the time when further recovery might be hoped for. In each case, the stroke had taken place beneath the brain’s outermost layer, or cortex, and had severely affected motor function. “Some patients couldn’t walk,” said Gary Steinberg, MD, PhD, who led the trial. “Others couldn’t move their arm.”

Sonia Olea Coontz had a stroke in 2011 that affected the movement of her right arm and leg. After modified stem cells were injected into her brain as part of a clinical trial, she says her limbs “woke up.” (credit: Mark Rightmire/Stanford University School of Medicine)

One of those patients, Sonia Olea Coontz, of Long Beach, California, now 36, had a stroke in May 2011. “My right arm wasn’t working at all,” said Coontz. “It felt like it was almost dead. My right leg worked, but not well.” She walked with a noticeable limp. “I used a wheelchair a lot. After my surgery, they woke up,” she said of her limbs.

‘Clinically meaningful’ results

The promising results set the stage for an expanded trial of the procedure now getting underway. They also call for new thinking regarding the permanence of brain damage, said Gary Steinberg, MD, PhD, professor and chair of neurosurgery.

“This was just a single trial, and a small one,” cautioned Steinberg, who led the 18-patient trial and conducted 12 of the procedures himself. (The rest were performed at the University of Pittsburgh.) “It was designed primarily to test the procedure’s safety. But patients improved by several standard measures, and their improvement was not only statistically significant, but clinically meaningful. Their ability to move around has recovered visibly. That’s unprecedented. At six months out from a stroke, you don’t expect to see any further recovery.”

The trial’s results are detailed in a paper published online June 2 in Stroke. Steinberg, who has more than 15 years of experience working with stem cell therapies for neurological indications, is the paper’s lead and senior author.

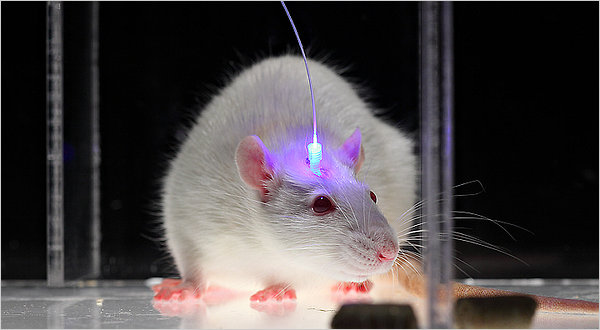

The procedure involved injecting SB623 mesenchymal stem cells, derived from the bone marrow of two donors and then modified to beneficially alter the cells’ ability to restore neurologic function.*

Motor-function improvements

Substantial improvements were seen in patients’ scores on several widely accepted metrics of stroke recovery. Perhaps most notably, there was an overall 11.4-point improvement on the motor-function component of the Fugl-Meyer test, which specifically gauges patients’ movement deficits. “Patients who were in wheelchairs are walking now,” said Steinberg, who is the Bernard and Ronni Lacroute-William Randolph Hearst Professor in Neurosurgery and Neurosciences.

“We know these cells don’t survive for more than a month or so in the brain,” he added. “Yet we see that patients’ recovery is sustained for greater than one year and, in some cases now, more than two years.”

Importantly, the stroke patients’ postoperative improvement was independent of their age or their condition’s severity at the onset of the trial. “Older people tend not to respond to treatment as well, but here we see 70-year-olds recovering substantially,” Steinberg said. “This could revolutionize our concept of what happens after not only stroke, but traumatic brain injury and even neurodegenerative disorders. The notion was that once the brain is injured, it doesn’t recover — you’re stuck with it. But if we can figure out how to jump-start these damaged brain circuits, we can change the whole effect.

“We thought those brain circuits were dead. And we’ve learned that they’re not.”

New trial now recruiting 156 patients

A new randomized, double-blinded multicenter phase-2b trial aiming to enroll 156 chronic stroke patients is now actively recruiting. Steinberg is the principal investigator of that trial. For more information, e-mail stemcellstudy@stanford.edu. “There are close to 7 million chronic stroke patients in the United States,” Steinberg said. “If this treatment really works for that huge population, it has great potential.”

Some 800,000 people suffer a stroke each year in the United States alone. About 85 percent of all strokes are ischemic: They occur when a clot forms in a blood vessel supplying blood to part of the brain, with subsequent intensive damage to the affected area. The specific loss of function incurred depends on exactly where within the brain the stroke occurs, and on its magnitude.

Although approved therapies for ischemic stroke exist, to be effective they must be applied within a few hours of the event — a time frame that often is exceeded by the amount of time it takes for a stroke patient to arrive at a treatment center.

Consequently, only a small fraction of patients benefit from treatment during the stroke’s acute phase. The great majority of survivors end up with enduring disabilities. Some lost functionality often returns, but it’s typically limited. And the prevailing consensus among neurologists is that virtually all recovery that’s going to occur comes within the first six months after the stroke.

* Mesenchymal stem cells are the naturally occurring precursors of muscle, fat, bone and tendon tissues. In preclinical studies, though, they’ve not been found to cause problems by differentiating into unwanted tissues or forming tumors. Easily harvested from bone marrow, they appear to trigger no strong immune reaction in recipients even when they come from an unrelated donor. In fact, they may actively suppress the immune system. For this trial, unlike the great majority of transplantation procedures, the stem cell recipients received no immunosuppressant drugs.

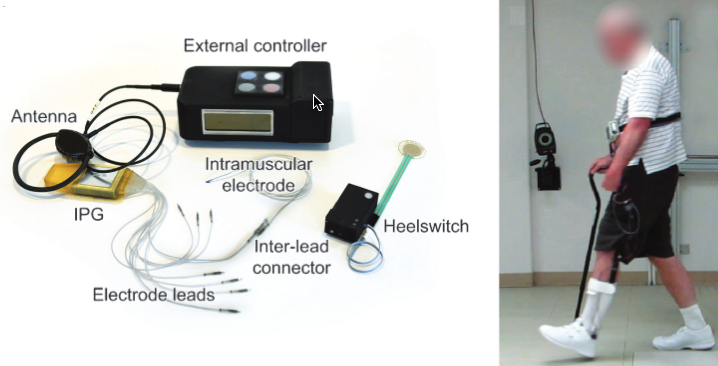

During the procedure, patients’ heads were held in fixed positions while a hole was drilled through their skulls. SB623 cells were then injected with a syringe into a number of spots at the periphery of the stroke-damaged area, which varied from patient to patient.

Afterward, patients were monitored via blood tests, clinical evaluations and brain imaging. Interestingly, the implanted stem cells themselves do not appear to survive very long in the brain. Preclinical studies have shown that these cells begin to disappear about one month after the procedure and are gone by two months. Yet patients showed significant recovery by a number of measures within a month’s time, and they continued improving for several months afterward, sustaining these improvements at six and 12 months after surgery. Steinberg said it’s likely that factors secreted by the mesenchymal cells during their early postoperative presence near the stroke site stimulate lasting regeneration or reactivation of nearby nervous tissue.

No relevant blood abnormalities were observed. Some patients experienced transient nausea and vomiting, and 78 percent had temporary headaches related to the transplant procedure.

Abstract of Clinical Outcomes of Transplanted Modified Bone Marrow–Derived Mesenchymal Stem Cells in Stroke: A Phase 1/2a Study

Background and Purpose—Preclinical data suggest that cell-based therapies have the potential to improve stroke outcomes.

Methods—Eighteen patients with stable, chronic stroke were enrolled in a 2-year, open-label, single-arm study to evaluate the safety and clinical outcomes of surgical transplantation of modified bone marrow–derived mesenchymal stem cells (SB623).

Results—All patients in the safety population (N=18) experienced at least 1 treatment-emergent adverse event. Six patients experienced 6 serious treatment-emergent adverse events; 2 were probably or definitely related to surgical procedure; none were related to cell treatment. All serious treatment-emergent adverse events resolved without sequelae. There were no dose-limiting toxicities or deaths. Sixteen patients completed 12 months of follow-up at the time of this analysis. Significant improvement from baseline (mean) was reported for: (1) European Stroke Scale: mean increase 6.88 (95% confidence interval, 3.5–10.3; P<0.001), (2) National Institutes of Health Stroke Scale: mean decrease 2.00 (95% confidence interval, −2.7 to −1.3; P<0.001), (3) Fugl-Meyer total score: mean increase 19.20 (95% confidence interval, 11.4–27.0; P<0.001), and (4) Fugl-Meyer motor function total score: mean increase 11.40 (95% confidence interval, 4.6–18.2; P<0.001). No changes were observed in modified Rankin Scale. The area of magnetic resonance T2 fluid-attenuated inversion recovery signal change in the ipsilateral cortex 1 week after implantation significantly correlated with clinical improvement at 12 months (P<0.001 for European Stroke Scale).

Conclusions—In this interim report, SB623 cells were safe and associated with improvement in clinical outcome end points at 12 months.